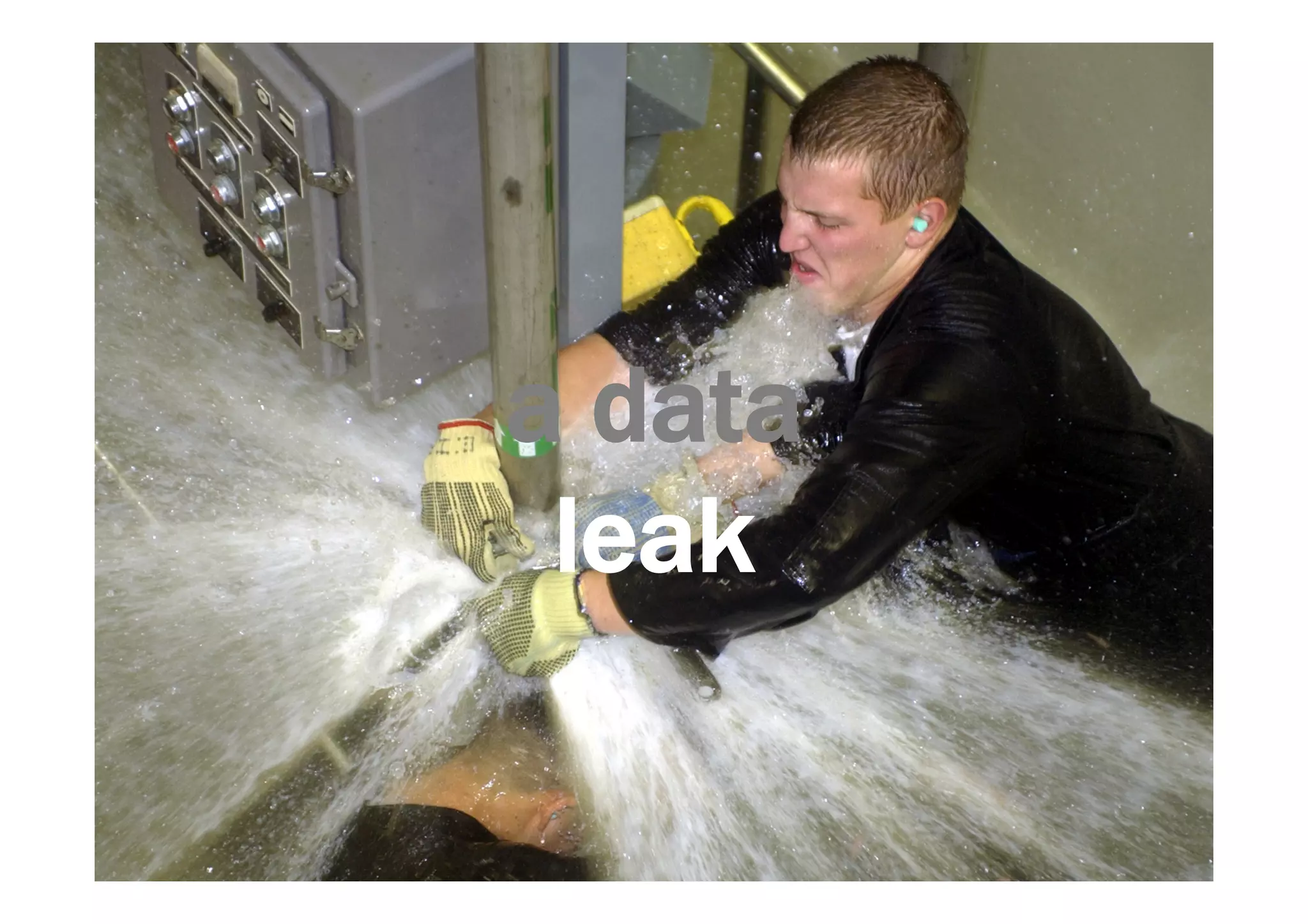

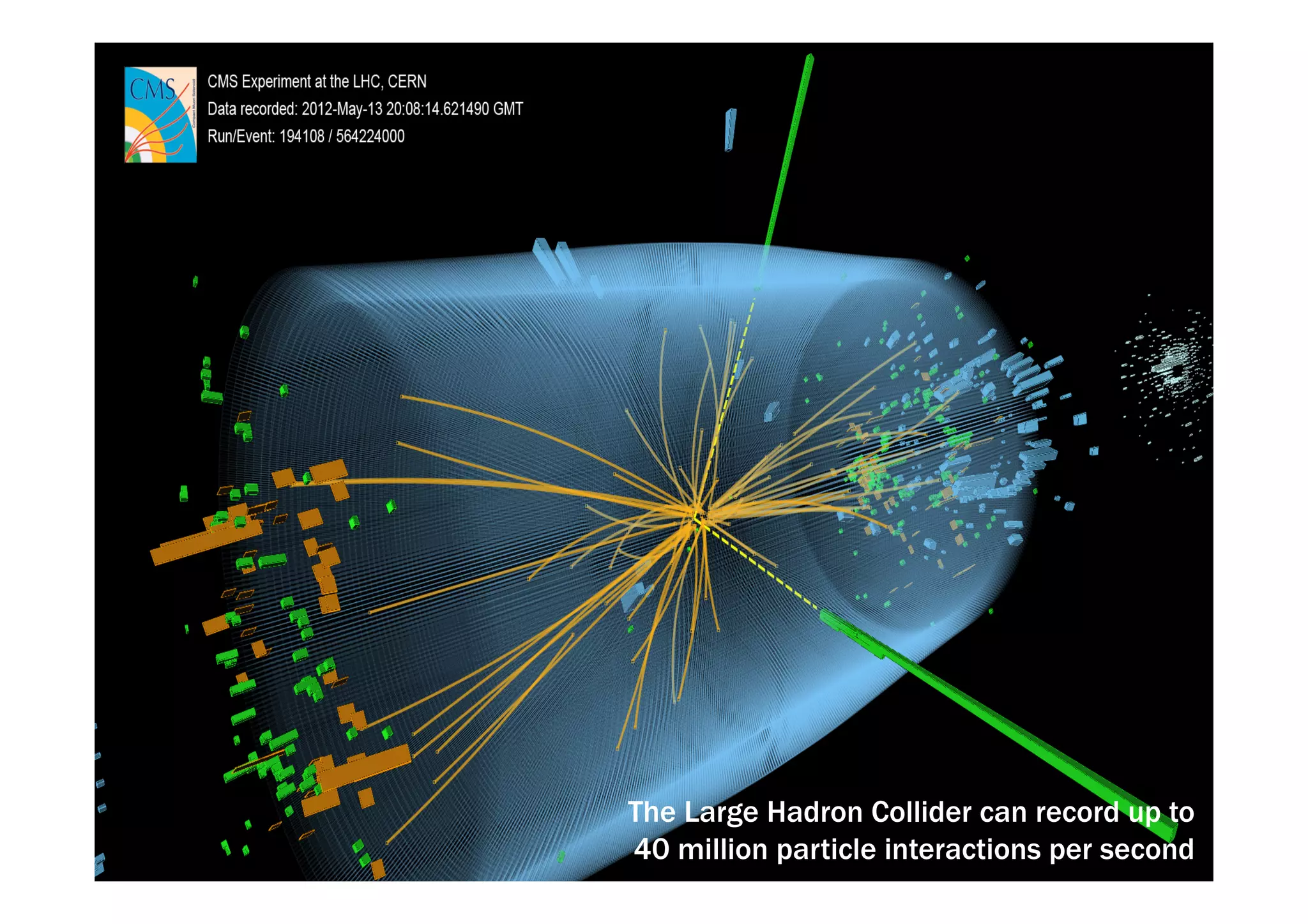

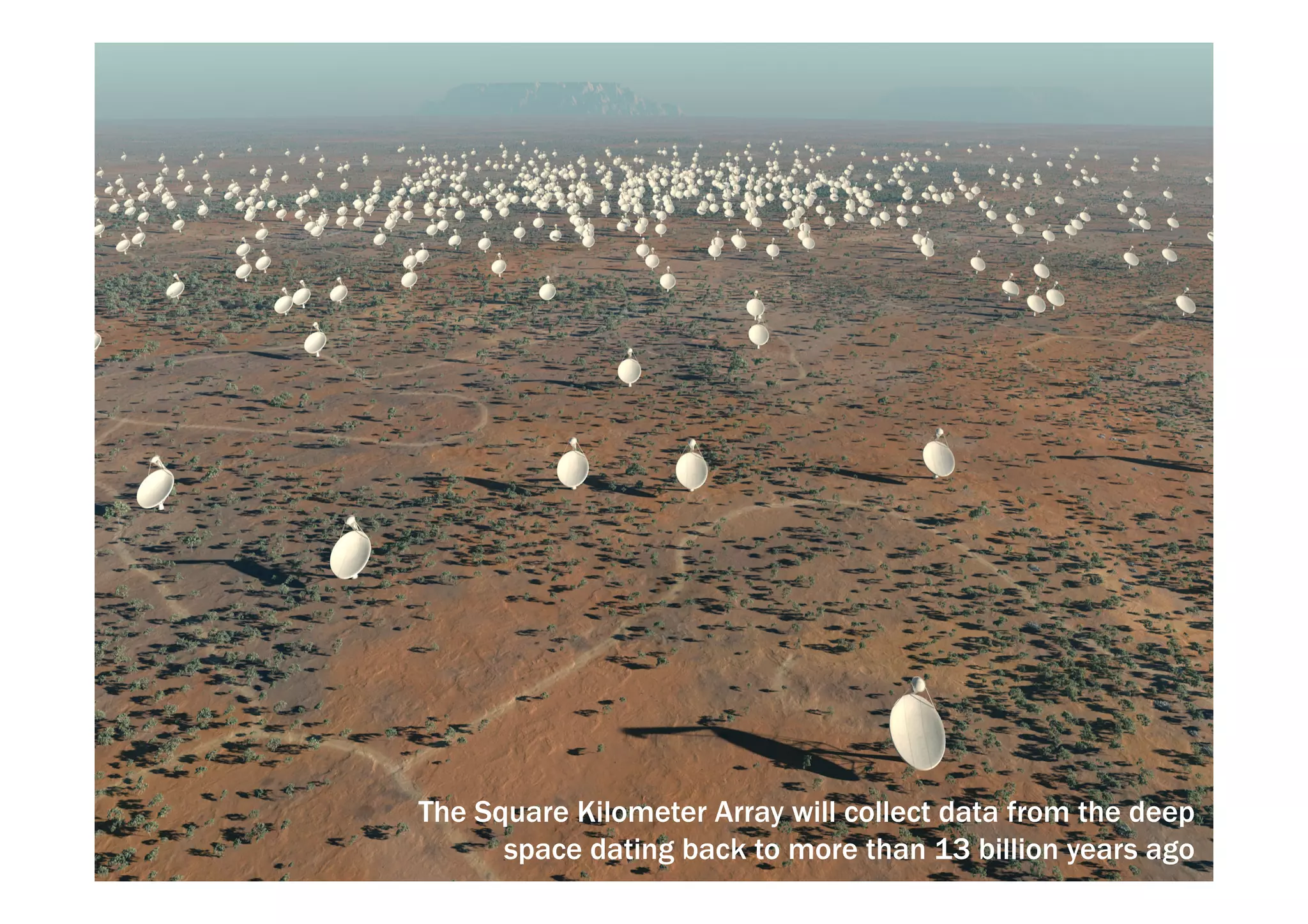

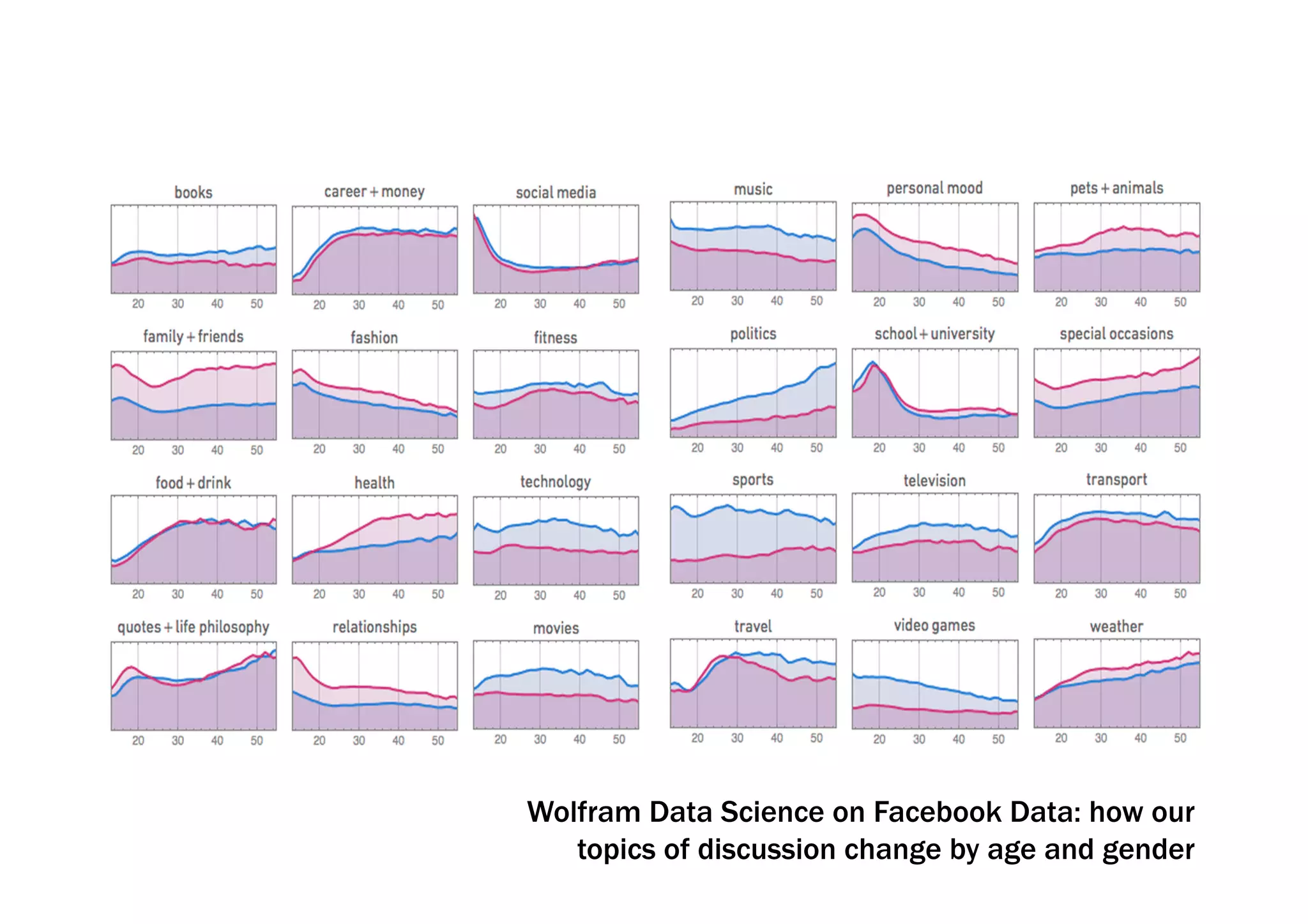

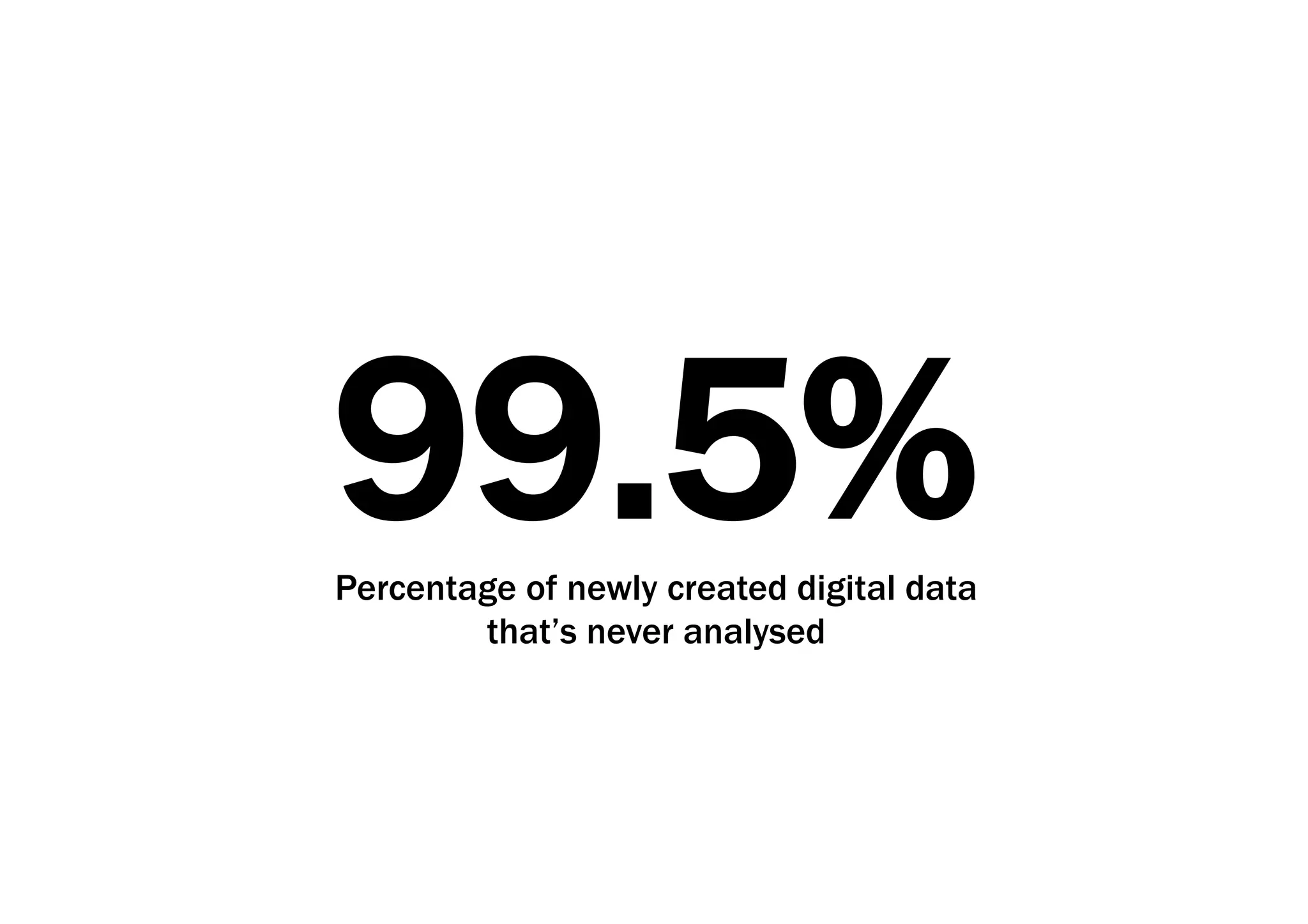

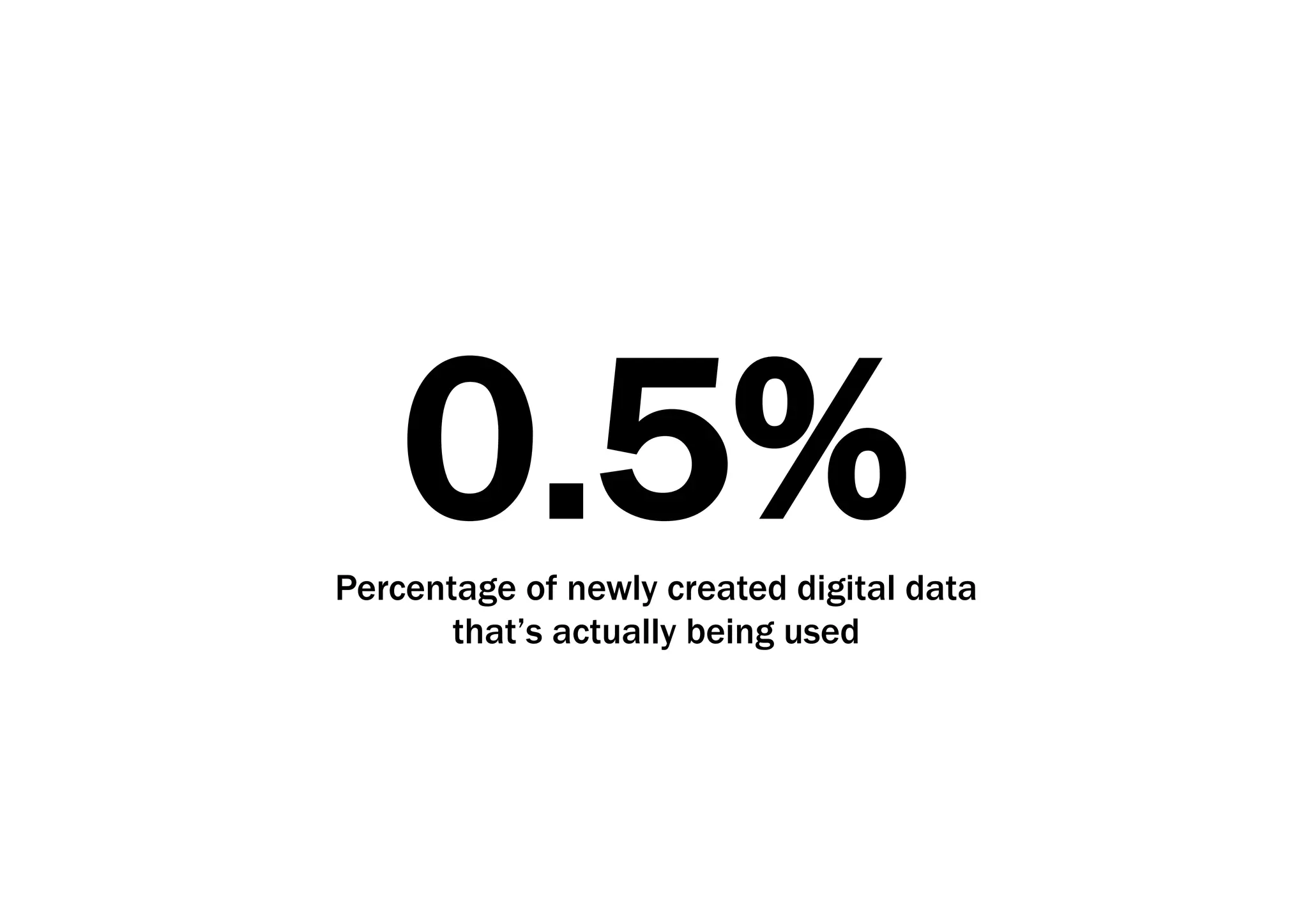

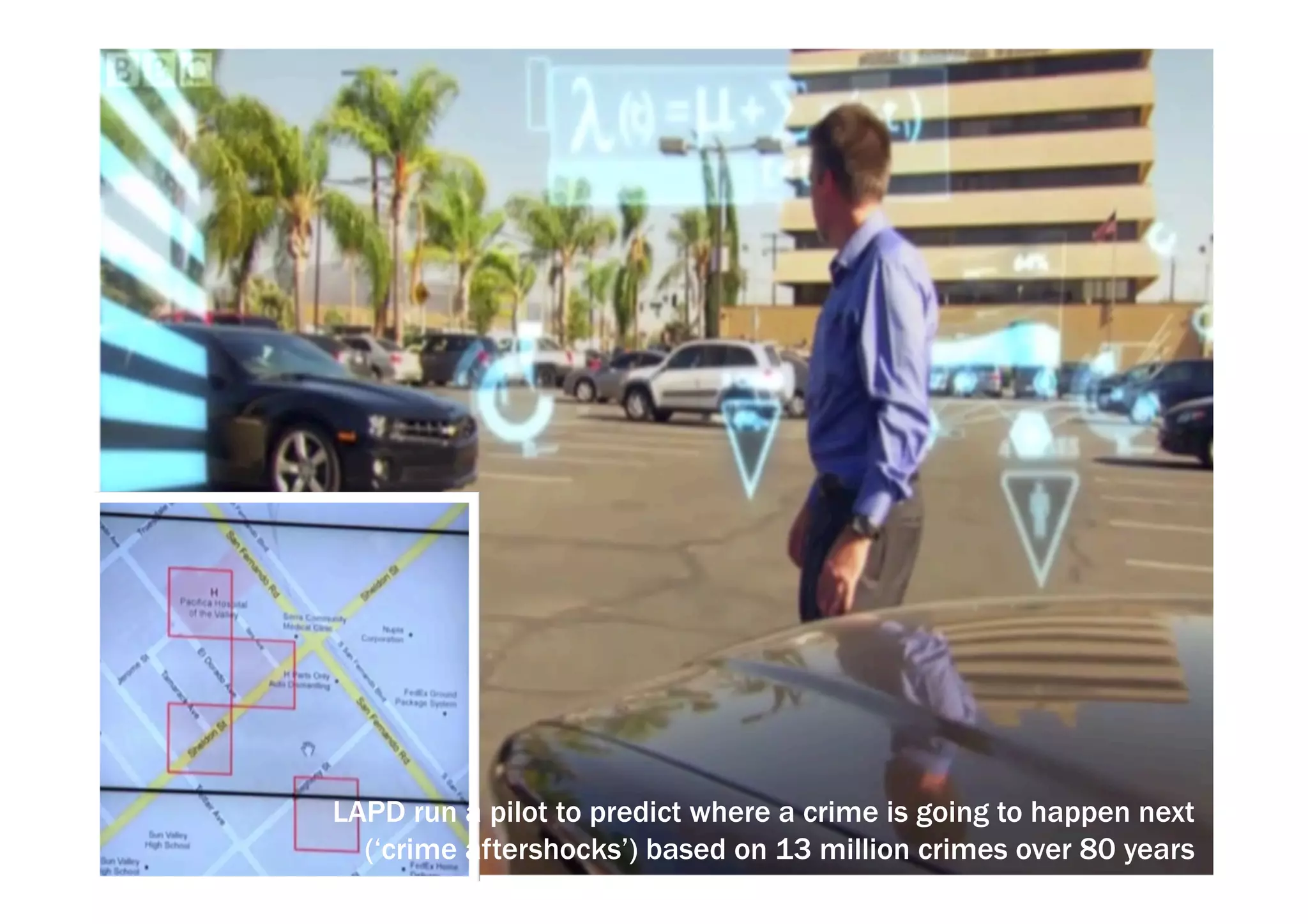

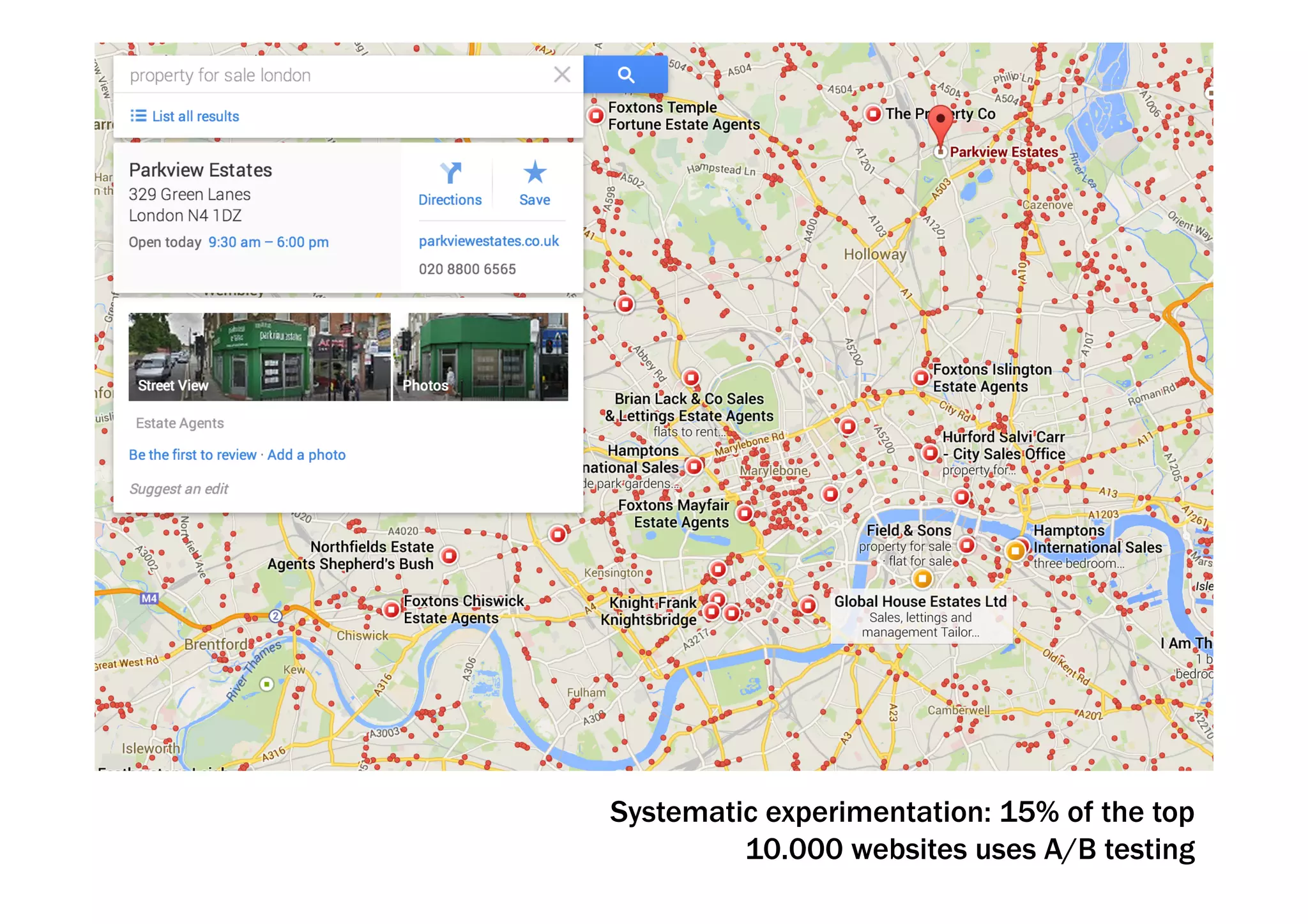

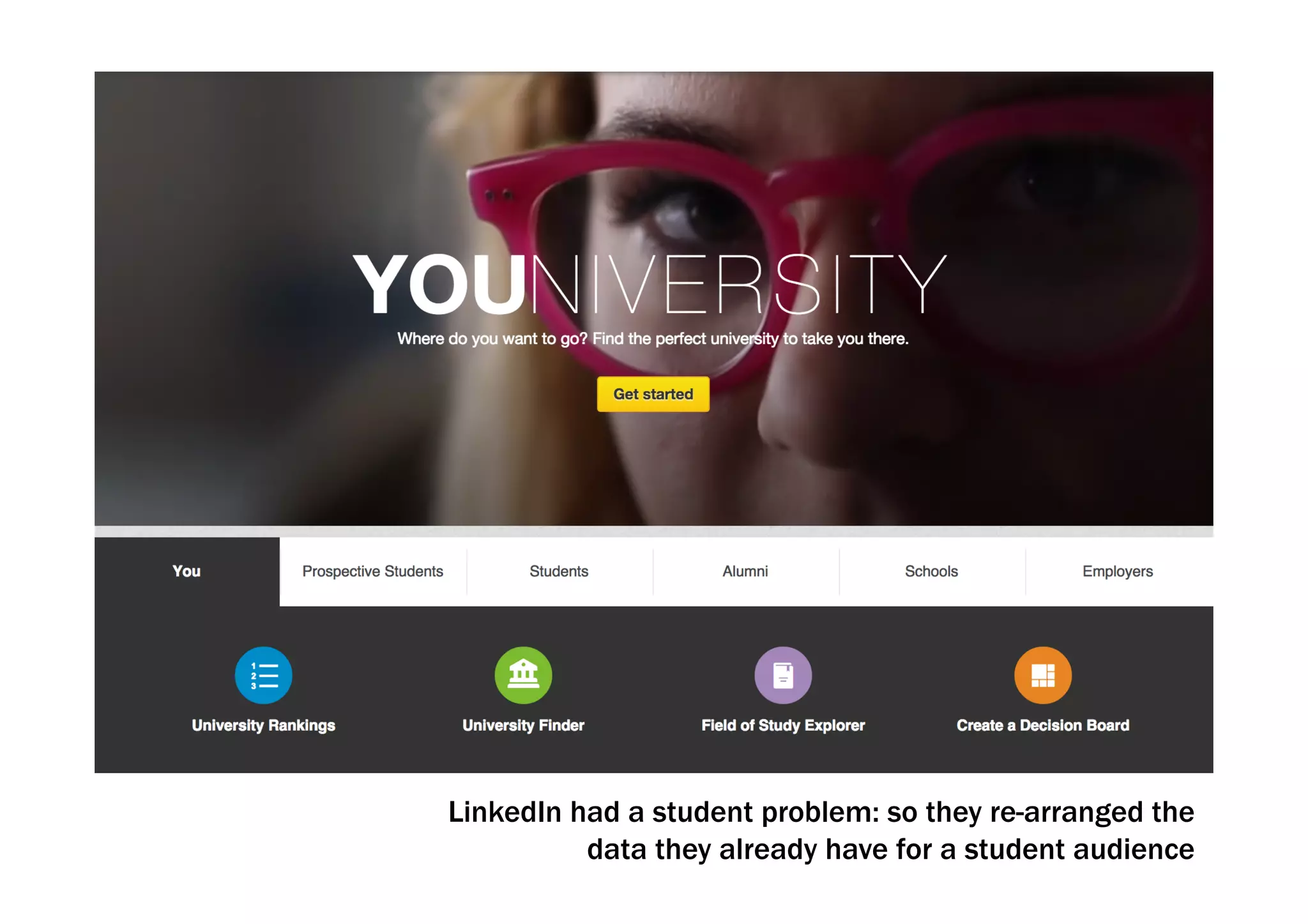

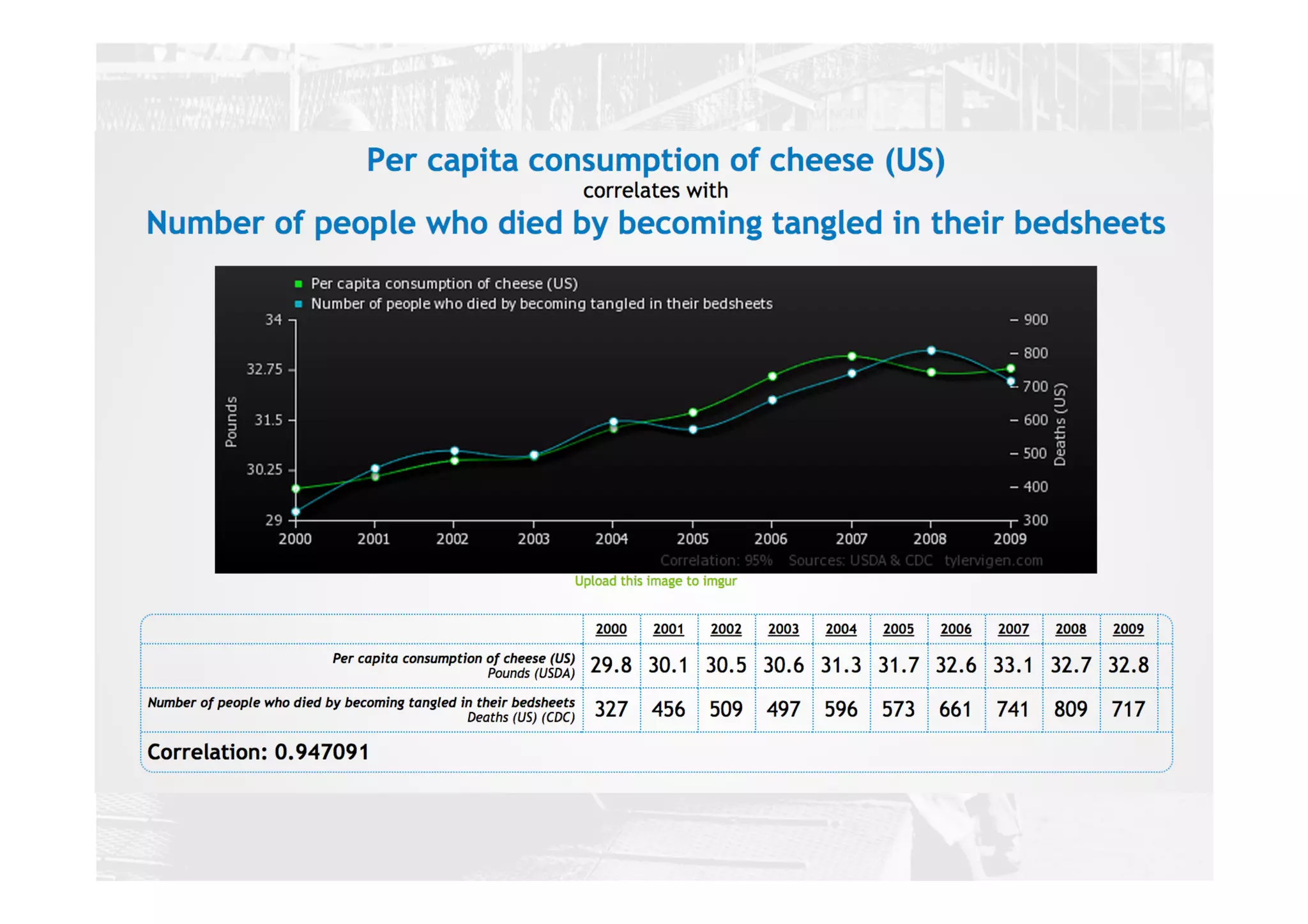

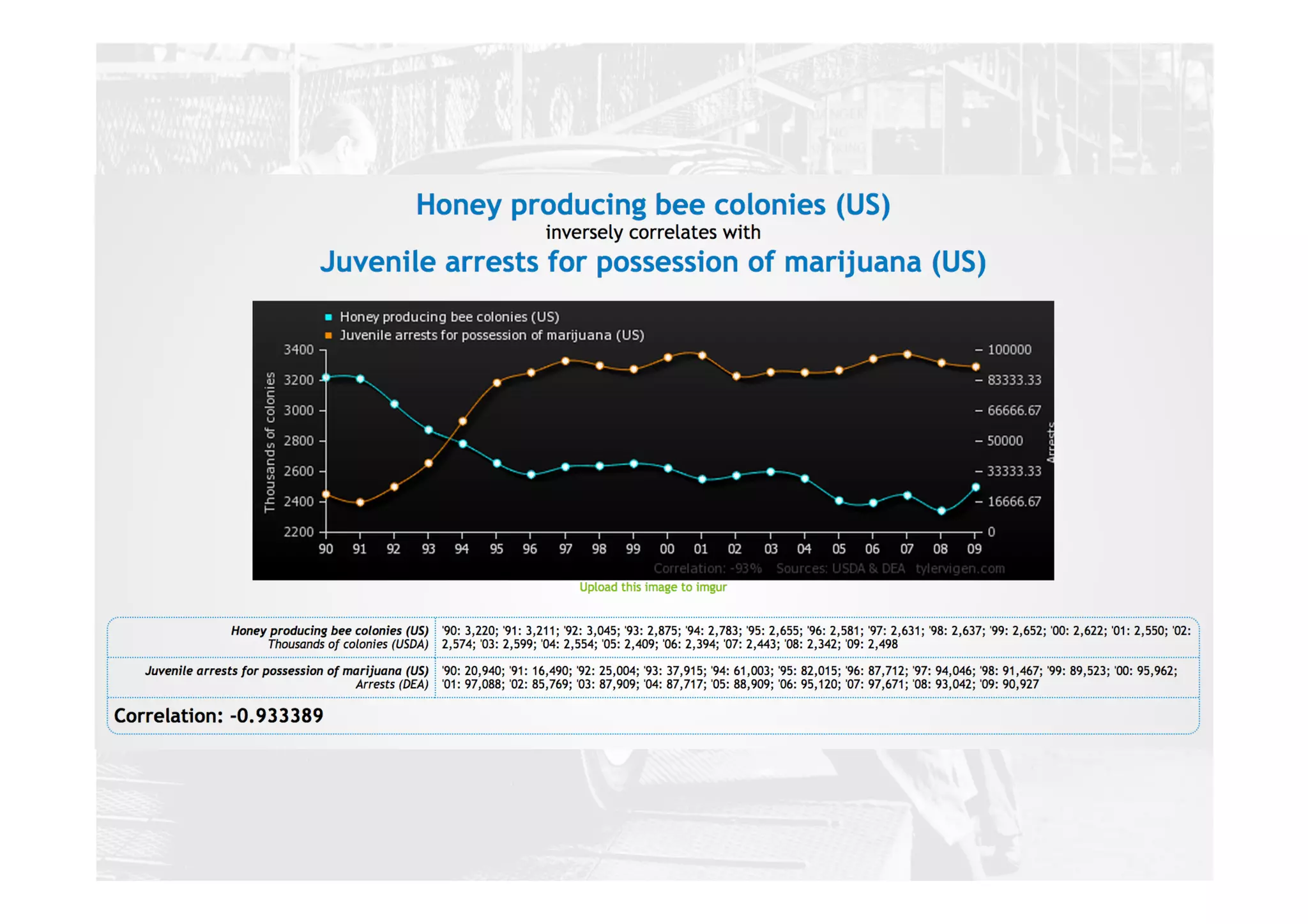

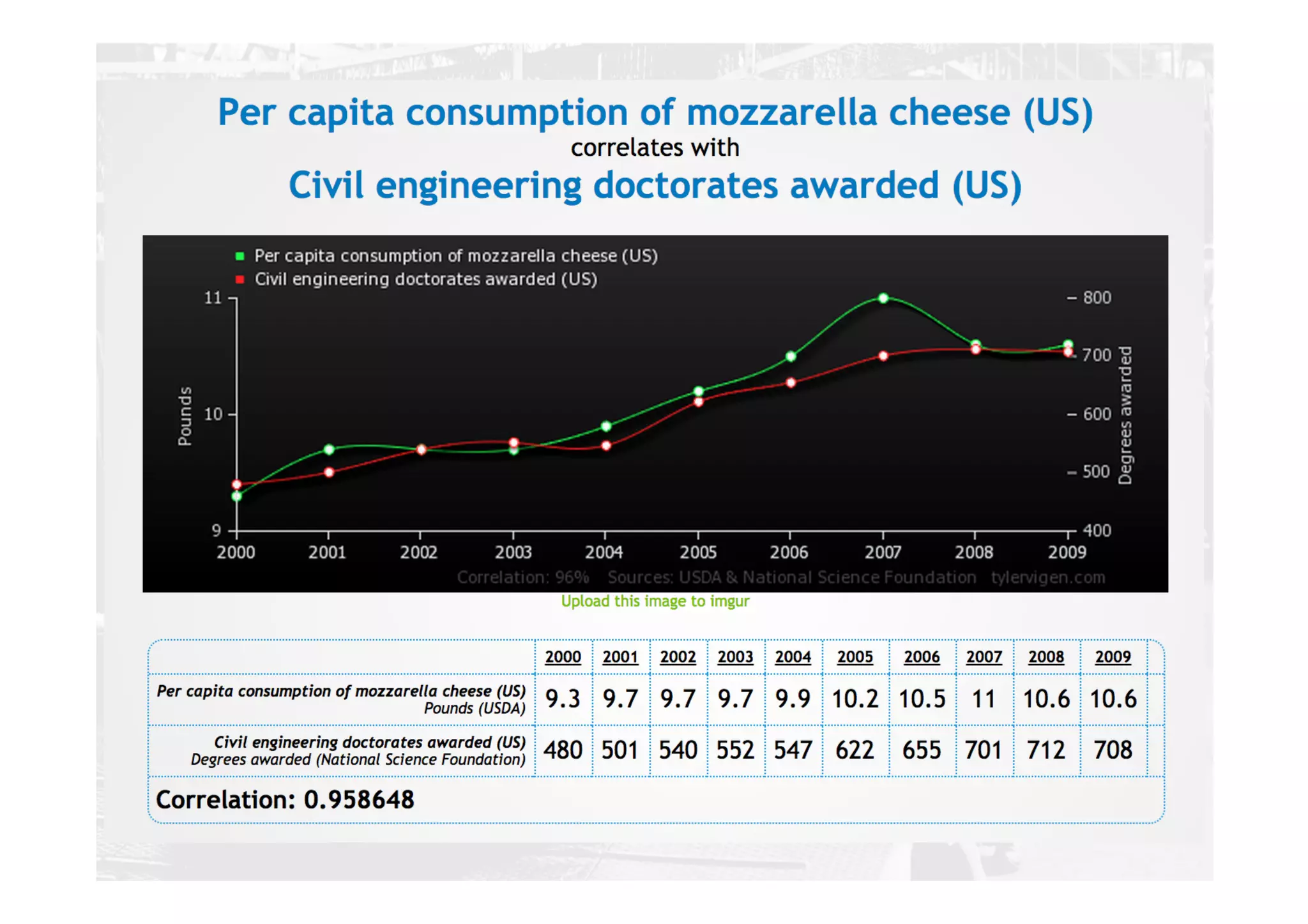

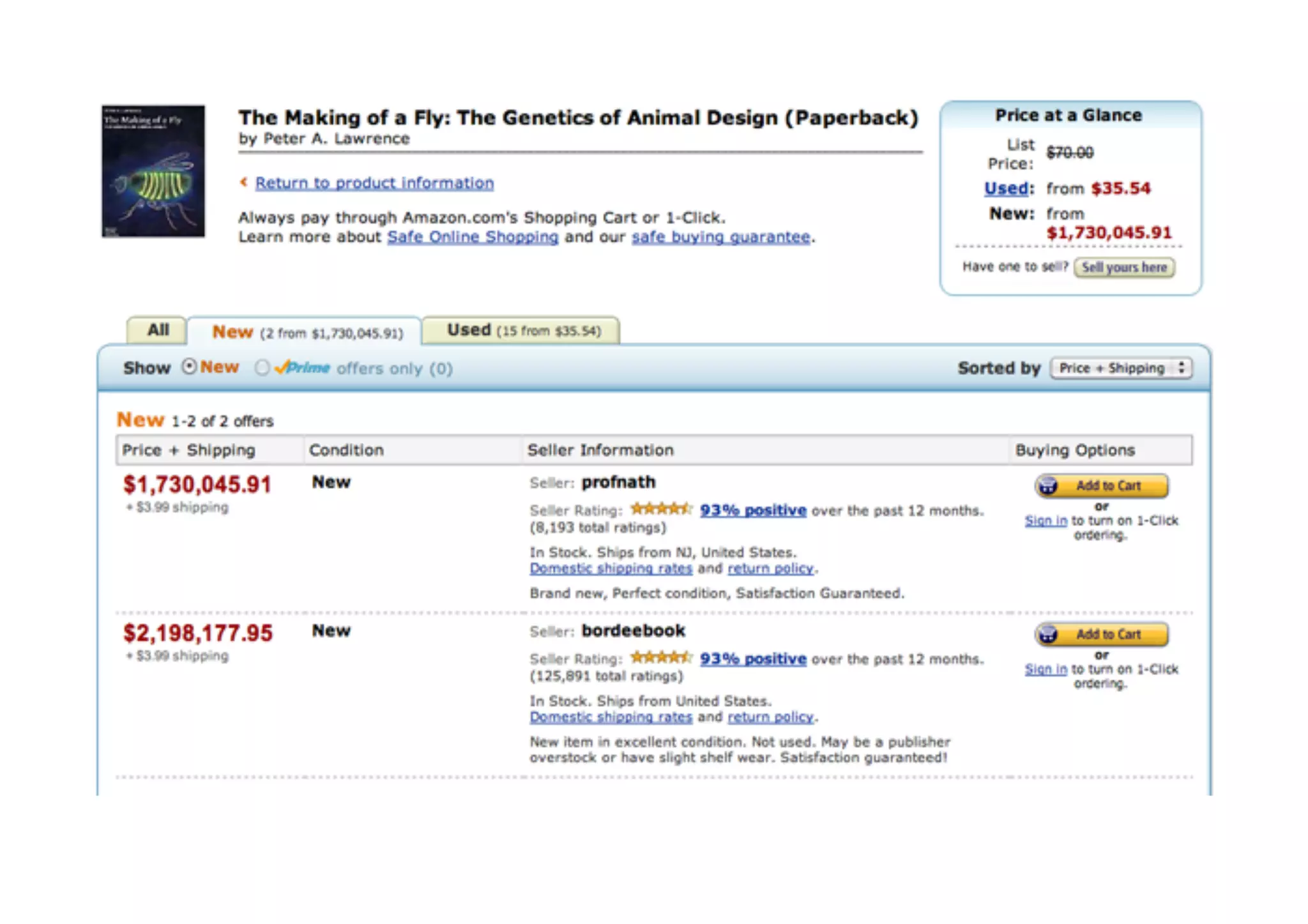

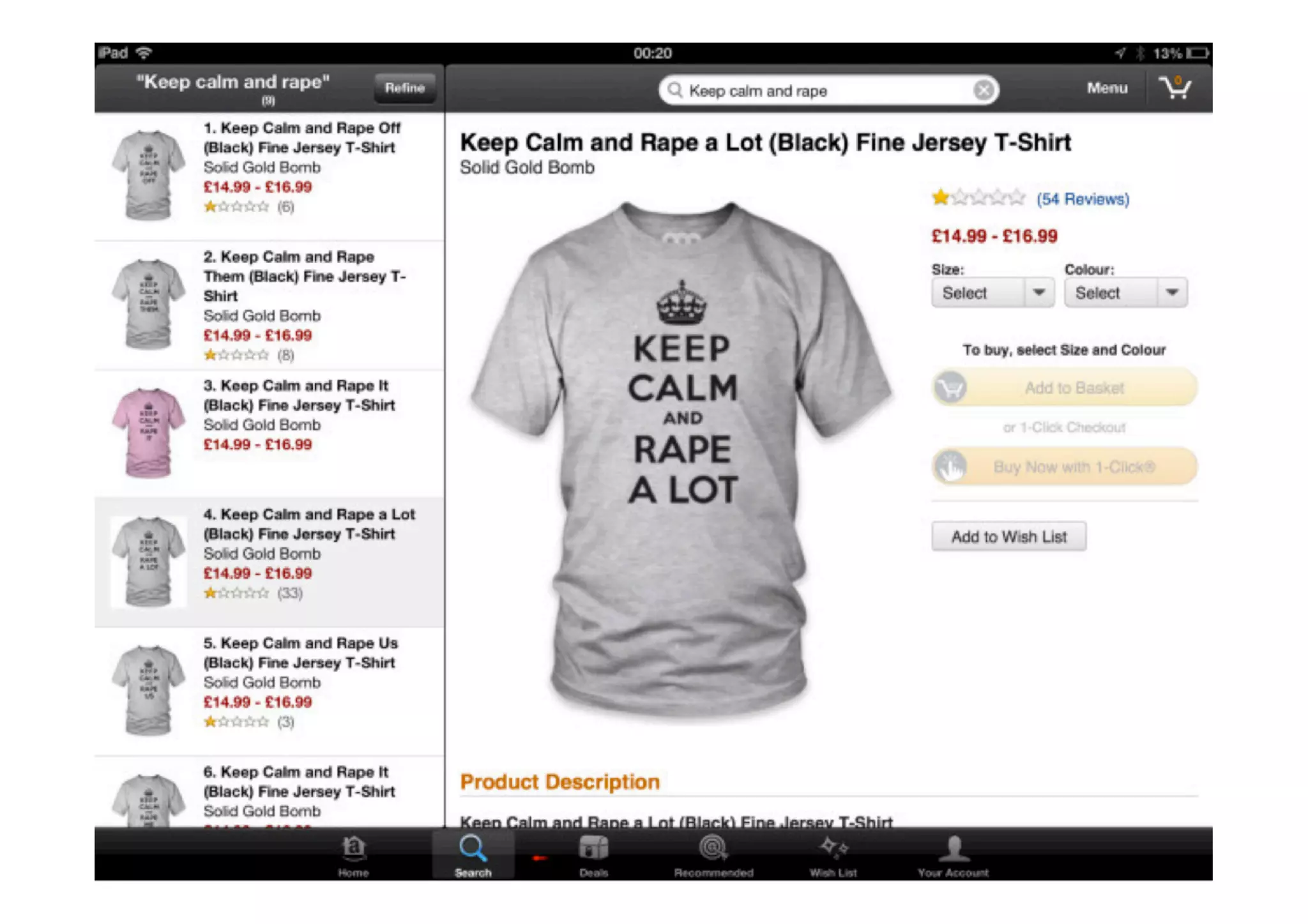

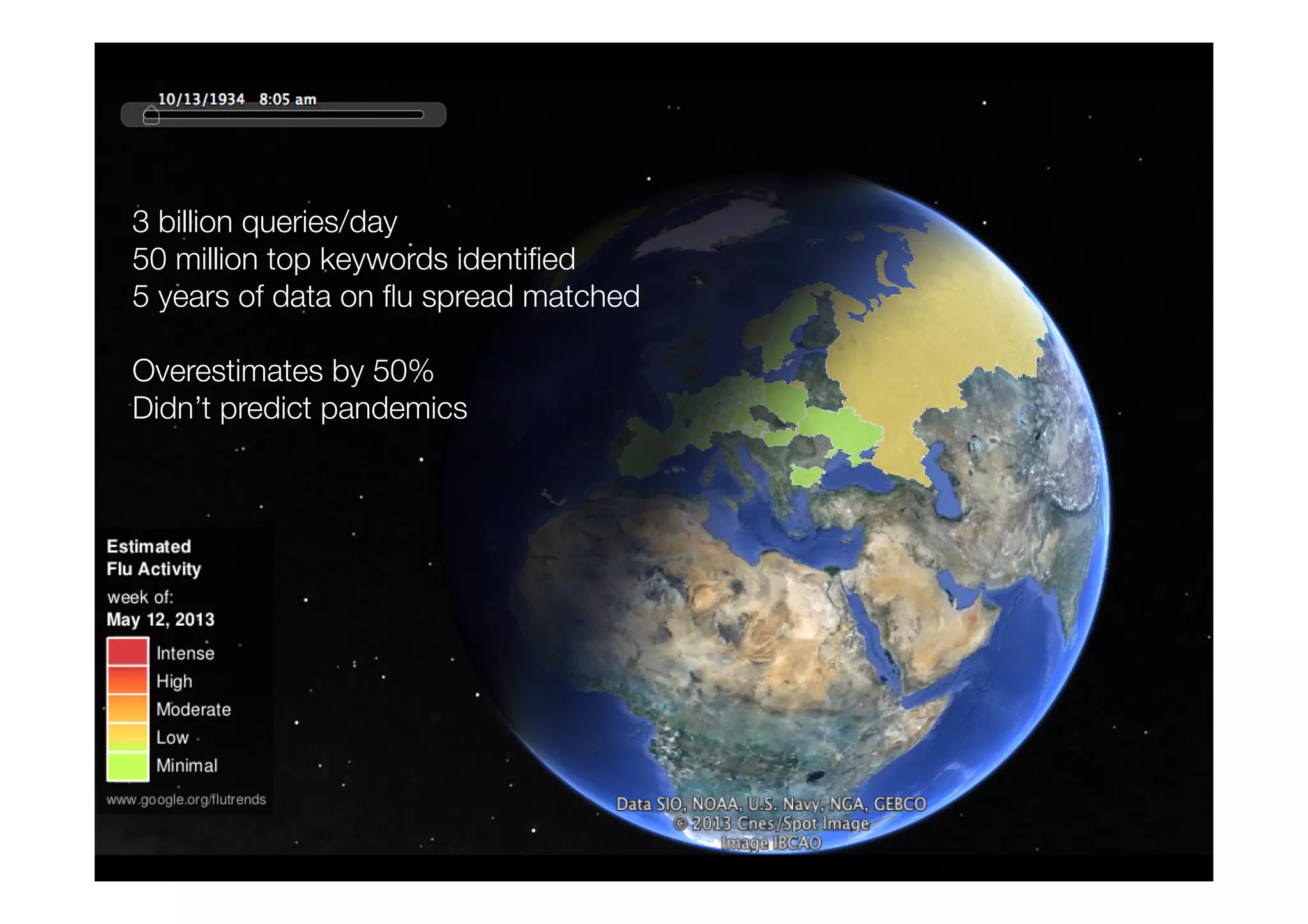

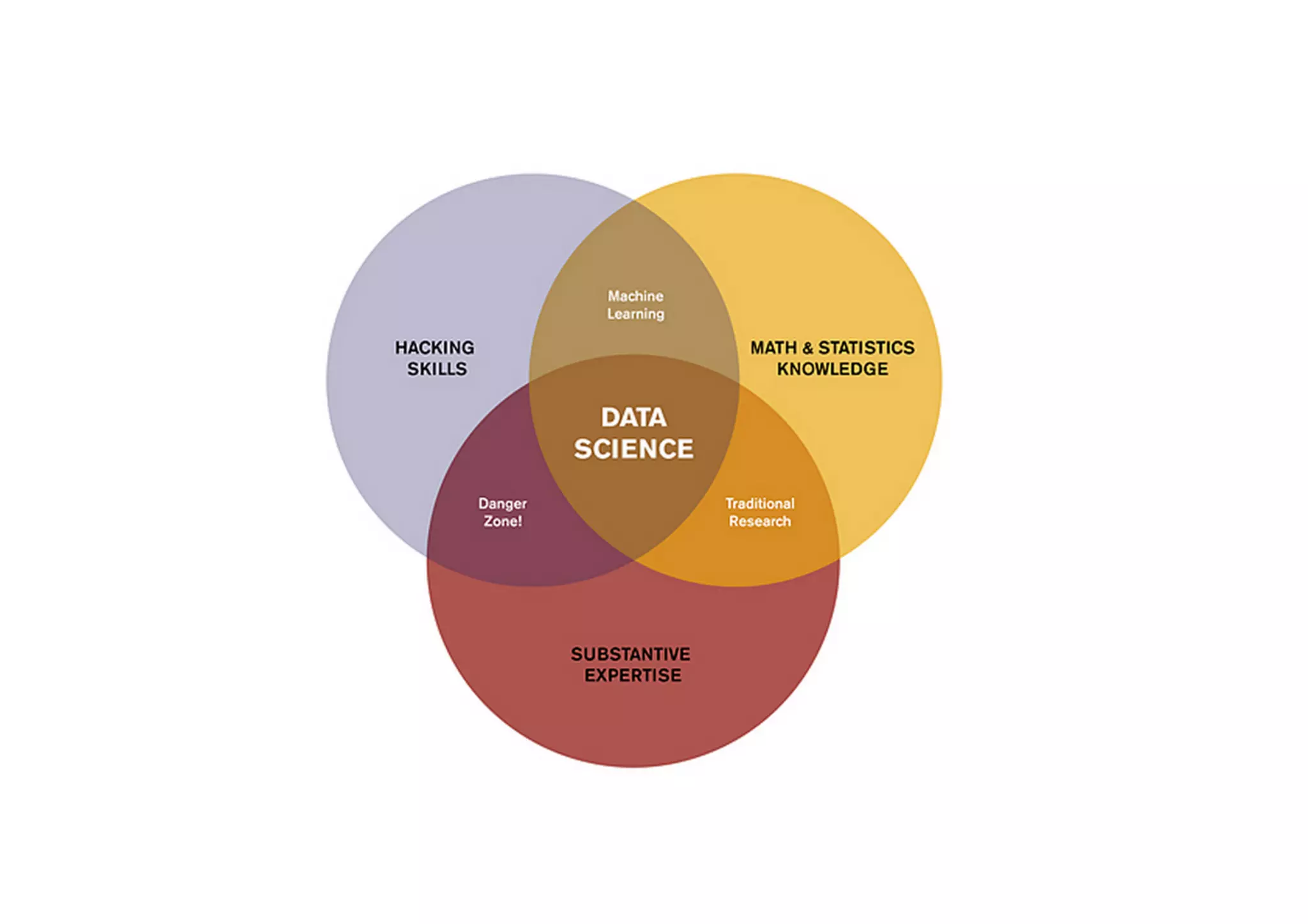

This document argues that big data is primarily a human problem rather than a technological one. Technology enables the collection and analysis of vast amounts of data, but humans define the problems, frame the questions, and interpret the results, and each of these steps can introduce bias. The document also notes that although a great deal of data is collected, most of it is never analyzed, because large, messy datasets are difficult to prepare, standardize, and make sense of. Overall, big data represents an innovation in human decision-making and problem-solving rather than merely a technical advancement.