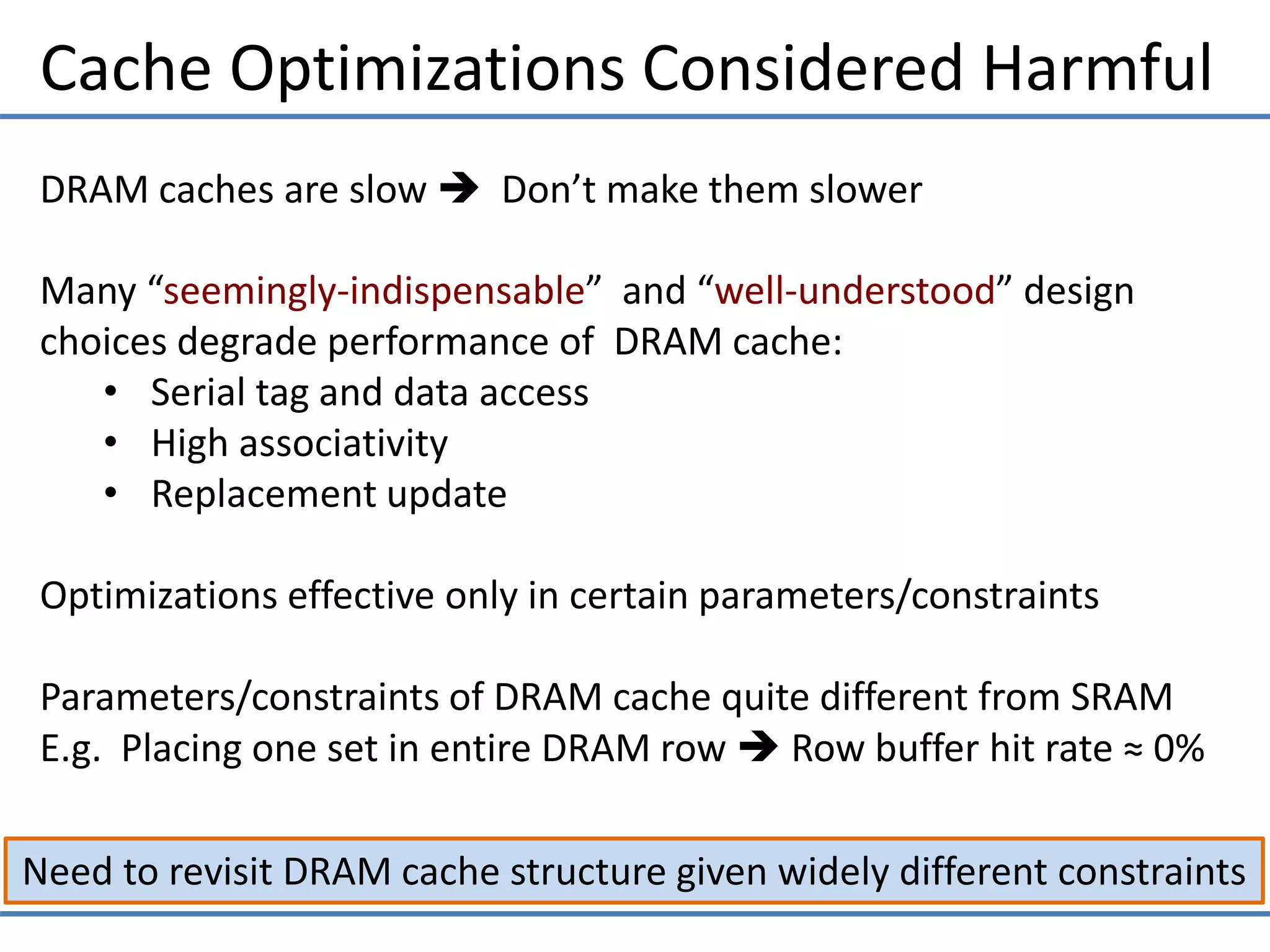

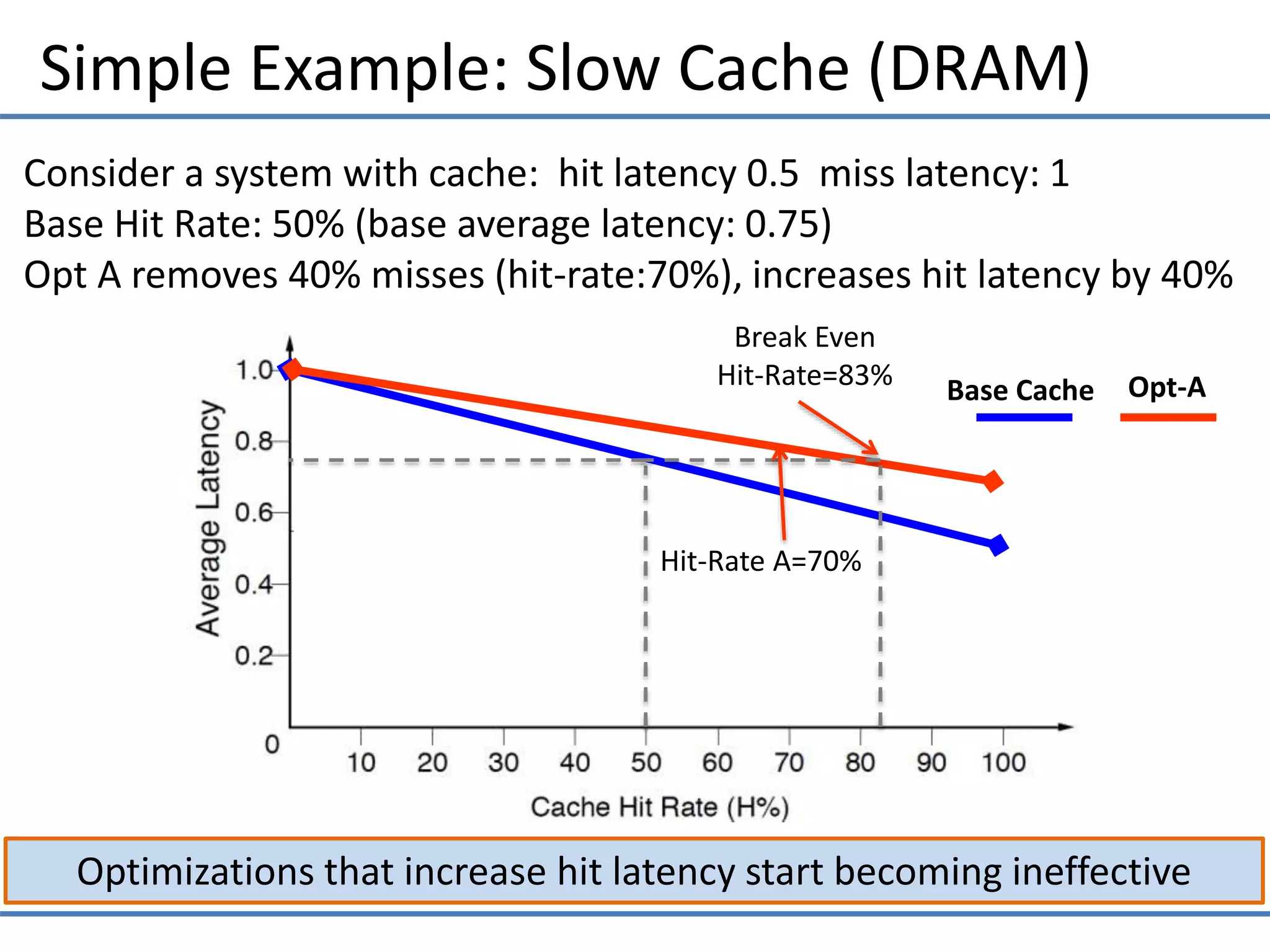

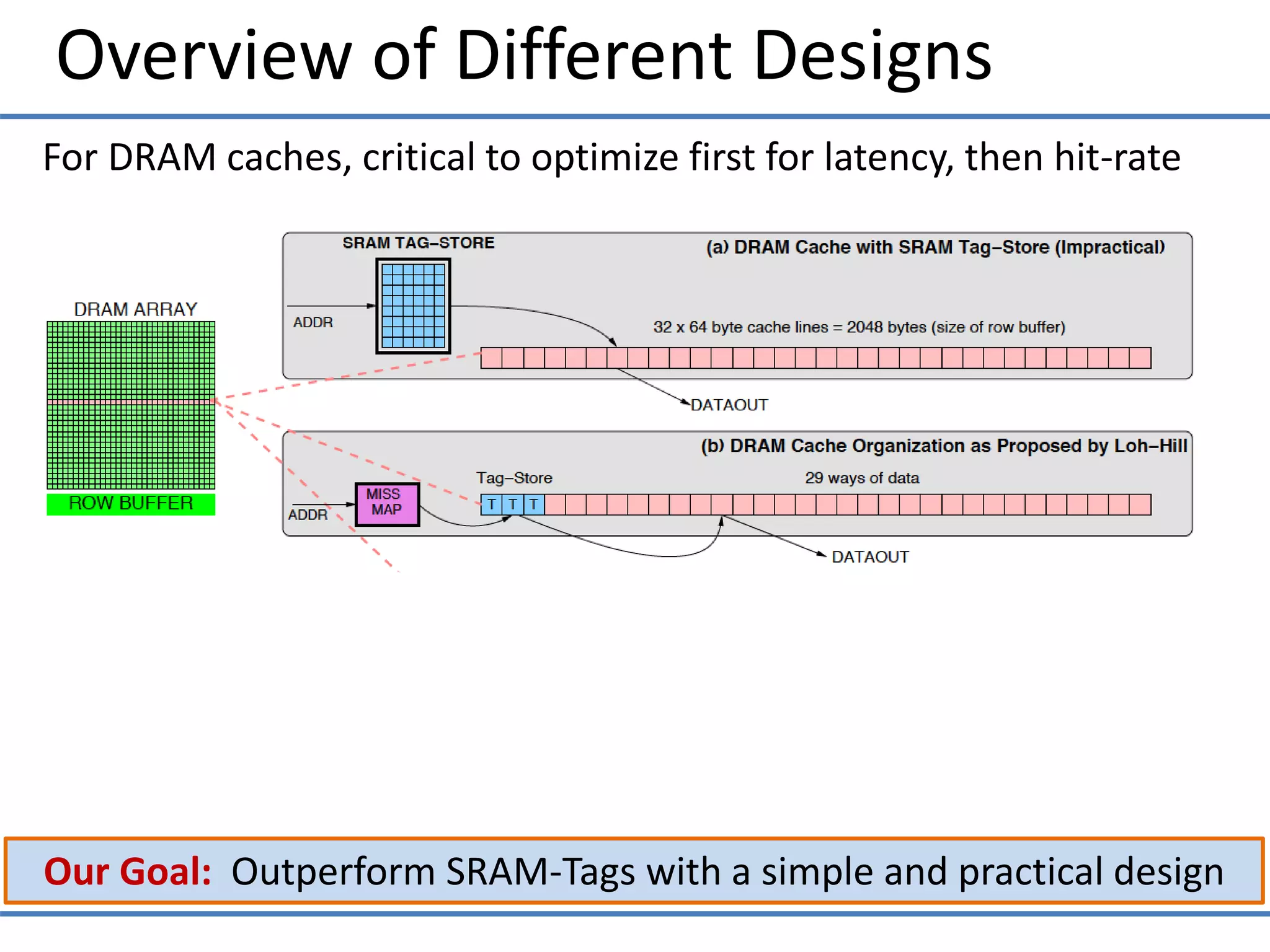

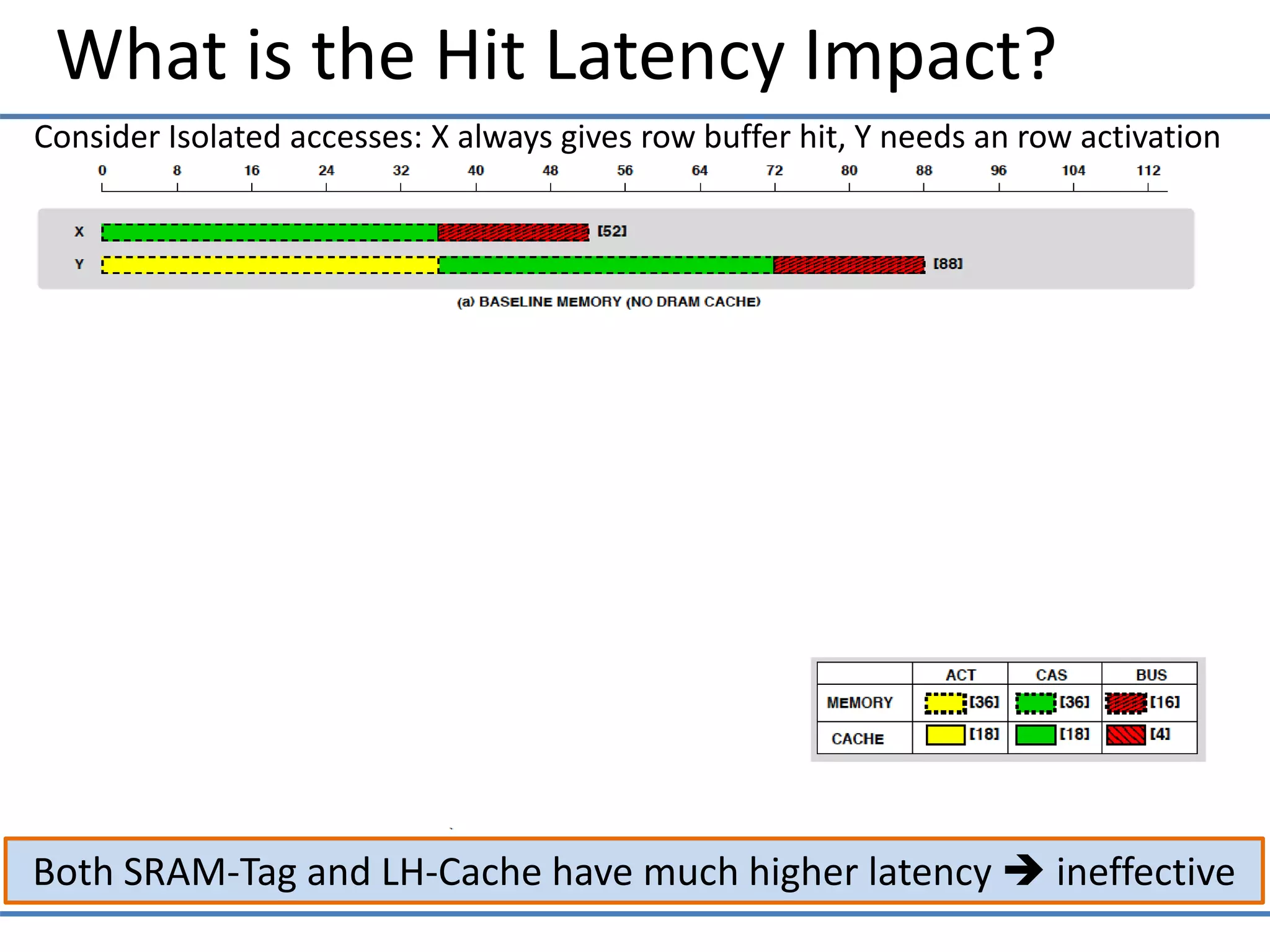

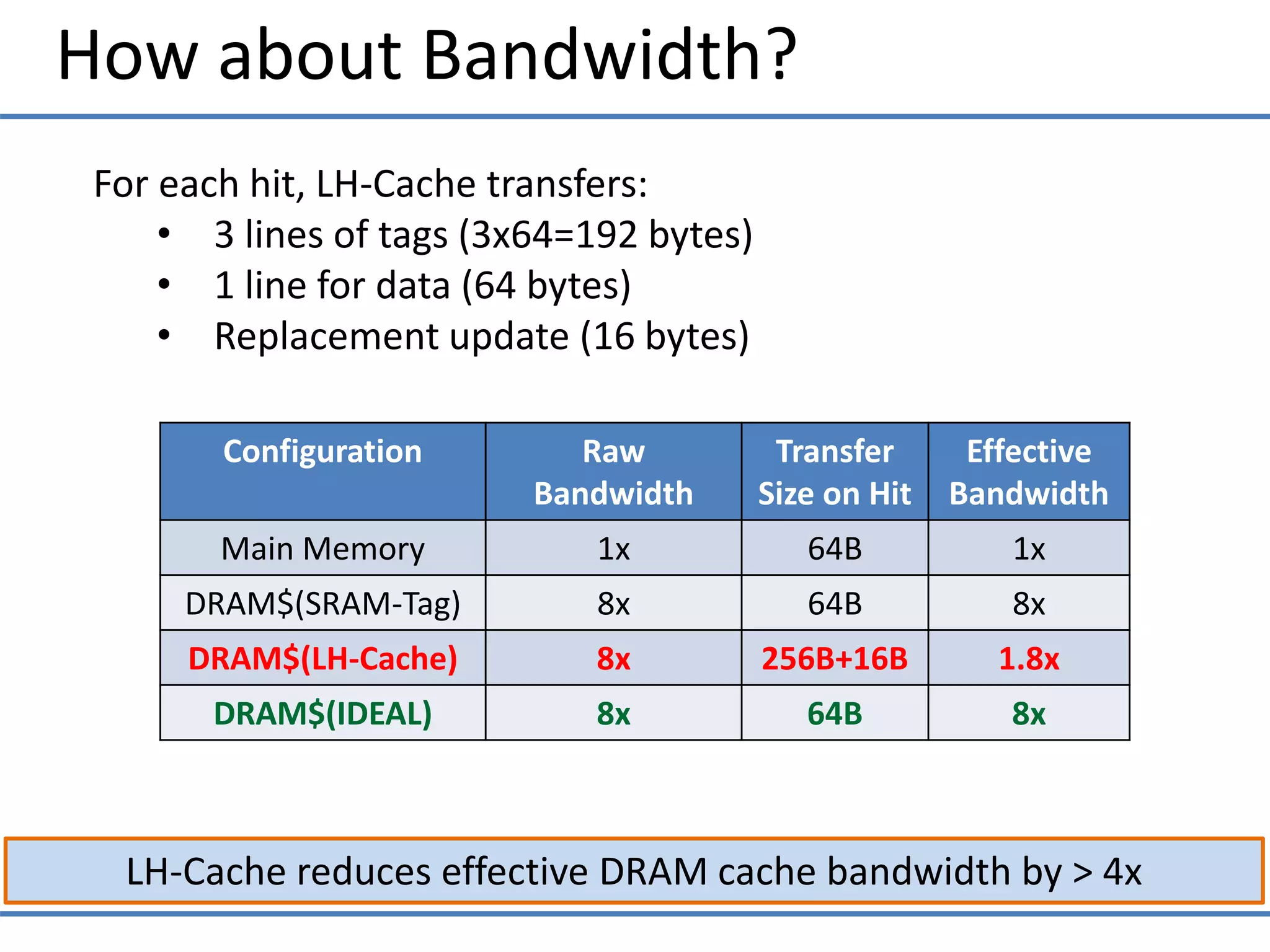

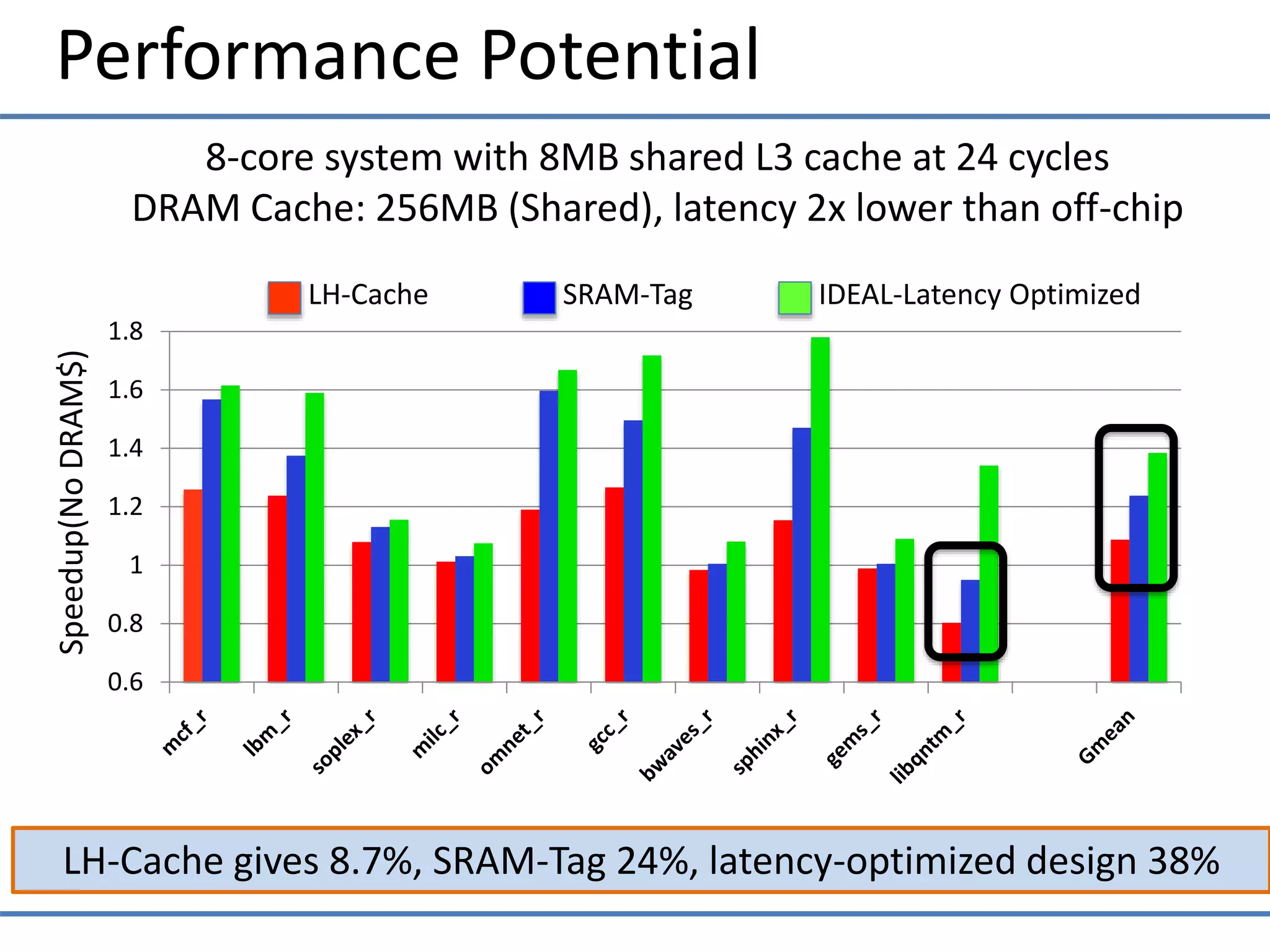

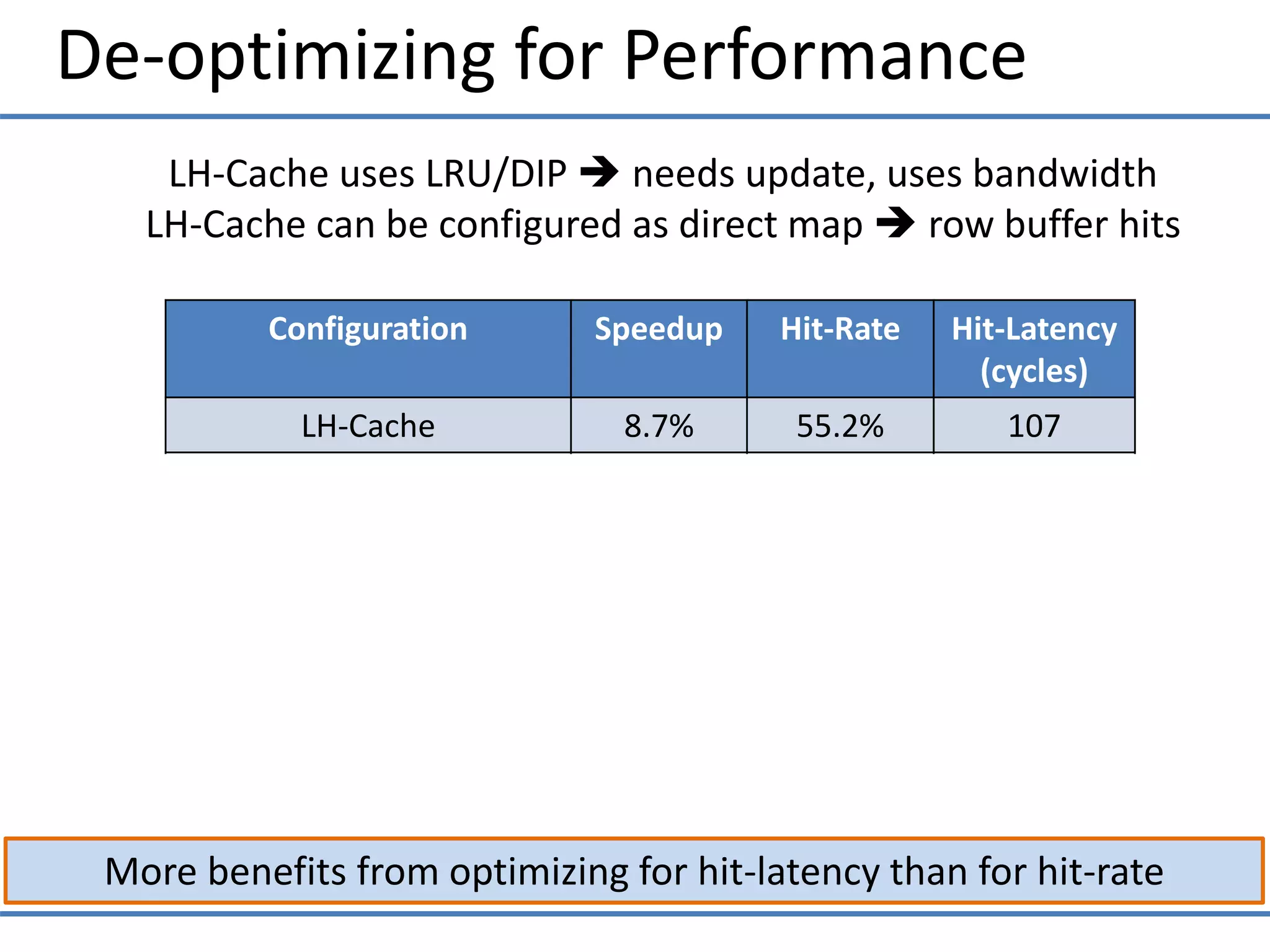

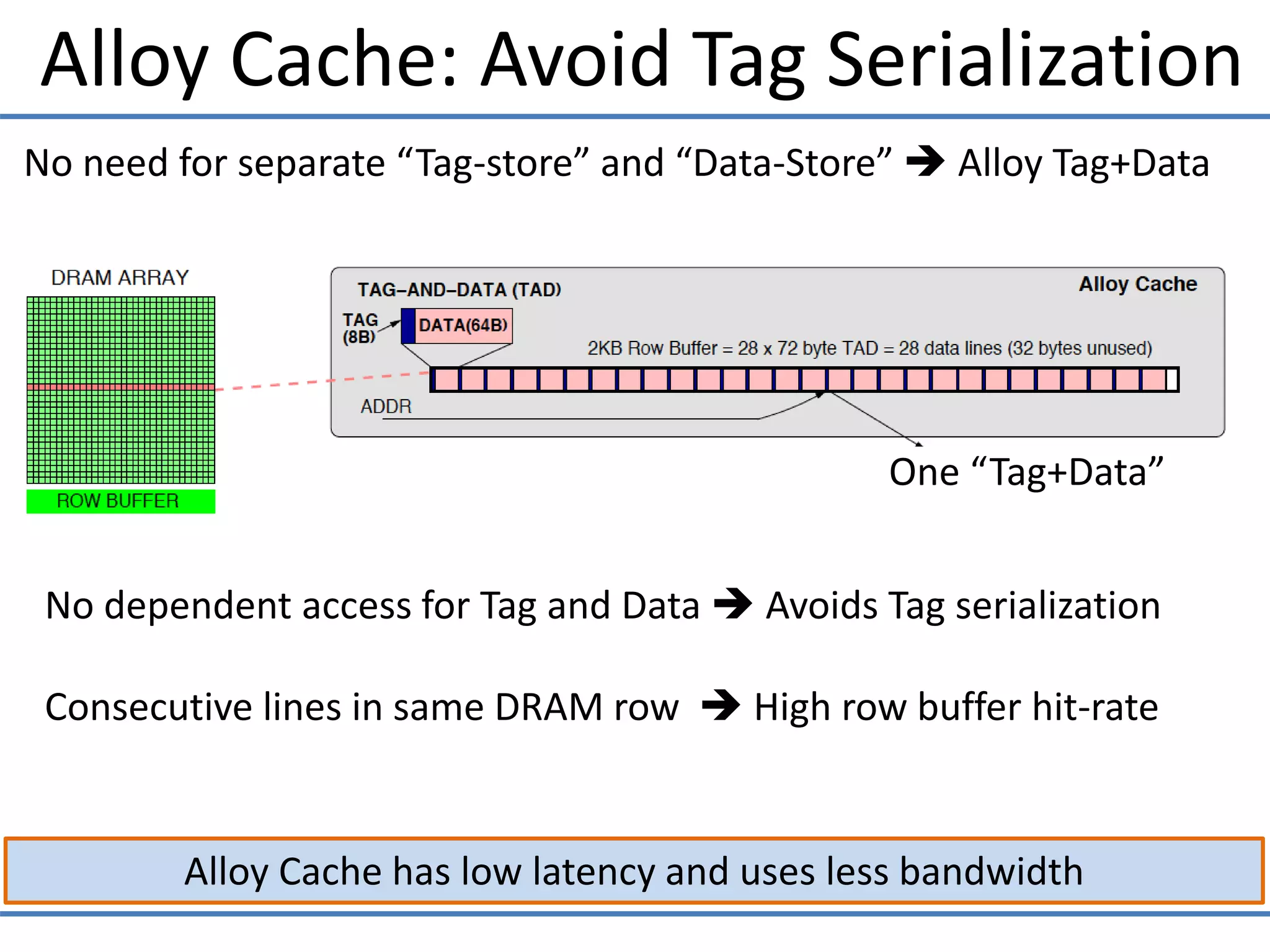

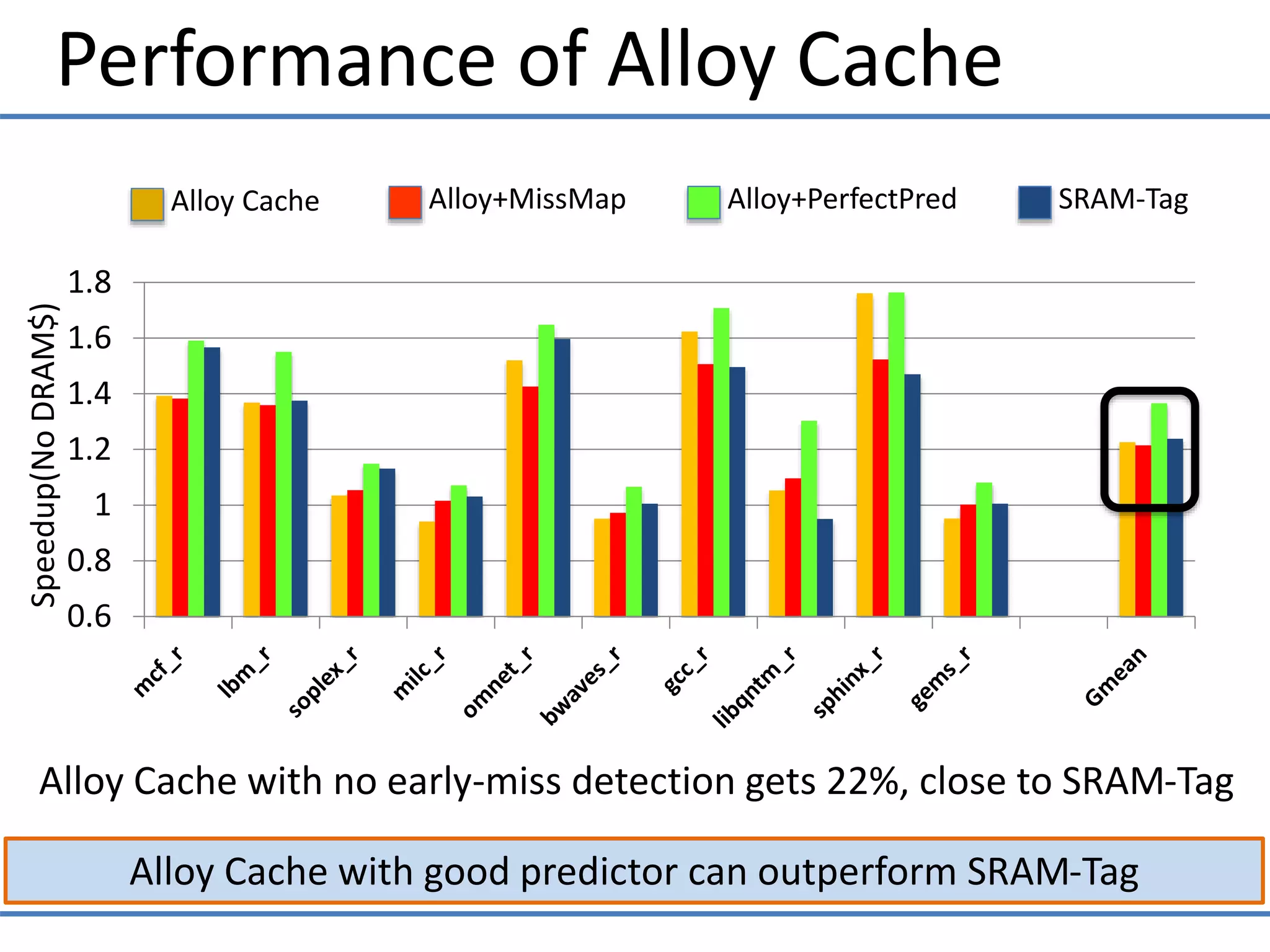

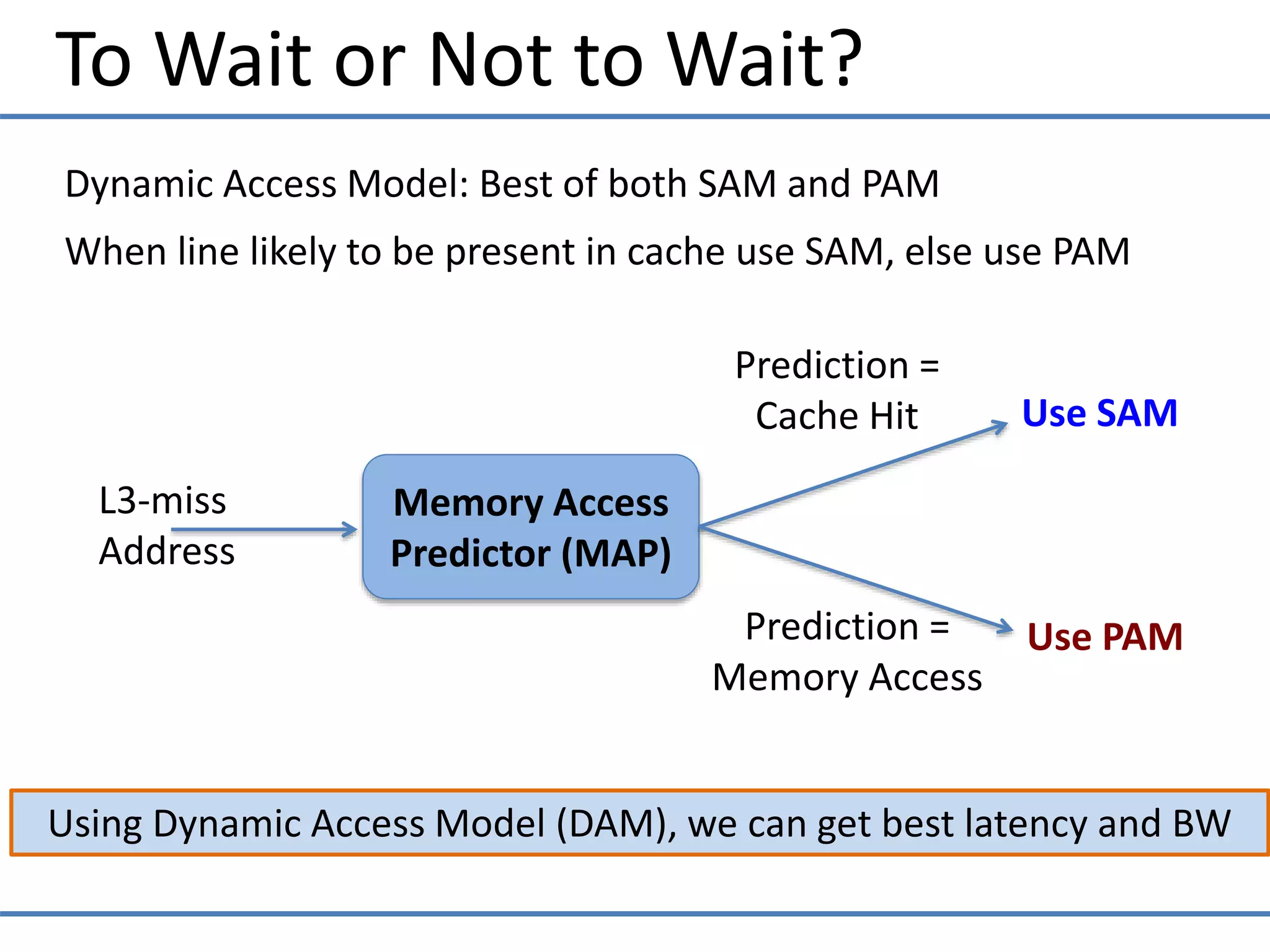

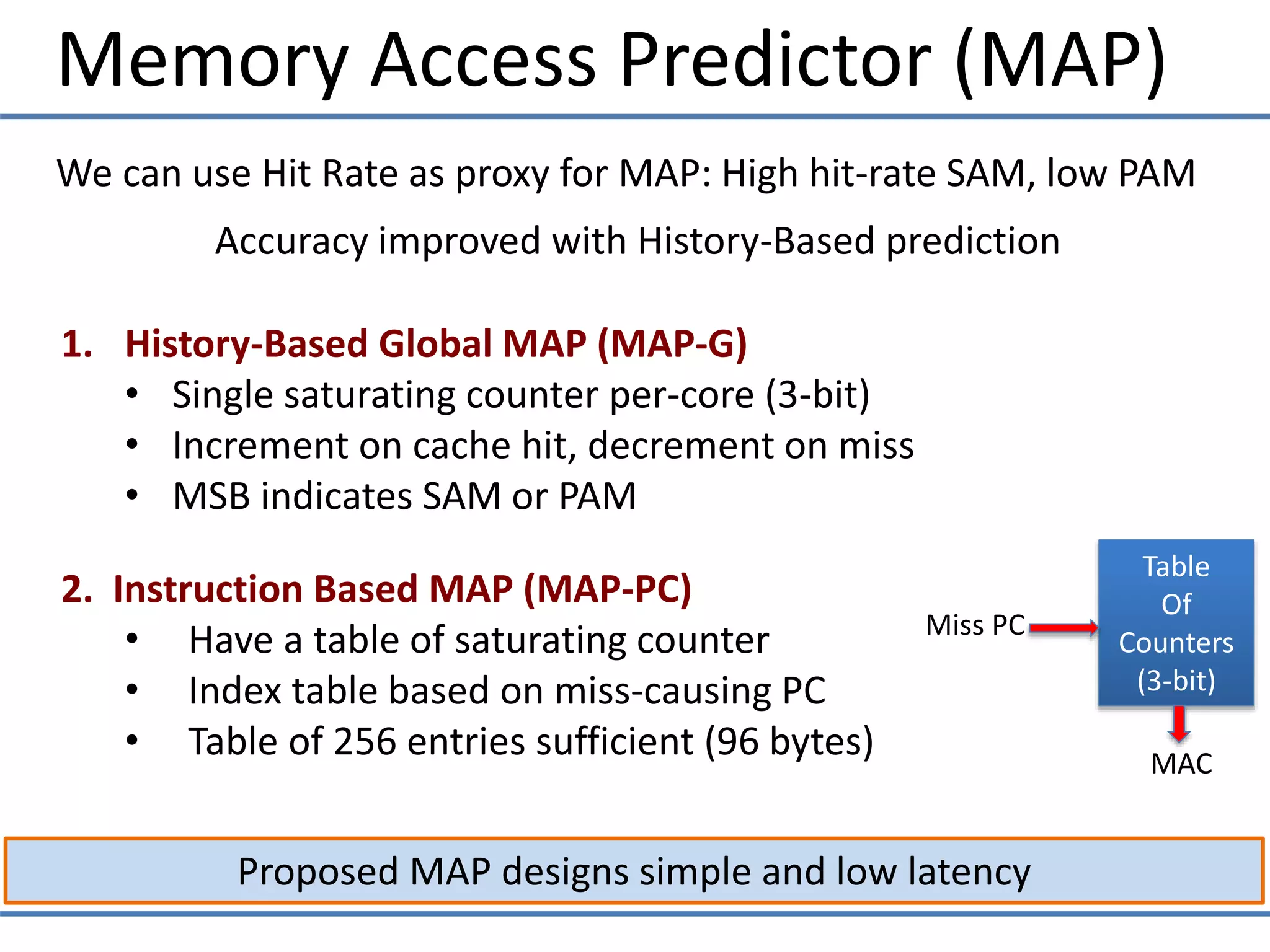

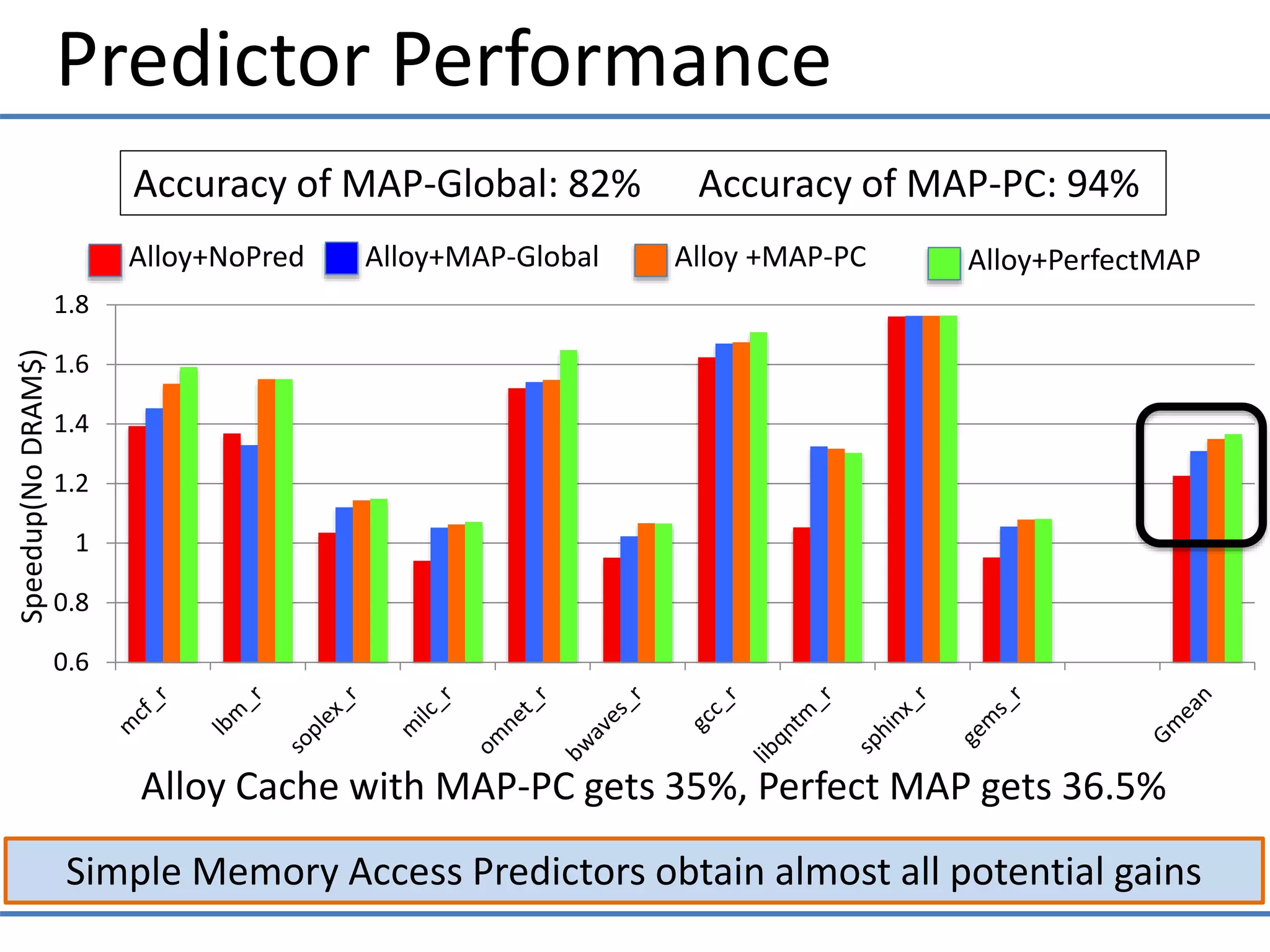

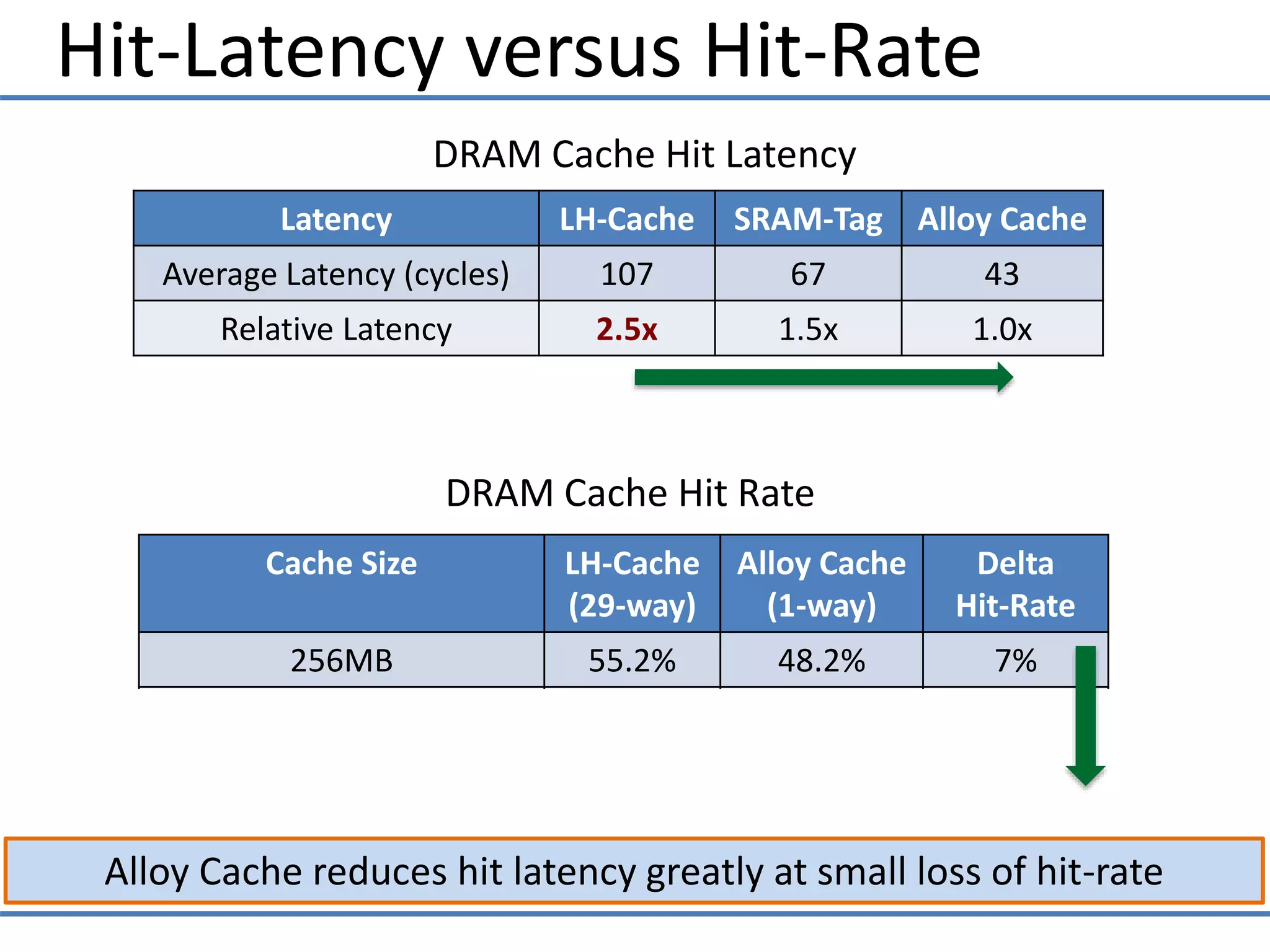

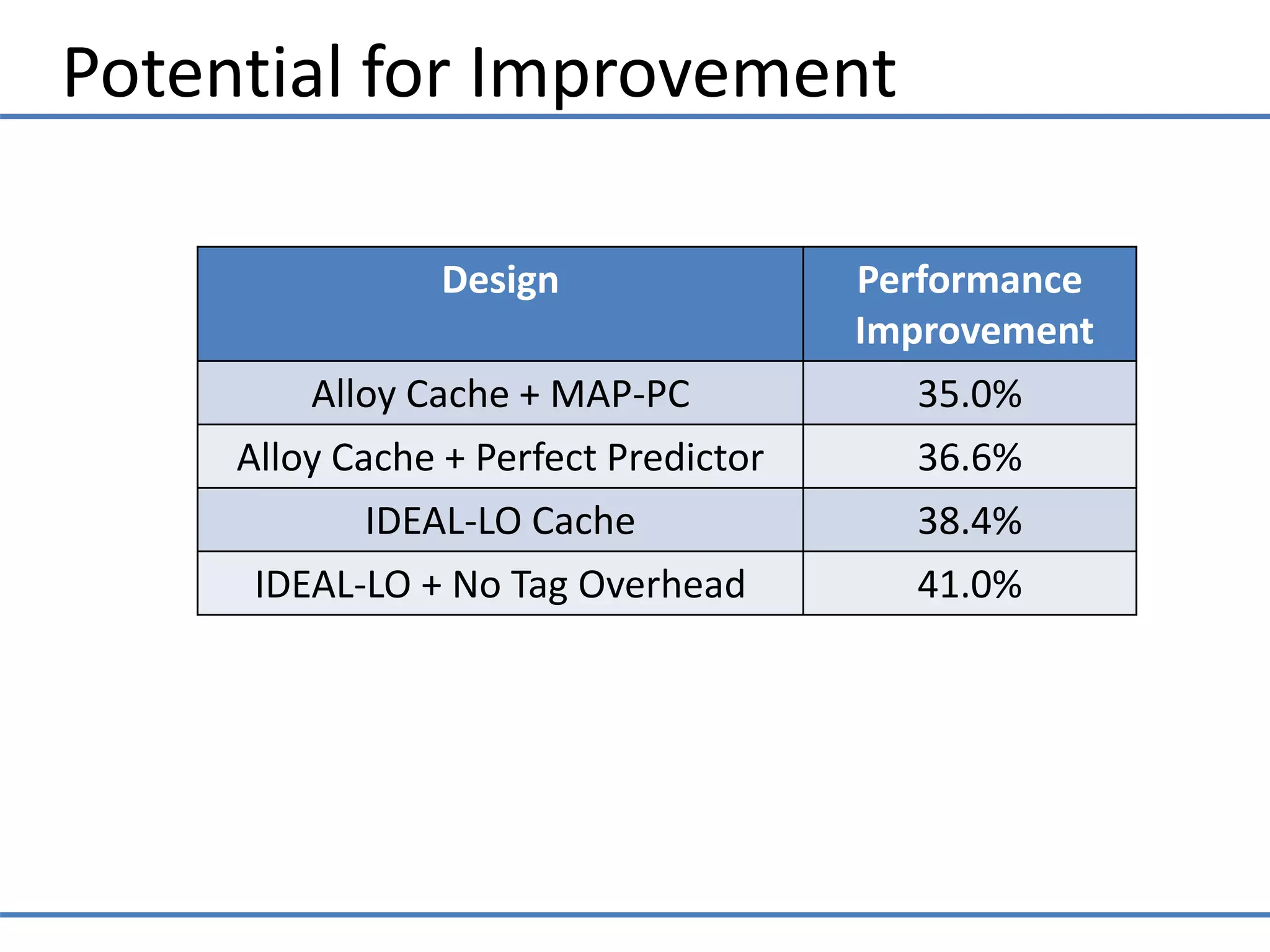

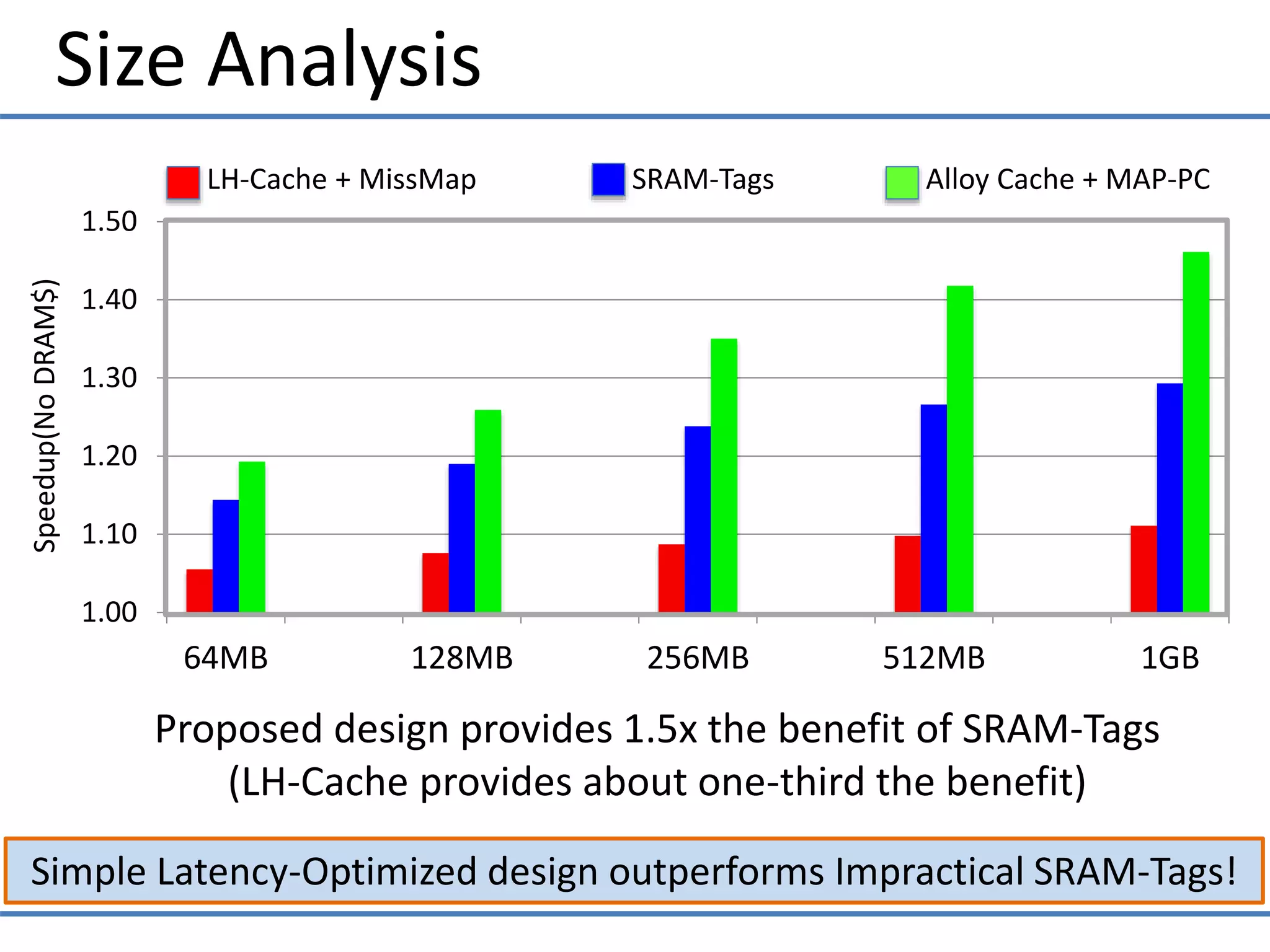

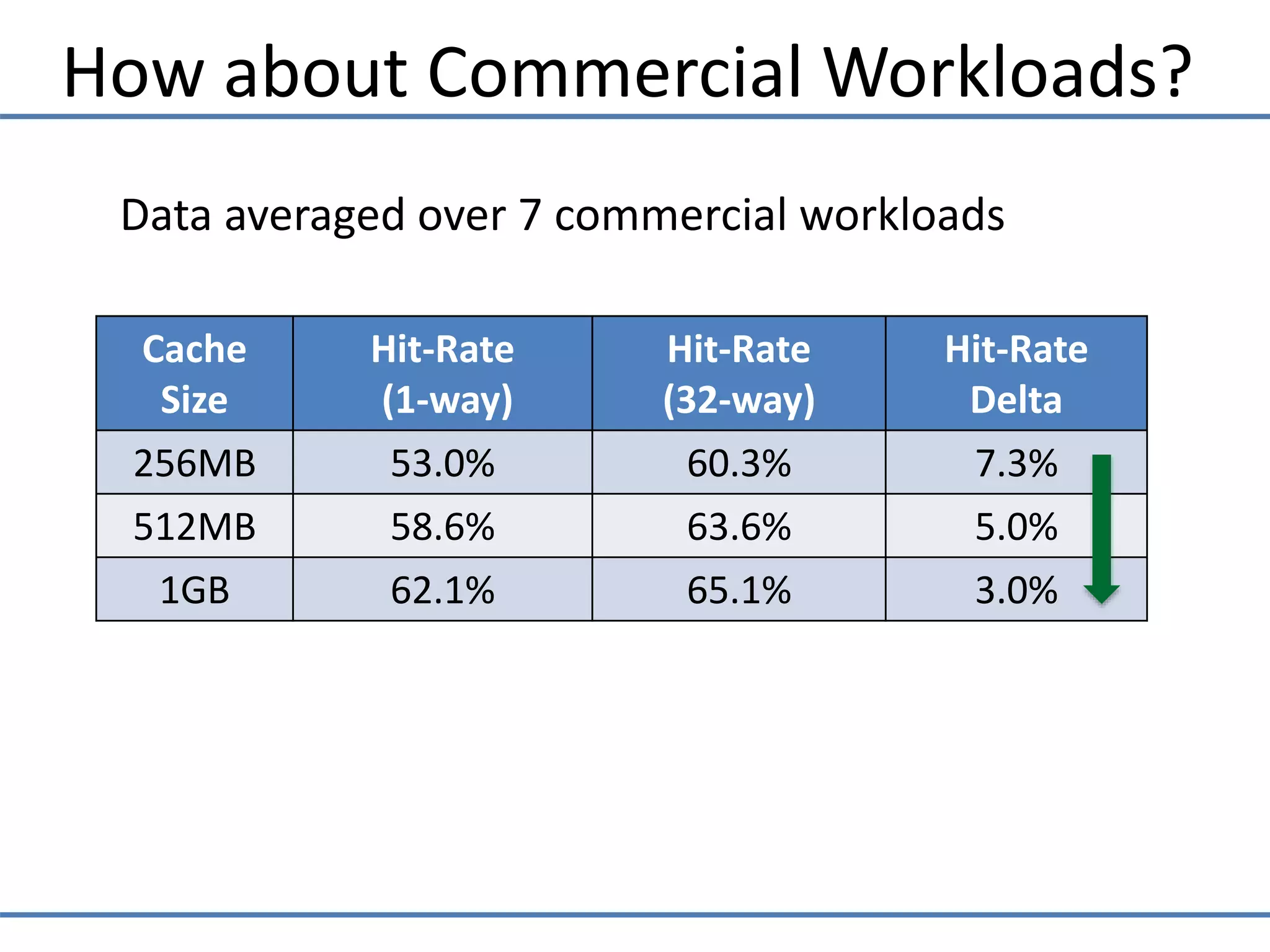

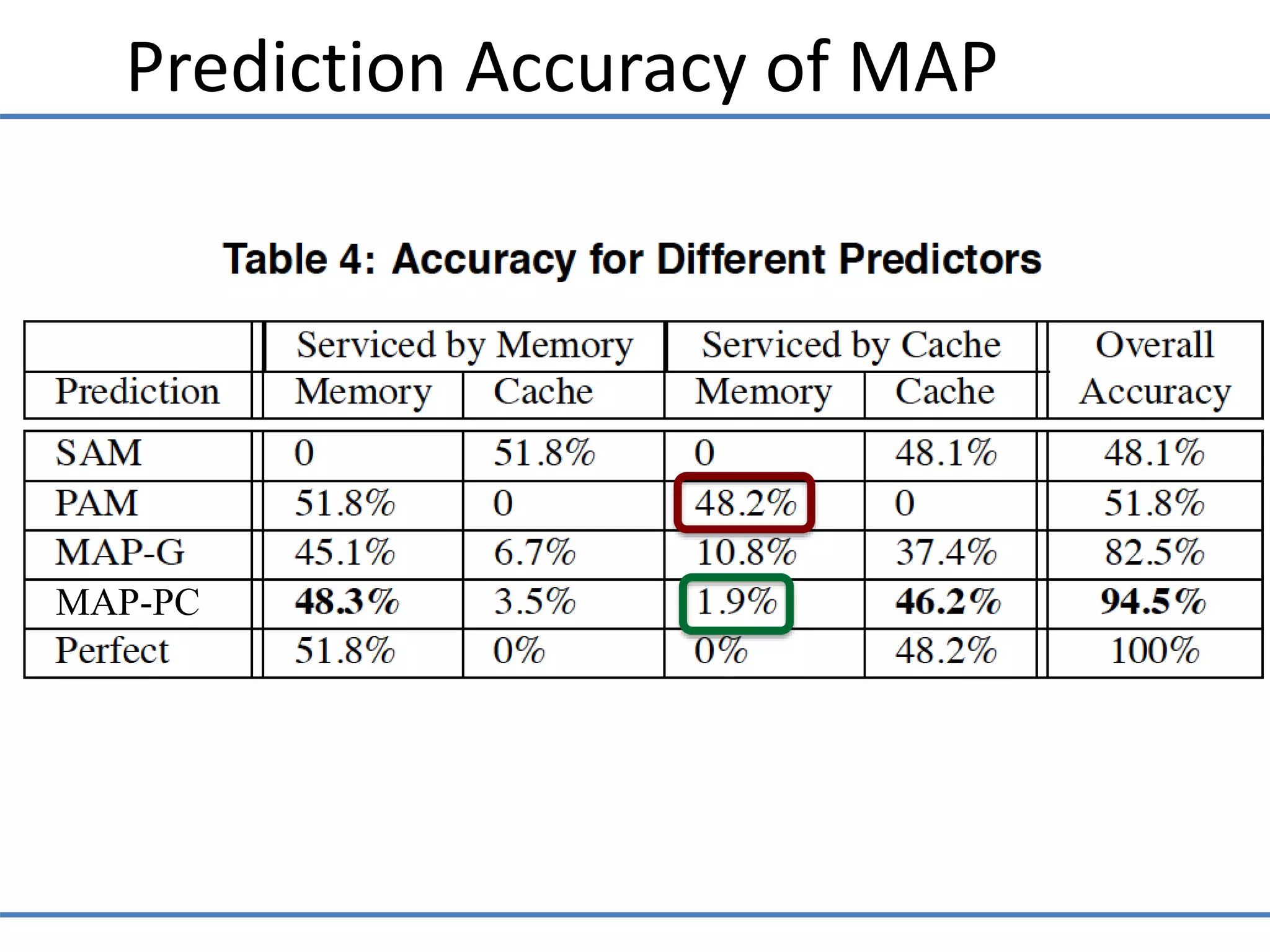

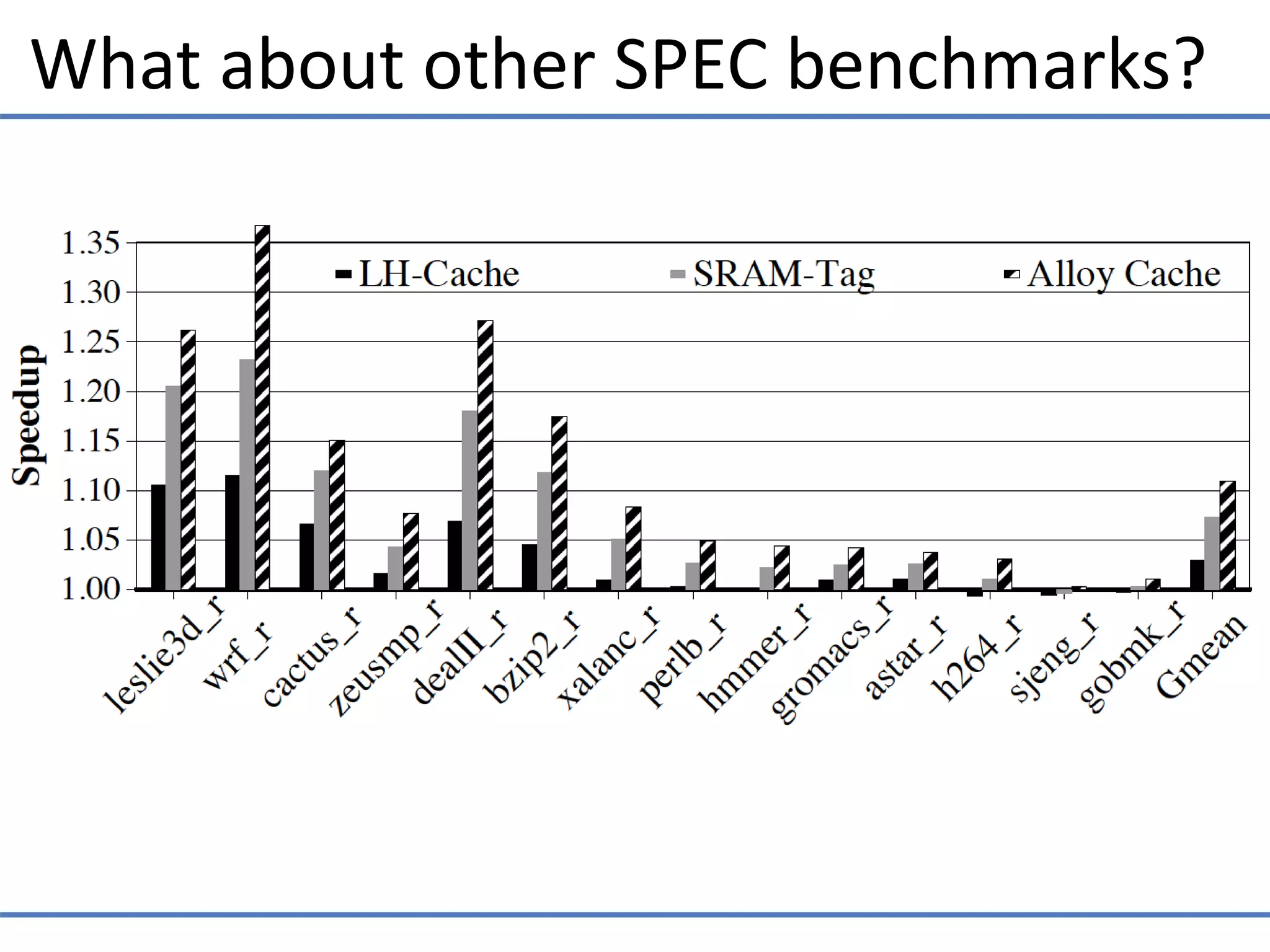

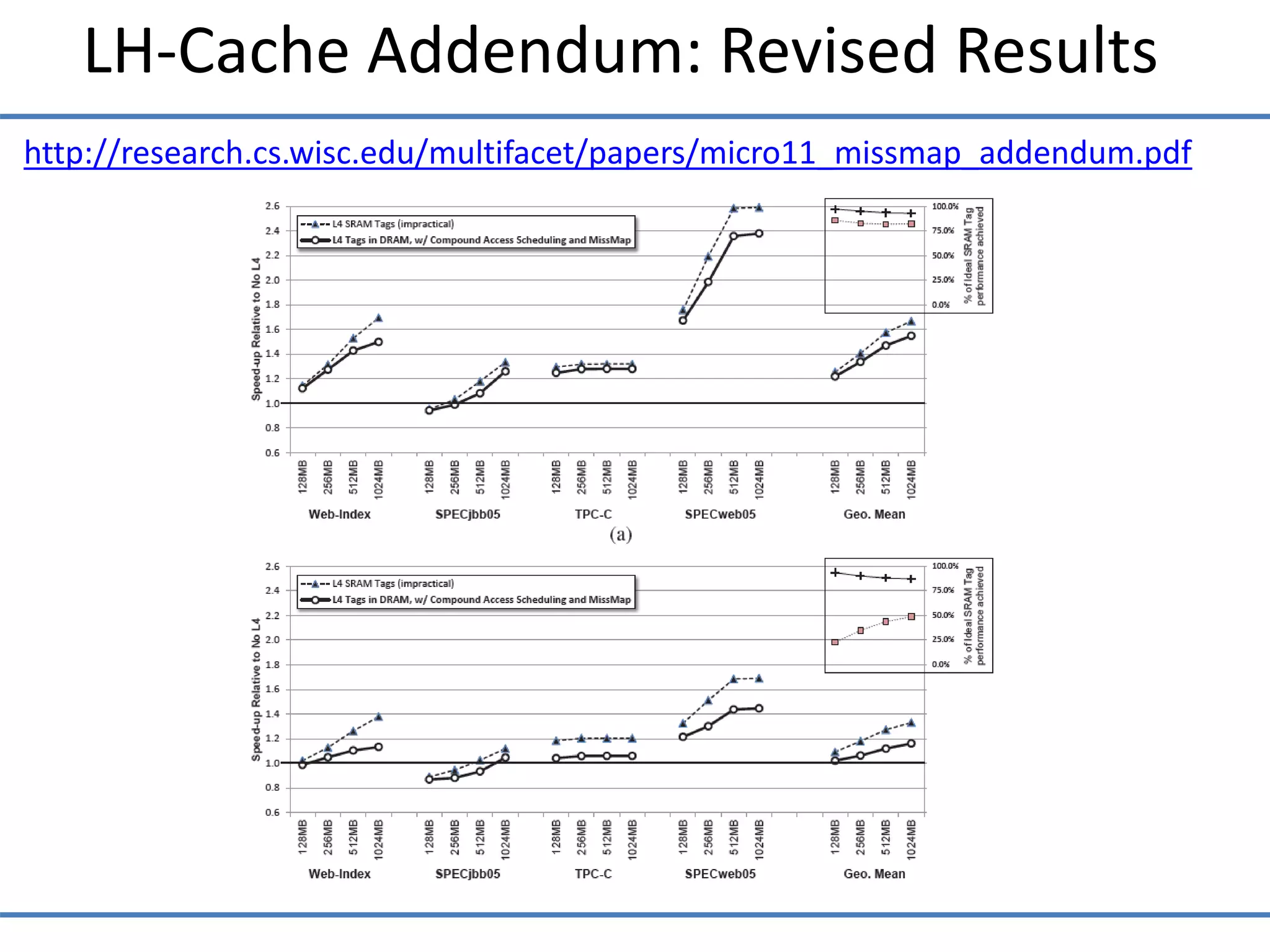

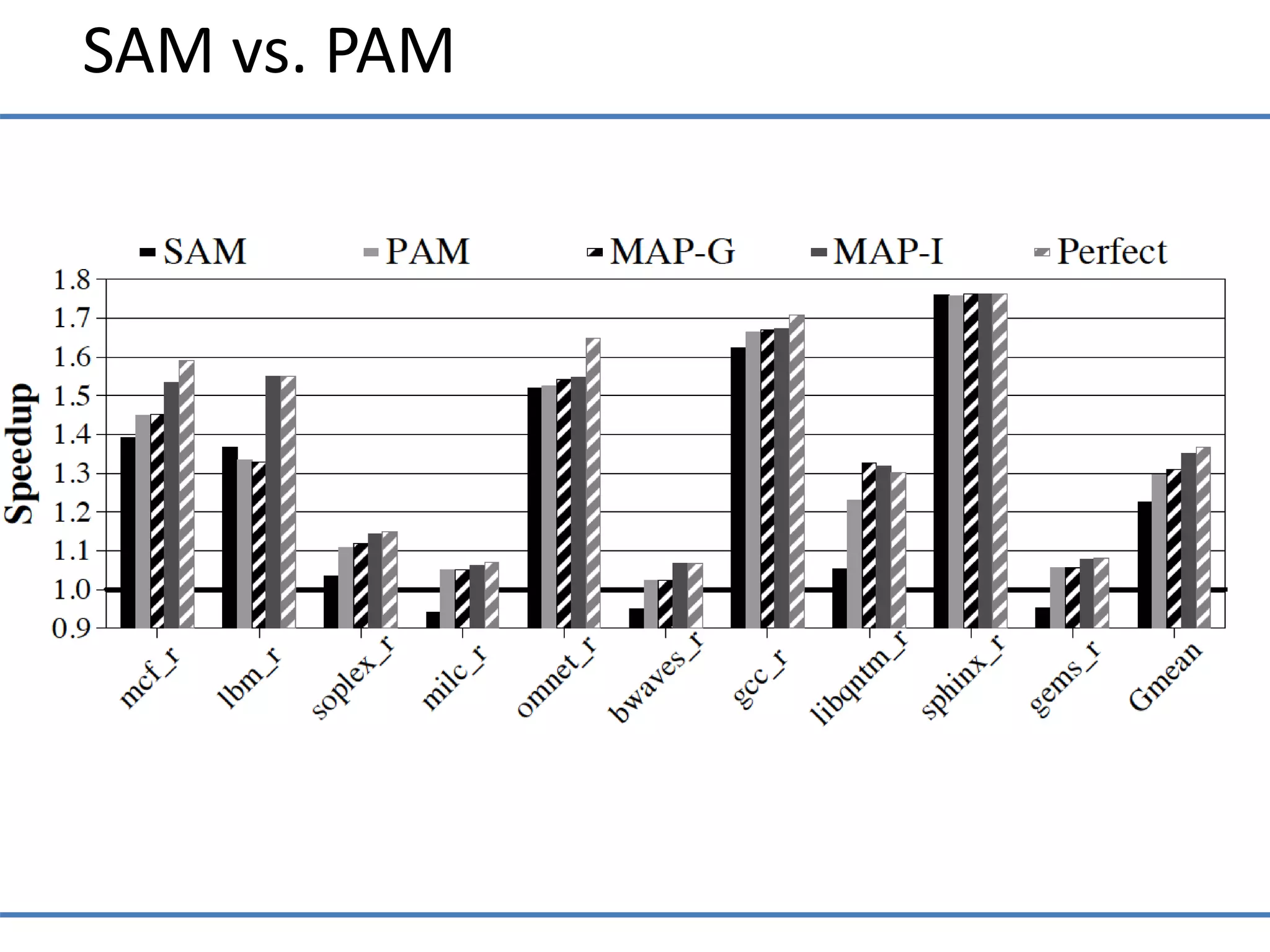

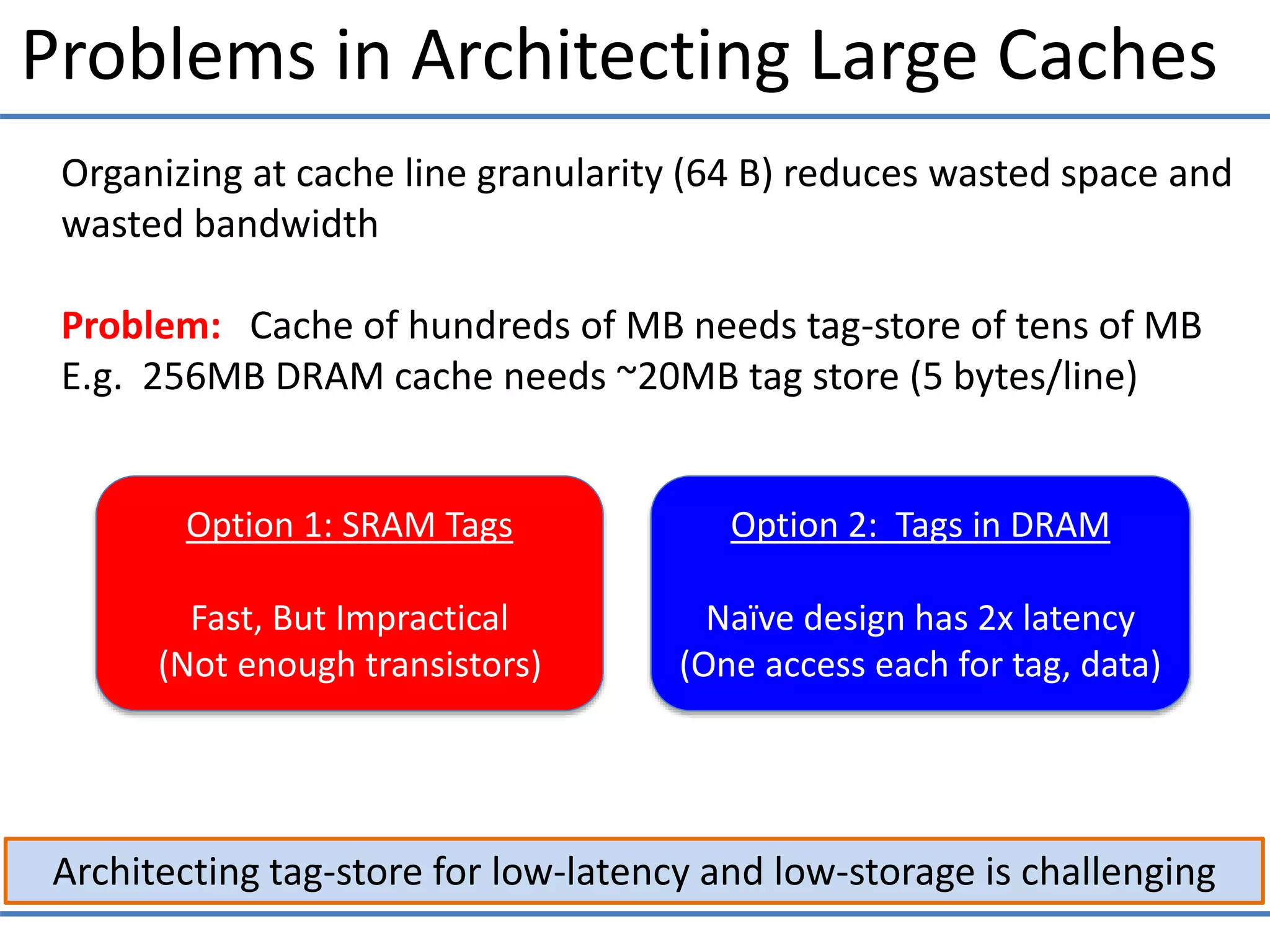

The document proposes optimizing DRAM caches for latency rather than hit rate. It presents the Alloy Cache, a design that avoids tag serialization to reduce hit latency by storing each tag together with its data line so that both arrive in a single burst. The Alloy Cache uses a Memory Access Predictor (MAP) to decide, for each request, whether to access off-chip memory serially (only after a DRAM cache miss is detected) or in parallel with the DRAM cache lookup. This trades latency against off-chip bandwidth. Evaluation shows that the Alloy Cache with a simple predictor outperforms previous designs such as SRAM-tag caches and the LH-Cache, achieving a speedup of over 35% compared to 24% for SRAM tags. It reaches this performance with a simpler, more practical design than structures previously assumed to be necessary, such as SRAM tags.
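The following minimal Python sketch makes the serial-versus-parallel choice concrete by showing one possible hit/miss predictor. It is not the paper's predictor. The class name `MemoryAccessPredictor`, the 256-entry table of 3-bit saturating counters, and the PC hash are all assumptions chosen for illustration. A predicted hit selects serial access, which goes to off-chip memory only after the DRAM cache misses. A predicted miss selects parallel access, which sends the memory request alongside the cache lookup.

```python
"""Sketch of a PC-indexed hit/miss predictor that picks serial or parallel access.

Hypothetical parameters: a 256-entry table of 3-bit saturating counters indexed
by a cheap hash of the instruction address (PC) of the request.
"""


class MemoryAccessPredictor:
    """Predicts DRAM-cache misses so off-chip memory can be accessed in parallel."""

    def __init__(self, entries: int = 256, counter_bits: int = 3) -> None:
        self.entries = entries
        self.max_count = (1 << counter_bits) - 1      # 7 for 3-bit counters
        self.threshold = (self.max_count + 1) // 2    # 4 for 3-bit counters
        # Start every counter just below the threshold: weakly predict "hit".
        self.table = [self.threshold - 1] * entries

    def _index(self, pc: int) -> int:
        # Fold upper PC bits into the low bits; any cheap hash would do here.
        return (pc ^ (pc >> 8)) % self.entries

    def use_parallel_access(self, pc: int) -> bool:
        """True: predicted miss, so issue the off-chip request in parallel.

        False: predicted hit, so probe the DRAM cache first (serial access).
        """
        return self.table[self._index(pc)] >= self.threshold

    def update(self, pc: int, was_miss: bool) -> None:
        """Train the counter with the actual DRAM-cache outcome."""
        i = self._index(pc)
        if was_miss:
            self.table[i] = min(self.table[i] + 1, self.max_count)
        else:
            self.table[i] = max(self.table[i] - 1, 0)


predictor = MemoryAccessPredictor()
pc = 0x4005D0
print(predictor.use_parallel_access(pc))  # False: starts weakly predicting a hit
predictor.update(pc, was_miss=True)
print(predictor.use_parallel_access(pc))  # True: one miss tips the counter
```

The two kinds of misprediction cost different resources. A hit wrongly predicted as a miss only wastes off-chip bandwidth. A miss wrongly predicted as a hit adds the cache-lookup latency in front of the memory access.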

3-D Memory Stacking

3-D stacked memory can provide large caches at high bandwidth.

- 3-D stacking enables a low-latency, high-bandwidth memory system
  - E.g., half the latency and 8x the bandwidth [Loh&Hill, MICRO’11]
- Stacked DRAM: a few hundred MB, not enough for main memory
- A hardware-managed cache is desirable because it is transparent to software

![3-D Memory Stacking (Source: Loh and Hill, MICRO’11)](https://image.slidesharecdn.com/2st1psoqsyap5qm4bzgk-signature-0762a8c9312704fceea2364892c07409daf56c3672d9678e5c1f617b777fd1dc-poli-160505164102/75/Hardware-managed-cache-1-2048.jpg)
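A rough calculation shows why keeping the tags of such a cache in on-chip SRAM is costly. The sketch below uses hypothetical sizes: a 256MB DRAM cache, which falls in the "few hundred MB" range, 64-byte lines, and 6 bytes of tag and state per line. `tag_store_bytes` is an illustrative helper, not part of any existing tool.

```python
"""Estimate the SRAM needed to hold the tags of a DRAM cache on chip.

All sizes are hypothetical: a 256MB DRAM cache, 64-byte lines, and
6 bytes of tag plus state per line.
"""

MiB = 1024 * 1024


def tag_store_bytes(cache_bytes: int, line_bytes: int, tag_entry_bytes: int) -> int:
    """Bytes of tag storage: one tag entry per cache line."""
    num_lines = cache_bytes // line_bytes
    return num_lines * tag_entry_bytes


cache_bytes = 256 * MiB
tags = tag_store_bytes(cache_bytes, line_bytes=64, tag_entry_bytes=6)
print(cache_bytes // 64, tags / MiB)  # 4194304 24.0
```

Under these assumptions, the tag store alone needs about 24MB of SRAM. This is what makes storing the tags in the stacked DRAM itself attractive.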

![Loh-Hill Cache Design [Micro’11, TopPicks]

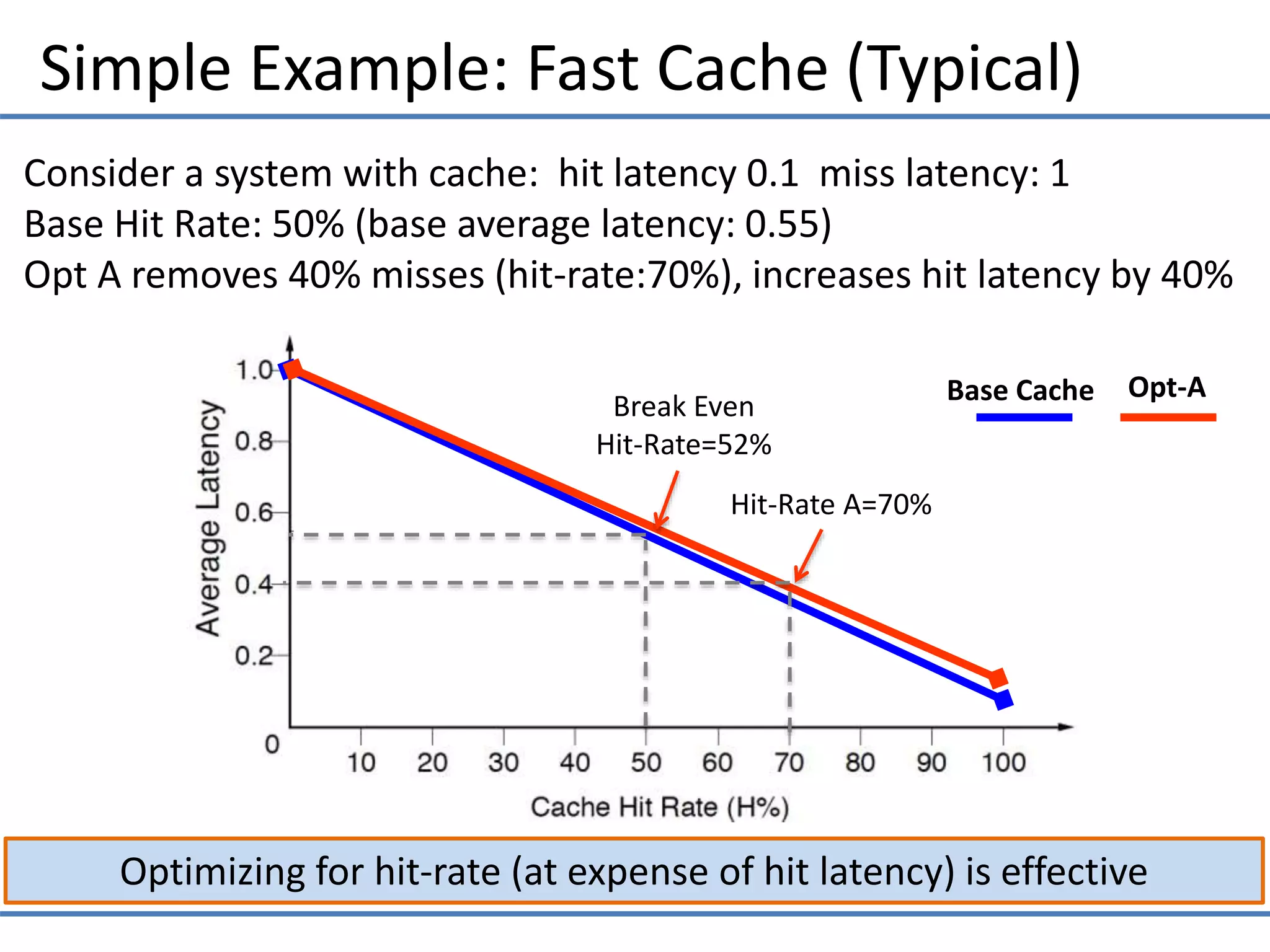

Recent work tries to reduce latency of Tags-in-DRAM approach

LH-Cache design similar to traditional set-associative cache

2KB row buffer = 32 cache lines

Speed-up cache miss detection:

A MissMap (2MB) in L3 tracks lines of pages resident in DRAM cache

Miss

Map

Data lines (29-ways)Tags

Cache organization: A 29-way set-associative DRAM (in 2KB row)

Keep Tag and Data in same DRAM row (tag-store & data store)

Data access guaranteed row-buffer hit (Latency ~1.5x instead of 2x)](https://image.slidesharecdn.com/2st1psoqsyap5qm4bzgk-signature-0762a8c9312704fceea2364892c07409daf56c3672d9678e5c1f617b777fd1dc-poli-160505164102/75/Hardware-managed-cache-3-2048.jpg)
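A simple model can reproduce the row organization and the ~1.5x figure. This Python sketch assumes 64-byte lines and 3 tag lines per 2KB row. It also assumes, hypothetically, that a DRAM access splits evenly into a row-activation cost and a column-read cost. The helper names `row_layout` and `hit_latency` are illustrative.

```python
"""Back-of-the-envelope model of the LH-Cache row layout and hit latency.

Assumptions (hypothetical, for illustration only): 64-byte lines, a 2KB DRAM
row, 3 lines of each row reserved for tags, and one DRAM access split evenly
into a row-activation part and a column-read part.
"""

ROW_BYTES = 2048
LINE_BYTES = 64
TAG_LINES = 3  # lines per row reserved for the tag store


def row_layout(row_bytes: int = ROW_BYTES, line_bytes: int = LINE_BYTES,
               tag_lines: int = TAG_LINES) -> tuple[int, int]:
    """Return (lines per row, data ways per set) when one set fills one row."""
    lines_per_row = row_bytes // line_bytes
    return lines_per_row, lines_per_row - tag_lines


def hit_latency(t_activate: float, t_column: float, same_row: bool) -> float:
    """Latency of a tag read followed by a data read.

    If tag and data share a row, the data read is a row-buffer hit and pays
    only the column-read cost; otherwise it pays activation + column read again.
    """
    tag_read = t_activate + t_column
    data_read = t_column if same_row else t_activate + t_column
    return tag_read + data_read


lines, ways = row_layout()
print(lines, ways)  # 32 29

# One full DRAM access (activate + column read) is normalised to 1.0.
print(hit_latency(0.5, 0.5, same_row=True),
      hit_latency(0.5, 0.5, same_row=False))  # 1.5 2.0
```

When tag and data share a row, the second access skips row activation, and this is where the saving over two independent accesses comes from. Real DRAM timings are not split evenly, so the exact ratio will differ from this model.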