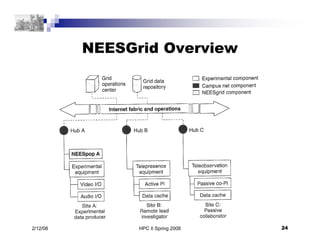

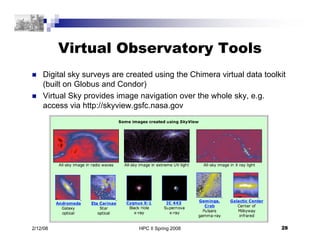

This document gives an overview of grid computing concepts, architecture, and applications. Grid users such as scientists need coordinated resource sharing for tasks like data-intensive analysis and simulation. The key challenges are establishing trust between participants and enabling secure sharing of applications and data. The Globus Toolkit and the Open Grid Services Architecture (OGSA) evolved to address these challenges through services, virtual organizations, and other architectural components. Two example applications are described: NEESgrid for earthquake engineering and the Virtual Observatory for astronomy data sharing.

![HPC II Spring 2008, 2/12/08, slide 18: Resource Allocation via Description Languages](https://image.slidesharecdn.com/grid-230203175542-2ab6d07e/85/Grid-pdf-18-320.jpg)

Resource Allocation via Description Languages

Condor classified advertisements (ClassAds) describe a job and its requirements:

- machine architecture
- command or executable starting the application
- constraints on required resources
- executable size
- required memory
- operating system and release
- owner
- rank specifying preferred resources

Example ClassAd for a job:

    [
    Type = "job";
    Owner = "Myself";
    Executable = "Myimage";
    Rank = other.PhysicalMemory > 256 && other.Mips > 20 || other.Kflops > 2000;
    Imagesize = 275M;
    Constraint = other.Virtual > self.Imagesize;
    ]

Globus RSL uses an LDAP-like notation.
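To make the roles of `Constraint` and `Rank` in the ClassAd above concrete, here is a minimal Python sketch of one-sided matchmaking. It is not the Condor implementation or API: the dictionaries, the `match` helper, and the three machine ads are hypothetical, `Imagesize = 275M` is assumed to mean 275 megabytes, and the boolean `Rank` expression is simply mapped to 1.0 or 0.0. Real matchmaking is two-sided, since machine ads can carry constraints and ranks of their own.

```python
from typing import Callable, Optional

Ad = dict  # attribute name -> value

# Job ad mirroring the slide's ClassAd (Imagesize assumed to be in MB).
job: Ad = {
    "Type": "job",
    "Owner": "Myself",
    "Executable": "Myimage",
    "Imagesize": 275,
}


def job_constraint(self_ad: Ad, other: Ad) -> bool:
    # Constraint = other.Virtual > self.Imagesize
    return other["Virtual"] > self_ad["Imagesize"]


def job_rank(self_ad: Ad, other: Ad) -> float:
    # Rank = other.PhysicalMemory > 256 && other.Mips > 20 || other.Kflops > 2000
    # Python's `and` binds tighter than `or`, matching && and ||.
    preferred = (
        other["PhysicalMemory"] > 256 and other["Mips"] > 20
        or other["Kflops"] > 2000
    )
    return float(preferred)


# Hypothetical machine ads; the values are made up for illustration.
machines = [
    {"Name": "small", "PhysicalMemory": 128, "Mips": 10, "Kflops": 500, "Virtual": 200},
    {"Name": "medium", "PhysicalMemory": 512, "Mips": 15, "Kflops": 1000, "Virtual": 1024},
    {"Name": "large", "PhysicalMemory": 1024, "Mips": 50, "Kflops": 3000, "Virtual": 4096},
]


def match(
    job_ad: Ad,
    machine_ads: list,
    constraint: Callable[[Ad, Ad], bool],
    rank: Callable[[Ad, Ad], float],
) -> Optional[Ad]:
    """Return the highest-ranked machine that satisfies the job's constraint."""
    candidates = [m for m in machine_ads if constraint(job_ad, m)]
    if not candidates:
        return None
    return max(candidates, key=lambda m: rank(job_ad, m))


best = match(job, machines, job_constraint, job_rank)
print(best["Name"] if best else "no match")
```

The constraint acts as a hard filter (the `small` machine lacks enough virtual memory and is excluded), while the rank only orders the machines that remain.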

![Slide 29: Further Reading](https://image.slidesharecdn.com/grid-230203175542-2ab6d07e/85/Grid-pdf-29-320.jpg)

Further Reading

- [Grid] Chapter 4
- Optional:
  - NEESgrid: [Grid] Chapter 6
  - Virtual Observatory: [Grid] Chapter 7
  - Condor and reliability: [Grid] Chapter 19