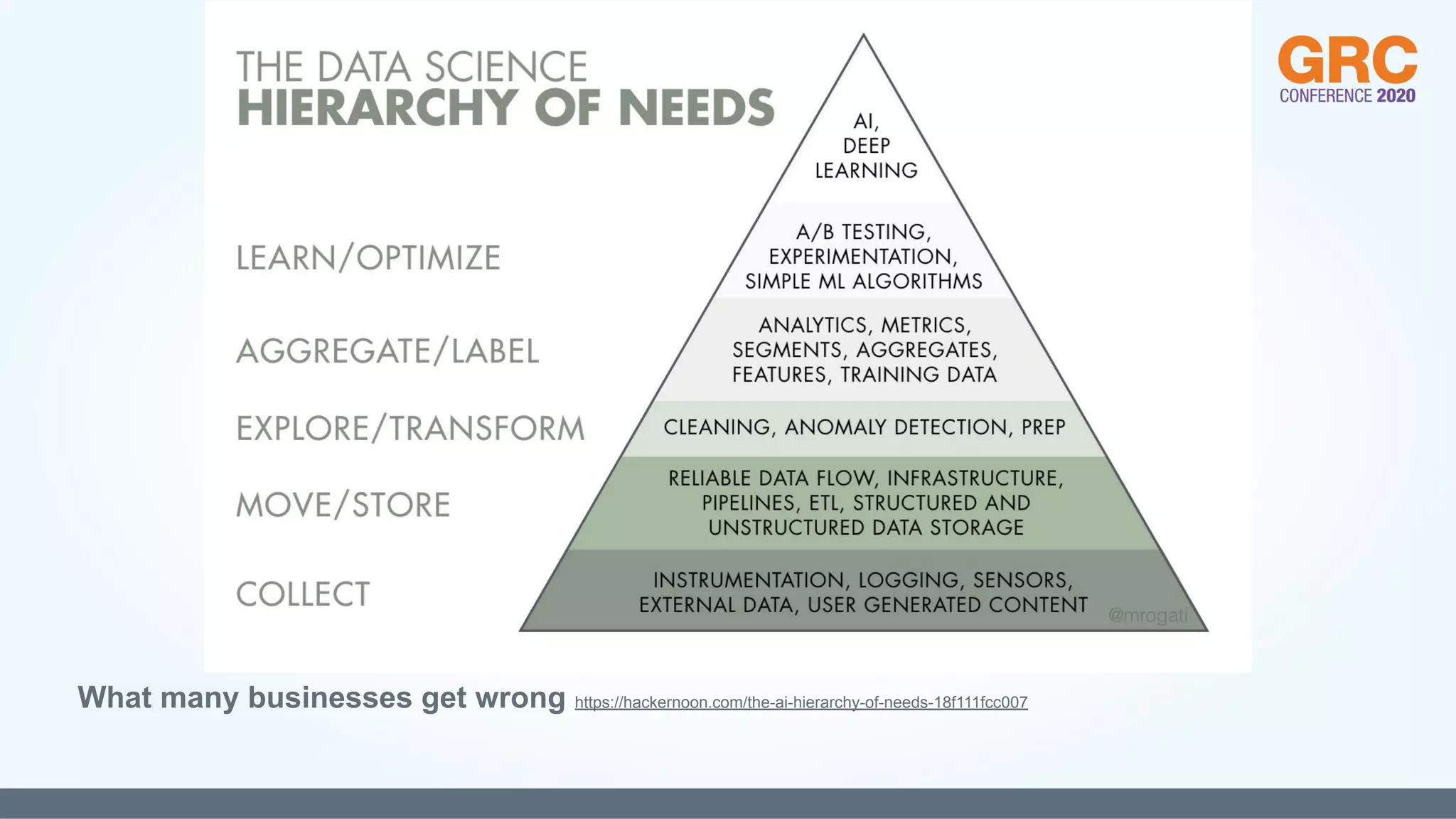

The document, authored by Andrew Clark, outlines the importance of machine learning (ML) monitoring, compliance, and governance, emphasizing the need for a robust governance program to manage the risks of ML applications. It covers relevant regulations such as the GDPR and the California Consumer Privacy Act (CCPA), and details frameworks and guidelines organizations can use to govern and monitor their ML systems. Key topics include building a governance program, managing risk, and monitoring algorithm performance on an ongoing basis.
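Ongoing performance monitoring often has to start before ground-truth labels arrive, by checking whether production inputs or model scores have drifted away from the training data. A minimal sketch of one such check, the population stability index (PSI), follows; PSI is a drift statistic common in credit-risk model monitoring and is an illustrative choice here, not a method taken from the slides. The function name, the decile binning, and the `eps` floor are assumptions.

```python
import numpy as np


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """Population stability index (PSI) of `actual` relative to `expected`.

    `expected` is the baseline sample (e.g. training data) and `actual` the
    production sample for the same feature or model score. Bin cut points are
    baseline quantiles, so each baseline bin holds roughly 1/bins of the data.
    """
    expected = np.asarray(expected, dtype=float)
    actual = np.asarray(actual, dtype=float)

    # Interior cut points from baseline quantiles; duplicates are removed
    # so discrete features do not produce empty zero-width bins.
    cuts = np.unique(np.quantile(expected, np.linspace(0.0, 1.0, bins + 1)[1:-1]))

    # Bin index 0..len(cuts); the outer bins are open-ended, so production
    # values outside the training range are still counted.
    n_bins = cuts.size + 1
    exp_idx = np.searchsorted(cuts, expected, side="right")
    act_idx = np.searchsorted(cuts, actual, side="right")
    exp_share = np.bincount(exp_idx, minlength=n_bins) / expected.size
    act_share = np.bincount(act_idx, minlength=n_bins) / actual.size

    # Floor the shares so an empty bin does not produce log(0).
    exp_share = np.clip(exp_share, eps, None)
    act_share = np.clip(act_share, eps, None)

    return float(np.sum((act_share - exp_share) * np.log(act_share / exp_share)))


# Usage: an unchanged sample versus a 1-standard-deviation mean shift
rng = np.random.default_rng(0)
baseline = rng.normal(0.0, 1.0, 10_000)
print(population_stability_index(baseline, baseline))  # 0.0
print(population_stability_index(baseline, rng.normal(1.0, 1.0, 10_000)))
```

A value near 0 means the two distributions match on the chosen bins. A frequently cited rule of thumb treats a PSI above roughly 0.25 as a significant shift worth investigating, but any alert threshold should be set and documented by the governance program itself.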

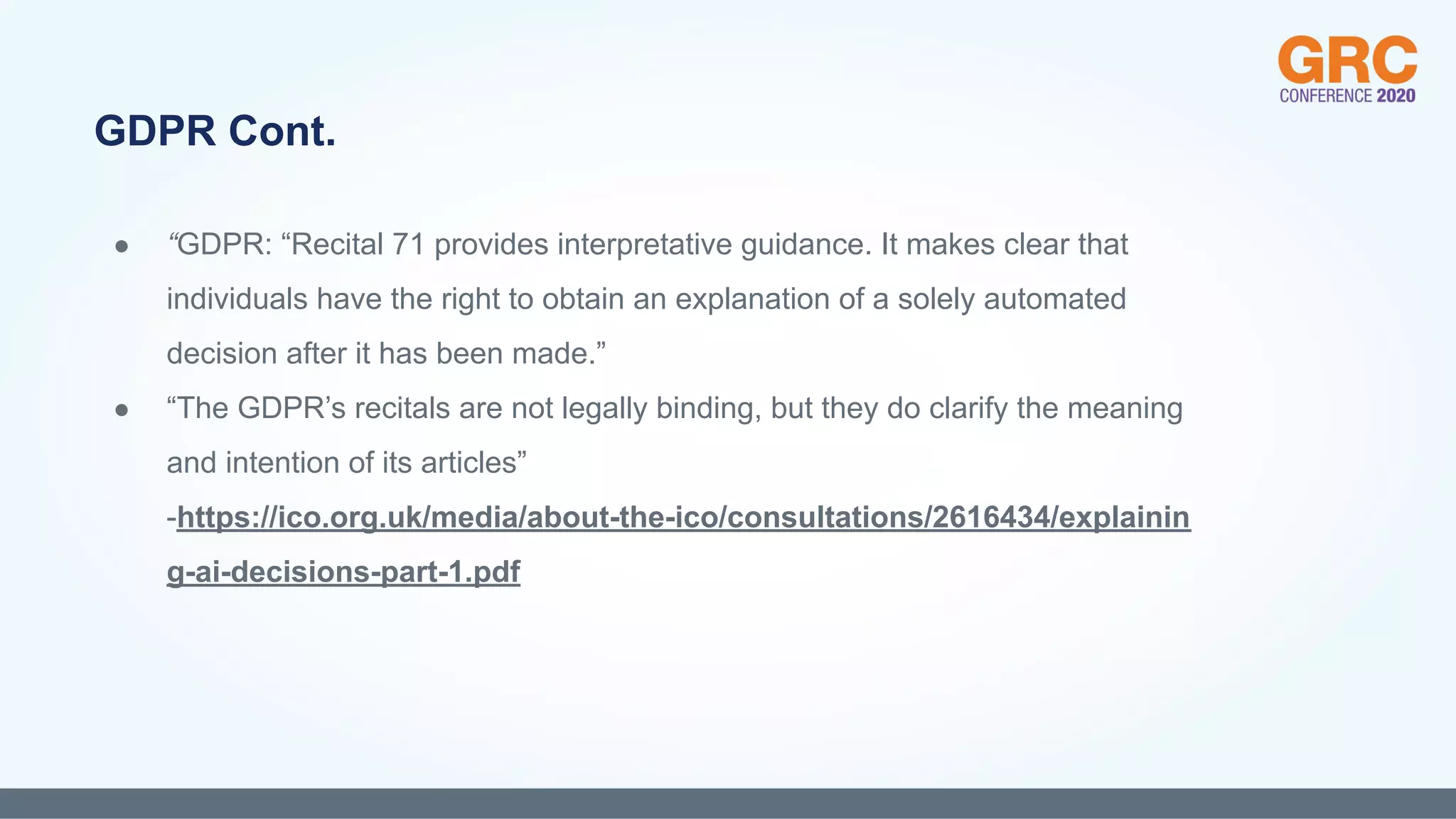

![GDPR](https://image.slidesharecdn.com/machinelearningmonitoringcomplianceandgovernance-iiagrc-200825191520/75/GRC-2020-IIA-ISACA-Machine-Learning-Monitoring-Compliance-and-Governance-16-2048.jpg)

- “As above, the GDPR has specific requirements around the provision of information about, and an explanation of, an AI-assisted decision where:
  - it is made by a process without any human involvement; and
  - it produces legal or similarly significant effects on an individual (something affecting an individual’s legal status/rights, or that has equivalent impact on an individual’s circumstances, behaviour or opportunities, eg a decision about welfare, or a loan). In these cases, the GDPR requires that you:
    - are proactive in “…[giving individuals] meaningful information about the logic involved, as well as the significance and envisaged consequences…” (Articles 13 and 14);
    - “… [give individuals] at least the right to obtain human intervention on the part of the controller, to express his or her point of view and to contest the decision.” (Article 22); and
    - “… [give individuals] the right to obtain… meaningful information about the logic involved, as well as the significance and envisaged consequences…” (Article 15) “…[including] an explanation of the decision reached after such assessment…” (Recital 71)”

Source: https://ico.org.uk/media/about-the-ico/consultations/2616434/explaining-ai-decisions-part-1.pdf

![CRISP-DM - Data Understanding](https://image.slidesharecdn.com/machinelearningmonitoringcomplianceandgovernance-iiagrc-200825191520/75/GRC-2020-IIA-ISACA-Machine-Learning-Monitoring-Compliance-and-Governance-30-2048.jpg)

- Which dataset[s] were used to train the model?
- Which dataset[s] are used for production predictions?
- Where did the dataset[s] identified in the two questions above originate (e.g., web-scraped data, log files, relational databases)?
- Are all input variables in a consistent format and unit (e.g., miles vs. kilometers)?
- Have the correlations and covariances been examined?
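The last two data-understanding questions can be partly automated. A minimal sketch, assuming the features are already numeric NumPy arrays: the hypothetical `to_kilometers` helper normalizes distance columns recorded in miles or kilometers, and the hypothetical `correlation_report` computes the sample covariance and Pearson correlation matrices and flags strongly correlated feature pairs. The 0.9 threshold is an arbitrary illustrative default, not a recommendation from the slides.

```python
import numpy as np

KM_PER_MILE = 1.609344  # international mile, exact by definition


def to_kilometers(values, unit):
    """Hypothetical helper: normalize a distance column to kilometers."""
    values = np.asarray(values, dtype=float)
    if unit == "km":
        return values
    if unit == "mi":
        return values * KM_PER_MILE
    raise ValueError(f"unknown distance unit: {unit!r}")


def correlation_report(features, names, threshold=0.9):
    """Hypothetical helper: covariance and Pearson correlation matrices,
    plus every feature pair whose |correlation| is at least `threshold`."""
    X = np.column_stack(features)        # rows = observations, columns = features
    cov = np.cov(X, rowvar=False)        # sample covariance (ddof=1)
    corr = np.corrcoef(X, rowvar=False)  # Pearson correlation coefficients
    flagged = [
        (names[i], names[j], float(corr[i, j]))
        for i in range(len(names))
        for j in range(i + 1, len(names))
        if abs(corr[i, j]) >= threshold
    ]
    return cov, corr, flagged


# Usage: the same trip distances recorded in miles by one source
# and in kilometers by another, plus an unrelated feature
rng = np.random.default_rng(42)
miles = rng.uniform(1.0, 50.0, 200)
km = to_kilometers(miles, "mi")
noise = rng.normal(0.0, 1.0, 200)
cov, corr, flagged = correlation_report([miles, km, noise], ["miles", "km", "noise"])
print(flagged)
```

Passing the same distance once in miles and once in kilometers yields a flagged pair with a correlation of 1.0, because a unit conversion is a pure rescaling; the report surfaces the redundancy but cannot tell which unit each source intended, so that still has to be confirmed against the data's provenance.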

![CRISP-DM - Modeling](https://image.slidesharecdn.com/machinelearningmonitoringcomplianceandgovernance-iiagrc-200825191520/75/GRC-2020-IIA-ISACA-Machine-Learning-Monitoring-Compliance-and-Governance-32-2048.jpg)

- What was the rationale for choosing the algorithm[s] for the model?
- What steps were taken to guard against overfitting?
- What process was used to optimize the chosen algorithm?
- Was the algorithm coded from scratch, or was a standard library used? If a library was used, what are its license terms?
- What type of version control was used?
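The overfitting and optimization questions are easier to answer when the tuning procedure is written down as code. As one illustrative approach, not the author's, the sketch below selects the penalty of a ridge regression by k-fold cross-validation: each candidate `alpha` is scored only on folds held out from fitting, and every score is returned so the choice can be recorded for review. The function names, the closed-form ridge solver, and the default of 5 folds are assumptions; in practice a standard library would usually supply these pieces, which brings the licensing question on the slide into play.

```python
import numpy as np


def ridge_fit(X, y, alpha):
    """Closed-form ridge regression: solve (Xb^T Xb + alpha*P) w = Xb^T y,
    where Xb has an appended intercept column that P leaves unpenalized."""
    Xb = np.column_stack([X, np.ones(len(X))])
    penalty = alpha * np.eye(Xb.shape[1])
    penalty[-1, -1] = 0.0  # do not shrink the intercept
    return np.linalg.solve(Xb.T @ Xb + penalty, Xb.T @ y)


def ridge_predict(X, w):
    return np.column_stack([X, np.ones(len(X))]) @ w


def kfold_mse(X, y, alpha, k=5, seed=0):
    """Mean validation MSE over k folds; each fold is held out exactly once."""
    idx = np.random.default_rng(seed).permutation(len(y))
    folds = np.array_split(idx, k)
    errors = []
    for i in range(k):
        val = folds[i]
        train = np.concatenate([folds[j] for j in range(k) if j != i])
        w = ridge_fit(X[train], y[train], alpha)
        errors.append(np.mean((ridge_predict(X[val], w) - y[val]) ** 2))
    return float(np.mean(errors))


def select_alpha(X, y, alphas, k=5, seed=0):
    """Grid search: pick the penalty with the lowest cross-validated error
    and return all scores so the decision can be documented."""
    scores = {a: kfold_mse(X, y, a, k, seed) for a in alphas}
    best = min(scores, key=scores.get)
    return best, scores


# Usage: noisy synthetic data, three candidate penalties
rng = np.random.default_rng(7)
X = rng.normal(size=(120, 5))
y = X @ np.array([1.5, -2.0, 0.0, 0.5, 0.0]) + rng.normal(0.0, 0.5, 120)
best_alpha, scores = select_alpha(X, y, [0.01, 1.0, 100.0])
print(best_alpha, scores)
```

Fixing `seed` makes the fold assignment reproducible, which matters when a reviewer reruns the search from version-controlled code and expects the same scores.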