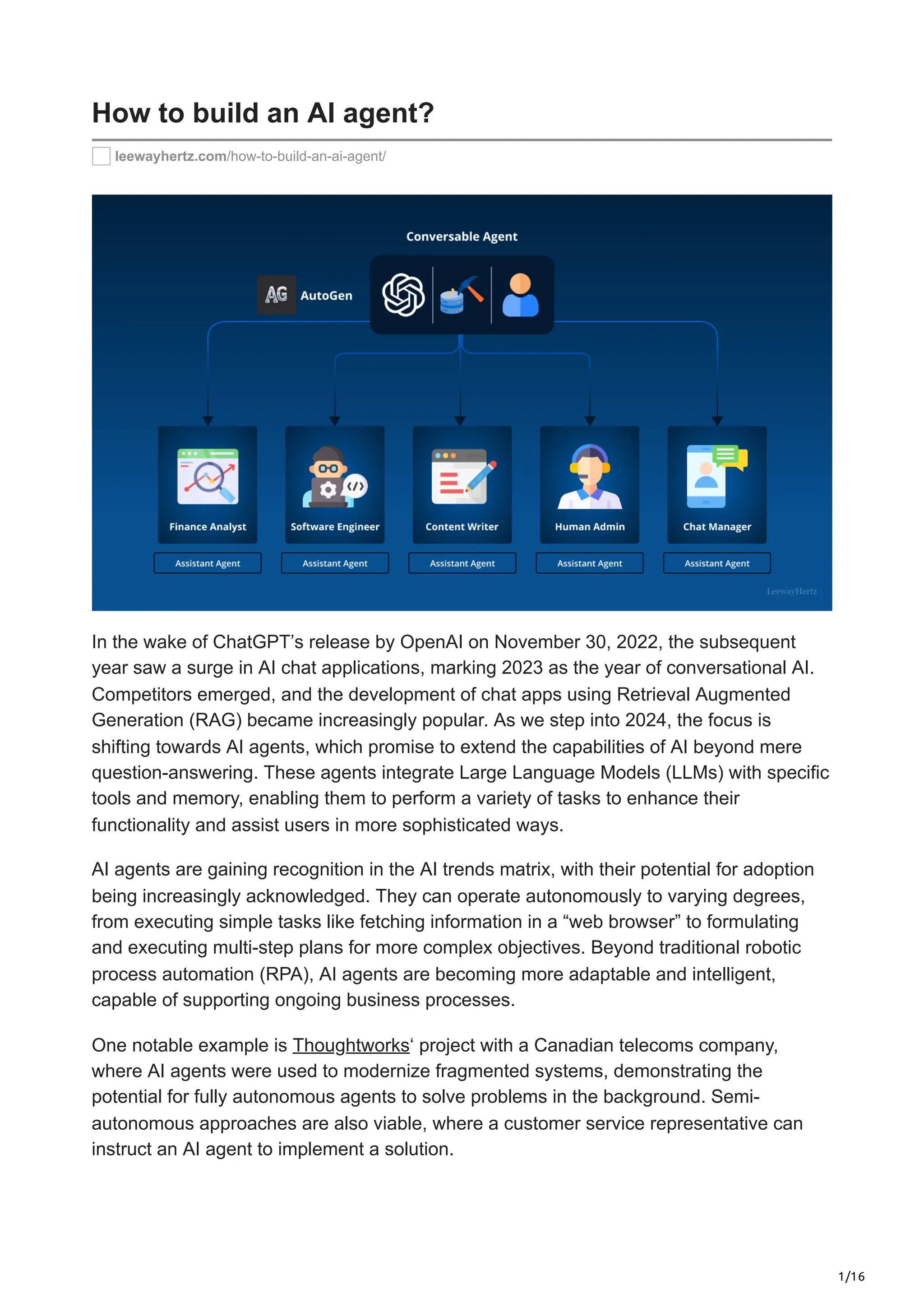

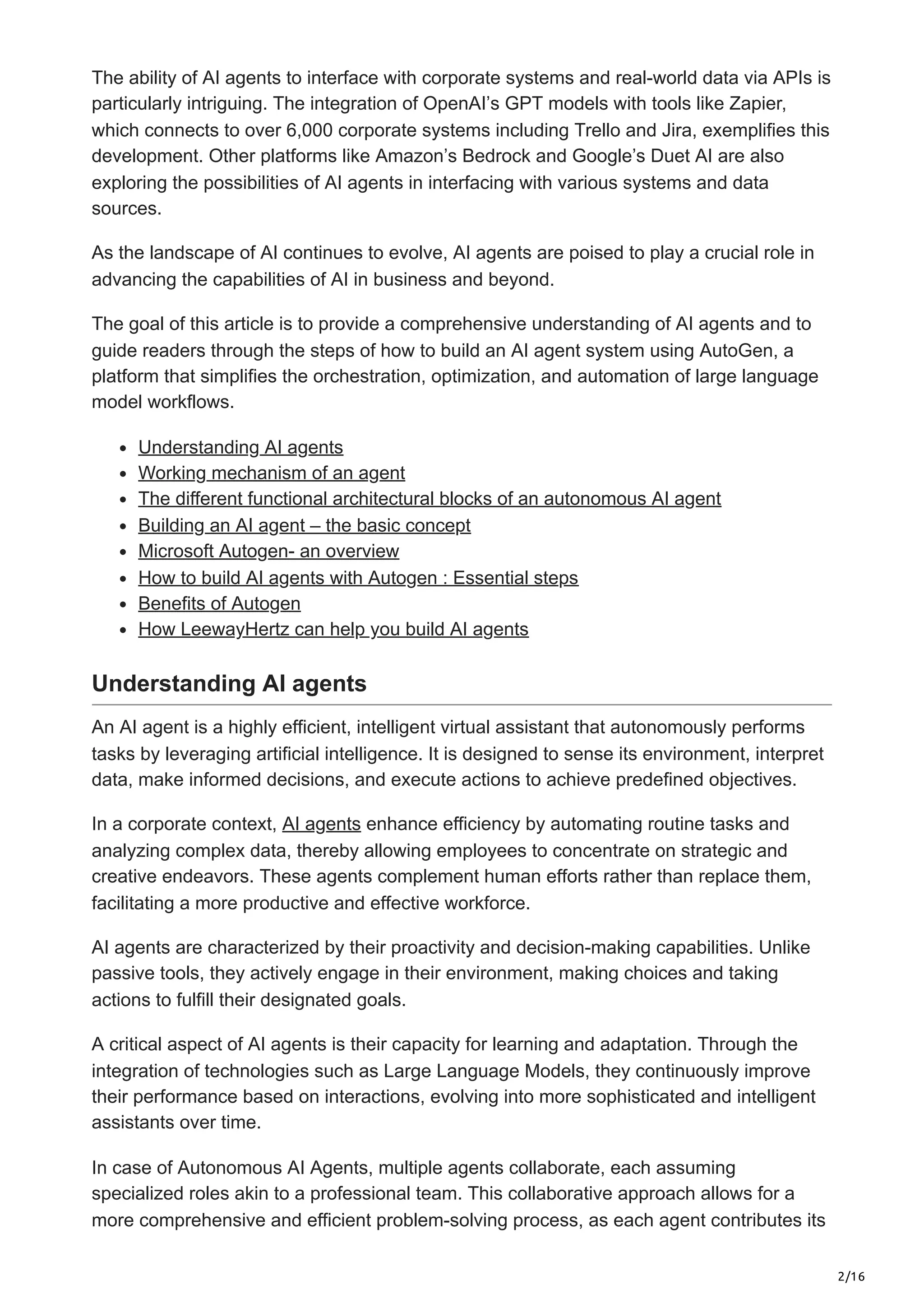

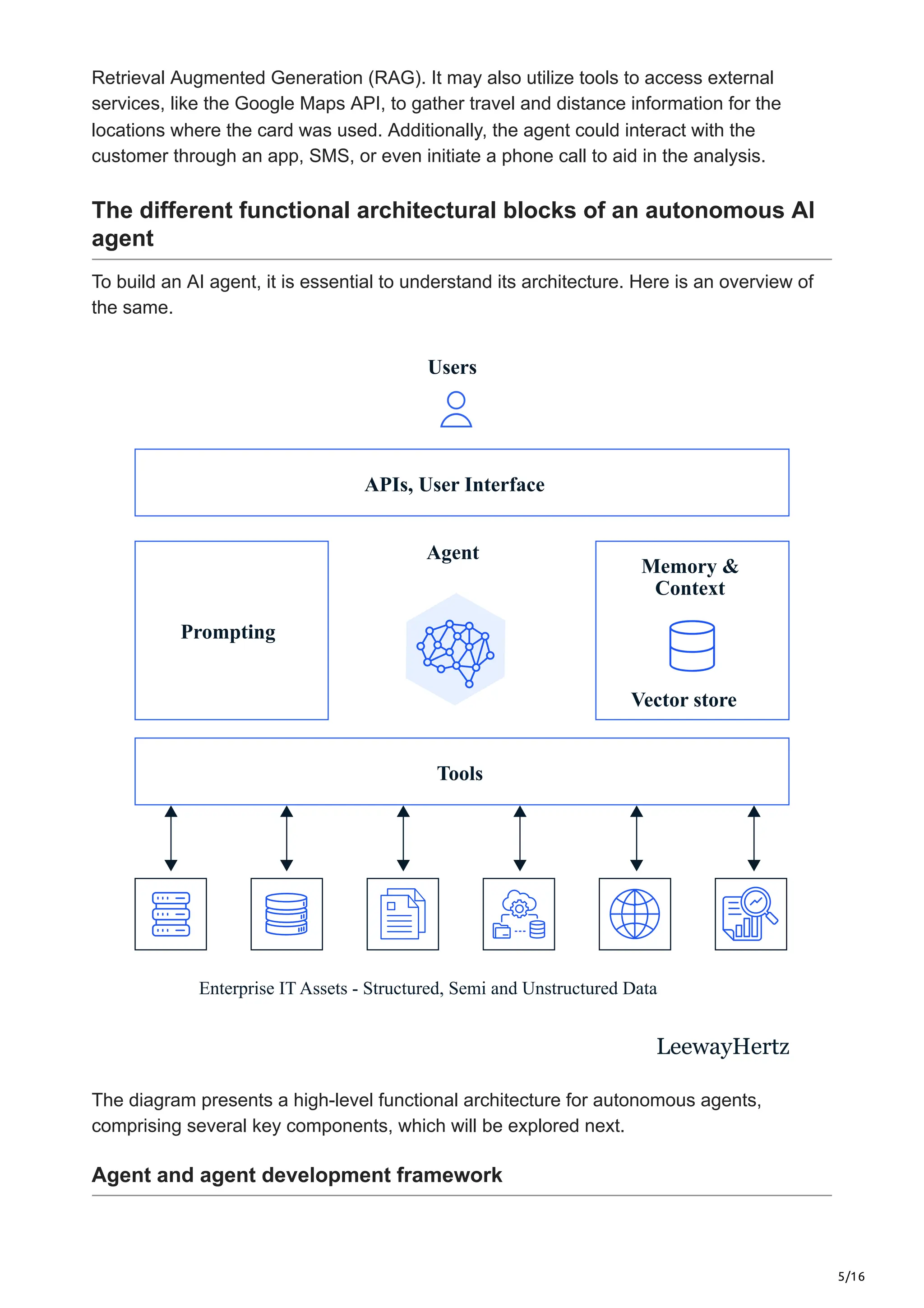

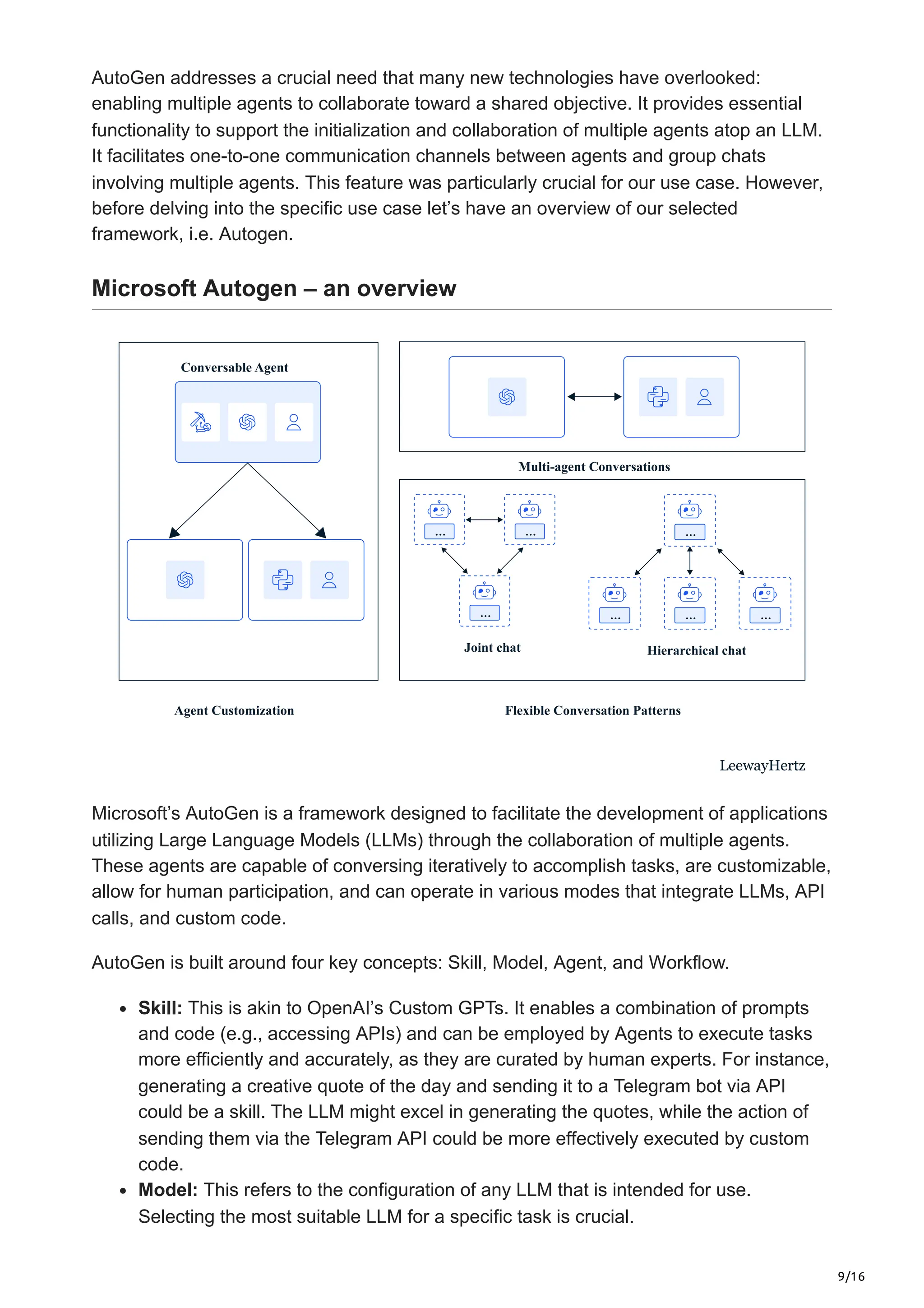

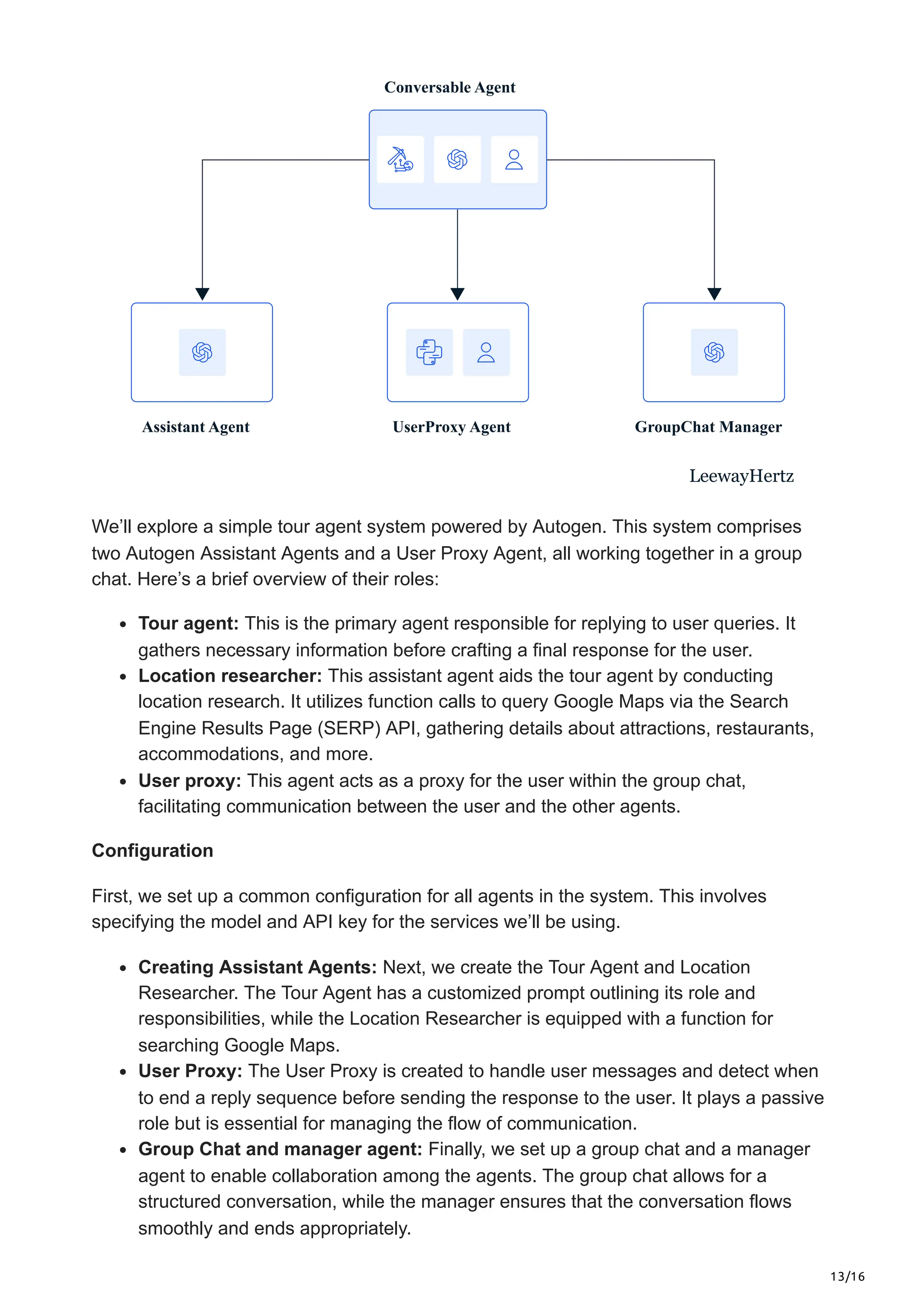

This article discusses the rise of AI agents following the release of ChatGPT, highlighting how they extend AI beyond basic question answering to executing and automating more complex tasks with large language models (LLMs) and tools. It explains how AI agents can work autonomously or semi-autonomously in various contexts, integrating with corporate systems and improving workflows. The article also outlines a framework for building AI agents, focusing on Microsoft's AutoGen, in which multiple agents collaborate to carry out tasks more efficiently.
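
The idea of multiple agents collaborating on a task can be illustrated with a minimal plain-Python sketch. This is not AutoGen's API: the `Agent` class, `run_conversation`, and the two rule-based reply functions are hypothetical names introduced here, and the reply functions are simple stand-ins for what would be LLM calls in a real system. The sketch only shows the turn-taking pattern, in which one agent drafts, another reviews, and the exchange stops once the reviewer signals completion.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Hypothetical stand-in for an LLM call: maps an incoming message to a reply.
ReplyFn = Callable[[str], str]


@dataclass
class Agent:
    """A named participant that produces a reply to each incoming message."""
    name: str
    reply_fn: ReplyFn

    def reply(self, message: str) -> str:
        return self.reply_fn(message)


def run_conversation(
    first: Agent,
    second: Agent,
    task: str,
    max_turns: int = 4,
    stop_word: str = "DONE",
) -> List[Tuple[str, str]]:
    """Alternate between two agents until one emits stop_word or turns run out."""
    transcript = [("user", task)]
    message = task
    speakers = [first, second]
    for turn in range(max_turns):
        speaker = speakers[turn % 2]
        message = speaker.reply(message)
        transcript.append((speaker.name, message))
        if stop_word in message:
            break
    return transcript


# Rule-based placeholders for model behaviour (assumptions for illustration).
def writer_reply(message: str) -> str:
    if "add a greeting" in message:
        return "Draft v2: Hello! Here is the summary."
    return "Draft v1: Here is the summary."


def reviewer_reply(message: str) -> str:
    if "Hello" in message:
        return "Approved. DONE"
    return "Please add a greeting."


if __name__ == "__main__":
    writer = Agent("writer", writer_reply)
    reviewer = Agent("reviewer", reviewer_reply)
    for name, text in run_conversation(writer, reviewer, "Summarize the report."):
        print(f"{name}: {text}")
```

Capping the exchange with `max_turns` keeps the loop from running indefinitely if the agents never agree, which is a common safeguard in this kind of design.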