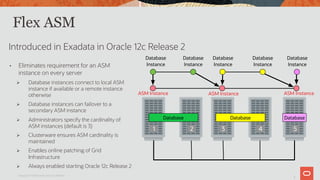

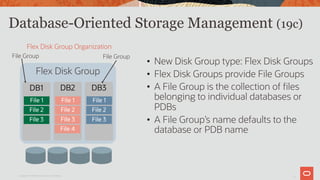

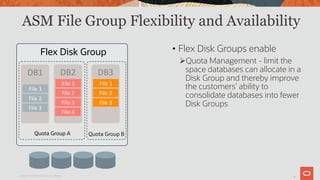

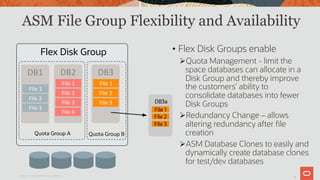

Automatic Storage Management (ASM) lets Oracle databases use disk storage that is managed as an integrated cluster file system. It provides striping, mirroring, and rebalancing of data across storage disks. The document outlines new features in Oracle Exadata and ASM, including Flex ASM, which removes the requirement for an ASM instance on every server, and Flex Disk Groups, which provide file groups and enable per-database quota management and redundancy changes. It also discusses enhancements to disk offline and online operations and to rebalancing.

![30

Checking for Corruption

• Silent data corruption is a fact of life in today’s storage world

• The database checks for corruption when reading data

  ◦ If physical corruption is detected, automatic recovery can be performed using the ASM mirror copies

  ◦ For seldom-accessed data, all mirror copies of the data could become corrupted over time

• ASM data can be proactively scrubbed:

  ◦ Scrubbing occurs automatically during rebalance operations

  ◦ A Disk Group, individual files, or individual disks can be scrubbed

  ◦ Check the alert log for results

  ◦ ALTER DISKGROUP <NAME> SCRUB [REPAIR];

Copyright © 2019 Oracle and/or its affiliates.](https://image.slidesharecdn.com/exadatamasterseriesasm2020-201016160120/85/Exadata-master-series_asm_2020-29-320.jpg)
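
The scrub command on the slide above can be generated from a script when several disk groups need the same treatment. The following is a minimal sketch, not an Oracle-supplied tool: the function name `scrub_statement` and its parameters are hypothetical, and the `DISK` and `FILE` clauses are assumed from the slide's mention of scrubbing individual disks and files, so verify the exact syntax against the SQL reference for your release before running the output.

```python
import re

# Conservative check for an unquoted Oracle identifier (letters, digits, _, $, #).
_IDENT = re.compile(r"^[A-Za-z][A-Za-z0-9_$#]*$")


def scrub_statement(diskgroup, repair=False, disk=None, file_name=None):
    """Return an ALTER DISKGROUP ... SCRUB statement as text.

    Hypothetical helper: it only builds the string, it does not connect
    to an ASM instance or execute anything.
    """
    if not _IDENT.match(diskgroup):
        raise ValueError(f"unexpected disk group name: {diskgroup!r}")
    if disk is not None and file_name is not None:
        raise ValueError("scrub either one disk or one file, not both")

    parts = ["ALTER DISKGROUP", diskgroup, "SCRUB"]
    if disk is not None:
        if not _IDENT.match(disk):
            raise ValueError(f"unexpected disk name: {disk!r}")
        parts += ["DISK", disk]          # assumed clause for a single disk
    if file_name is not None:
        if "'" in file_name:
            raise ValueError("file name must not contain a single quote")
        parts += ["FILE", f"'{file_name}'"]  # assumed clause for a single file
    if repair:
        parts.append("REPAIR")           # without REPAIR, scrubbing only reports
    return " ".join(parts) + ";"


if __name__ == "__main__":
    print(scrub_statement("DATA"))
    print(scrub_statement("DATA", repair=True))
```

Leaving `repair` off by default mirrors the optional `[REPAIR]` on the slide: a report-only pass can be reviewed in the alert log before any repair is requested.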

![42

HARD Check During Rebalance

• Prevents physical corruptions from spreading from disk to disk

• What happens if HARD check fails?

• ORA-00600 [kfk_iodone_invalid_buffer] (12.1.0.2 DBBP, 12.2 RU)

• Results in ORA-59035: HARDCHECK error during I/O (18.3 or later)

• Rebalance tries to continue until a number of failures occur, then raises ORA-59035 (18.3 or later)

• Checks are limited to major file types such as datafiles, online redo logs, archive logs, and backup files. File types such as temp files are excluded from these checks.

Oracle Confidential

42](https://image.slidesharecdn.com/exadatamasterseriesasm2020-201016160120/85/Exadata-master-series_asm_2020-40-320.jpg)
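
Because the error raised on a failed HARD check differs by release, a log scan has to look for both signatures named on the slide above. The following is a minimal sketch under stated assumptions: the function name `count_hard_check_errors` is hypothetical, the input is assumed to be plain alert log lines, and the sample lines are illustrative placeholders built from the error text on the slide, not real log output.

```python
import re
from collections import Counter

# Signatures taken from the slide: the first for 12.1.0.2 DBBP / 12.2 RU,
# the second for 18.3 or later.
HARD_CHECK_SIGNATURES = {
    "ORA-00600 [kfk_iodone_invalid_buffer]": re.compile(
        r"ORA-00600.*\[kfk_iodone_invalid_buffer\]"
    ),
    "ORA-59035": re.compile(r"ORA-59035"),
}


def count_hard_check_errors(lines):
    """Count lines matching each HARD check failure signature."""
    counts = Counter()
    for line in lines:
        for label, pattern in HARD_CHECK_SIGNATURES.items():
            if pattern.search(line):
                counts[label] += 1
    return counts


if __name__ == "__main__":
    # Illustrative placeholder lines only.
    sample = [
        "ORA-59035: HARDCHECK error during I/O",
        "ORA-00600: internal error code, arguments: [kfk_iodone_invalid_buffer]",
        "some unrelated line",
    ]
    for label, n in count_hard_check_errors(sample).items():
        print(label, n)
```

Counting rather than stopping at the first hit matches the slide's note that rebalance keeps going until a number of failures have occurred.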

![43

Content.check – 19.2 ASM Feature

• Scrubs relocating extents during rebalance for physical corruptions just like HARD check

• If a corruption is found and there is a good mirror side, automatically repair from mirror side

• Throws ORA-00700: [KFFSCRUBDUMPEXTENTSET_ONSUSPICIOUSBLKS] for root cause analysis

• Rebalance will continue after the repair

43](https://image.slidesharecdn.com/exadatamasterseriesasm2020-201016160120/85/Exadata-master-series_asm_2020-41-320.jpg)
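
The slide above names the feature but not how it is switched on. The following is a minimal sketch that assumes `content.check` is a disk group attribute set through `ALTER DISKGROUP ... SET ATTRIBUTE` with the values `TRUE` and `FALSE`; the slide does not confirm that syntax, so check it against the ASM documentation for your release. The helper names `content_check_statement` and `is_content_check_report` are hypothetical.

```python
import re

# Conservative check for an unquoted Oracle identifier.
_IDENT = re.compile(r"^[A-Za-z][A-Za-z0-9_$#]*$")

# ORA-00700 argument named on the slide for root cause analysis.
_SUSPICIOUS = re.compile(
    r"ORA-00700.*\[KFFSCRUBDUMPEXTENTSET_ONSUSPICIOUSBLKS\]", re.IGNORECASE
)


def content_check_statement(diskgroup, enabled=True):
    """Return a statement that sets the assumed 'content.check' attribute.

    Only builds the text; nothing is executed against ASM.
    """
    if not _IDENT.match(diskgroup):
        raise ValueError(f"unexpected disk group name: {diskgroup!r}")
    value = "TRUE" if enabled else "FALSE"
    return (
        f"ALTER DISKGROUP {diskgroup} "
        f"SET ATTRIBUTE 'content.check' = '{value}';"
    )


def is_content_check_report(line):
    """True if a log line carries the ORA-00700 signature from the slide."""
    return bool(_SUSPICIOUS.search(line))


if __name__ == "__main__":
    print(content_check_statement("DATA"))
```

Since rebalance continues after the repair, the ORA-00700 entry is the main trace that a corruption was found, which is why the sketch pairs the setting with a simple detector for that signature.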