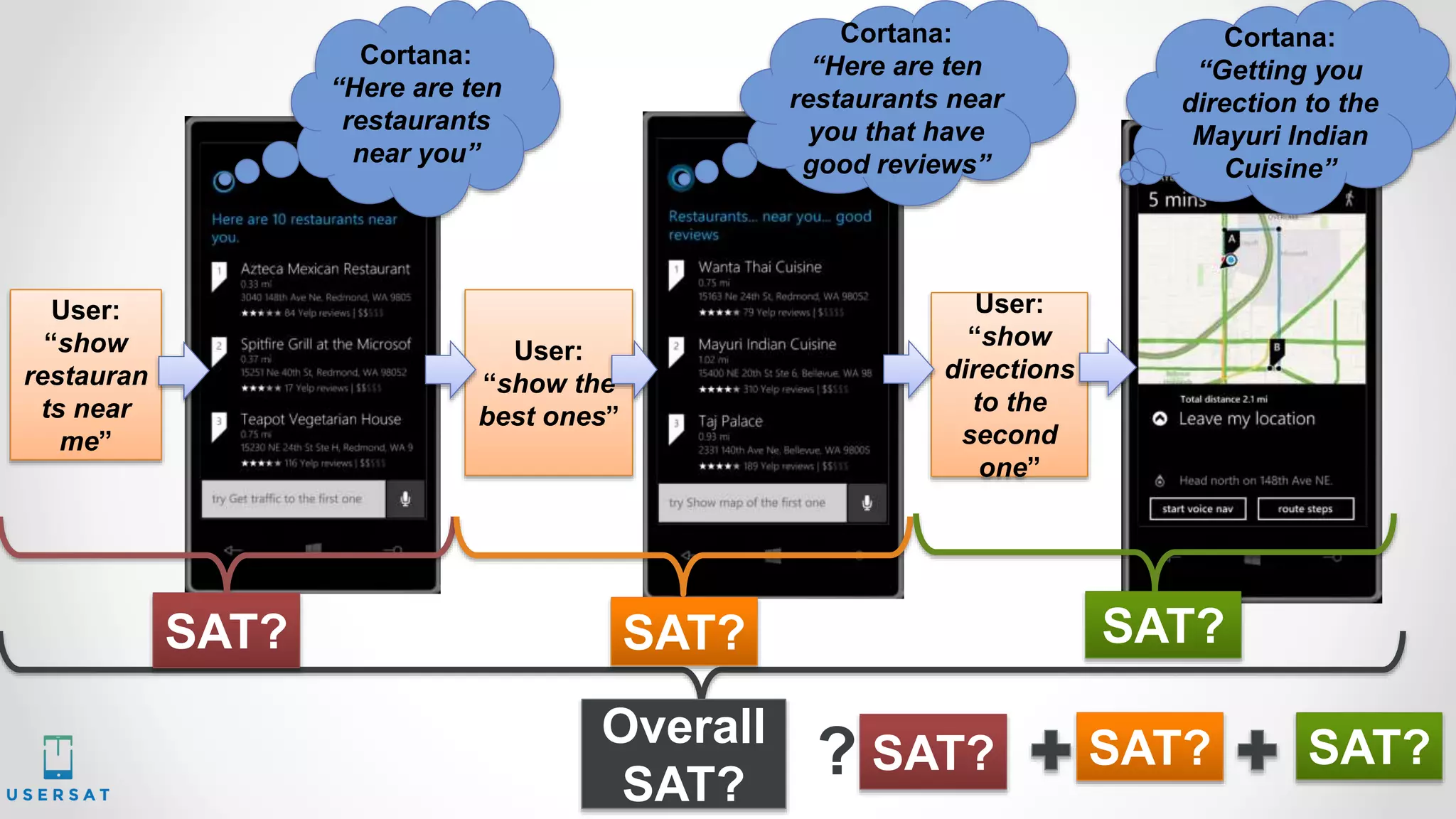

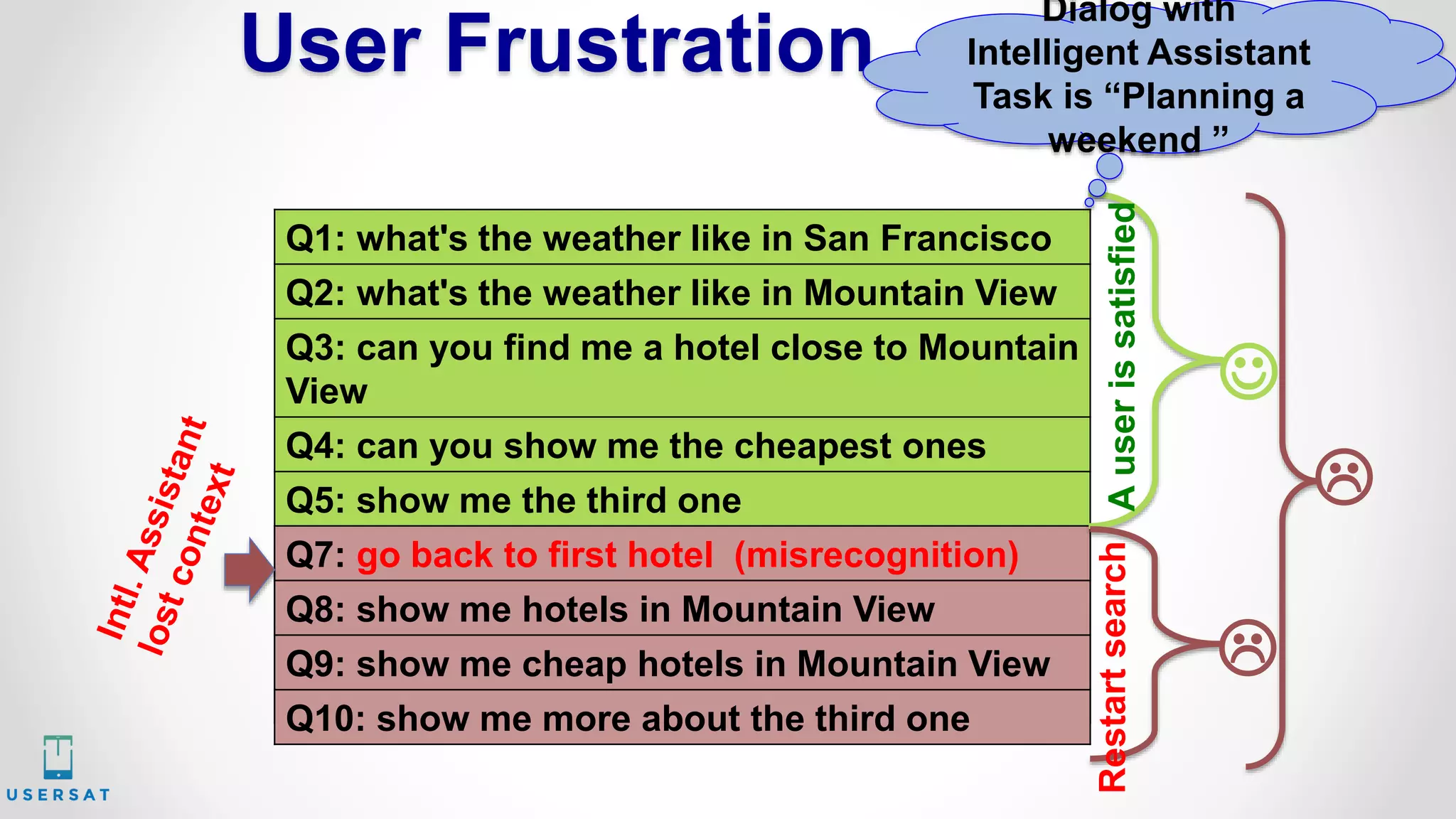

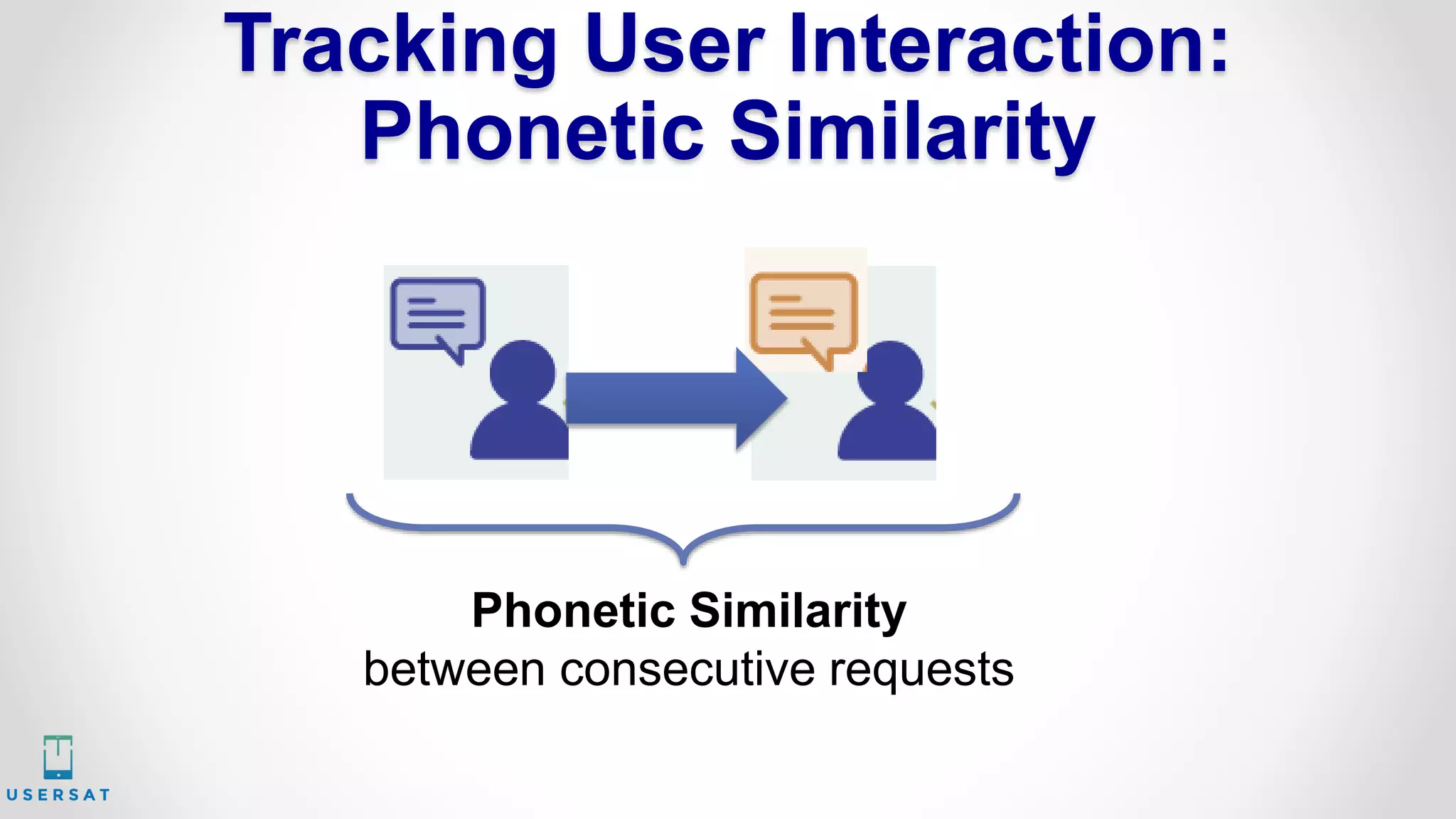

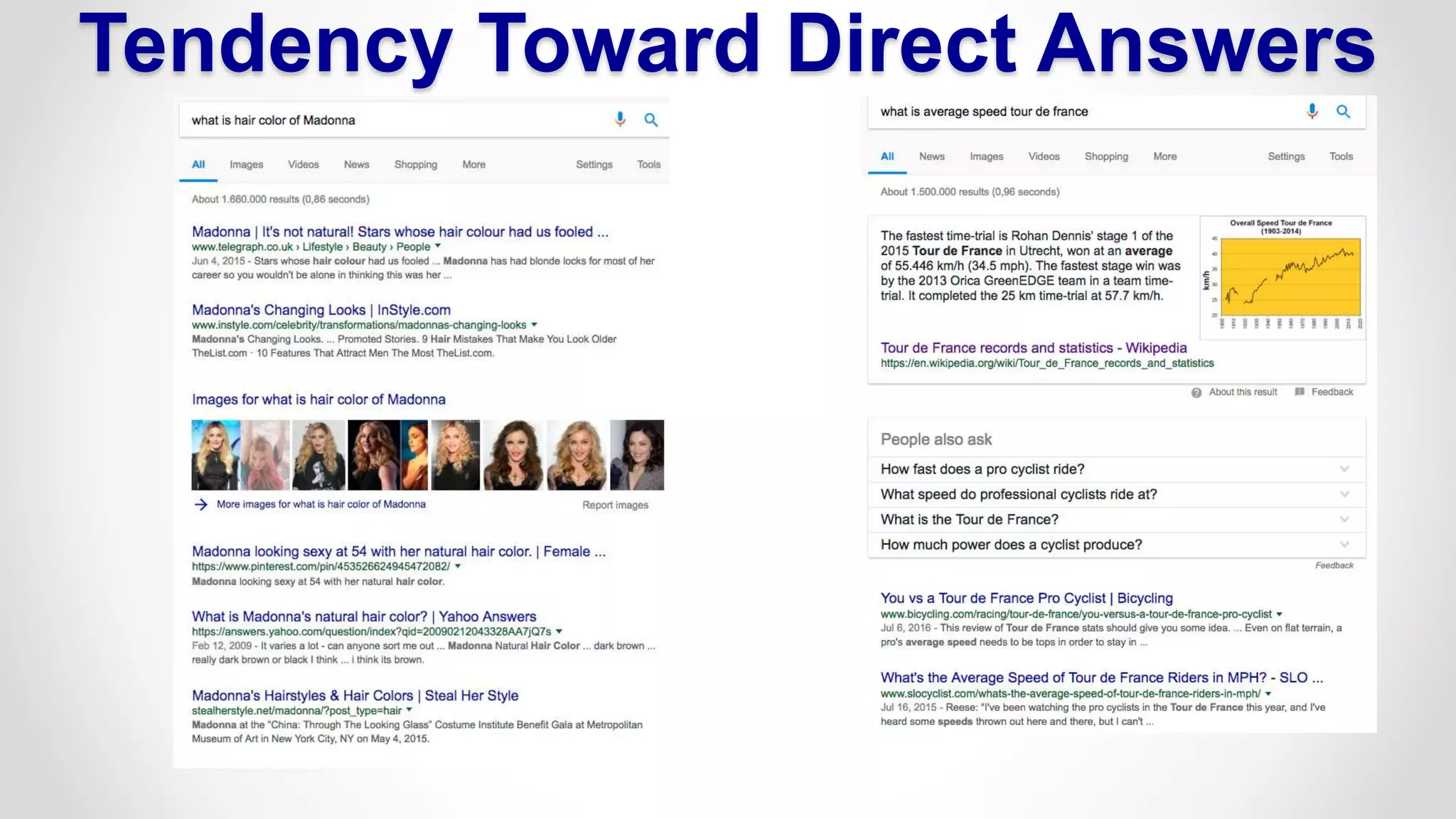

The document discusses the evaluation of personal assistants such as Cortana, with a focus on predicting user satisfaction during search dialogues. It covers the shift from query-based interactions to more conversational dialogues, outlines methods for tracking user interaction signals, and compares the performance of baseline and interaction models. It also highlights the role of user emotions and situational context in understanding and improving user satisfaction with intelligent assistants.

![Inverse Reinforcement Learning [P. Abbeel’s slides on IRL]](https://image.slidesharecdn.com/coffeedatamay12-170512094633/75/Evaluation-Personal-Assistants-39-2048.jpg)