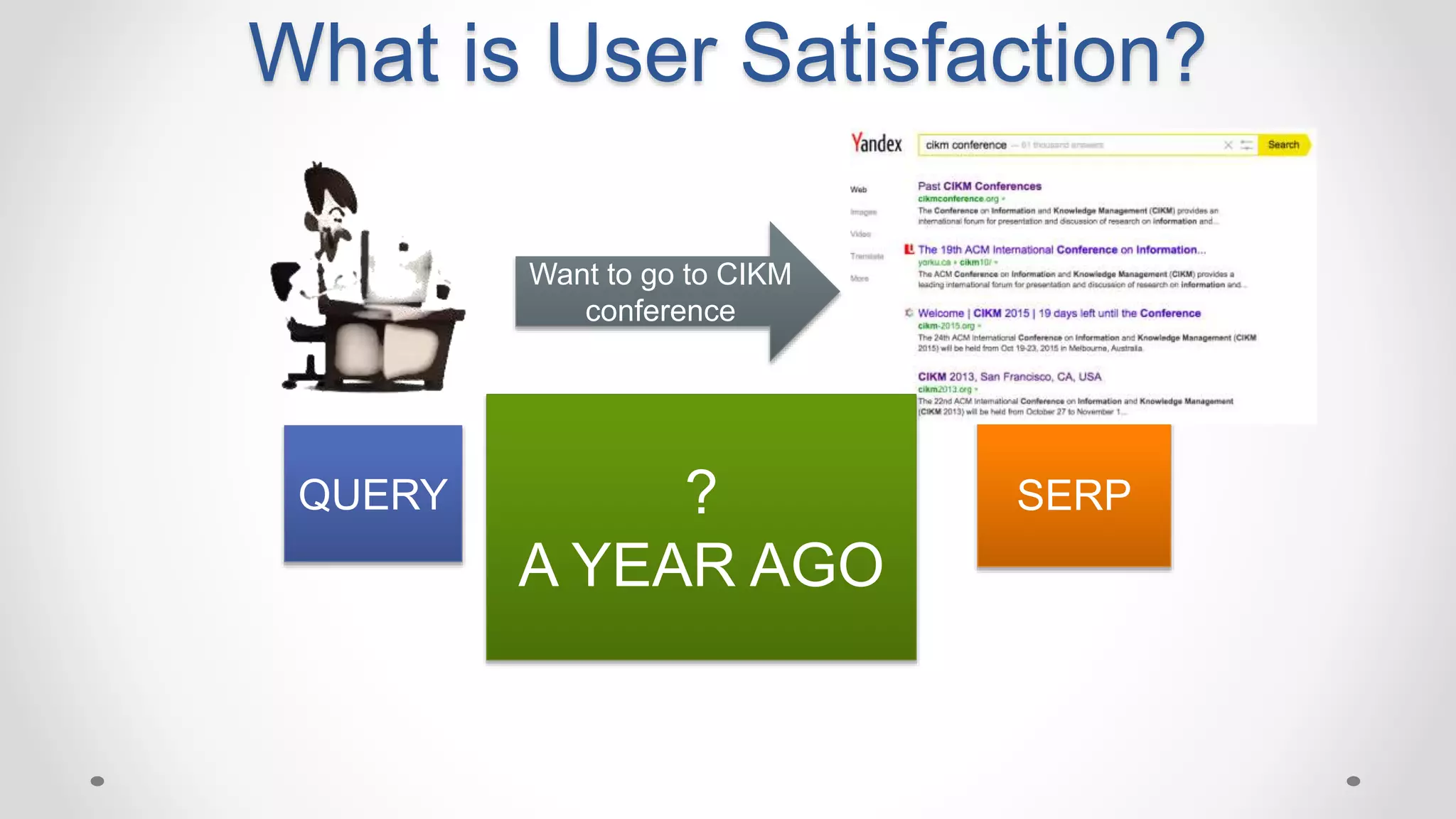

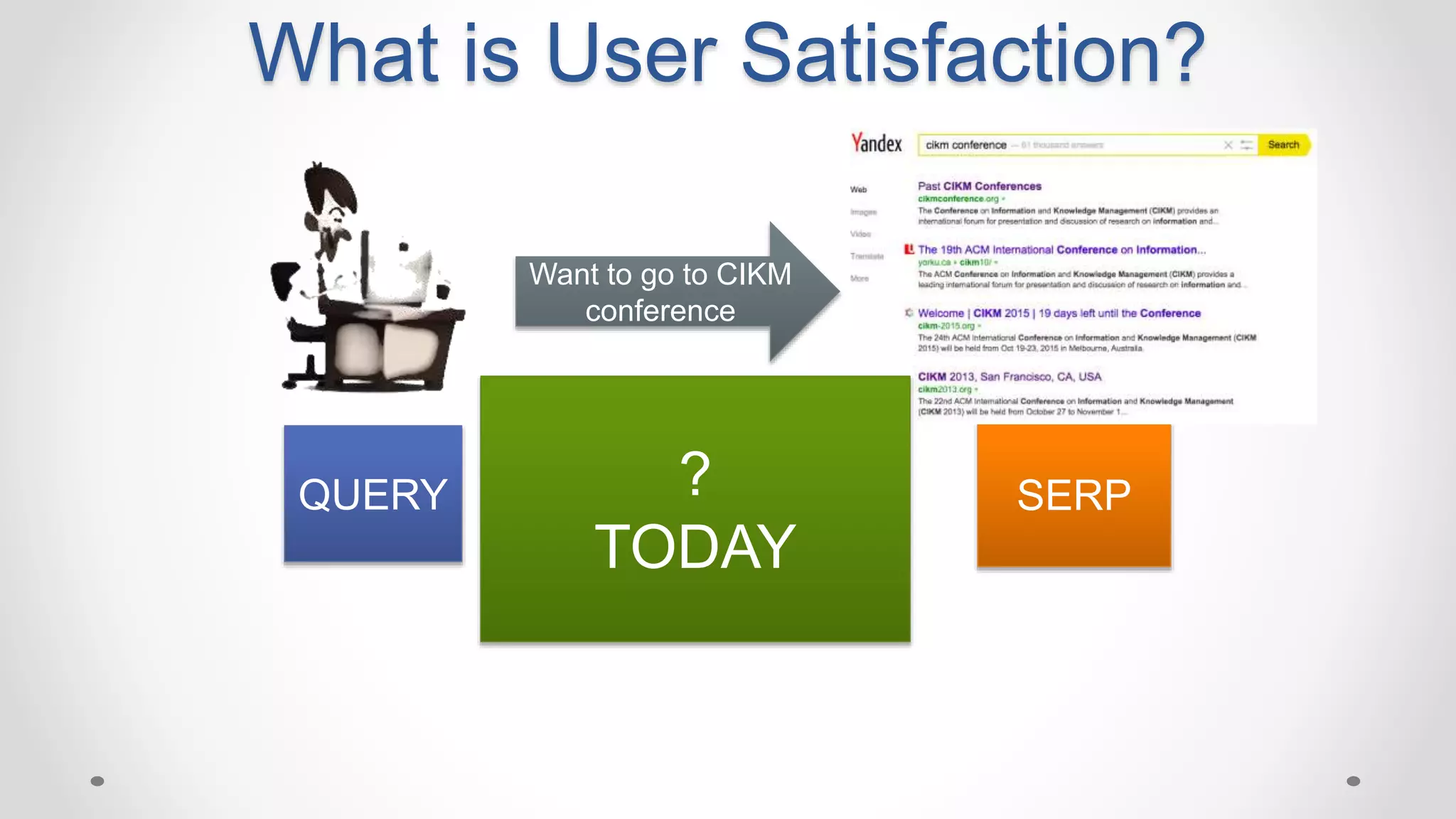

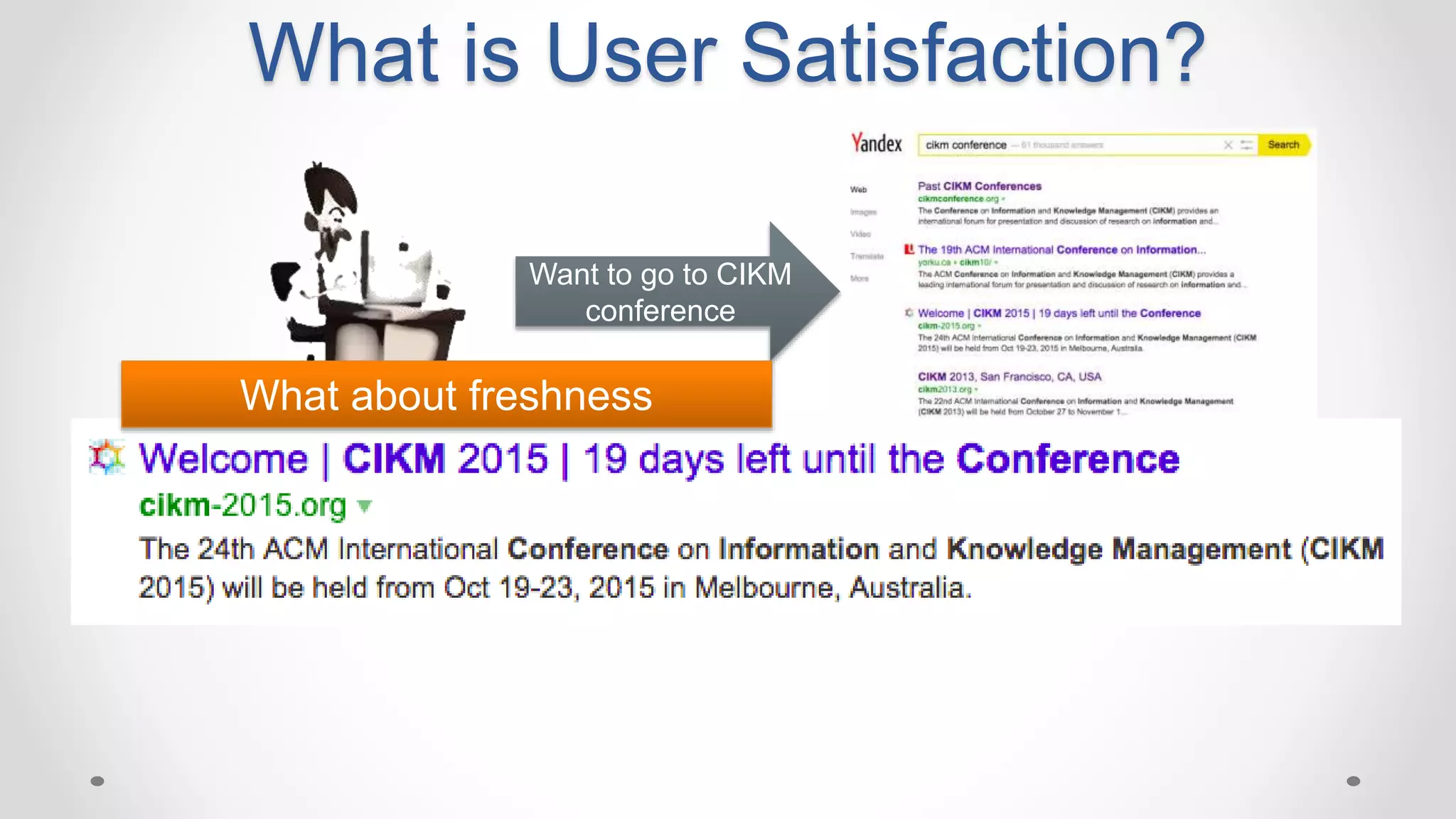

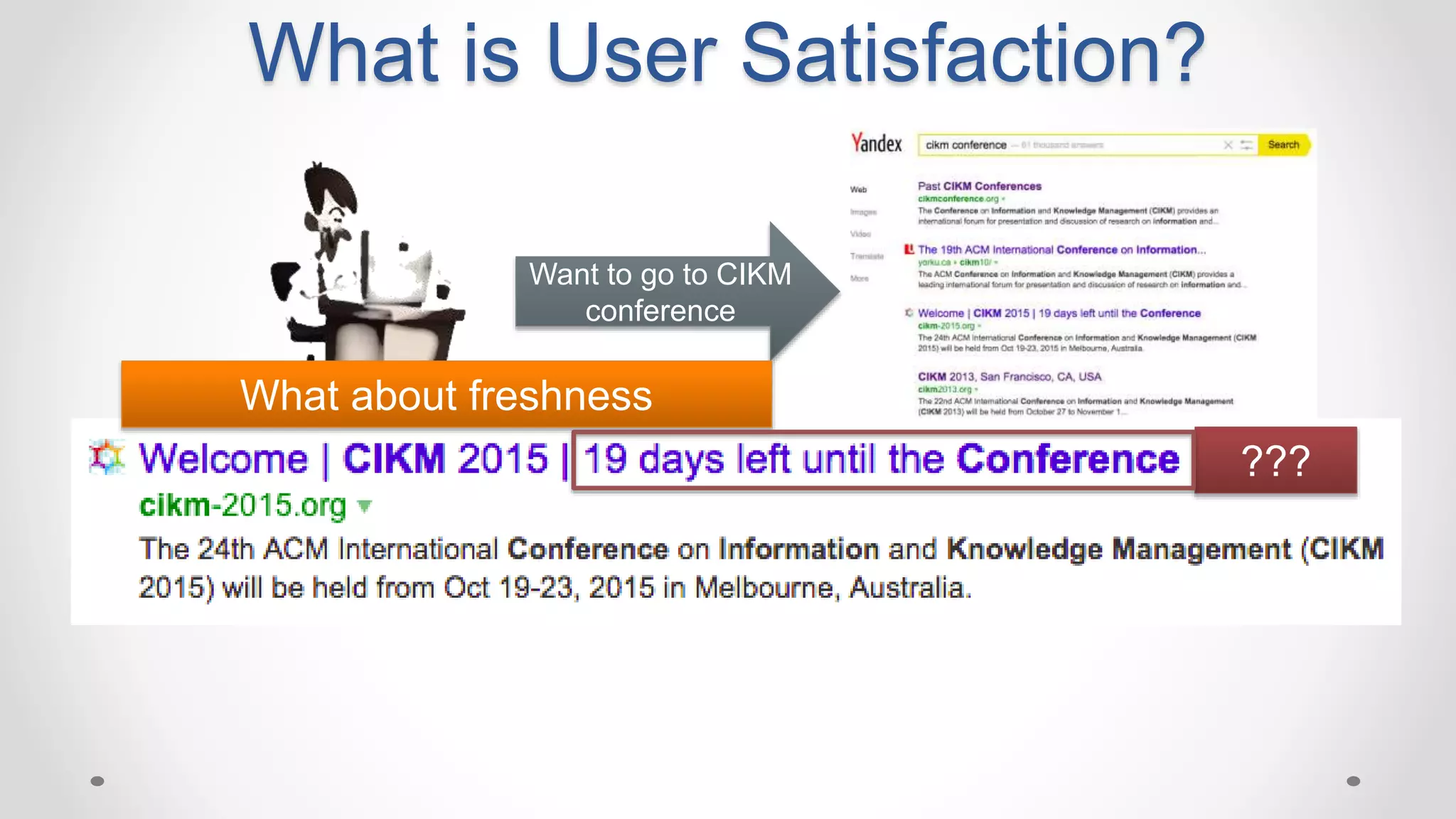

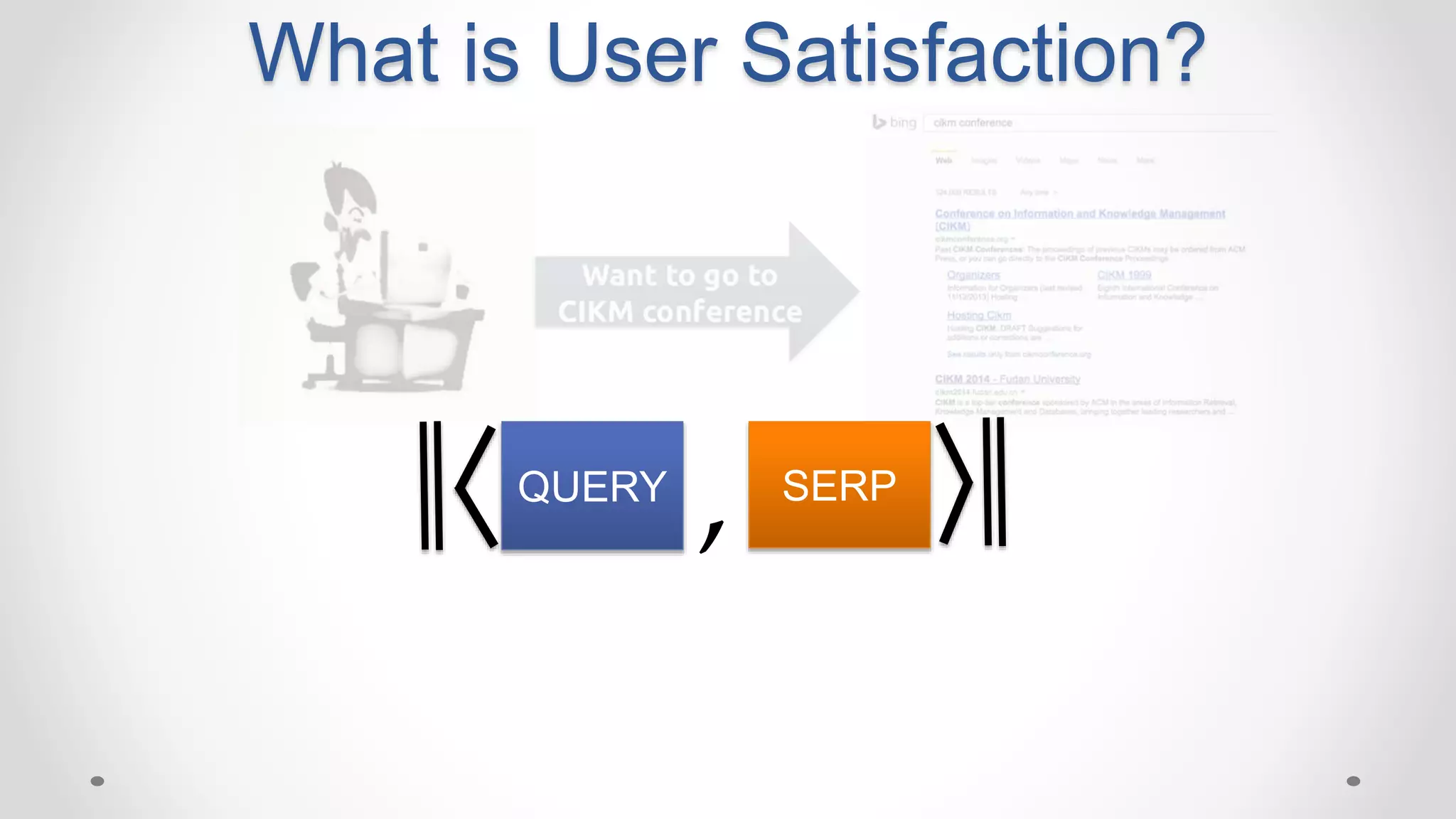

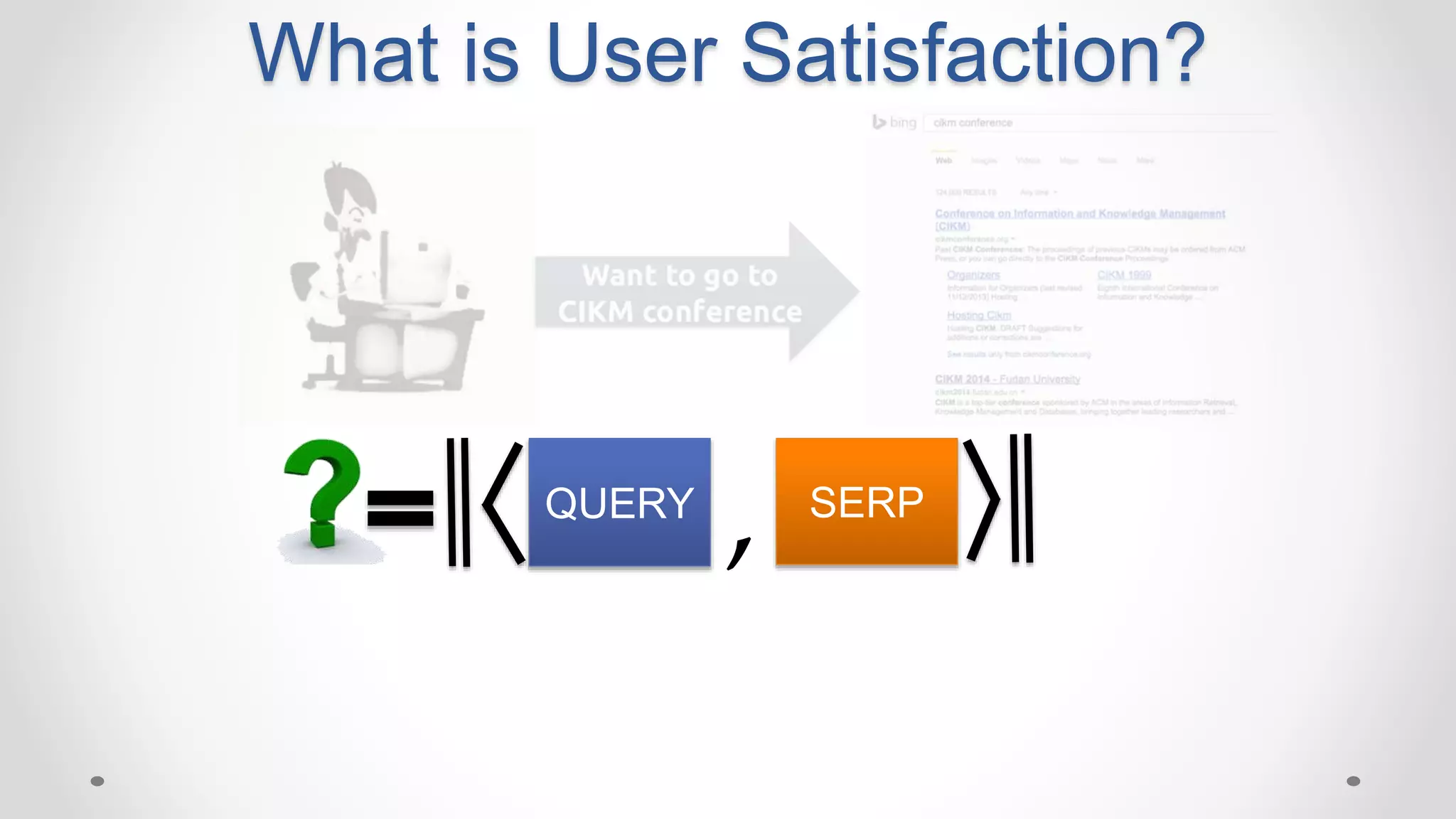

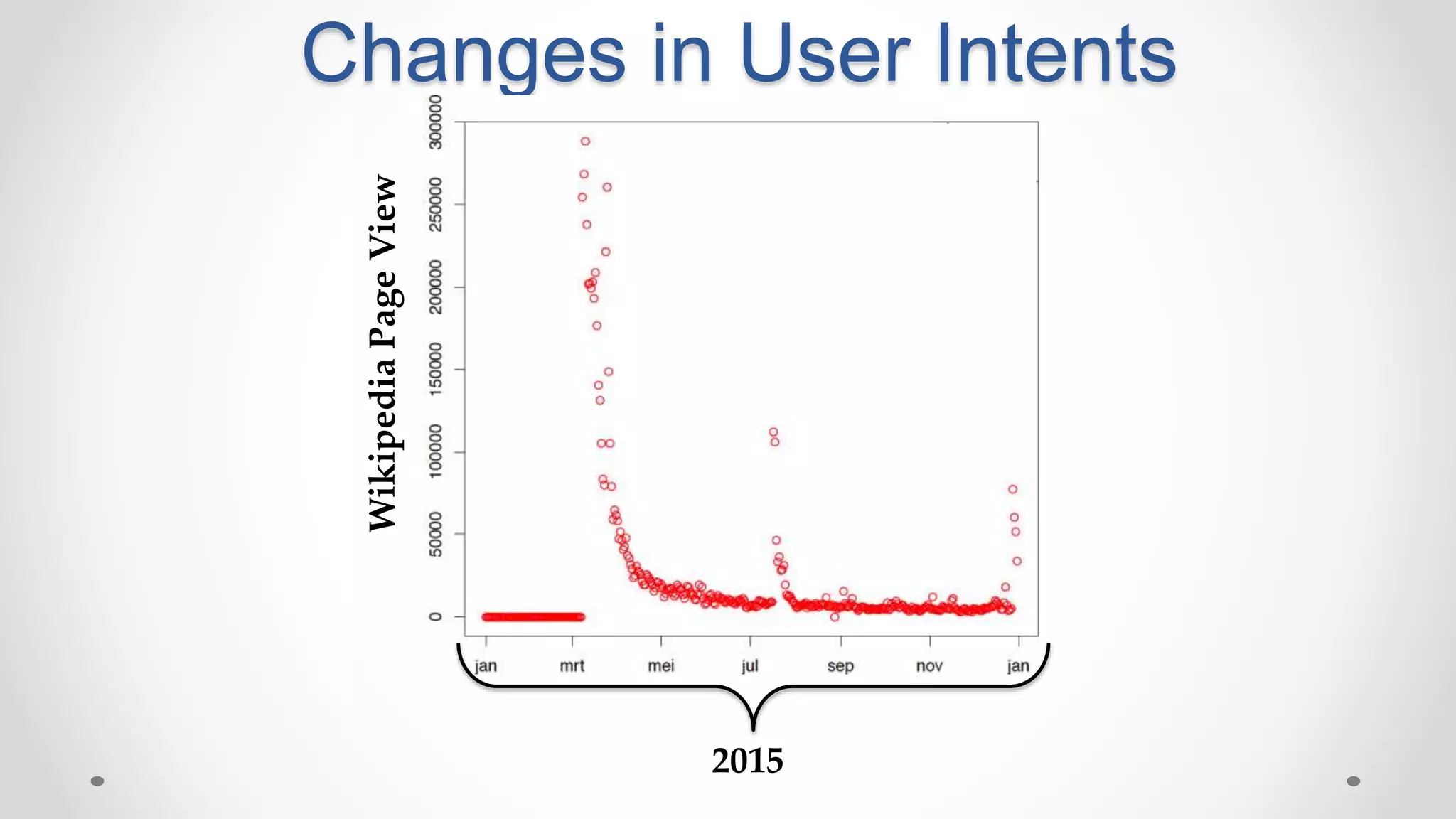

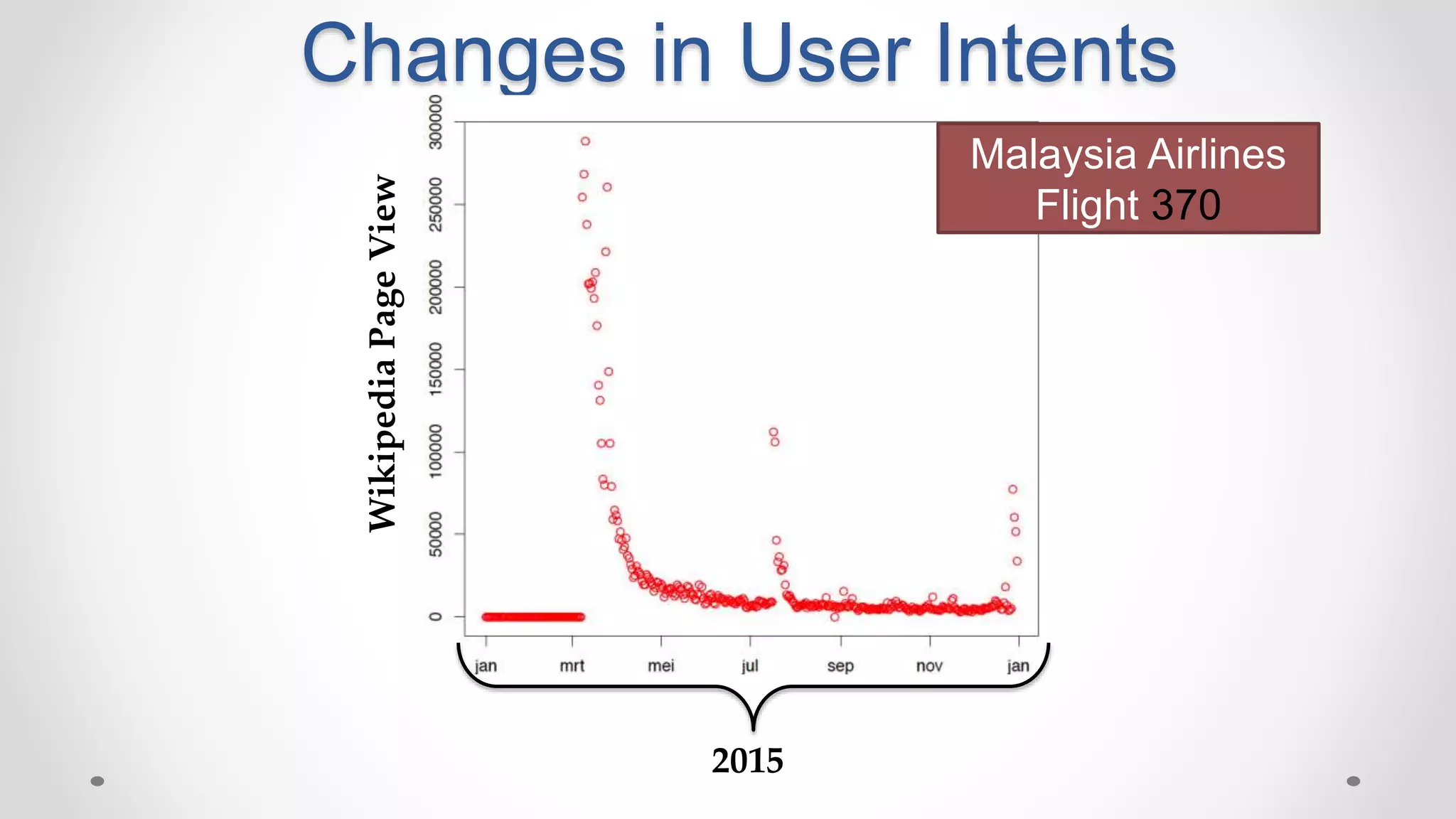

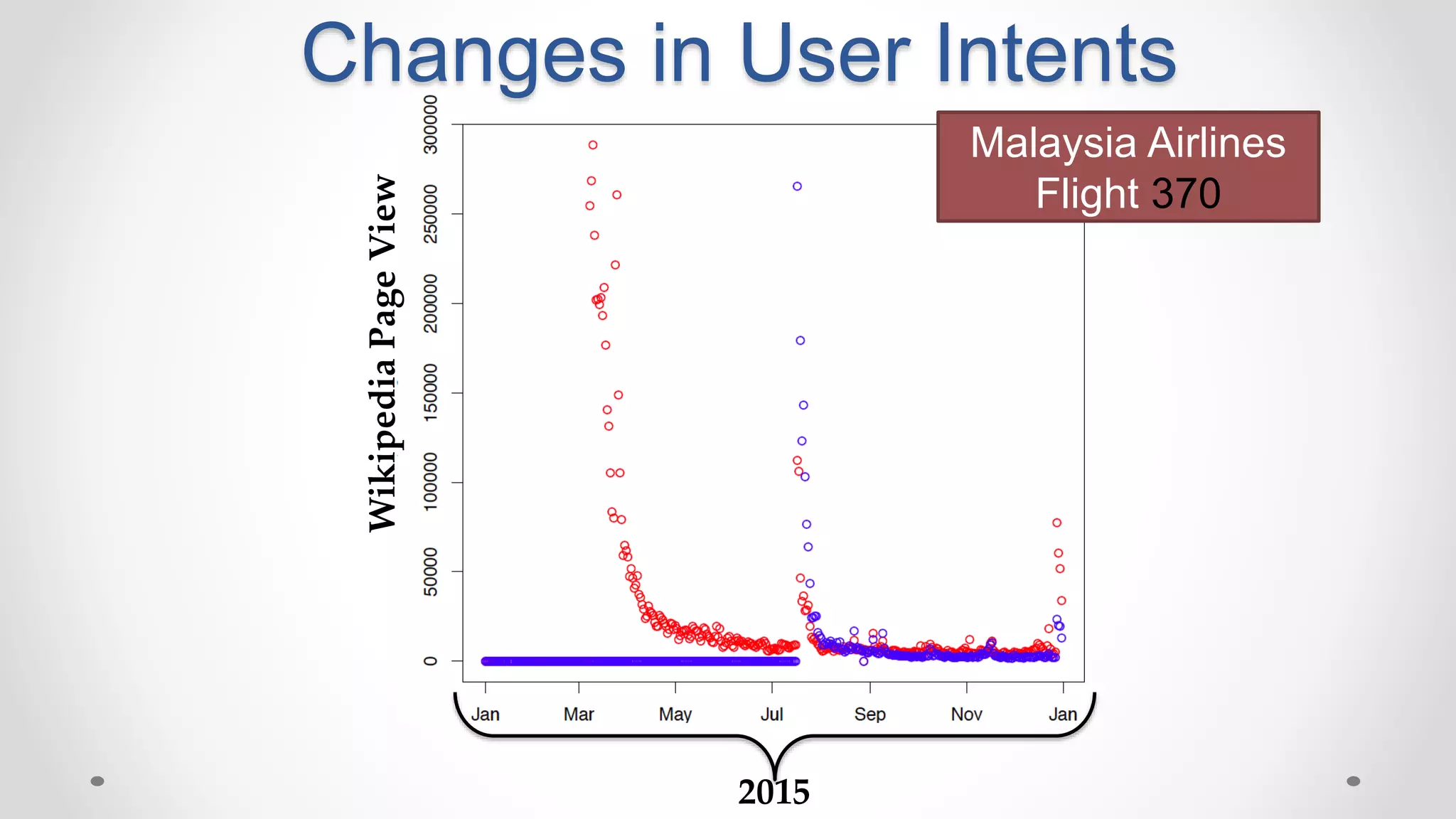

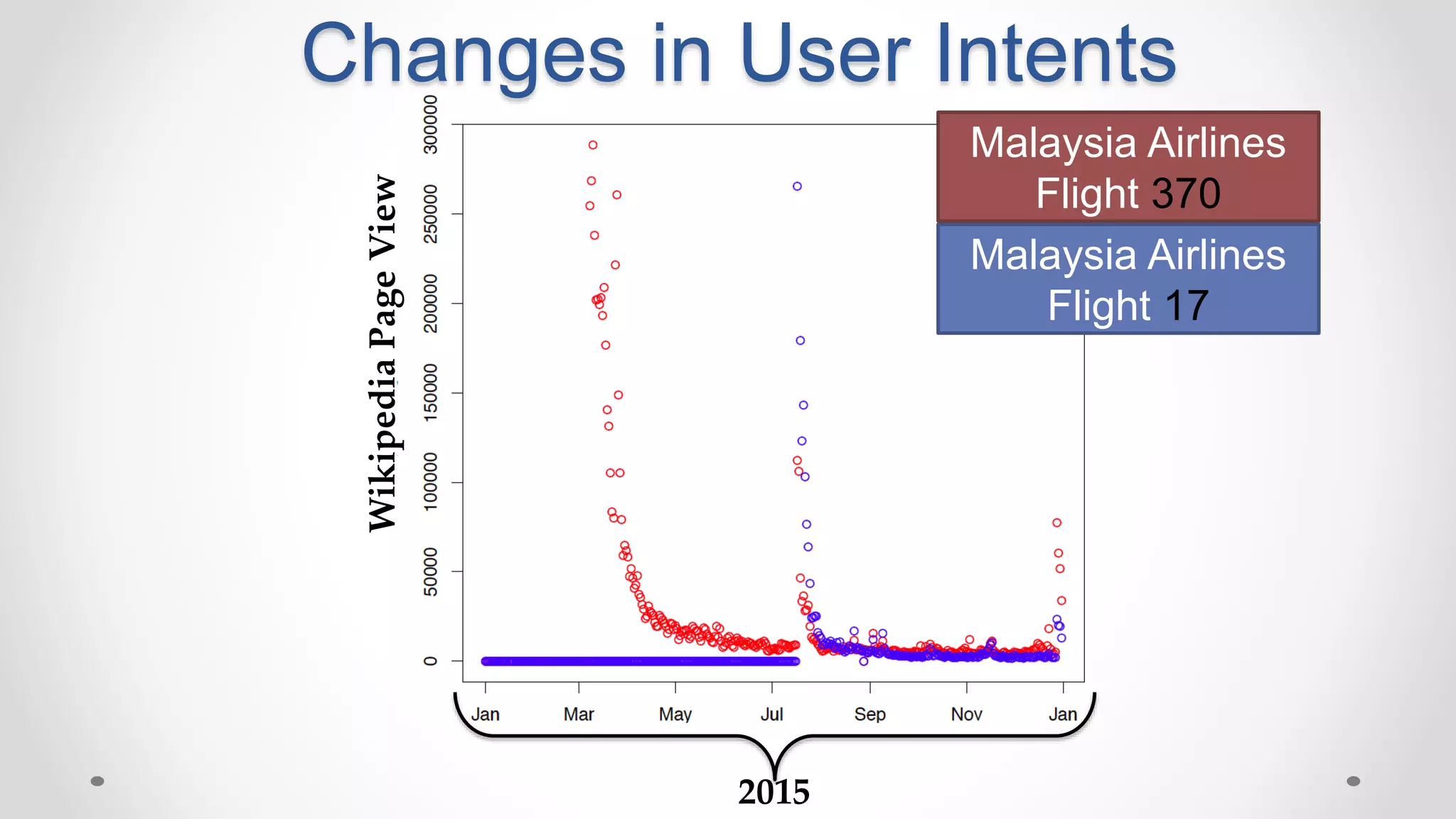

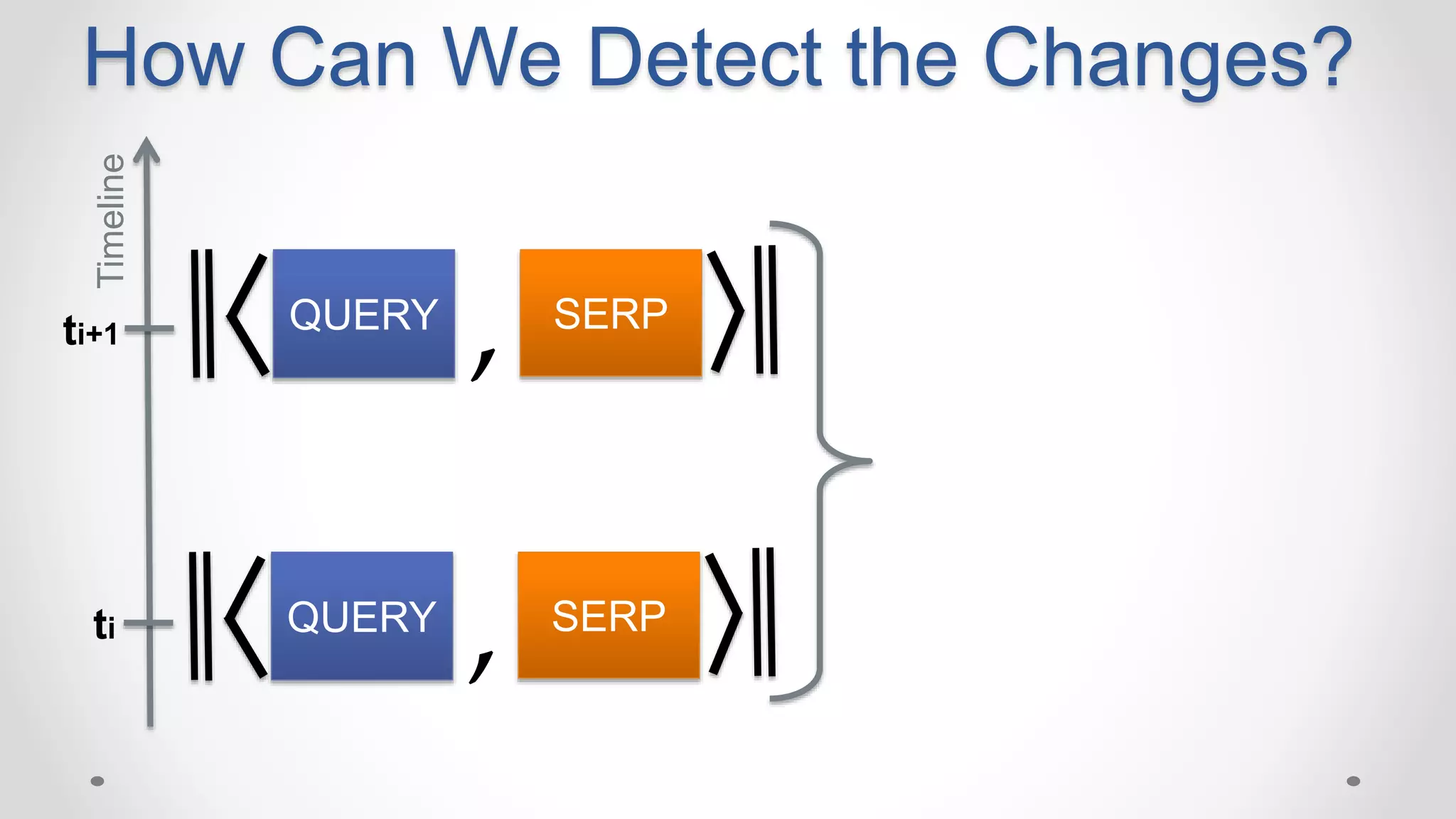

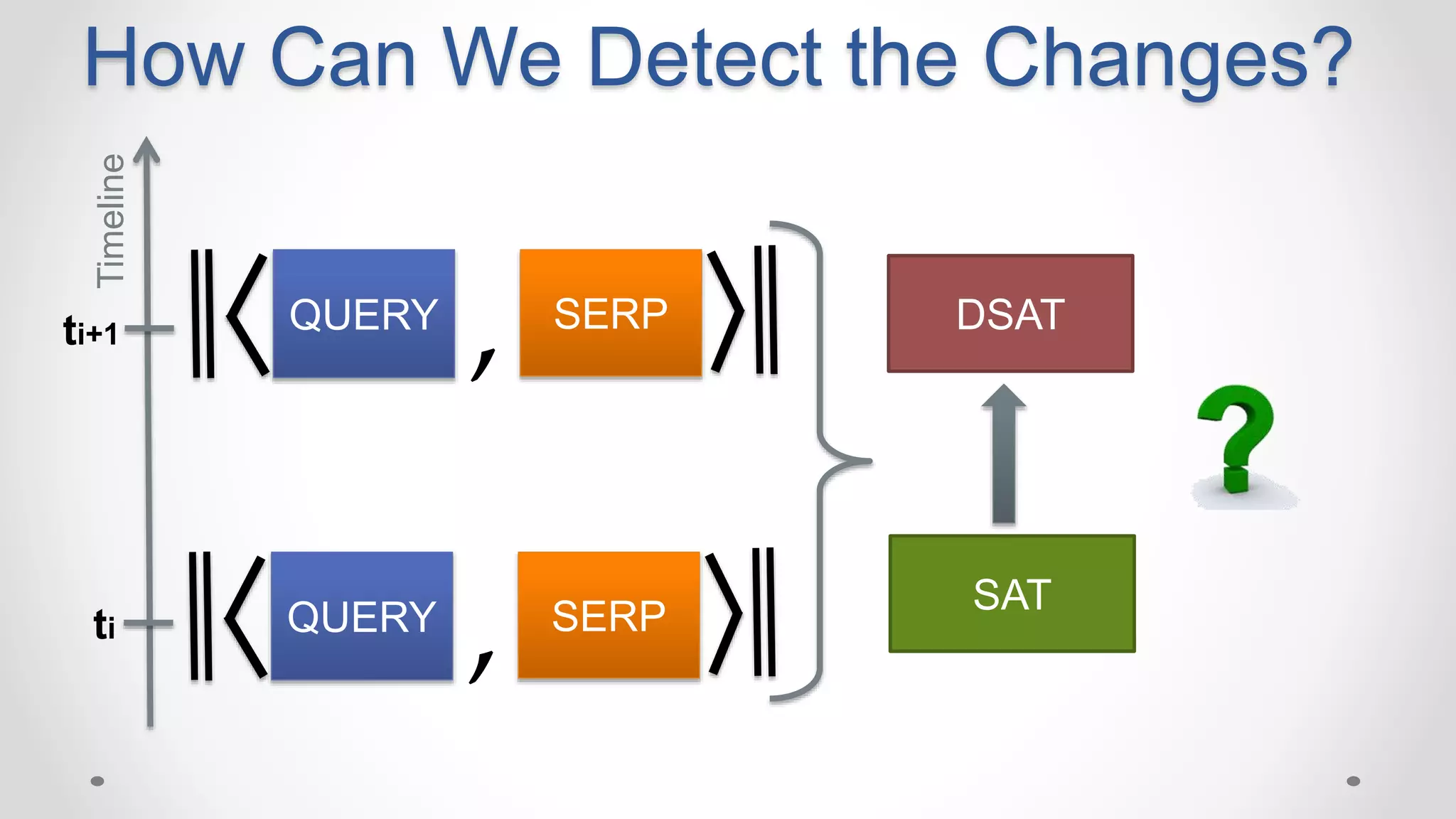

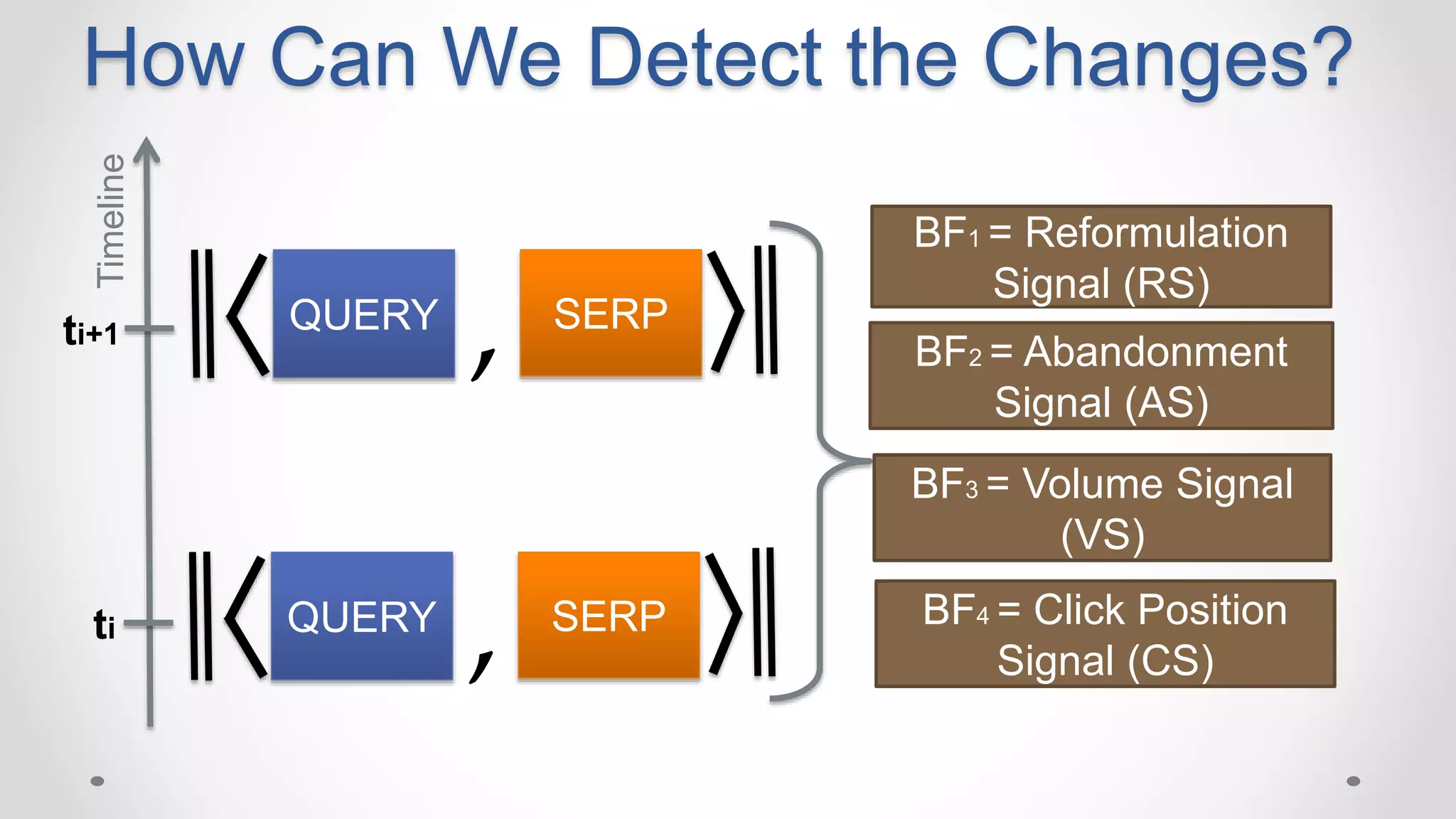

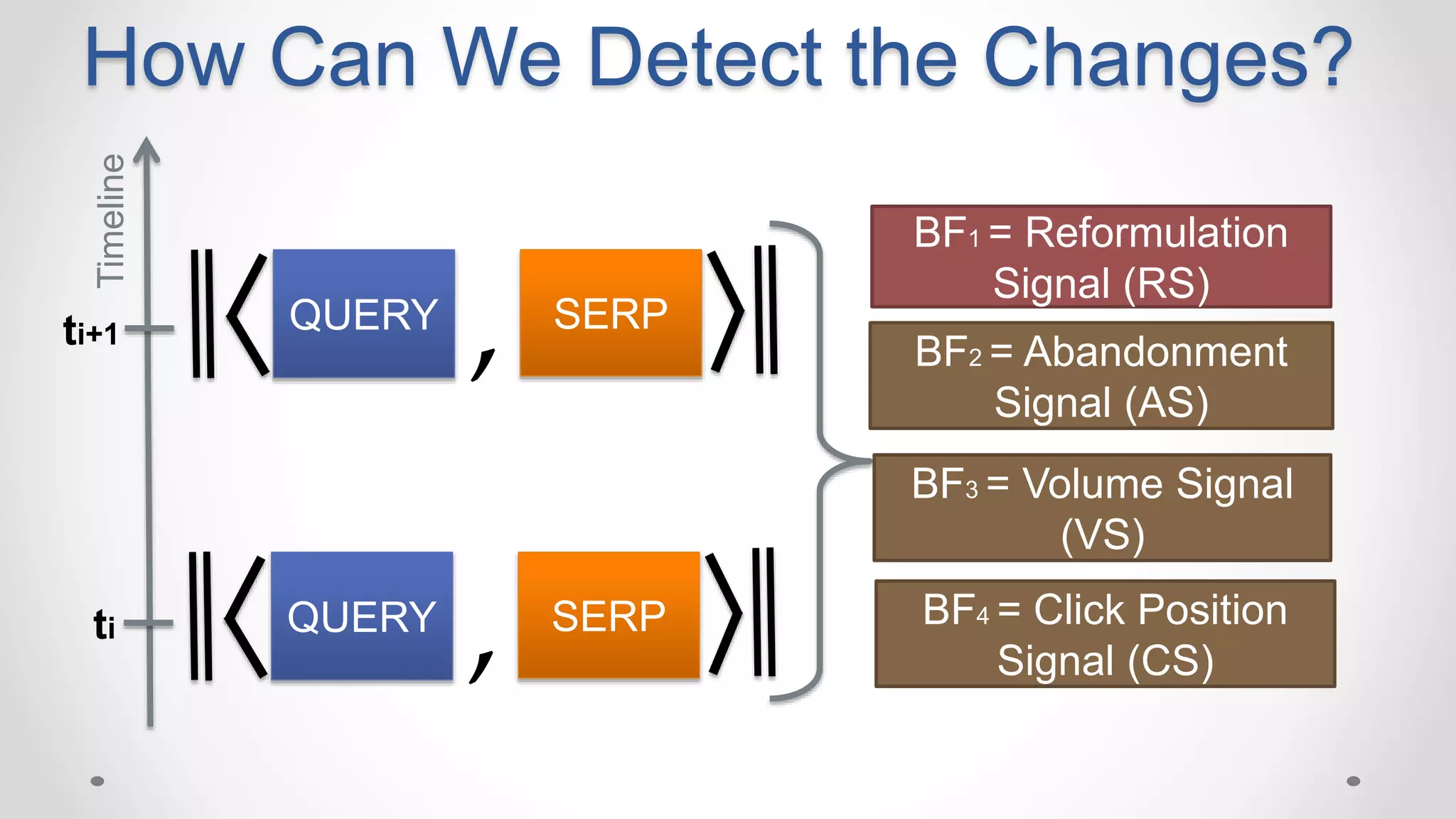

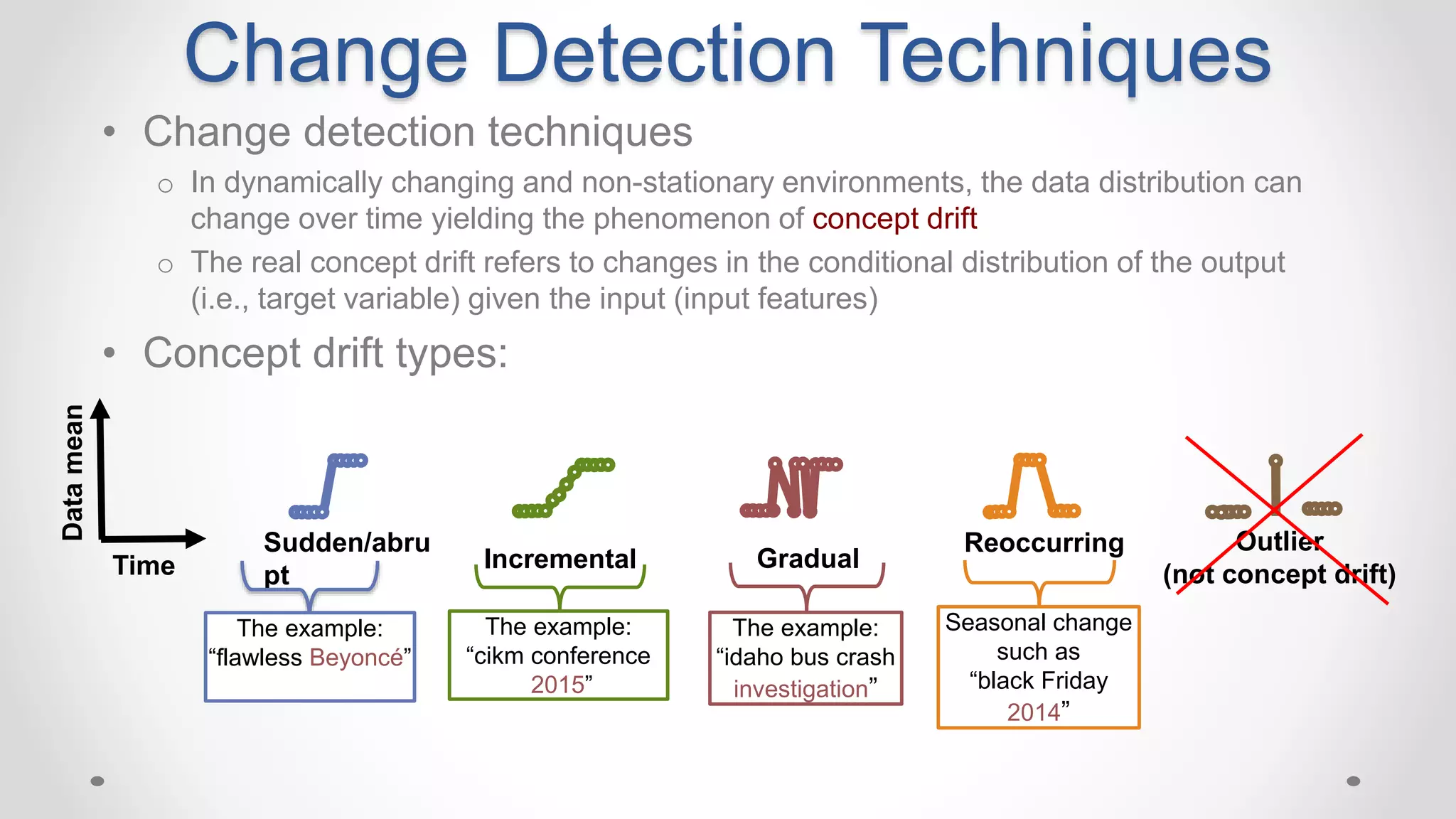

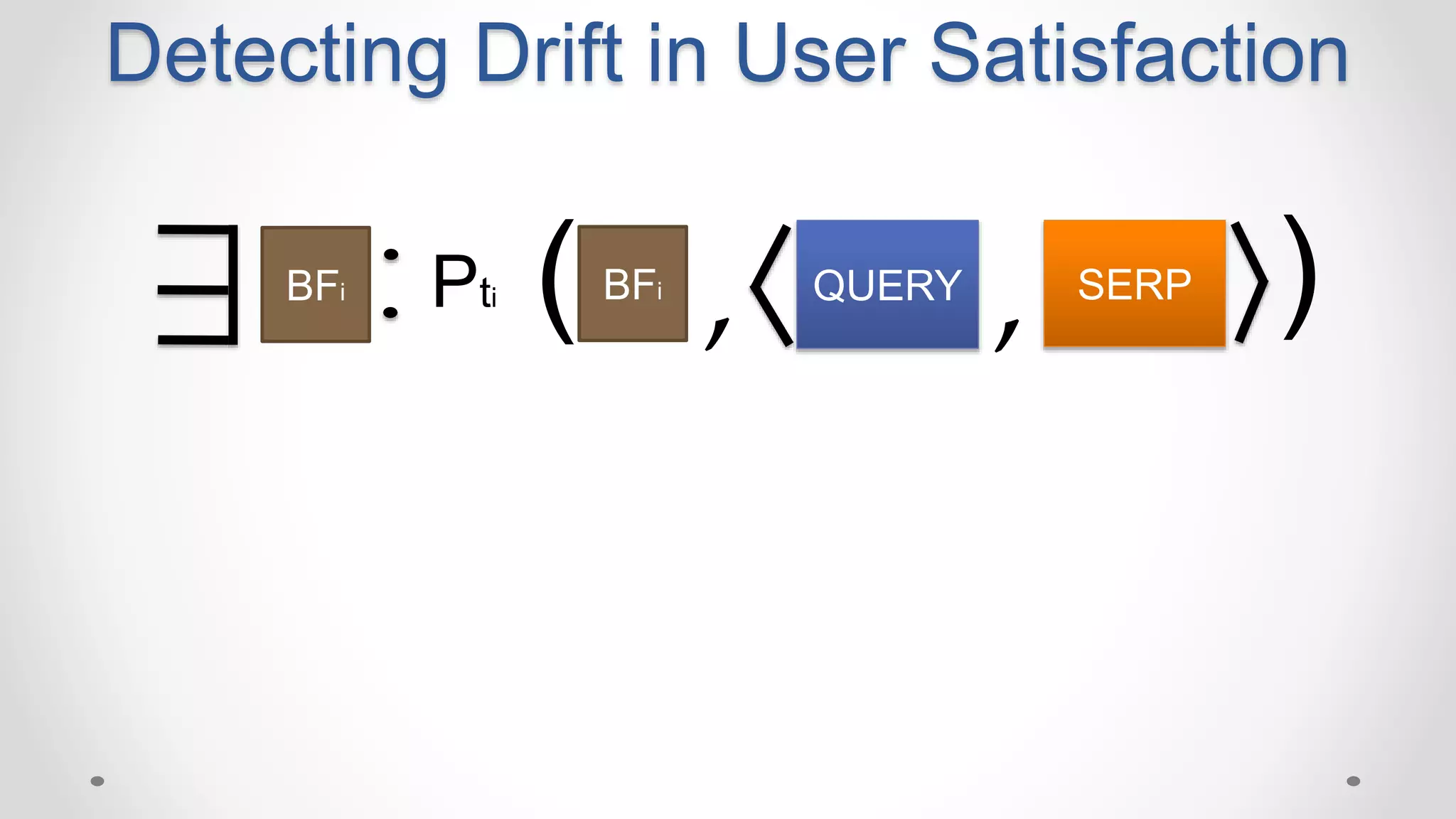

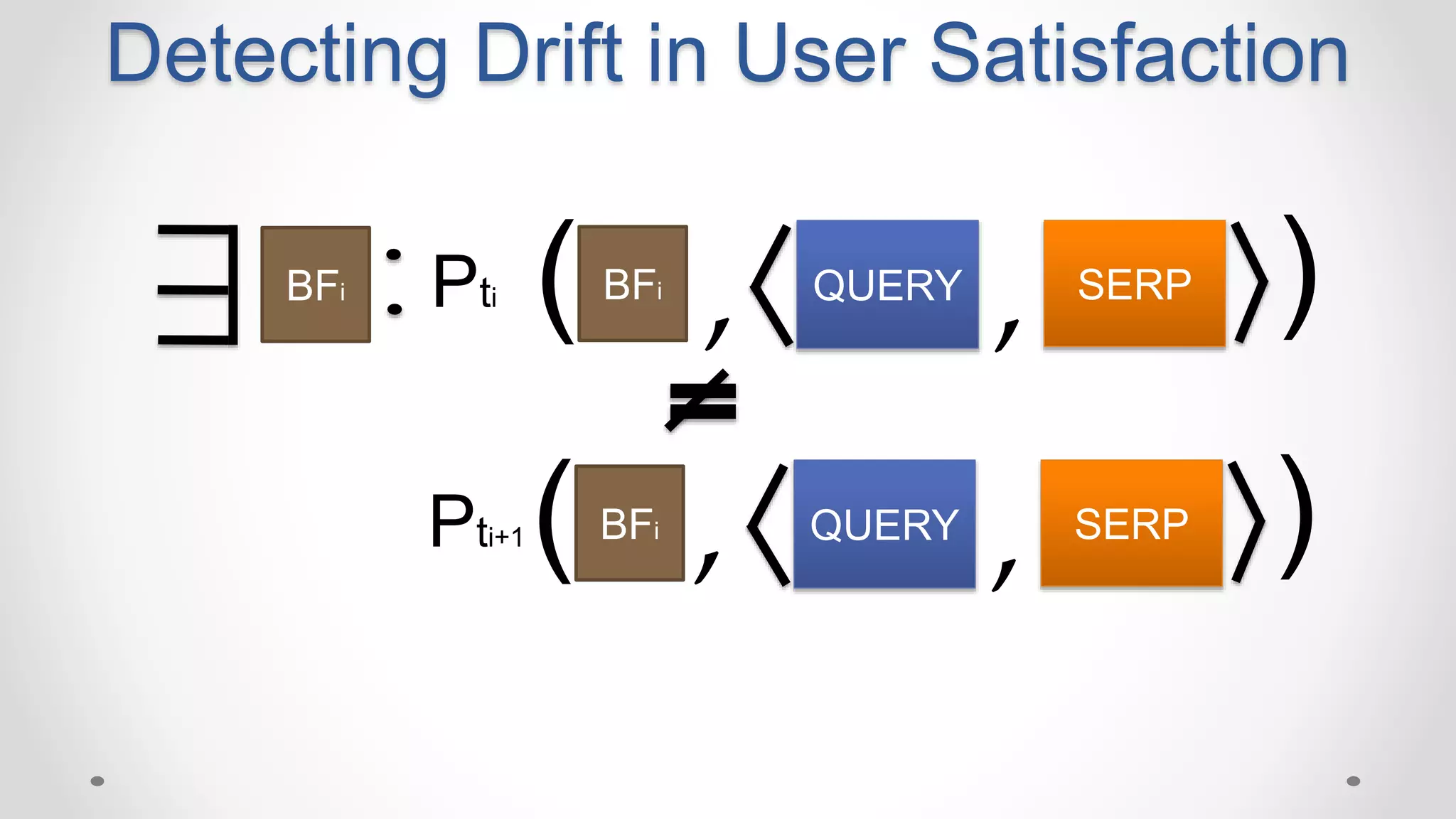

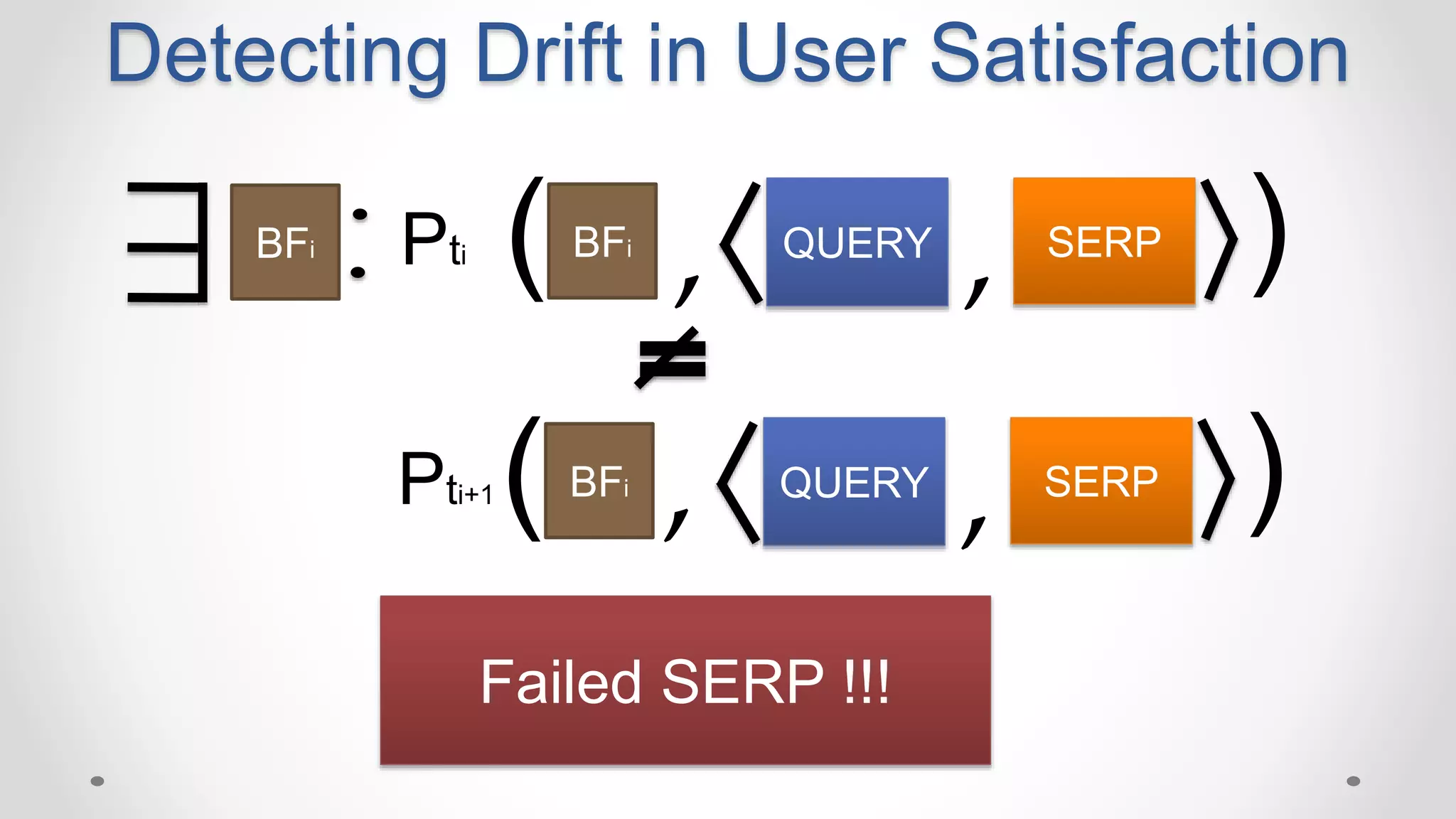

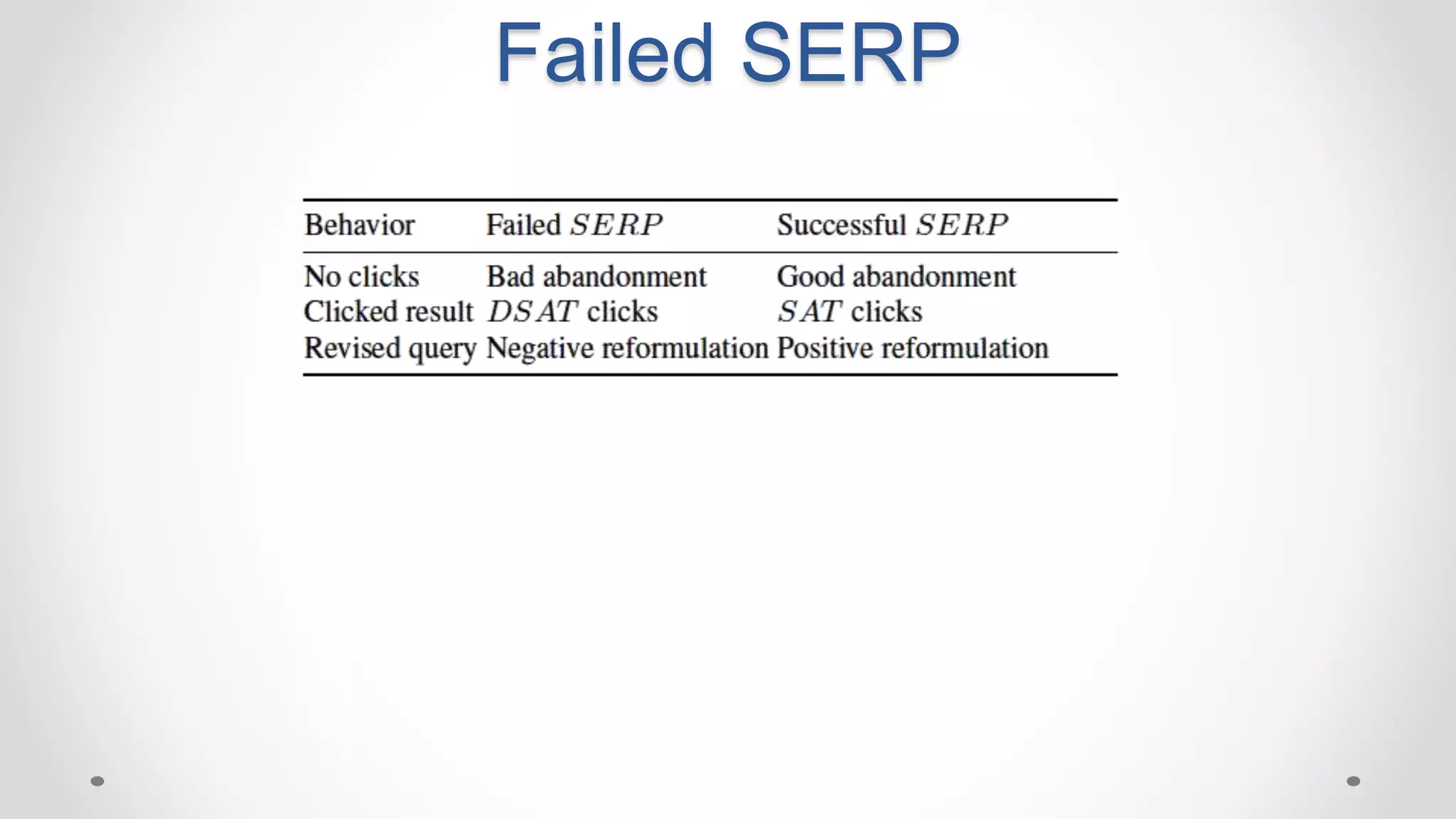

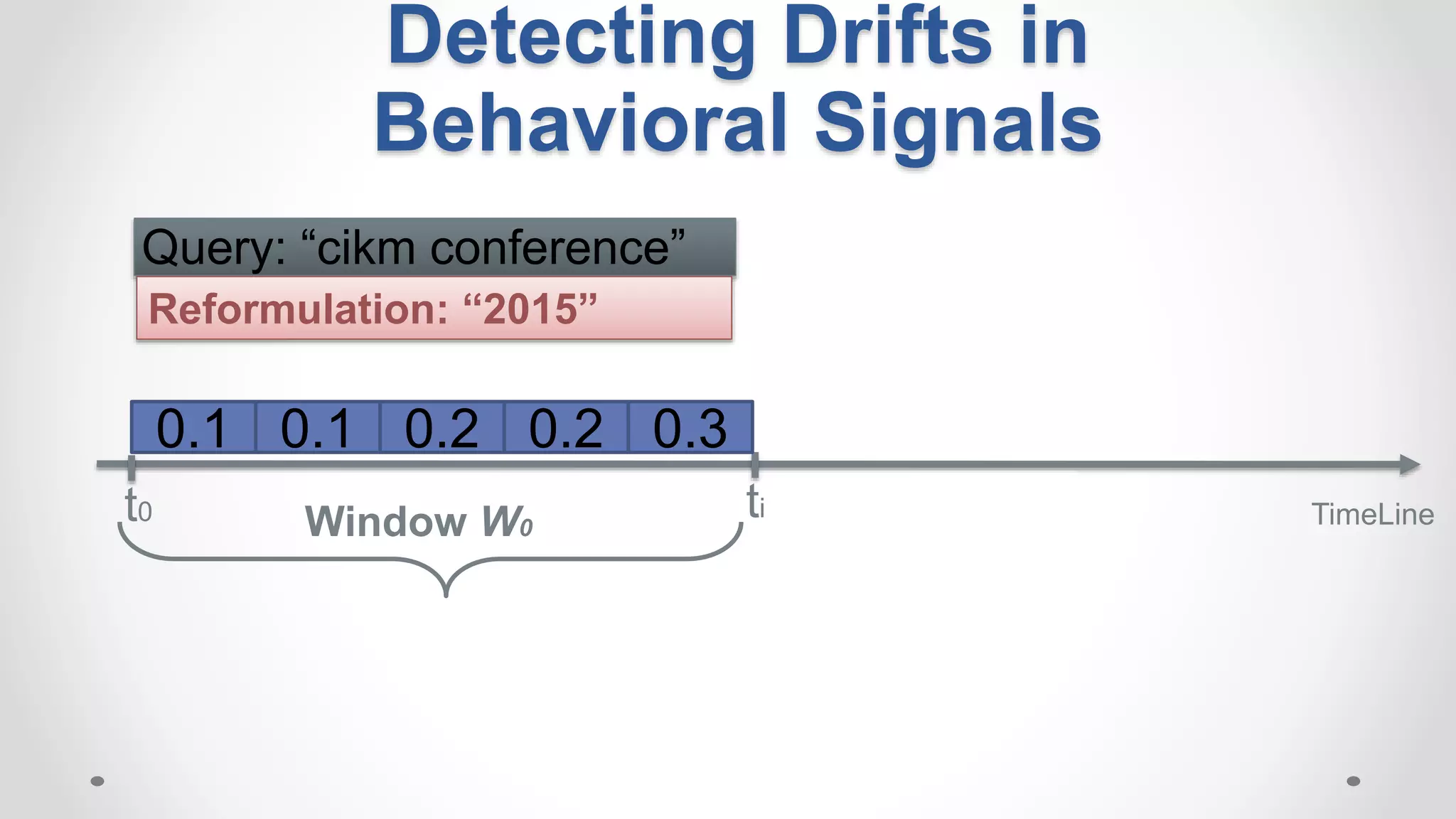

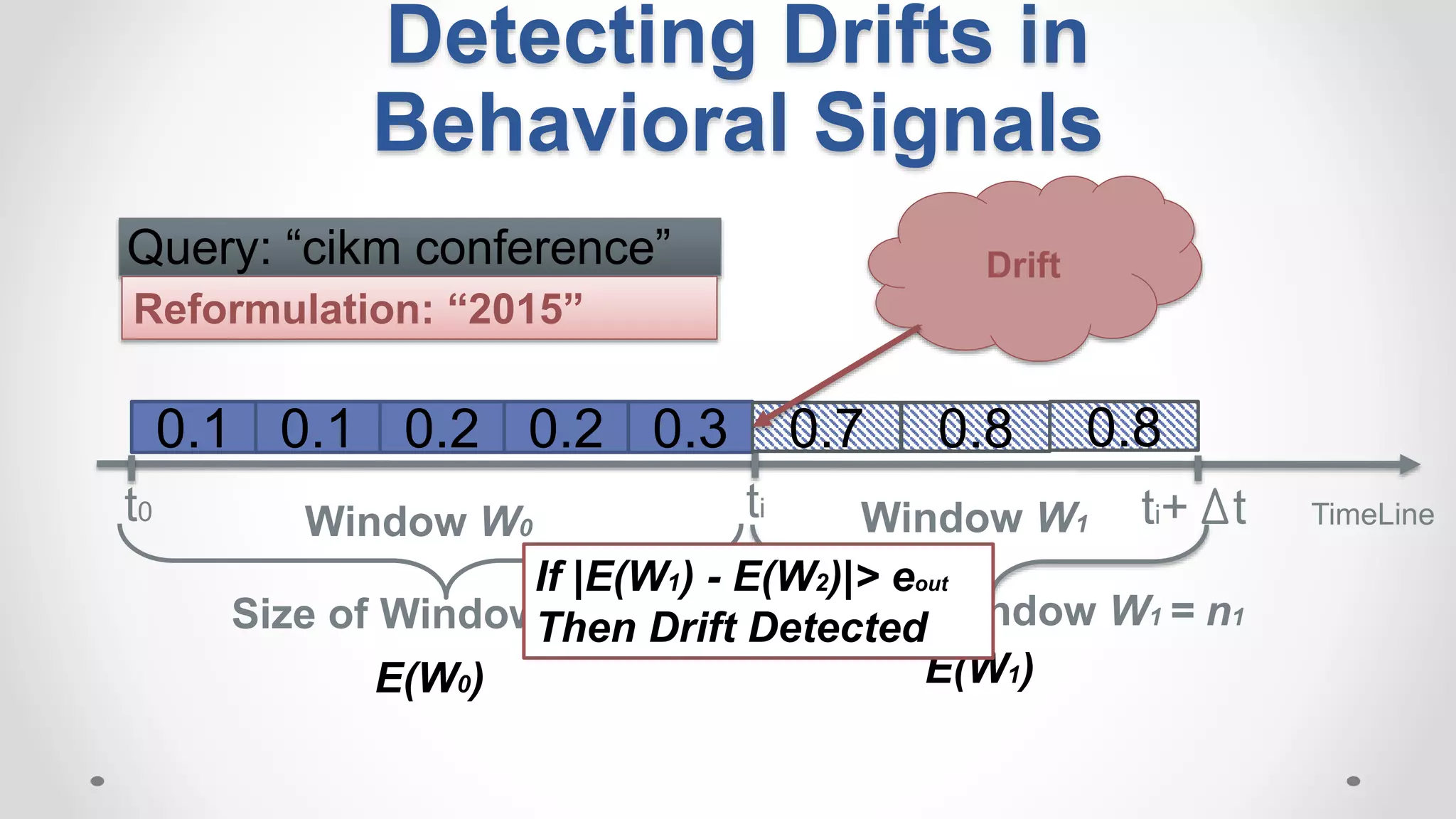

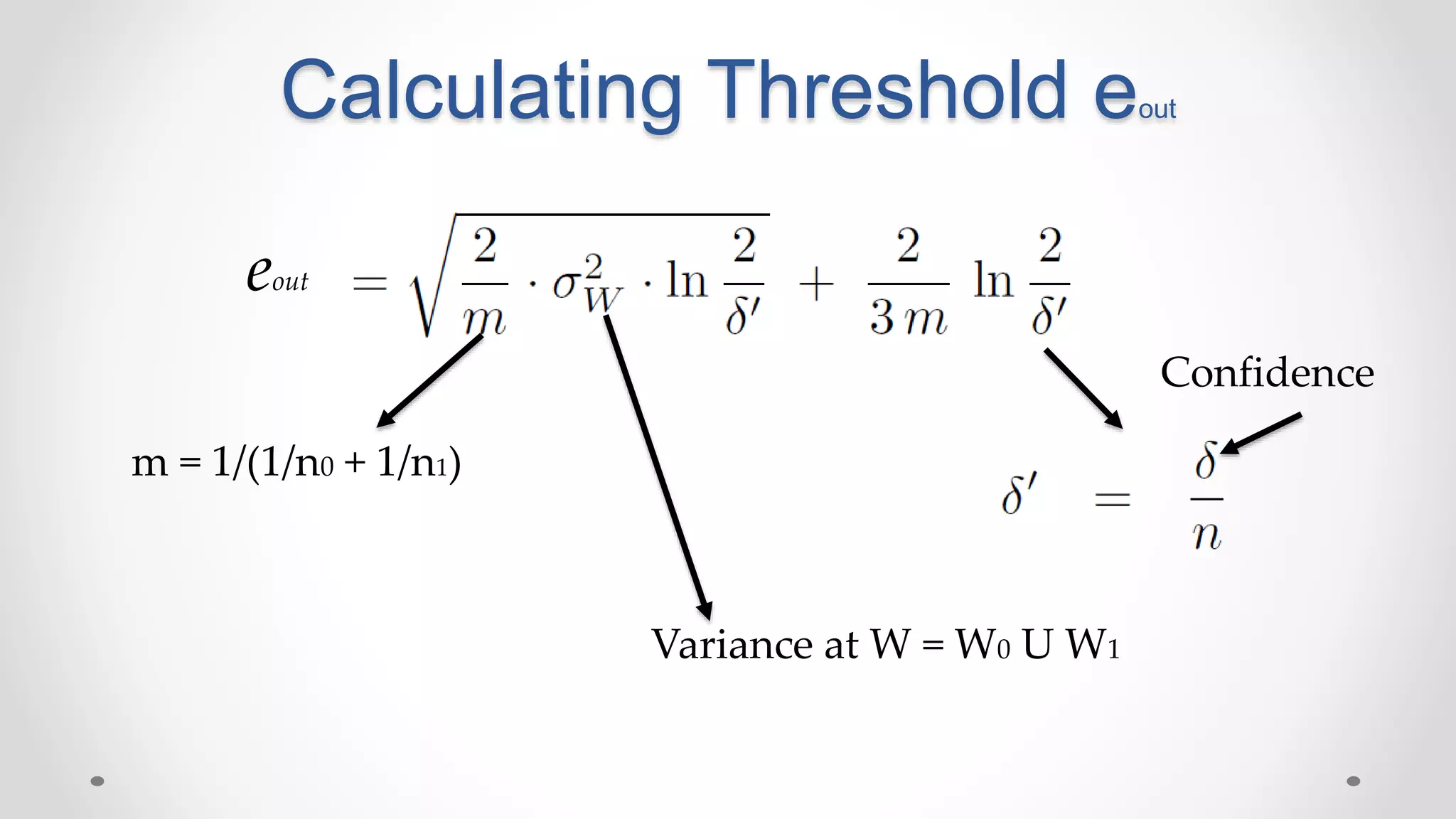

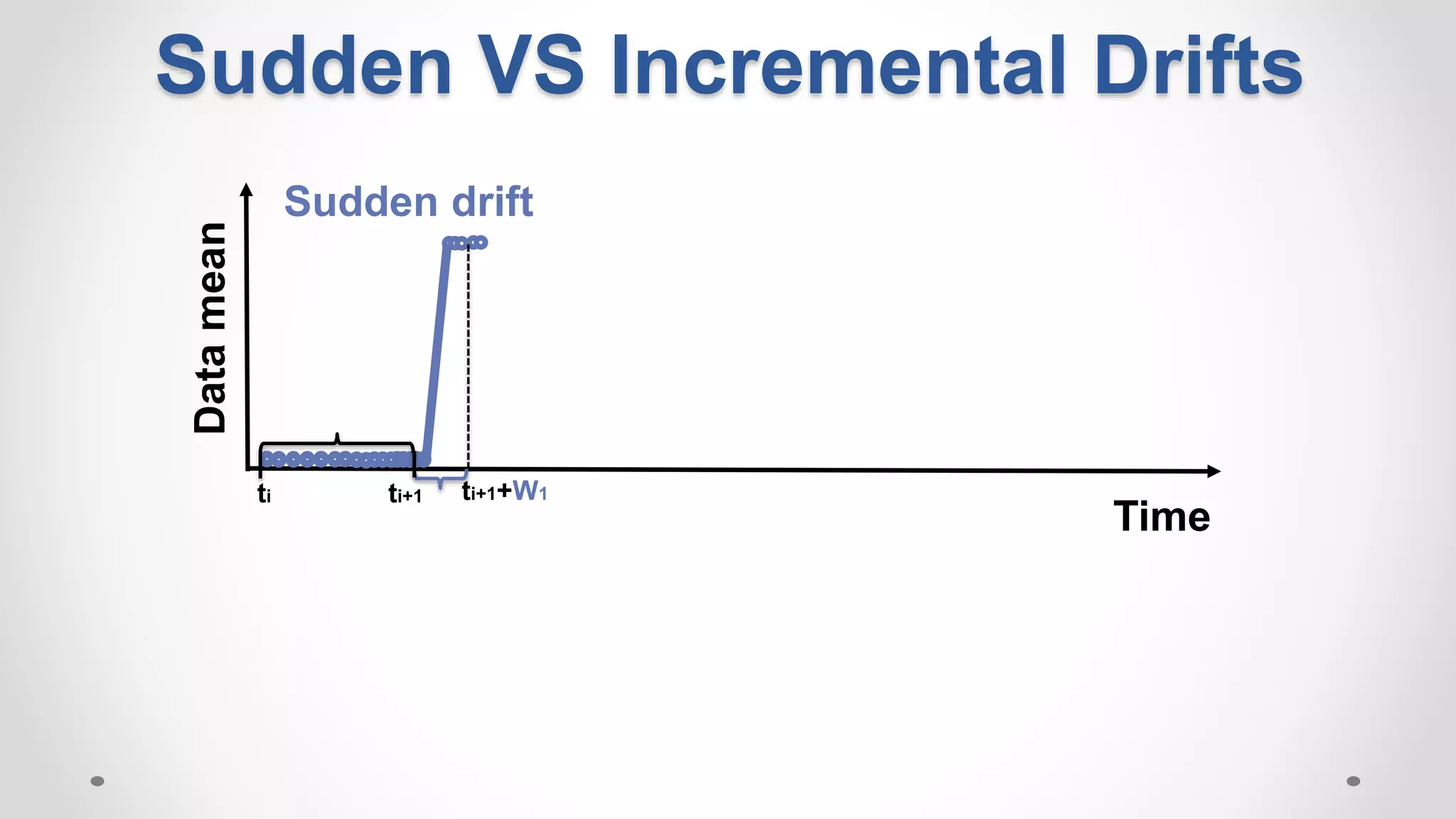

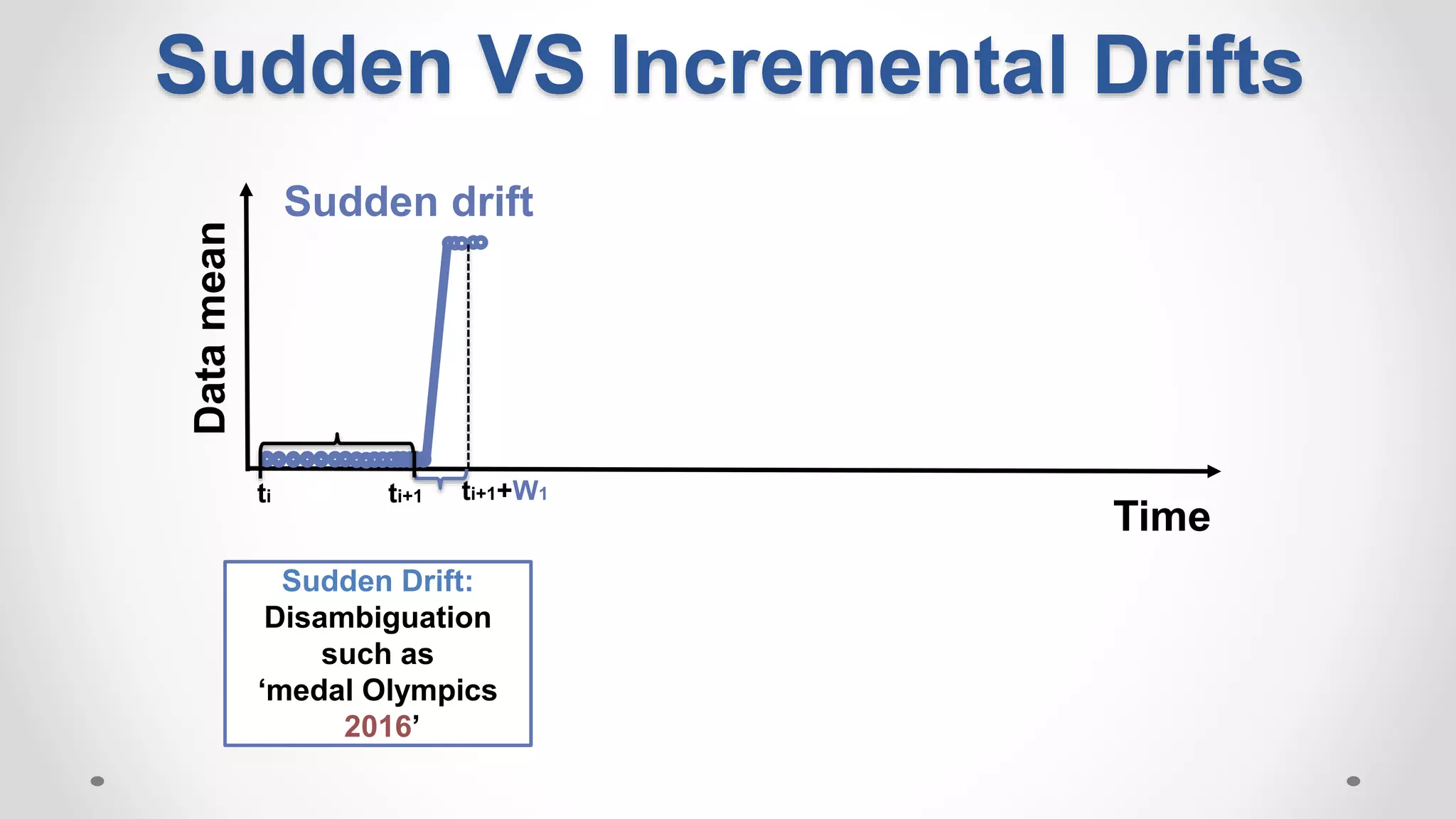

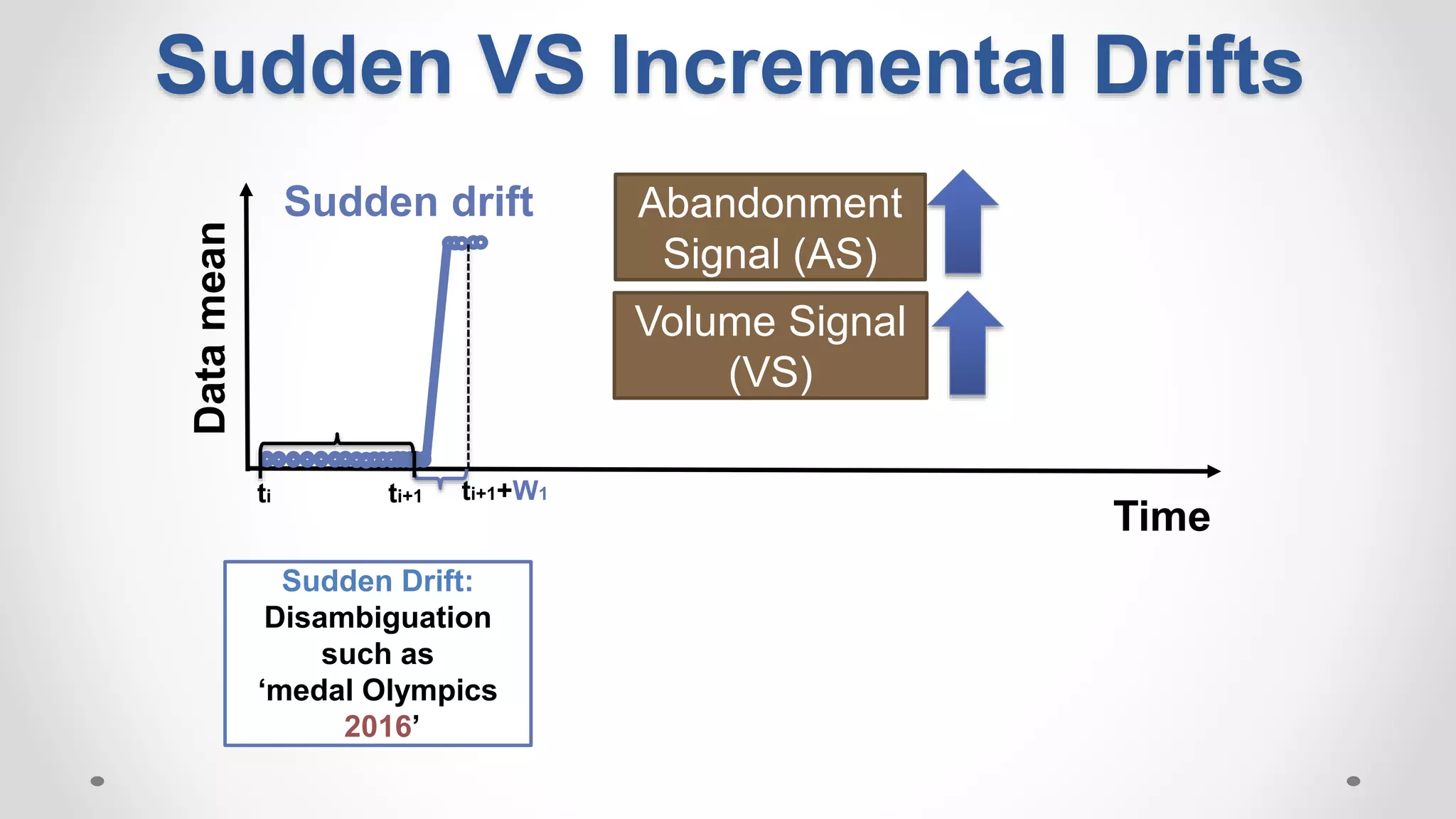

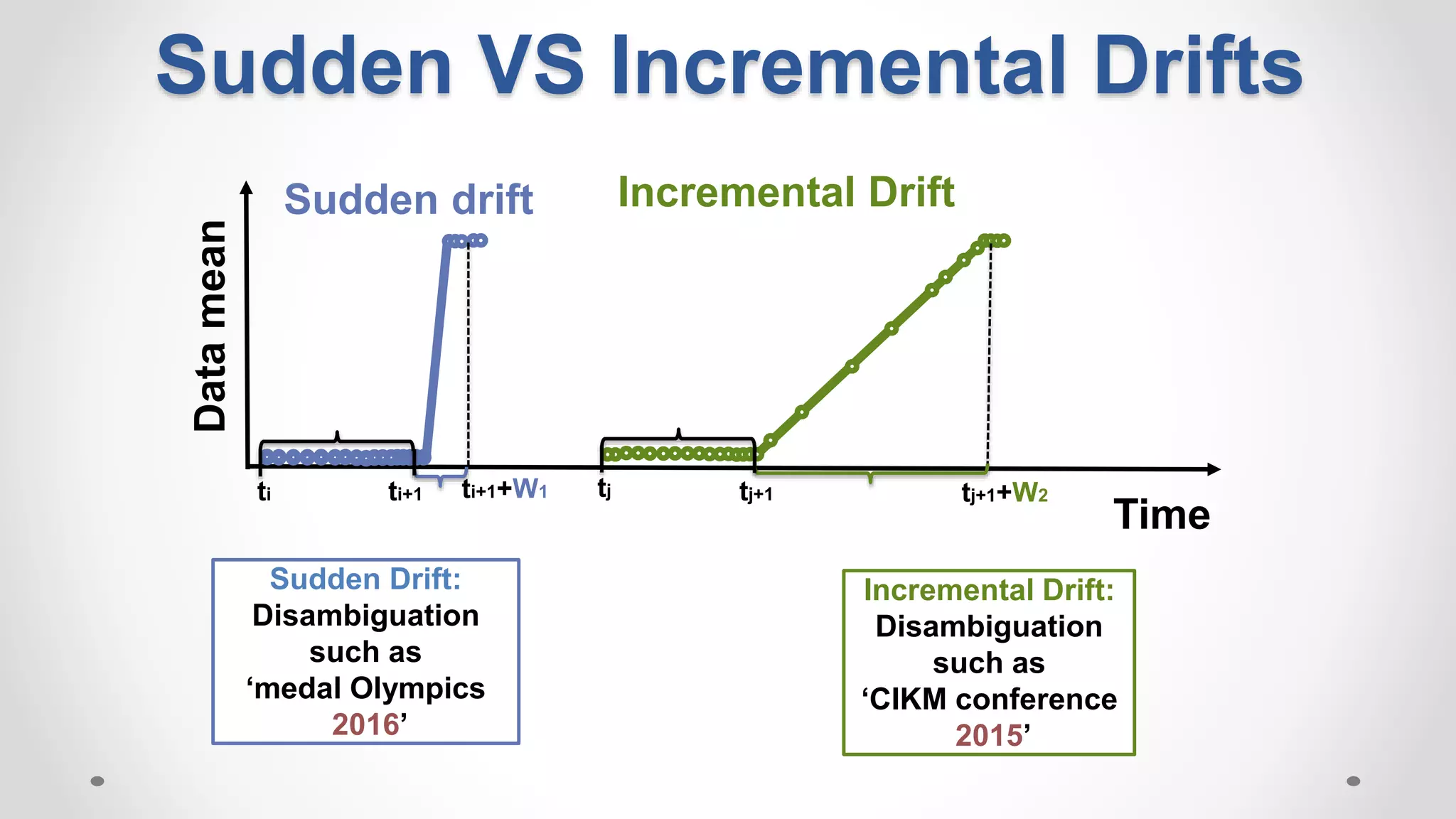

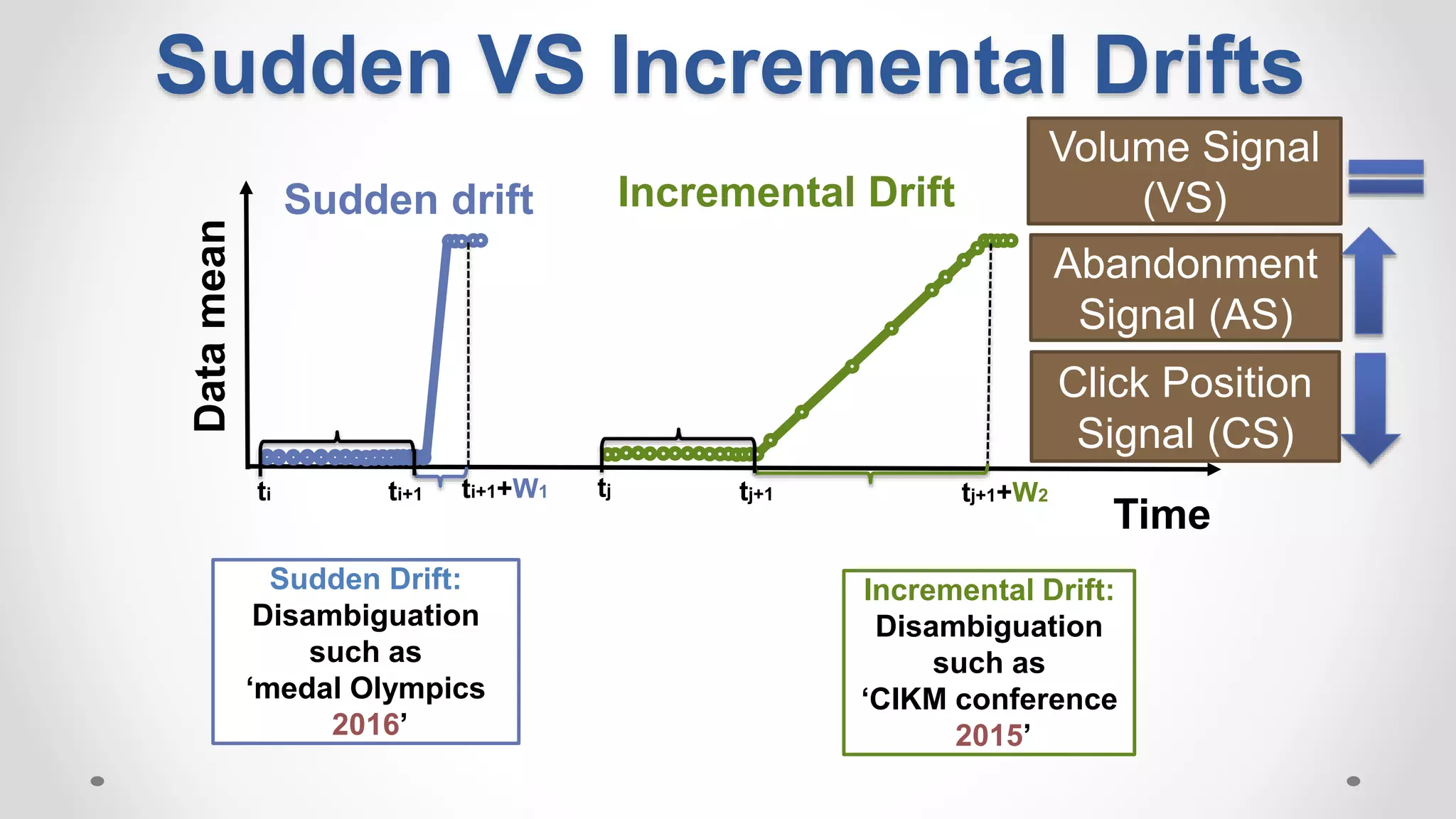

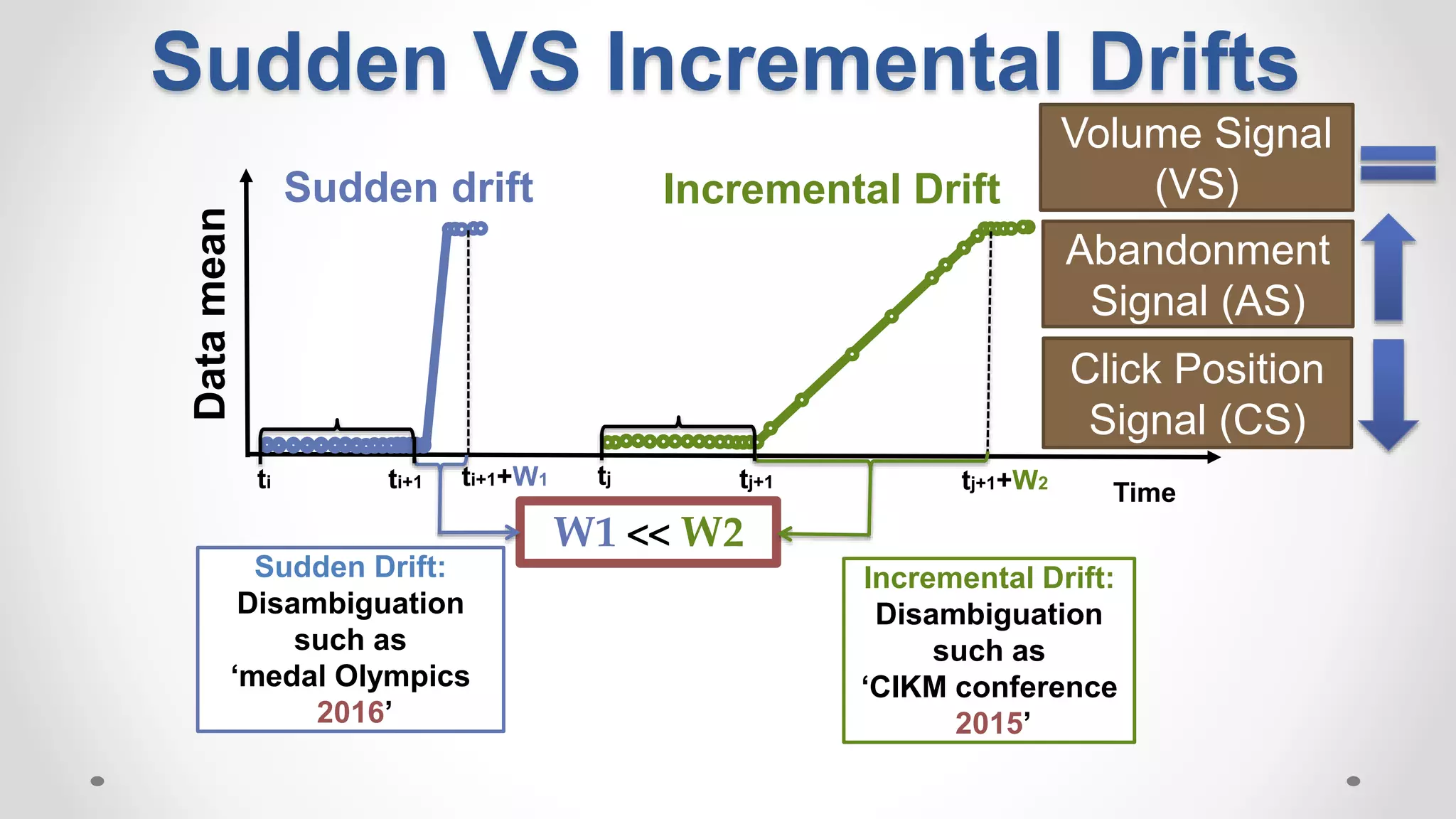

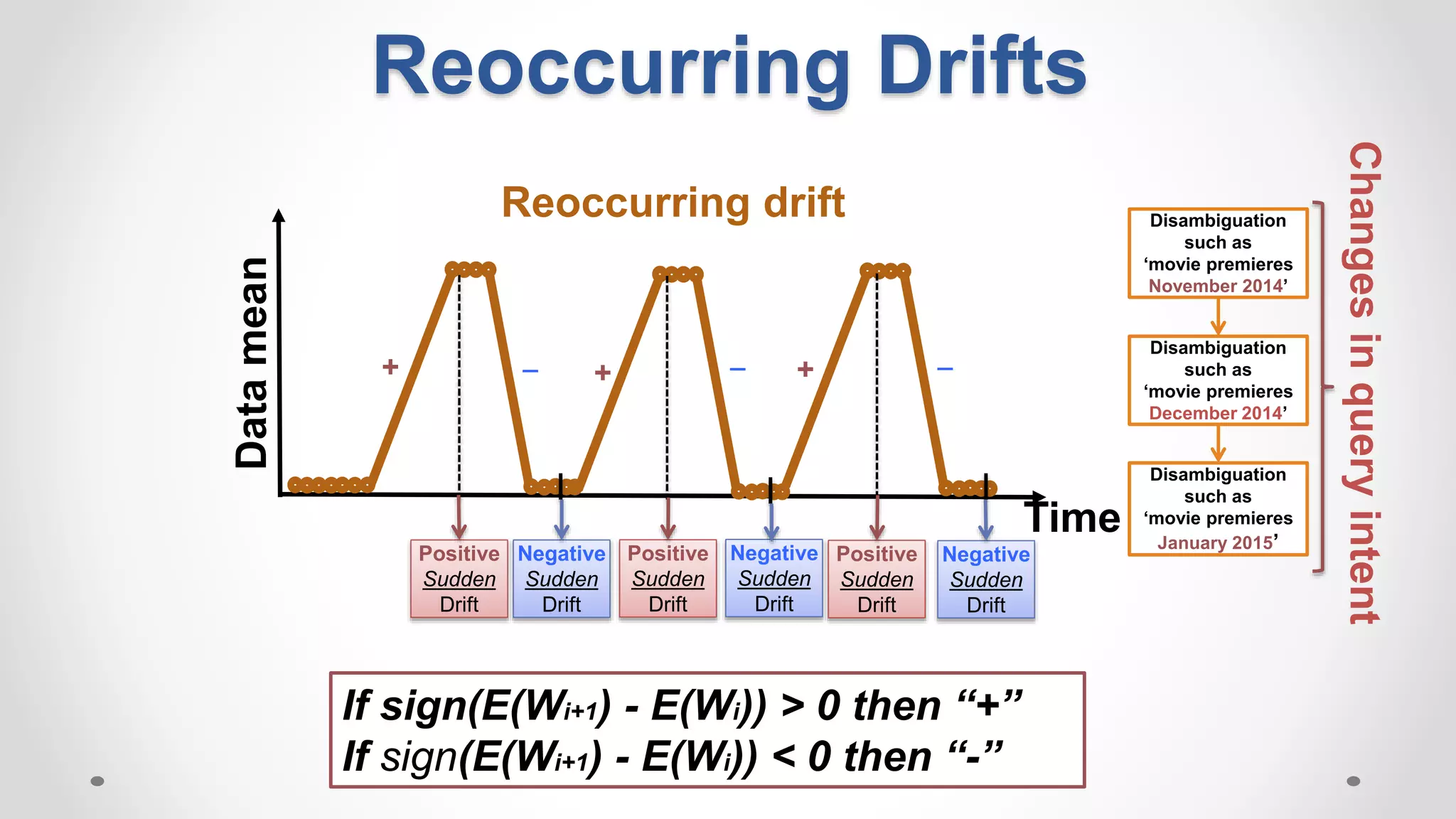

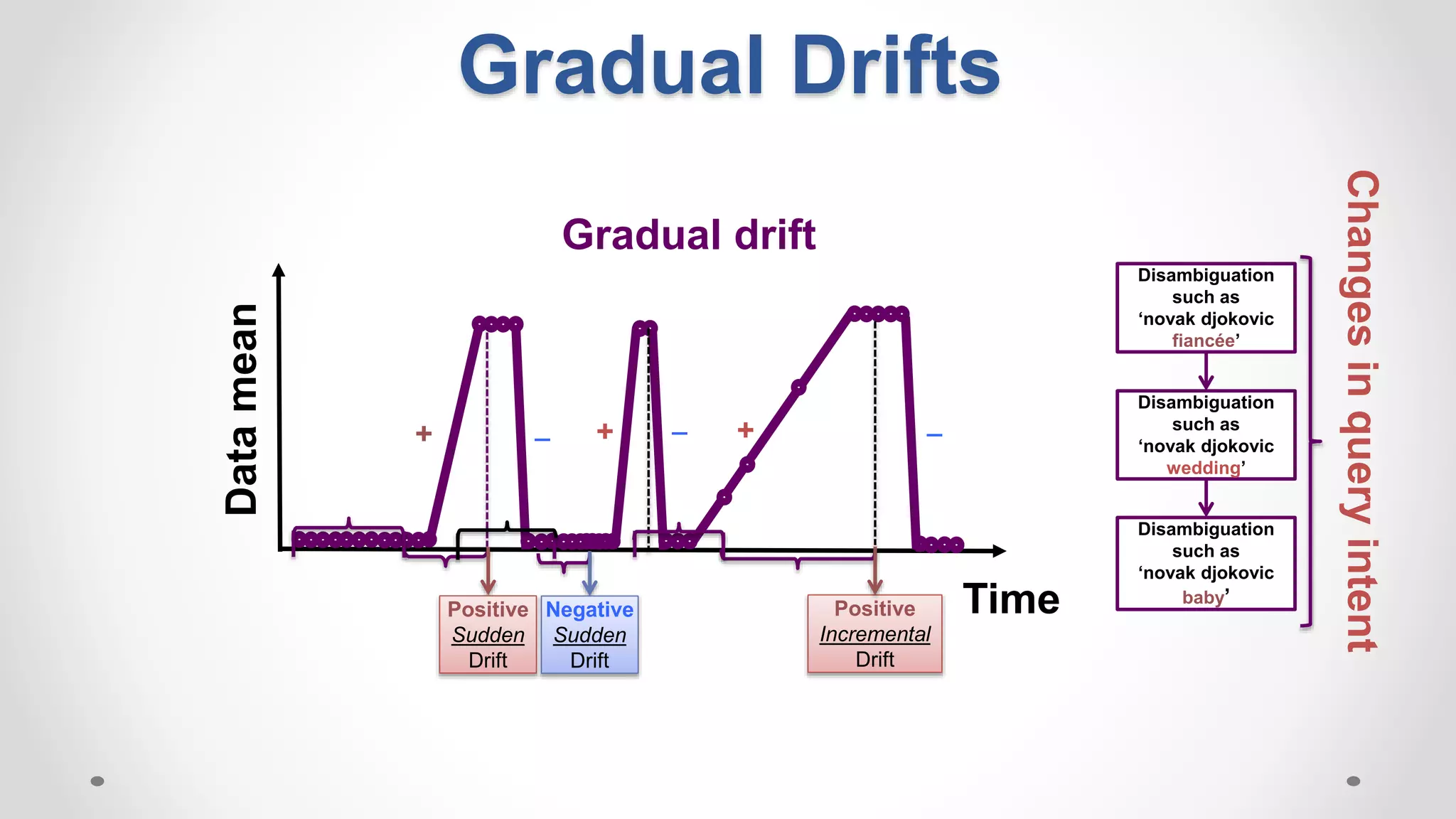

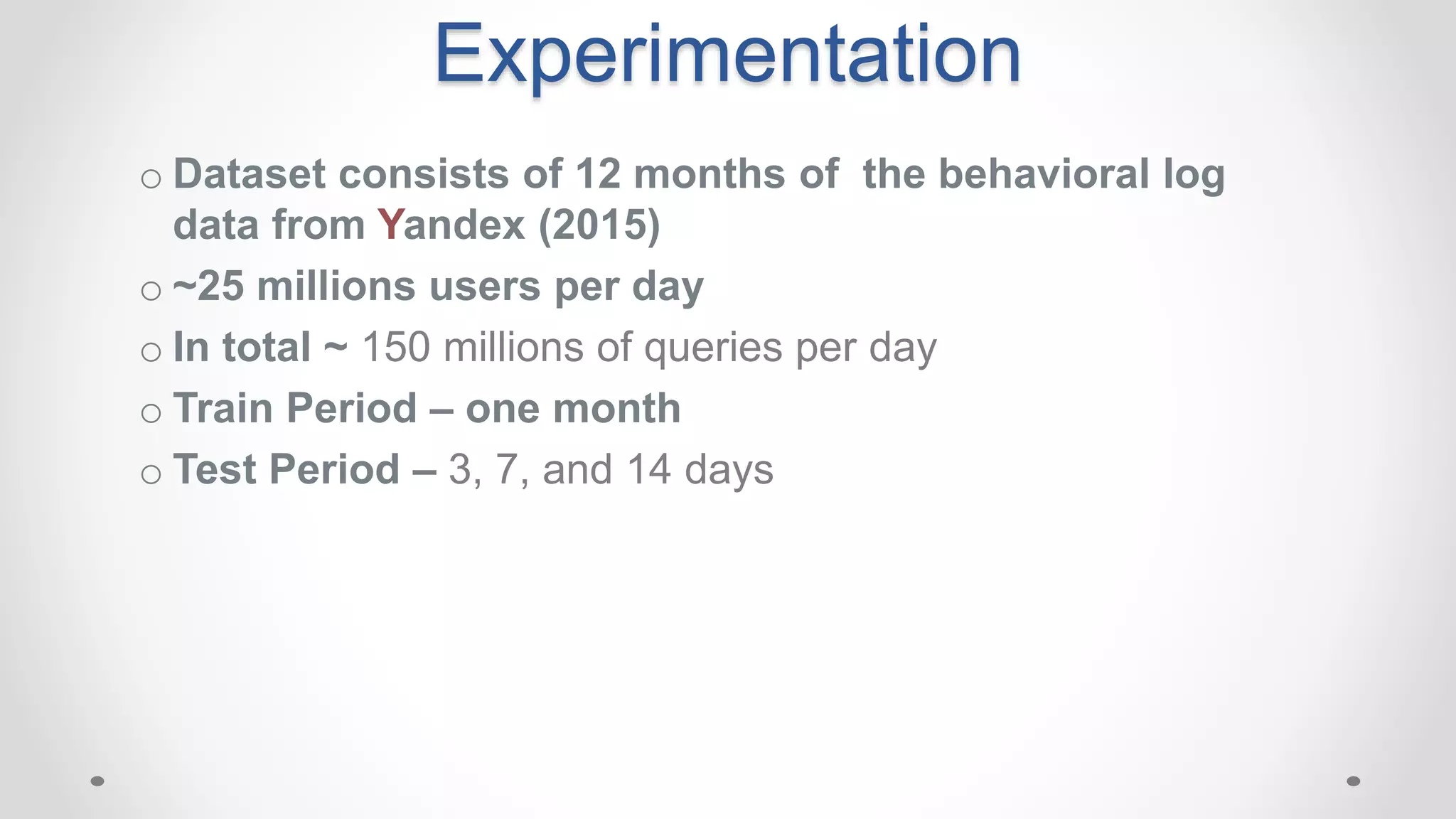

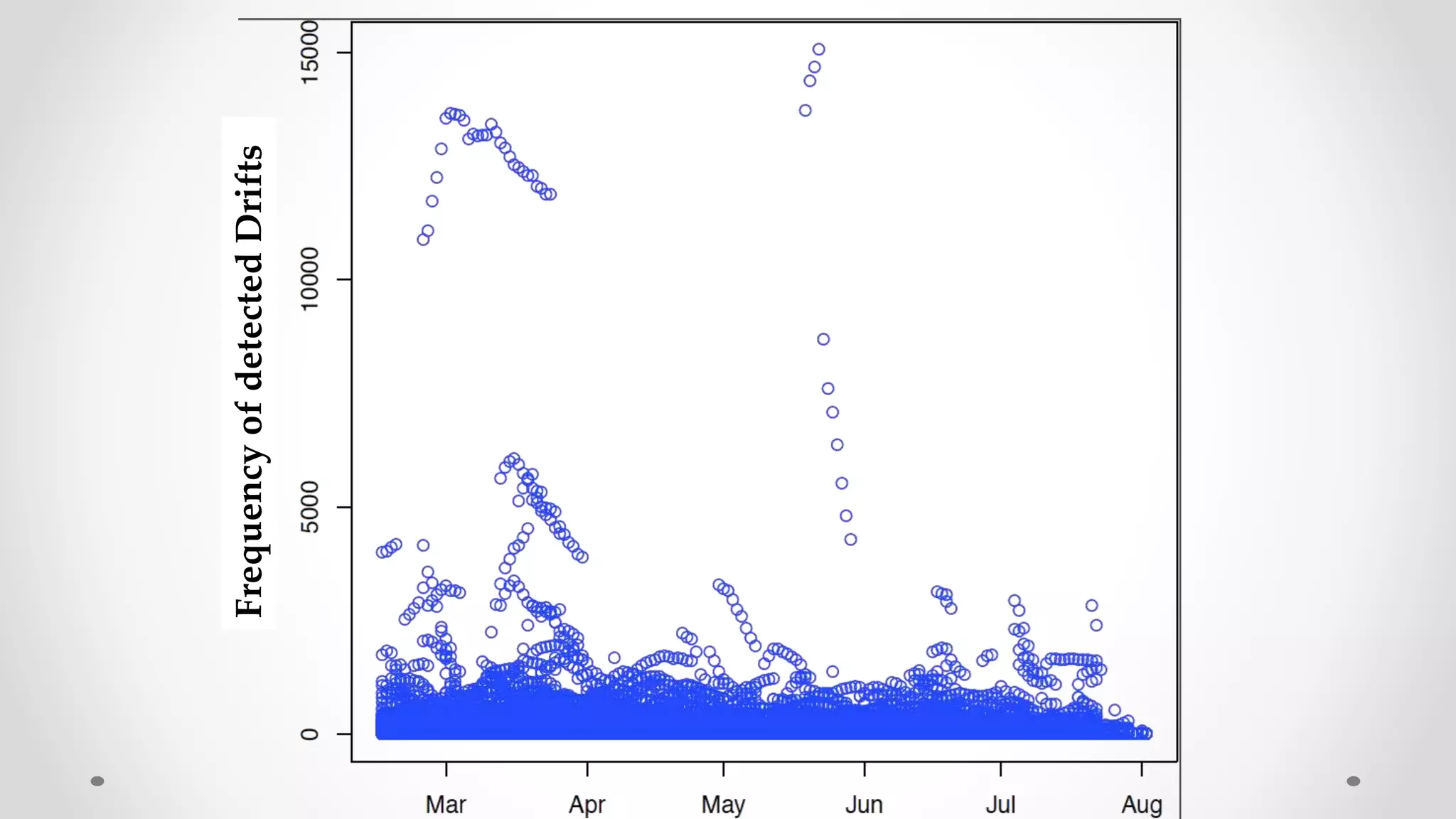

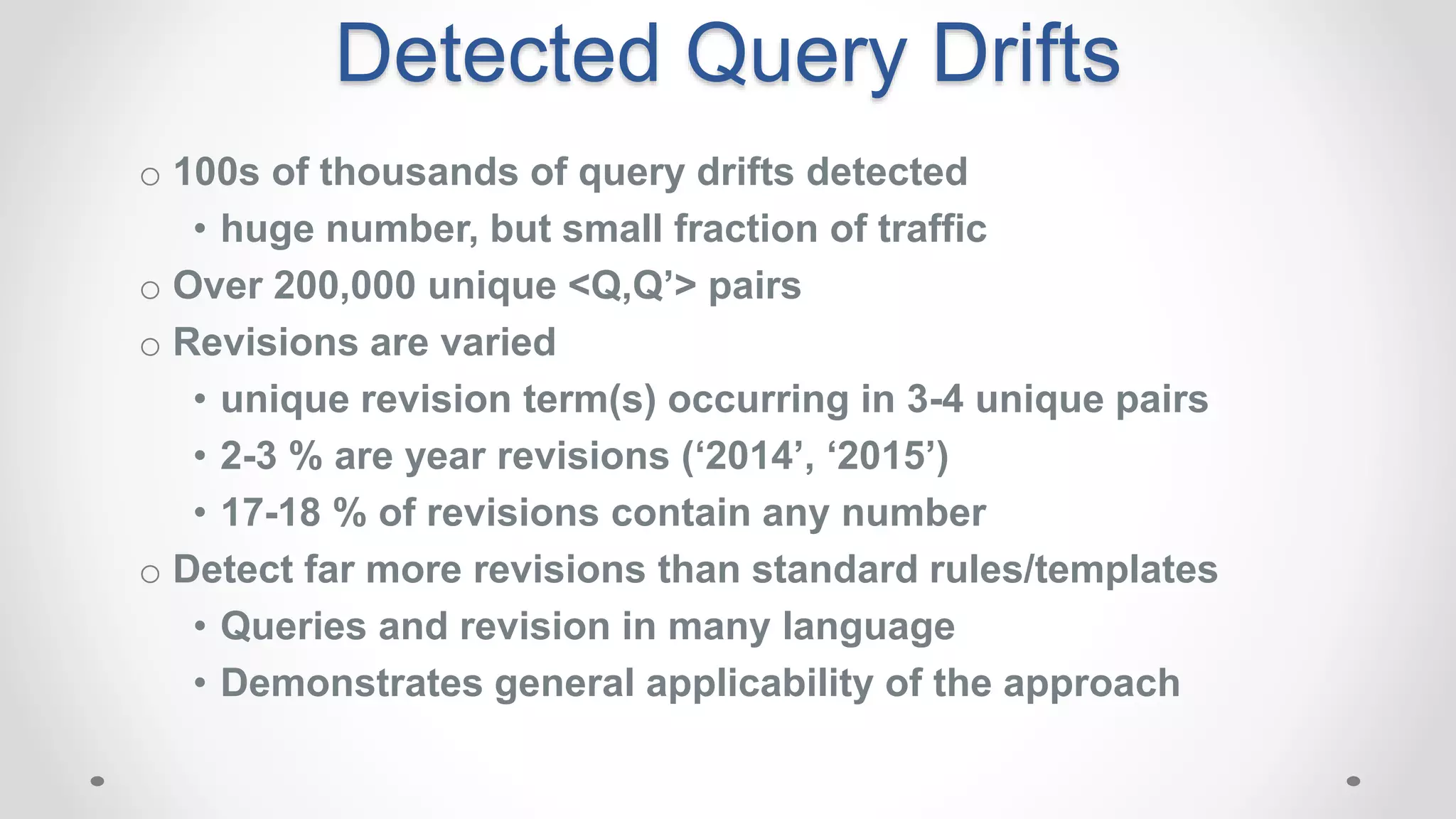

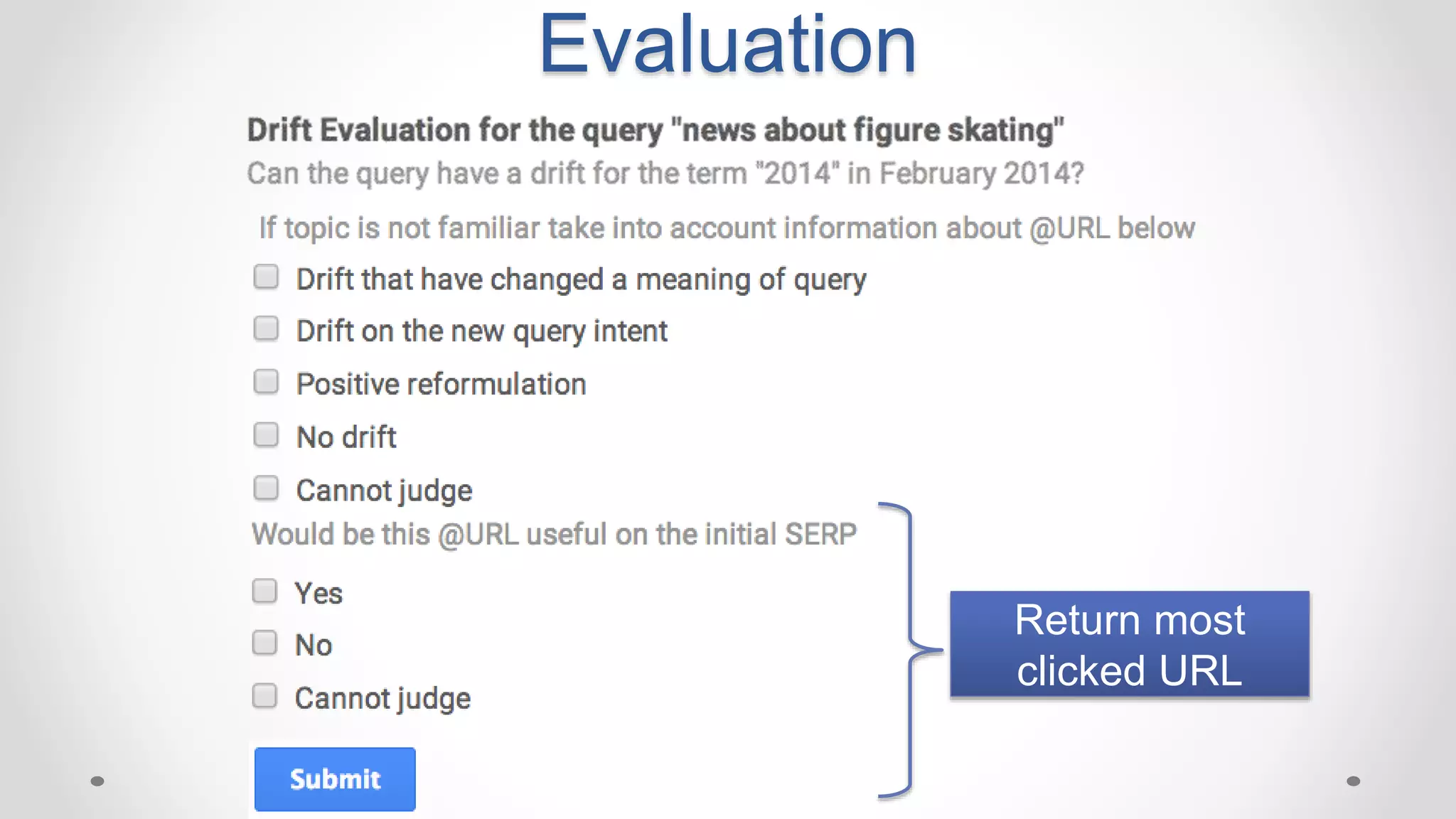

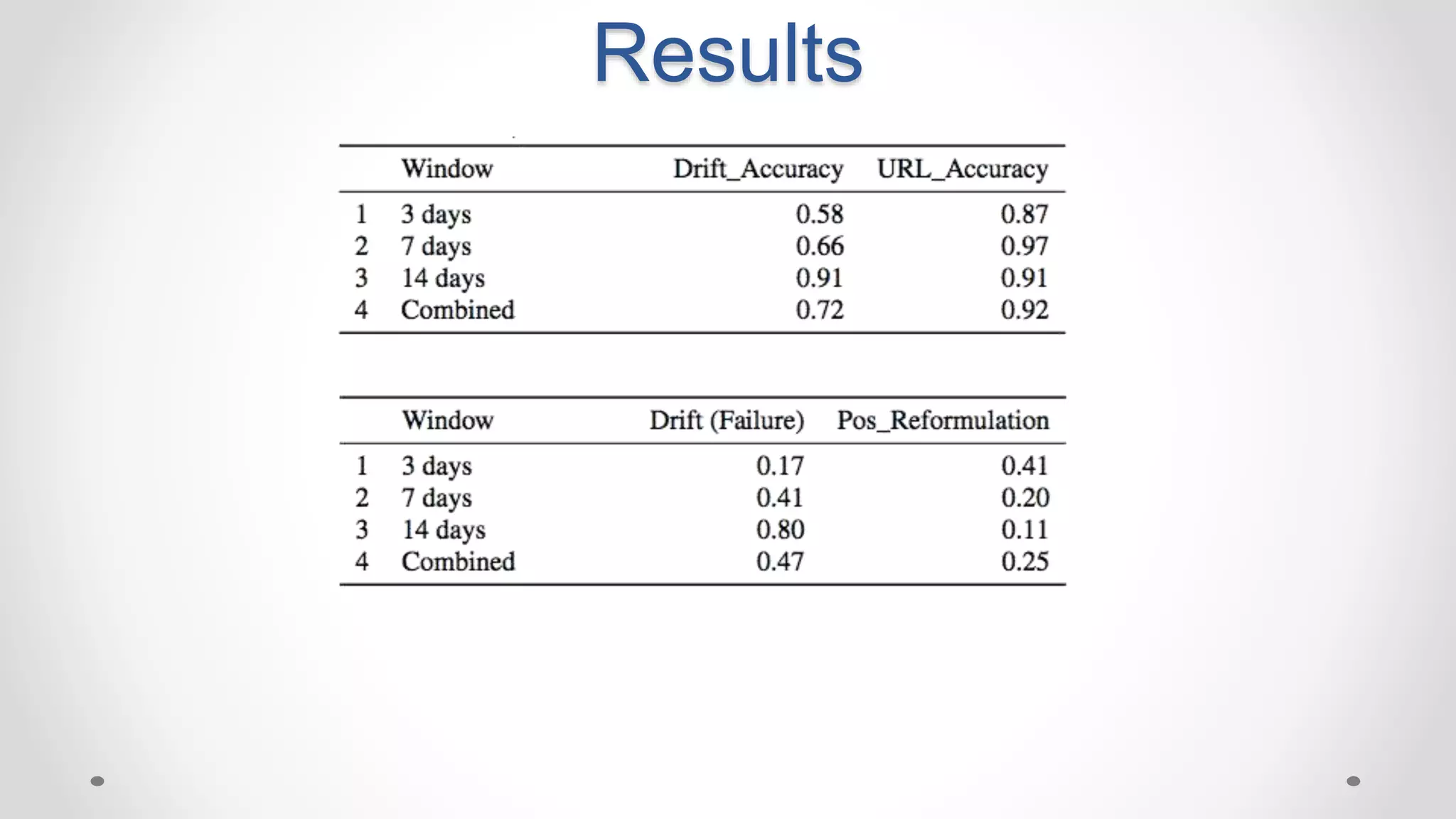

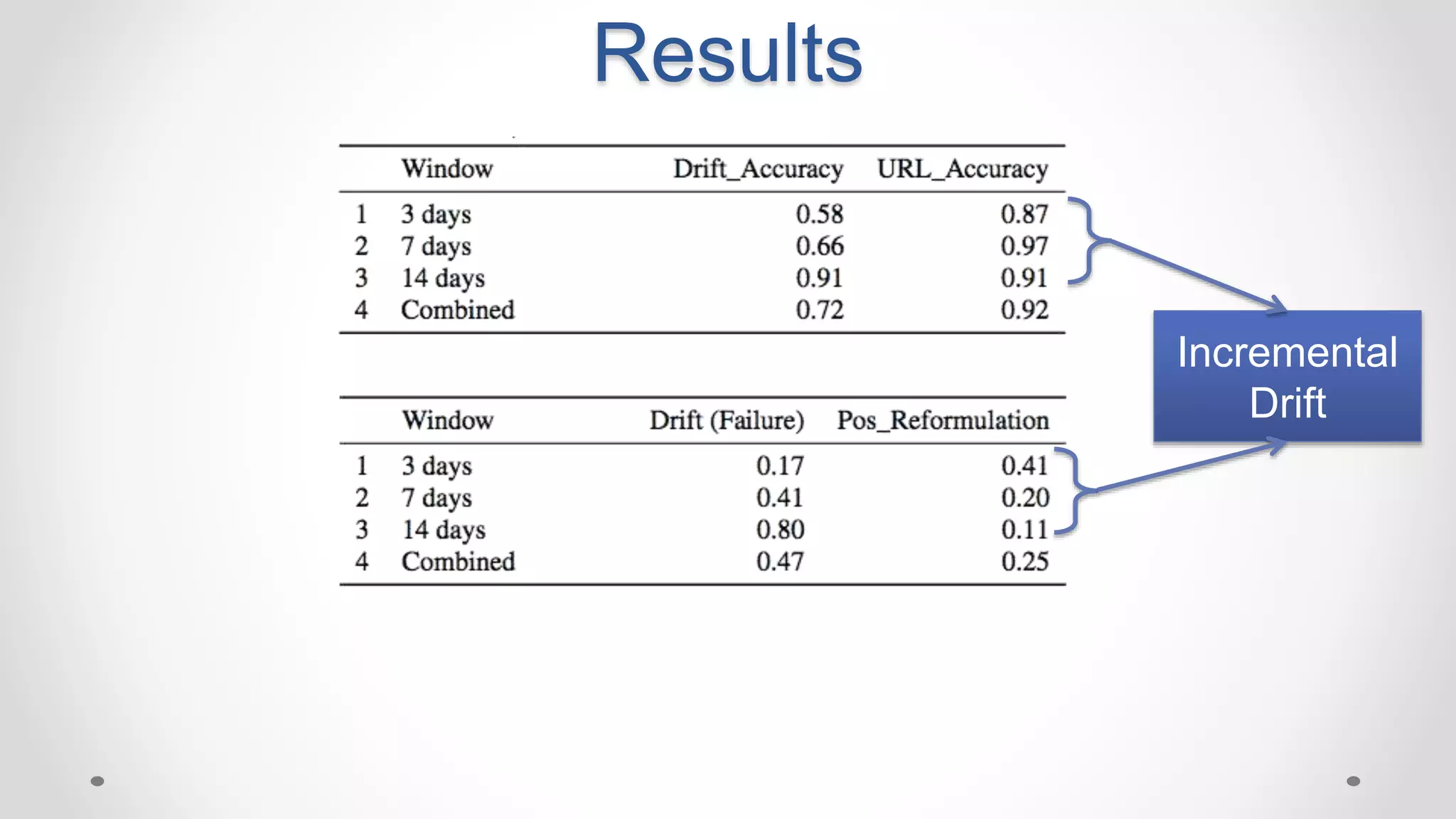

This document analyzes Search Engine Results Pages (SERPs) from the perspective of user satisfaction, focusing on failed SERPs and how to improve them. It introduces methods for detecting changes in user behavior that signal search failures, and it categorizes those changes by the type of drift in user intent: sudden, incremental, or reoccurring. The research also emphasizes developing an unsupervised approach that identifies failed SERPs by analyzing large-scale behavioral data.
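
To make the three drift types concrete, here is a minimal sketch of how they might be told apart in a single behavioral signal. It is not the method from the source, which the summary does not specify. Everything in it is an assumption made for illustration: the signal (a per-period query reformulation rate for one query), the function names `drifted_flags` and `classify_drift`, the baseline length, the z-score threshold, and the rule that a drift counts as "sudden" when its first jump covers at least half of the largest deviation.

```python
from statistics import mean, pstdev


def drifted_flags(signal, baseline_len=5, z_threshold=3.0):
    """Flag each post-baseline point whose z-score against the baseline exceeds the threshold."""
    baseline = signal[:baseline_len]
    mu = mean(baseline)
    sigma = pstdev(baseline) or 1e-9  # guard against a perfectly flat baseline
    return [abs(x - mu) / sigma > z_threshold for x in signal[baseline_len:]]


def classify_drift(signal, baseline_len=5, z_threshold=3.0):
    """Label a signal as 'none', 'sudden', 'incremental', or 'reoccurring' (illustrative heuristic)."""
    flags = drifted_flags(signal, baseline_len, z_threshold)
    if not any(flags):
        return "none"

    # Count separate runs of drifted points; more than one run means the
    # behavior left the baseline, came back, and left again.
    runs = sum(1 for i, f in enumerate(flags) if f and (i == 0 or not flags[i - 1]))
    if runs > 1:
        return "reoccurring"

    # Single run: compare the size of the first jump with the largest deviation.
    mu = mean(signal[:baseline_len])
    first = baseline_len + flags.index(True)
    step = abs(signal[first] - signal[first - 1])
    max_dev = max(abs(x - mu) for x in signal[baseline_len:])
    # Assumed rule: a jump covering at least half of the largest deviation is "sudden".
    return "sudden" if step >= 0.5 * max_dev else "incremental"


# Hypothetical reformulation rates; the first five periods form the baseline.
baseline = [0.10, 0.11, 0.09, 0.10, 0.10]
sudden = baseline + [0.10, 0.30, 0.31, 0.30, 0.29]
incremental = baseline + [0.11, 0.13, 0.16, 0.20, 0.25, 0.30]
reoccurring = baseline + [0.30, 0.31, 0.10, 0.10, 0.30, 0.29]

for name, series in [("sudden", sudden), ("incremental", incremental), ("reoccurring", reoccurring)]:
    print(name, "->", classify_drift(series))
```

The heuristic needs no labels, which fits the unsupervised setting described above. A real system would likely combine several behavioral signals and use a more robust change detector.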