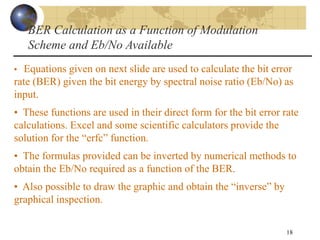

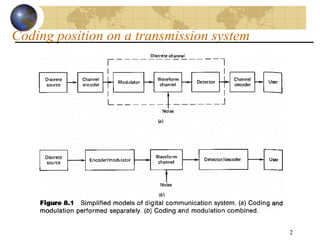

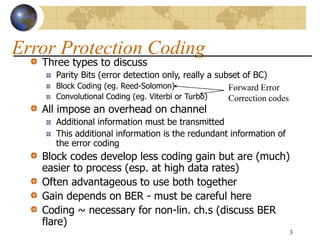

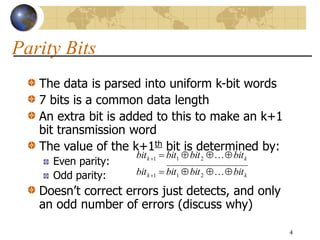

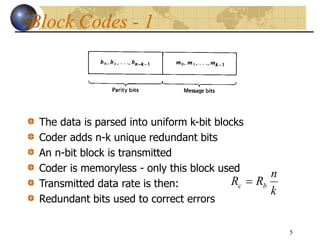

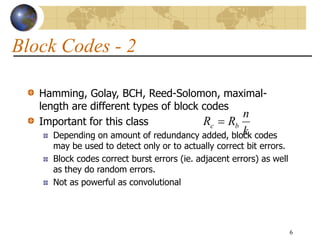

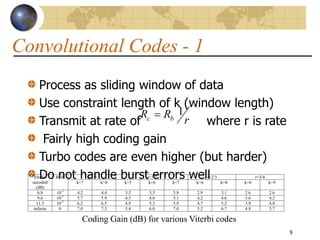

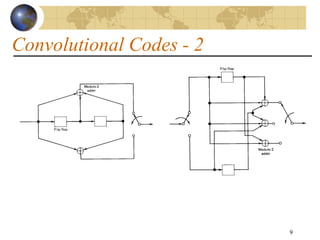

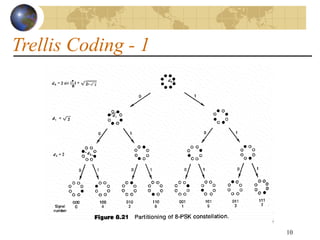

This document discusses error detection and correction techniques used in digital communications. It describes three main types of error coding: parity bits for error detection, block coding for error detection and correction (e.g. Reed-Solomon codes), and convolutional coding for error correction (typically decoded with the Viterbi algorithm). It covers parity bits, block codes (including cyclic codes), and convolutional codes. It also summarizes the formulas for coding gain, data rates, required signal-to-noise ratios, and bit error rate as a function of the modulation scheme and the ratio of energy per bit to noise spectral density (Eb/N0).
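The parity-bit idea can be illustrated with a minimal Python sketch. It assumes even parity over a list of 0/1 values, and the helper names (`even_parity_bit`, `add_parity`, `parity_ok`) are hypothetical. The sketch also shows the scheme's limitation: a single flipped bit is detected, but two flipped bits cancel out and go unnoticed.

```python
def even_parity_bit(bits: list[int]) -> int:
    """Return the bit that makes the total number of 1s even."""
    return sum(bits) % 2


def add_parity(bits: list[int]) -> list[int]:
    """Append an even-parity bit to a data word (hypothetical helper)."""
    return bits + [even_parity_bit(bits)]


def parity_ok(codeword: list[int]) -> bool:
    """A received word passes the check if it still holds an even number of 1s."""
    return sum(codeword) % 2 == 0


if __name__ == "__main__":
    word = add_parity([1, 0, 1, 1, 0, 0, 1])  # four 1s, so the parity bit is 0
    print(word, parity_ok(word))              # passes the check

    single = word.copy()
    single[2] ^= 1                            # one bit flipped by the channel
    print(single, parity_ok(single))          # error detected

    double = word.copy()
    double[0] ^= 1
    double[1] ^= 1                            # two bits flipped
    print(double, parity_ok(double))          # error NOT detected
```

Parity detects any odd number of bit errors but cannot locate or correct them, which is why block and convolutional codes are needed for correction.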

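![Slide 15: Summary of Digital Communications - 2](https://image.slidesharecdn.com/errorcorrection-231204153105-244c932f/85/error_correction-ppt-15-320.jpg)

Summary of Digital Communications - 2

• Bits per Symbol: $B_s = \log_2 M$

• Symbol Rate [symbol/second]: $R_s = \frac{B_W}{1+\alpha}$

• Gross Bit Rate [bps]: $R_G = B_s R_s = \frac{B_W}{1+\alpha}\log_2 M$

• Net Data Rate [bps]: $R_i = R_G\,(1 - Ov) = \frac{B_W}{1+\alpha}\log_2 M\,(1 - Ov)$

where $M$ is the number of symbols in the modulation alphabet, $B_W$ the bandwidth [Hz], $\alpha$ the filter roll-off factor, and $Ov$ the fraction of the gross bit rate spent on overhead.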
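The rate formulas above can be chained in a short Python sketch. The function name `link_rates` is hypothetical, and the `rolloff` argument stands for the roll-off factor α, a symbol assumed here because it did not survive extraction; `overhead` is $Ov$ given as a fraction between 0 and 1.

```python
import math


def link_rates(bandwidth_hz: float, m: int, rolloff: float, overhead: float) -> dict[str, float]:
    """Evaluate the slide's rate formulas (hypothetical helper).

    bandwidth_hz -- B_W, bandwidth [Hz]
    m            -- M, number of symbols in the modulation alphabet
    rolloff      -- alpha, filter roll-off factor (assumed symbol)
    overhead     -- Ov, fraction of the gross rate spent on overhead
    """
    bits_per_symbol = math.log2(m)               # B_s = log2 M
    symbol_rate = bandwidth_hz / (1 + rolloff)   # R_s = B_W / (1 + alpha)
    gross_rate = bits_per_symbol * symbol_rate   # R_G = B_s * R_s
    net_rate = gross_rate * (1 - overhead)       # R_i = R_G * (1 - Ov)
    return {"B_s": bits_per_symbol, "R_s": symbol_rate,
            "R_G": gross_rate, "R_i": net_rate}


if __name__ == "__main__":
    # QPSK (M = 4) in 1 MHz with alpha = 0.25 and 20 % overhead
    for name, value in link_rates(1e6, 4, 0.25, 0.2).items():
        print(f"{name}: {value:,.1f}")
```

For QPSK in 1 MHz with α = 0.25 and 20 % overhead, this gives $B_s = 2$, $R_s = 800$ ksymbol/s, $R_G = 1.6$ Mbps and $R_i = 1.28$ Mbps.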

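![Slide 16: Summary of Digital Communications - 3](https://image.slidesharecdn.com/errorcorrection-231204153105-244c932f/85/error_correction-ppt-16-320.jpg)

Summary of Digital Communications - 3

• Required Eb/No (assuming no coding) [dimensionless]: a function of the modulation scheme and the required bit error rate (see table later):

$\left(\frac{E_b}{N_0}\right)_{\mathrm{Req,\,from\ theory}} = f(\text{Modulation Scheme},\ \text{BER})$ (Table 1)

• Required Eb/No (using coding gain) [dimensionless]:

$\left(\frac{E_b}{N_0}\right)_{\mathrm{Req}} = \frac{1}{G_c}\left(\frac{E_b}{N_0}\right)_{\mathrm{Req,\,from\ theory}}$

where $G_c$ is the coding gain in linear scale.

• Required C/N [dimensionless]:

$\left(\frac{C}{N}\right)_{\mathrm{Req}} = \left(\frac{E_b}{N_0}\right)_{\mathrm{Req}} \cdot \frac{R_G}{B_W}$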
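The three steps above can be followed numerically in a Python sketch. Because the values of Table 1 are not reproduced here, the sketch assumes coherent BPSK in AWGN, whose theoretical bit error rate is $\mathrm{BER} = \tfrac{1}{2}\,\mathrm{erfc}\!\left(\sqrt{E_b/N_0}\right)$, and inverts it by bisection; other modulation schemes would need their own BER expression. All function names are hypothetical.

```python
import math


def bpsk_ber(ebn0_linear: float) -> float:
    """Theoretical BER of coherent BPSK in AWGN (assumed stand-in for Table 1)."""
    return 0.5 * math.erfc(math.sqrt(ebn0_linear))


def required_ebn0_bpsk(target_ber: float) -> float:
    """Invert bpsk_ber by bisection; BER decreases monotonically with Eb/N0."""
    lo, hi = 1e-6, 100.0          # linear Eb/N0 search range (-60 dB to +20 dB)
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if bpsk_ber(mid) > target_ber:
            lo = mid              # still too many errors: need more energy per bit
        else:
            hi = mid
    return hi                     # smallest Eb/N0 found that meets the target


def db(x: float) -> float:
    """Linear power ratio to decibels."""
    return 10 * math.log10(x)


def required_cn(ebn0_theory: float, coding_gain_db: float,
                gross_rate_bps: float, bandwidth_hz: float) -> float:
    """(C/N)_Req in linear scale from the theoretical Eb/N0 and the coding gain."""
    gc = 10 ** (coding_gain_db / 10)          # G_c converted to linear scale
    ebn0_req = ebn0_theory / gc               # (Eb/N0)_Req = (Eb/N0)_theory / G_c
    return ebn0_req * gross_rate_bps / bandwidth_hz   # (C/N)_Req = (Eb/N0)_Req * R_G / B_W


if __name__ == "__main__":
    ebn0 = required_ebn0_bpsk(1e-6)
    print(f"BPSK, BER 1e-6: Eb/N0 = {db(ebn0):.2f} dB")
    cn = required_cn(ebn0, coding_gain_db=3.0, gross_rate_bps=1e6, bandwidth_hz=1e6)
    print(f"with 3 dB coding gain: C/N = {db(cn):.2f} dB")
```

For BPSK at a BER of $10^{-6}$ the bisection lands near 10.5 dB, and a 3 dB coding gain lowers the requirement by exactly 3 dB, since dividing by $G_c$ is a subtraction in decibels.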

![Slide 17: Summary of Digital Communications - 4](https://image.slidesharecdn.com/errorcorrection-231204153105-244c932f/85/error_correction-ppt-17-320.jpg)

Summary of Digital Communications - 4

• Required Signal Strength [Watts]:

$C_{\mathrm{Req}} = \left(\frac{C}{N}\right)_{\mathrm{Req}} N = \left(\frac{C}{N}\right)_{\mathrm{Req}} k\,T_s\,B_W = \left(\frac{C}{N}\right)_{\mathrm{Req}} k\,T_0\,F\,B_W$

Where k = Boltzmann constant = 1.38e-23 J/K

$T_s$ = system noise temperature

$T_0$ = ambient temperature (usually 290 K)

F = system noise figure in linear scale (not in dB)
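The required signal strength can be computed with a small Python sketch that uses $k = 1.38 \times 10^{-23}$ J/K as quoted above and $T_s = T_0 F$. The function names are hypothetical, and the noise figure is accepted in dB and converted to the linear scale the formula requires.

```python
import math

BOLTZMANN = 1.38e-23  # J/K, the value quoted on the slide


def required_signal_power(cn_req_linear: float, bandwidth_hz: float,
                          noise_figure_db: float, t0_kelvin: float = 290.0) -> float:
    """C_Req [W] = (C/N)_Req * k * T_0 * F * B_W (hypothetical helper)."""
    f_linear = 10 ** (noise_figure_db / 10)       # F must be linear, not dB
    t_system = t0_kelvin * f_linear               # T_s = T_0 * F
    noise_w = BOLTZMANN * t_system * bandwidth_hz # N = k * T_s * B_W
    return cn_req_linear * noise_w                # C_Req = (C/N)_Req * N


def dbm(p_watts: float) -> float:
    """Convert watts to dBm."""
    return 10 * math.log10(p_watts / 1e-3)


if __name__ == "__main__":
    # C/N of 10 dB, 1 MHz bandwidth, 3 dB noise figure
    c_req = required_signal_power(10 ** (10 / 10), 1e6, 3.0)
    print(f"C_Req = {c_req:.3e} W = {dbm(c_req):.1f} dBm")
```

A quick sanity check is the noise density at 290 K: $k T_0 \approx 4.0 \times 10^{-21}$ W/Hz, i.e. about −174 dBm/Hz. With a 10 dB C/N, 1 MHz of bandwidth and a 3 dB noise figure, the required carrier is roughly −101 dBm.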