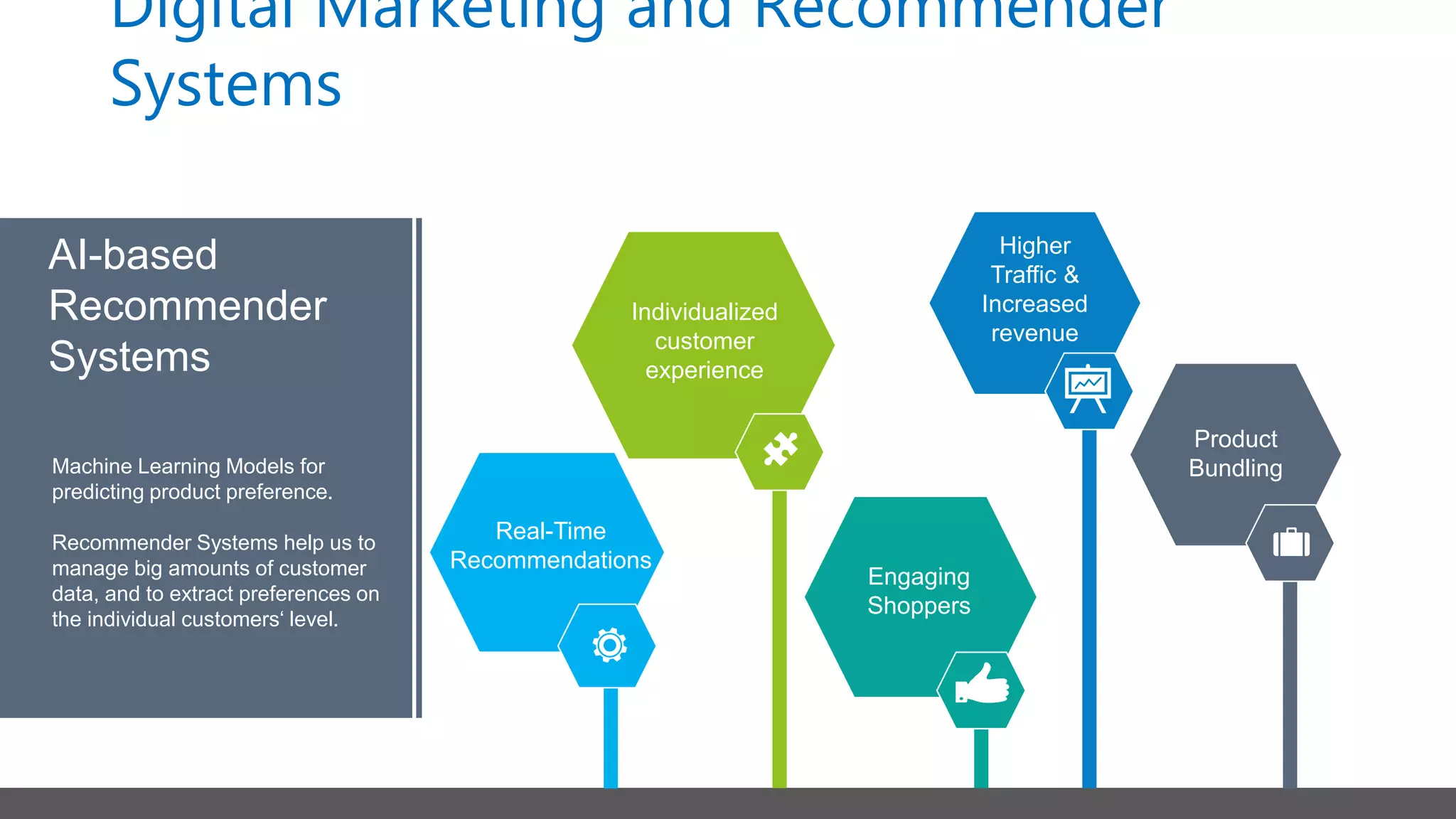

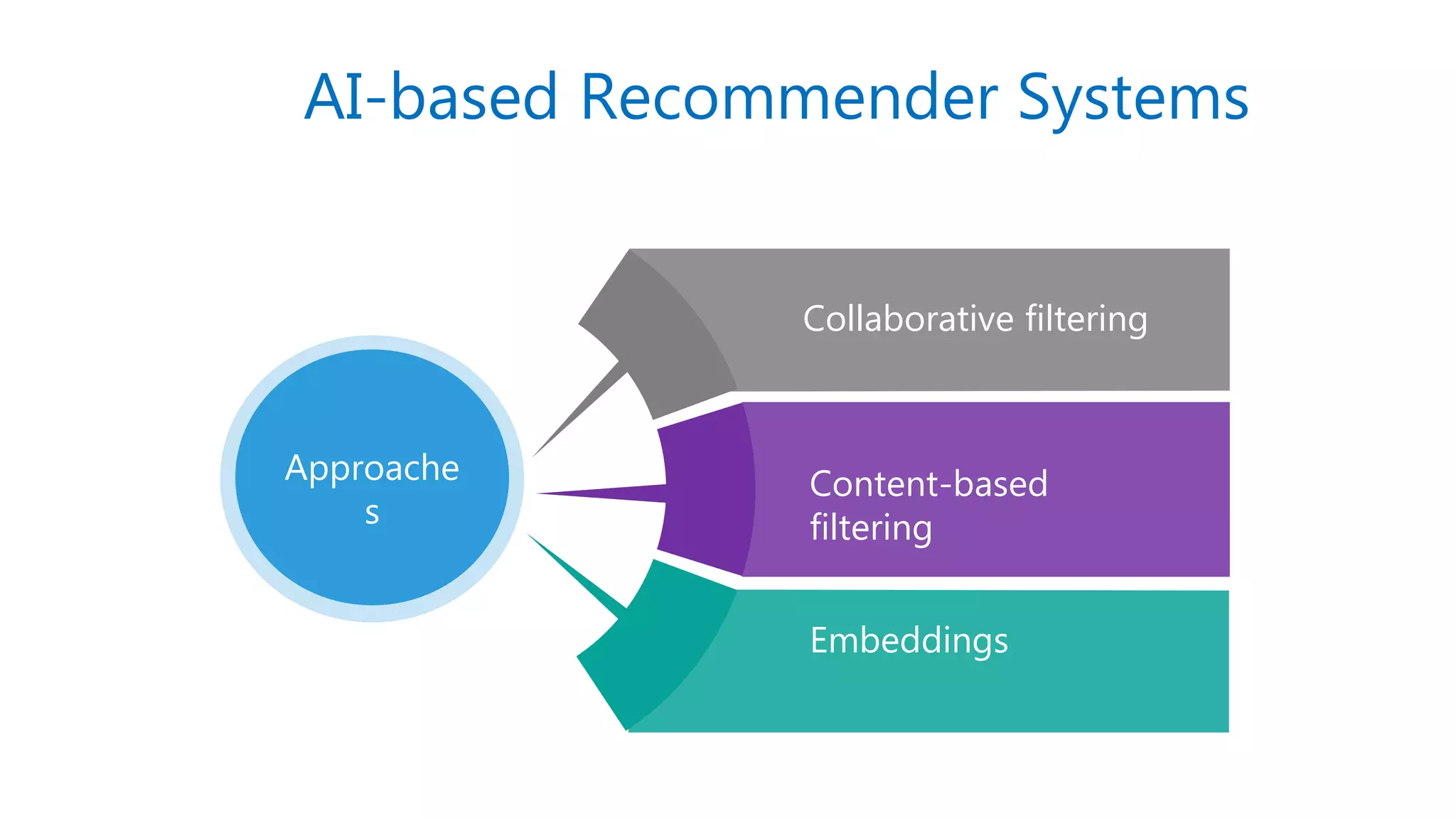

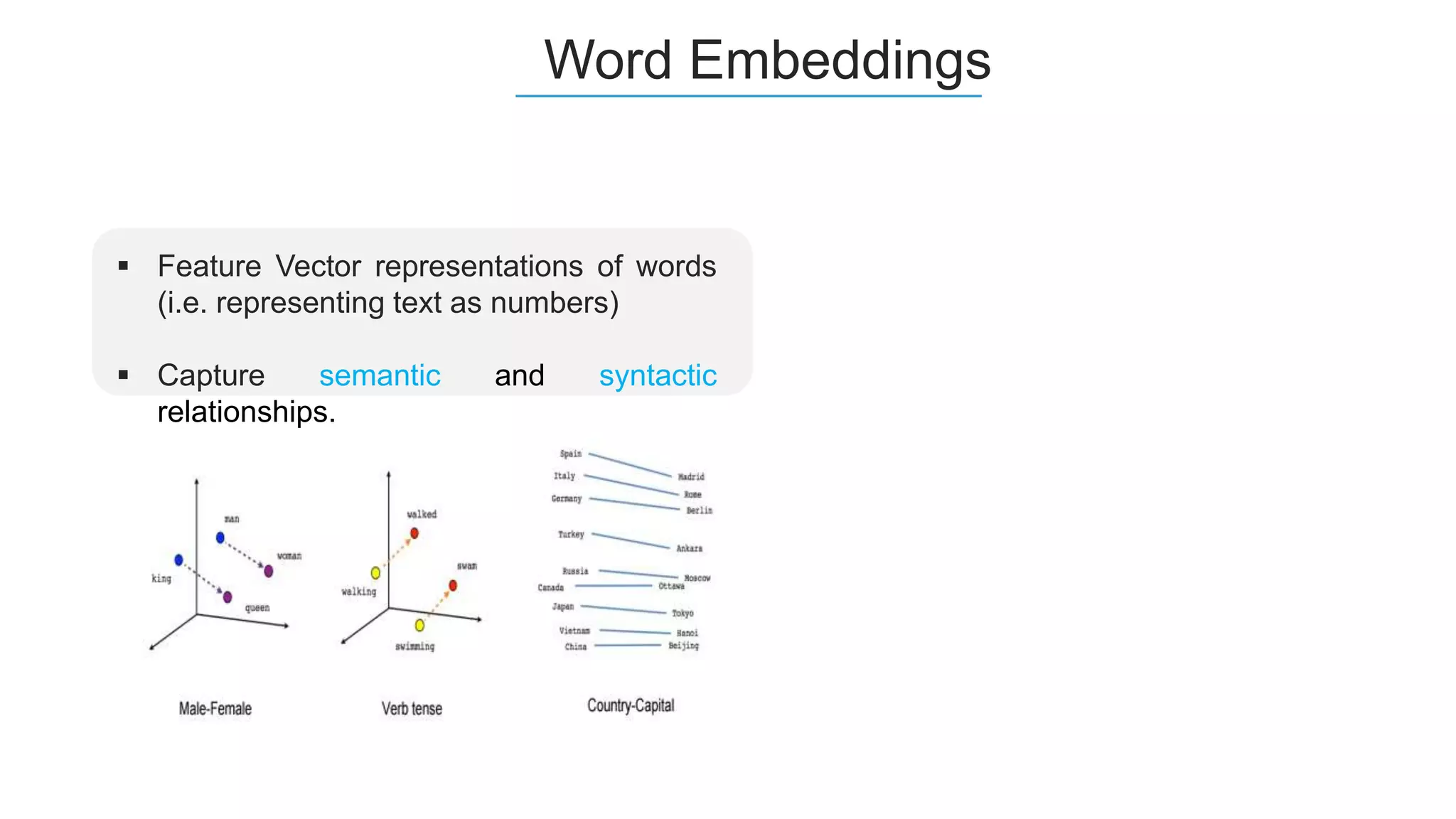

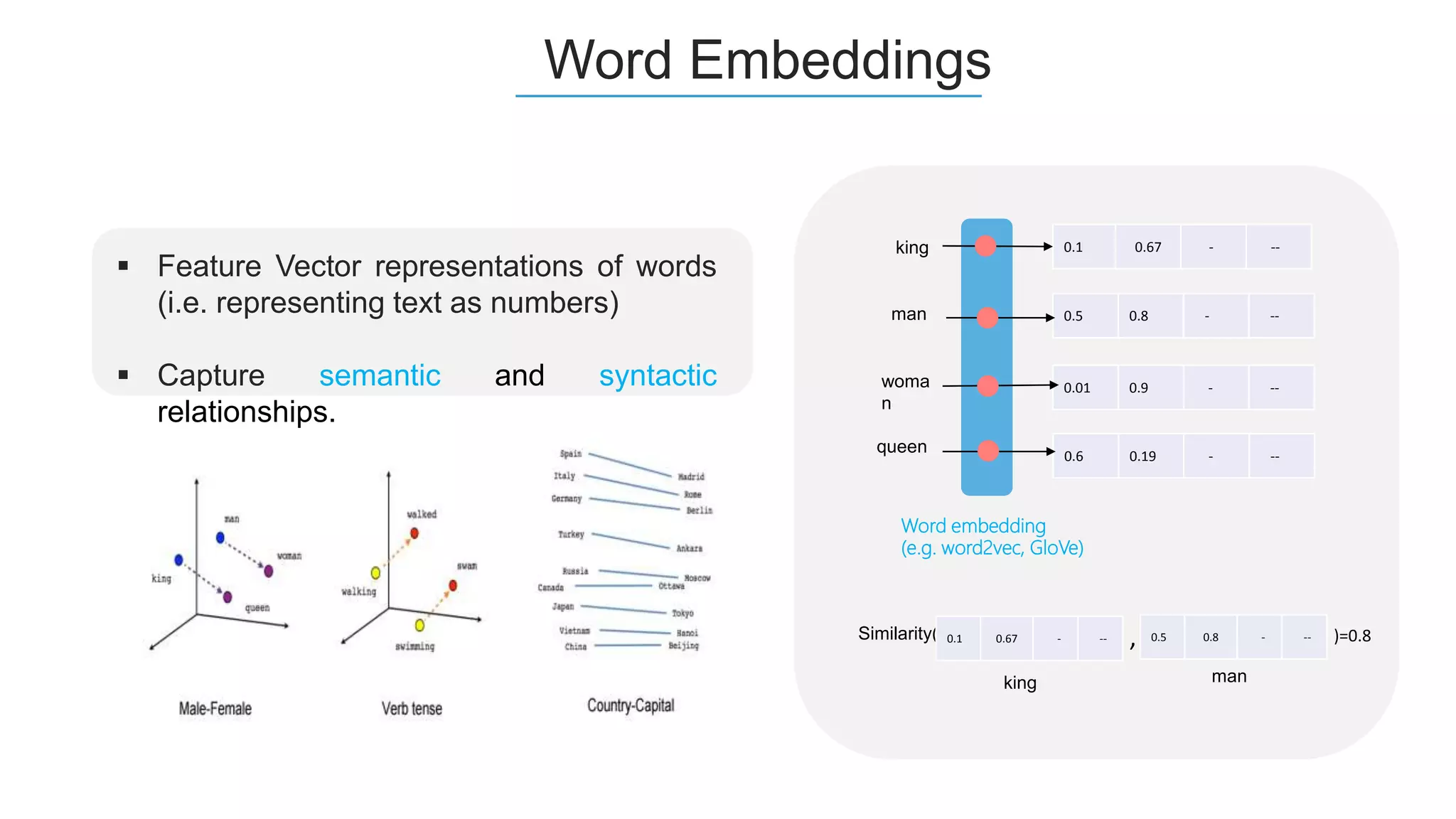

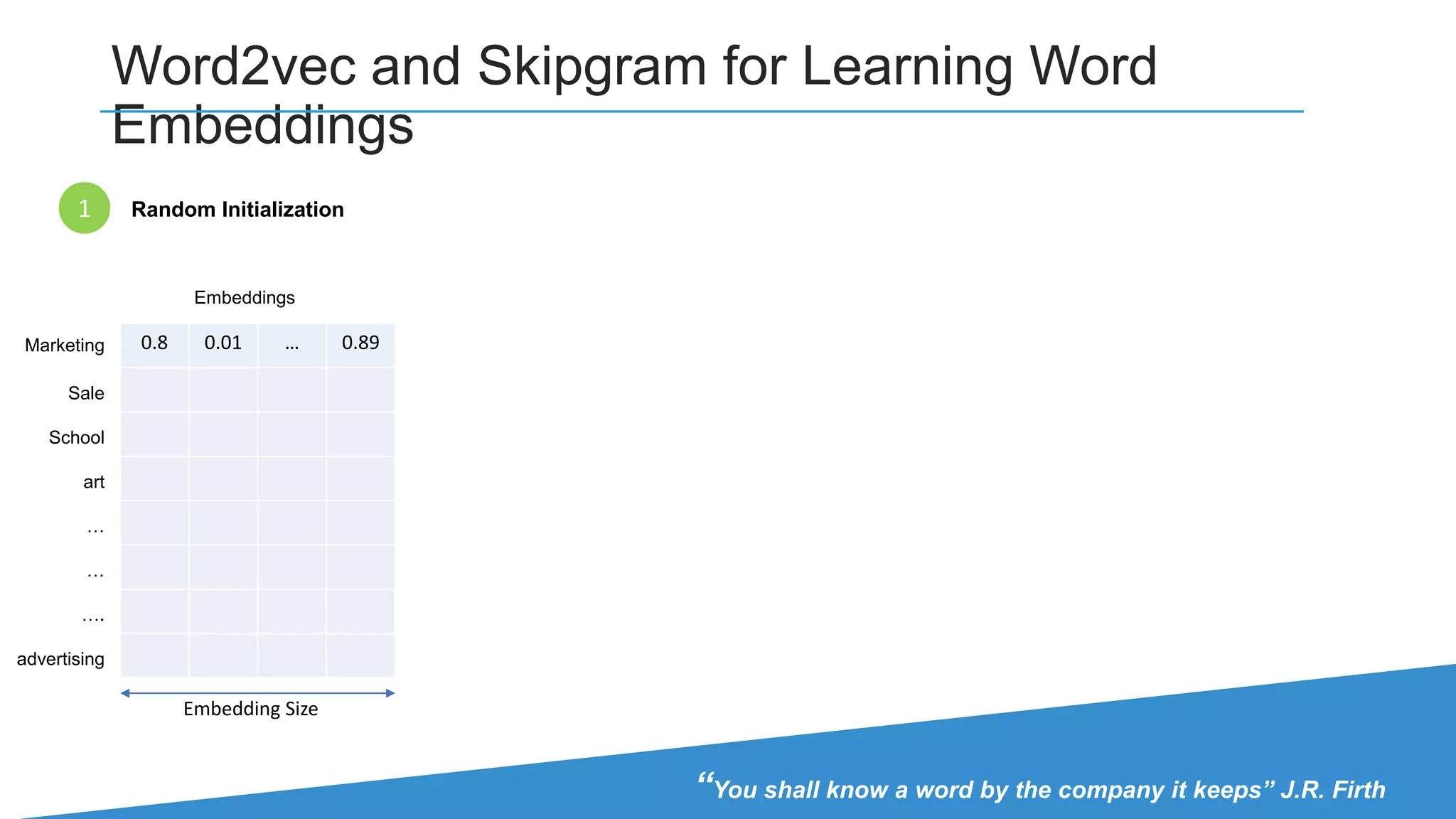

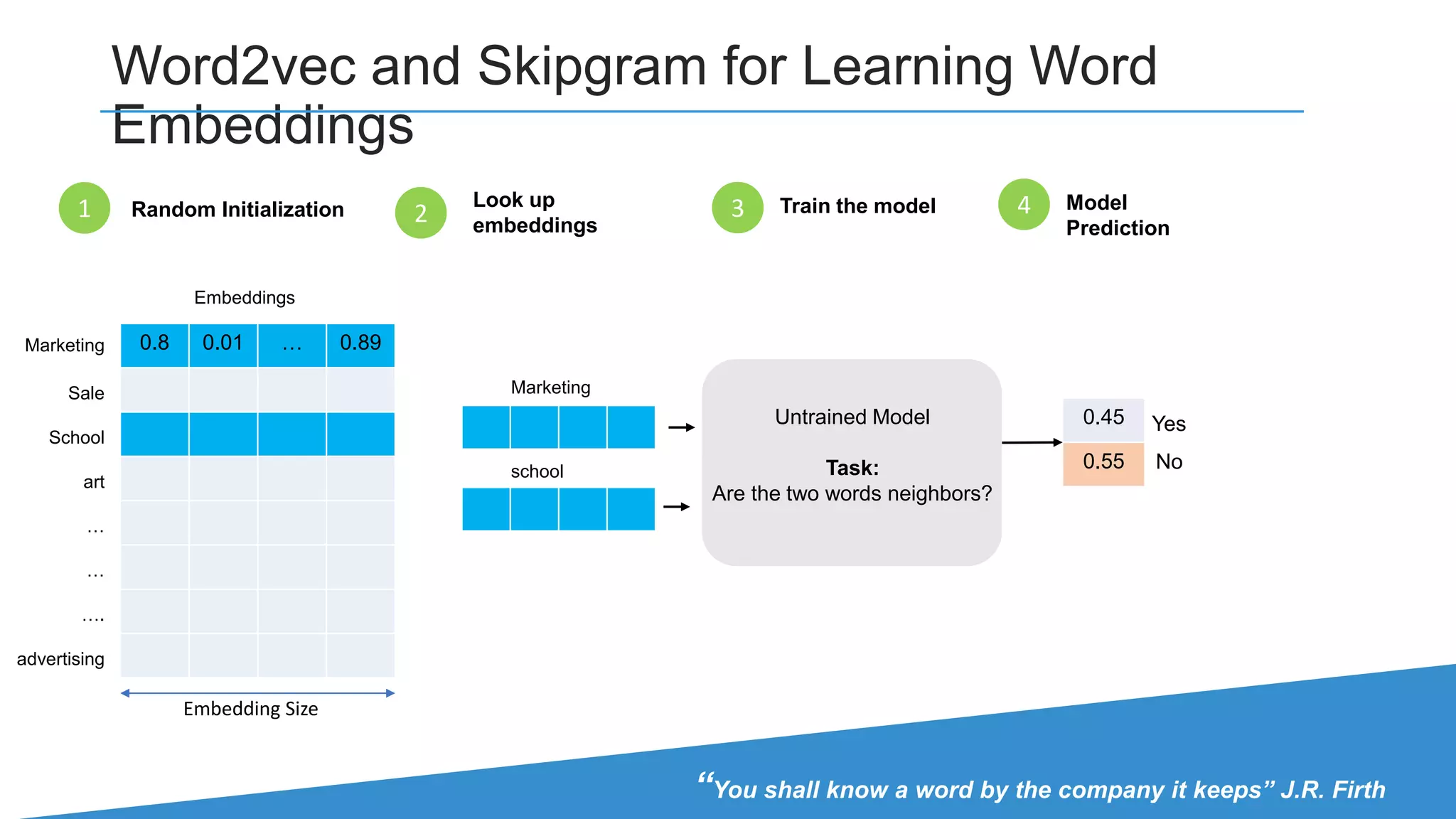

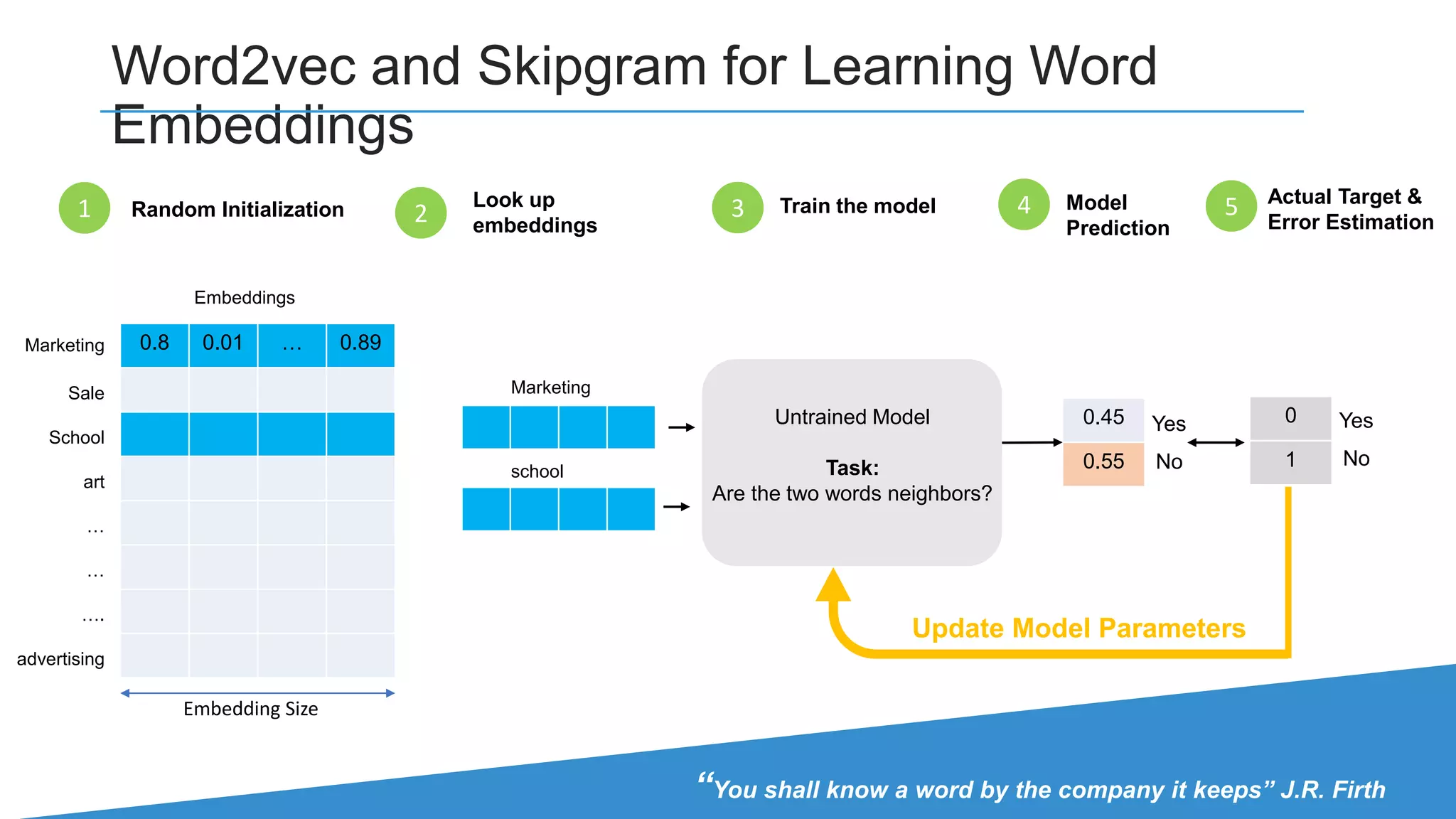

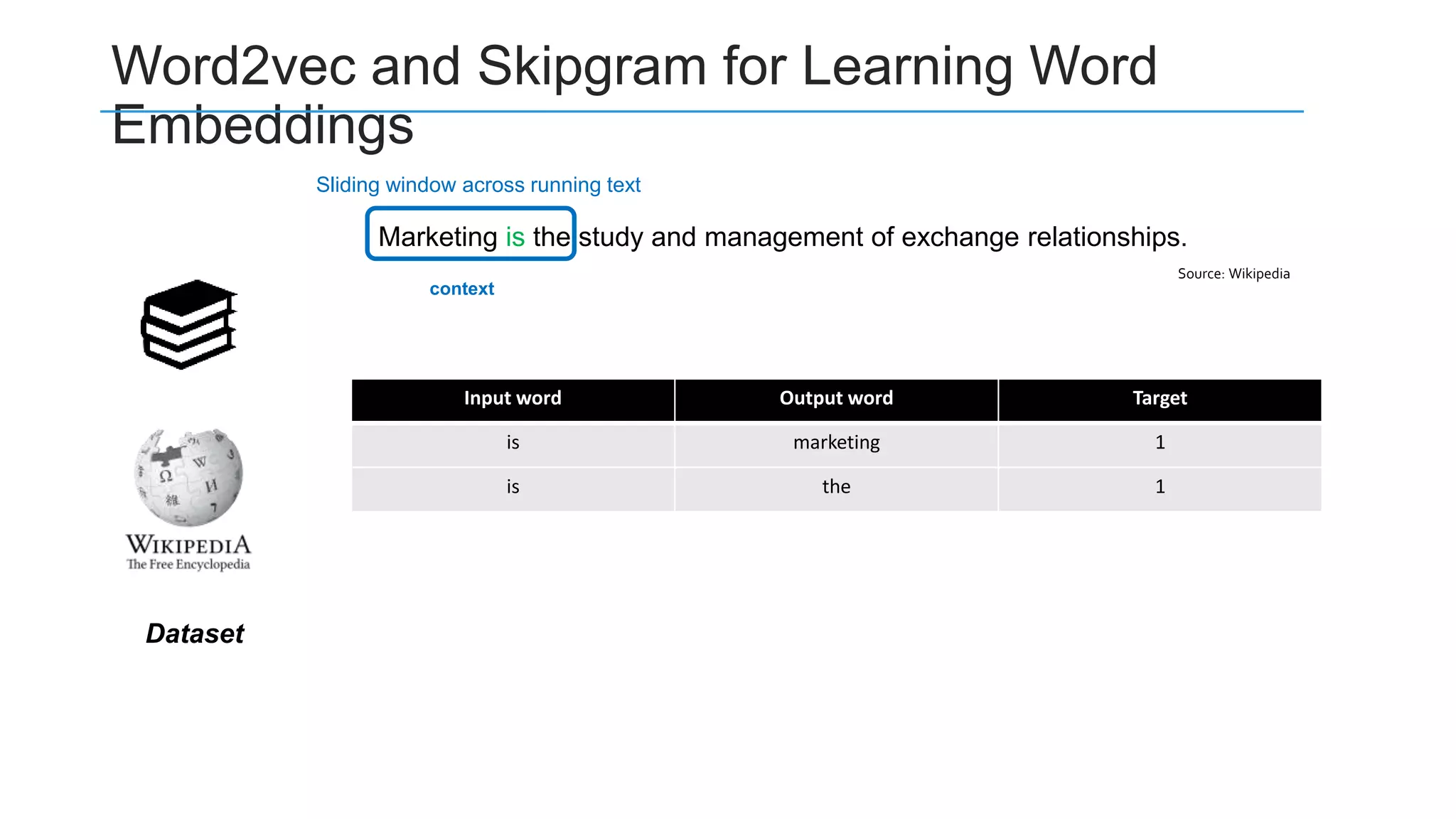

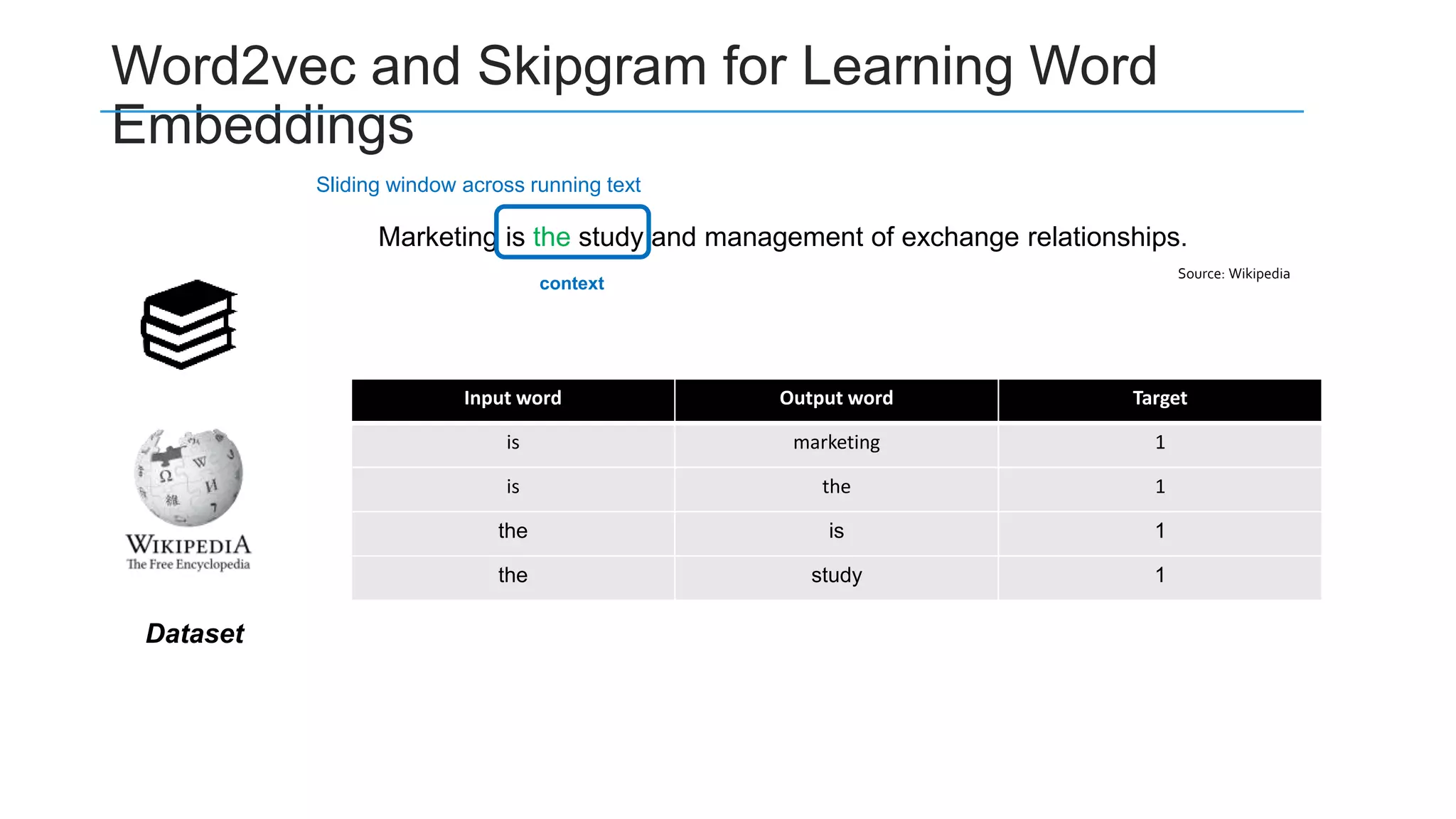

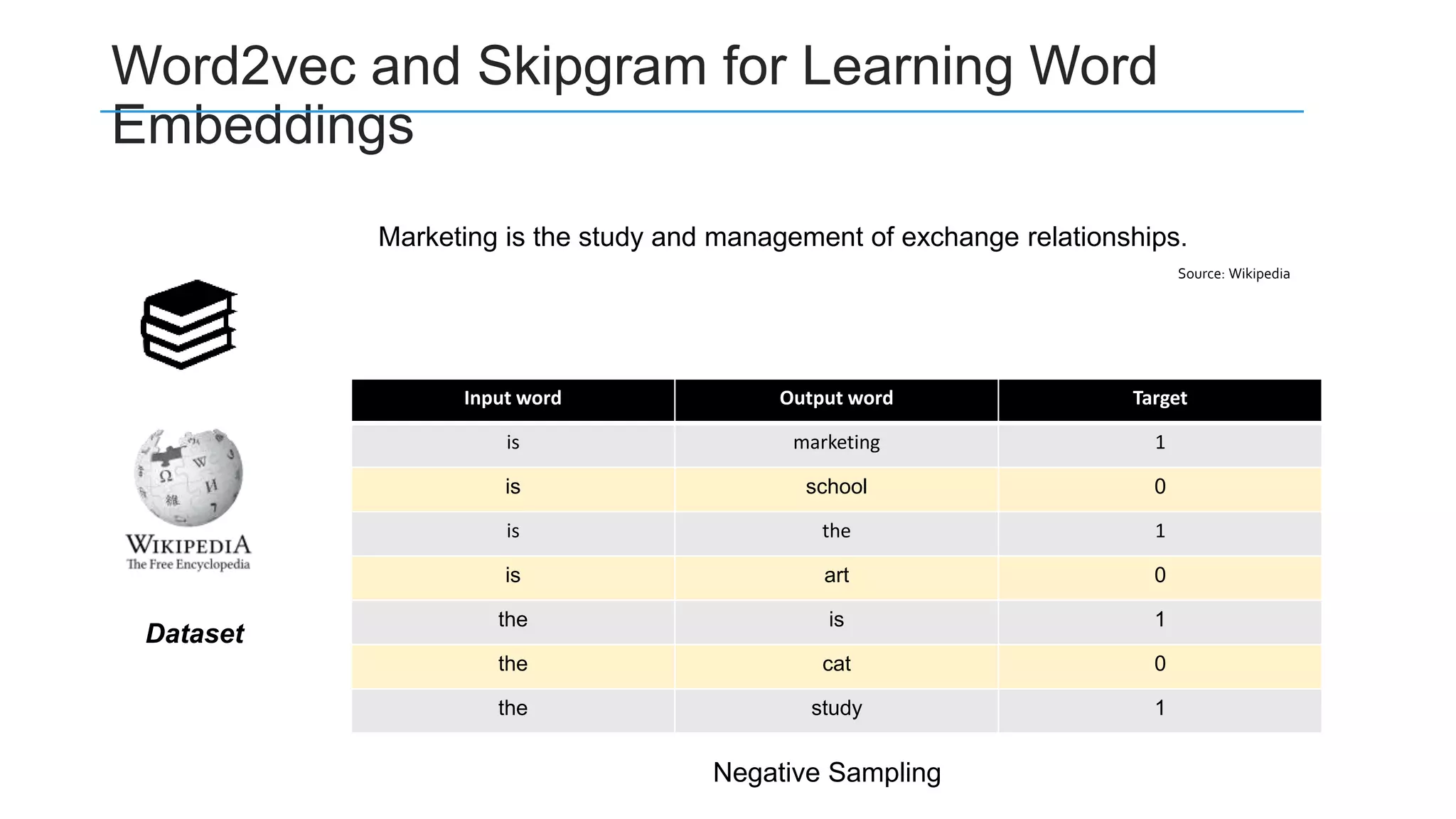

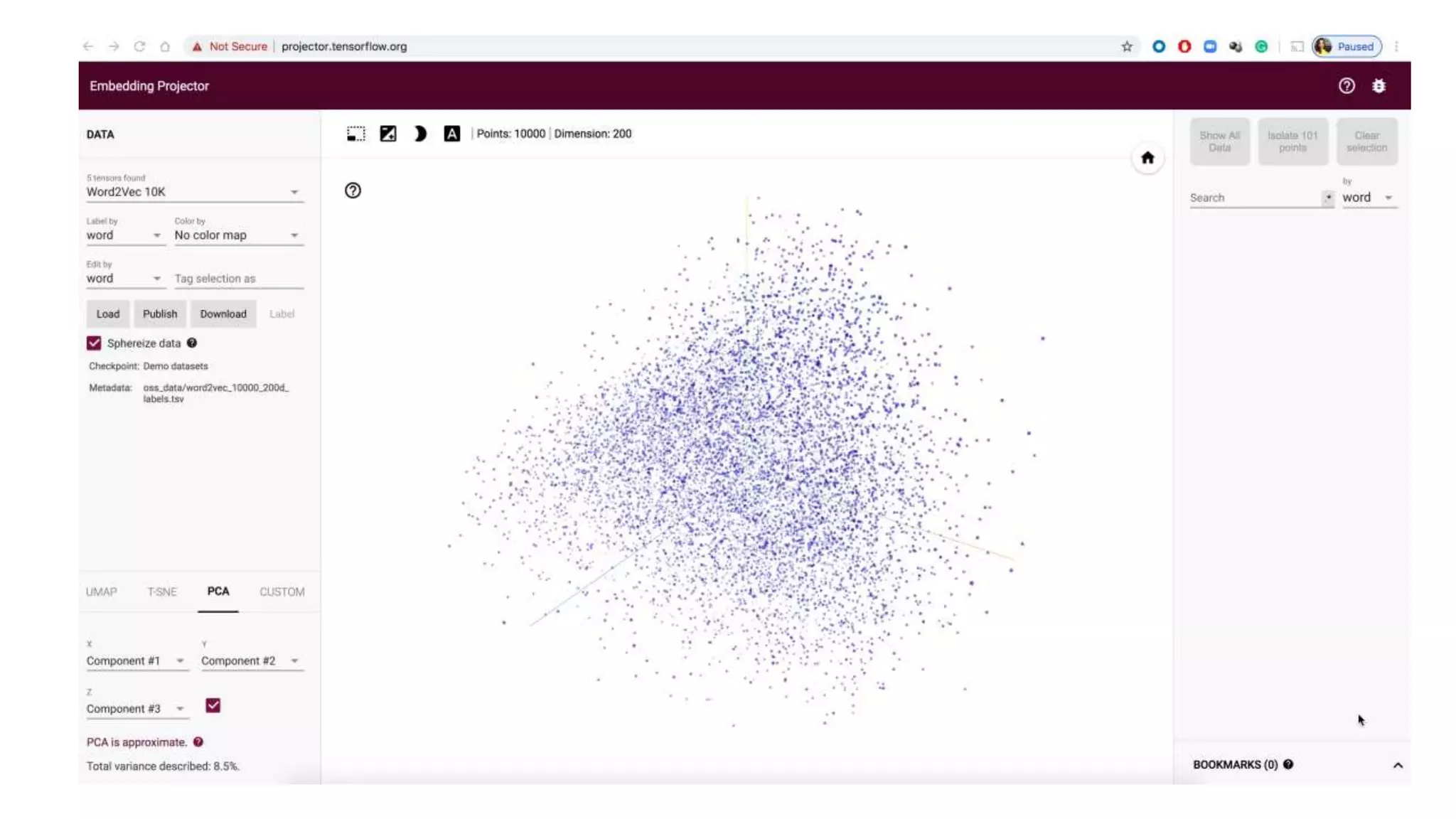

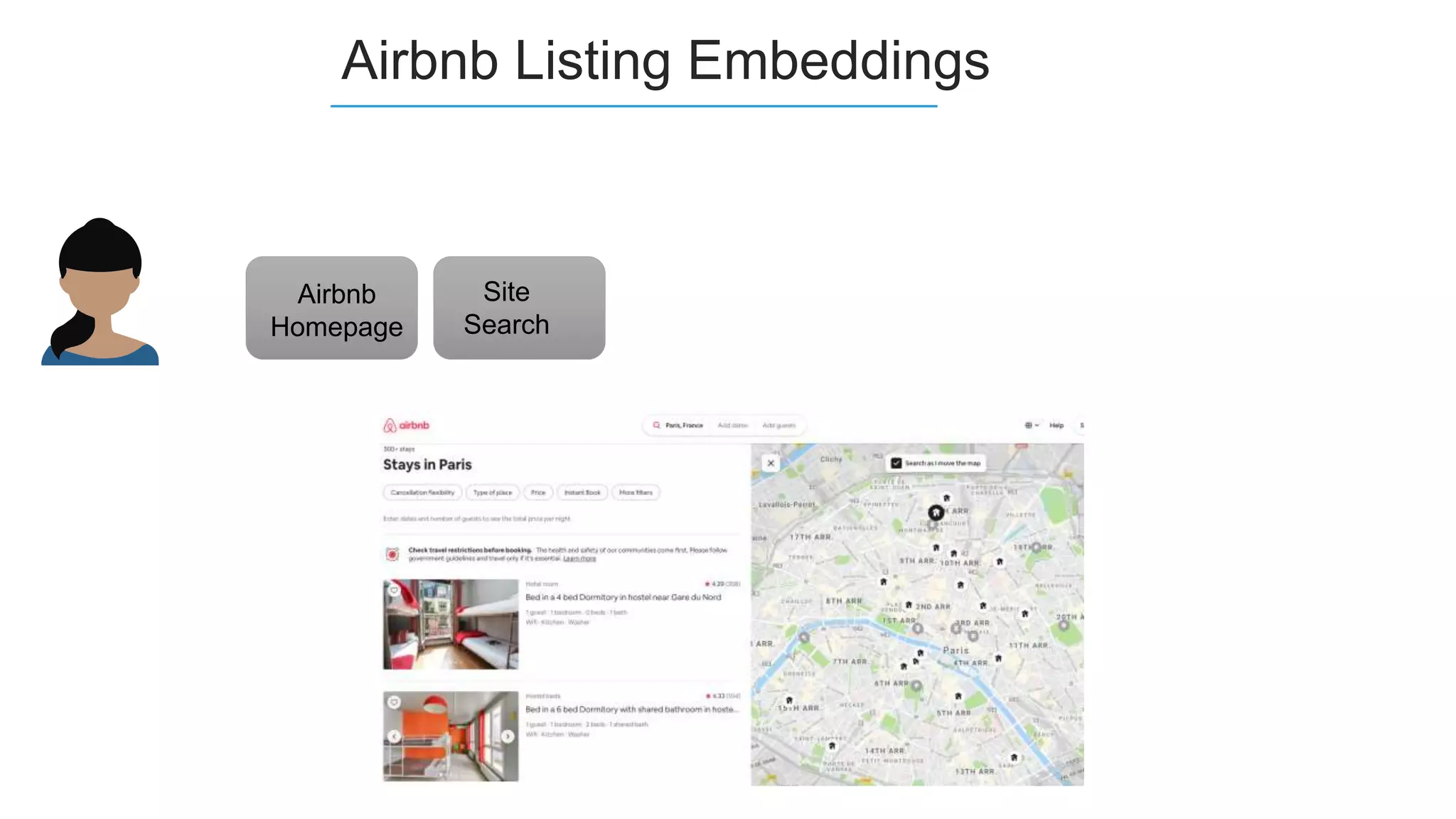

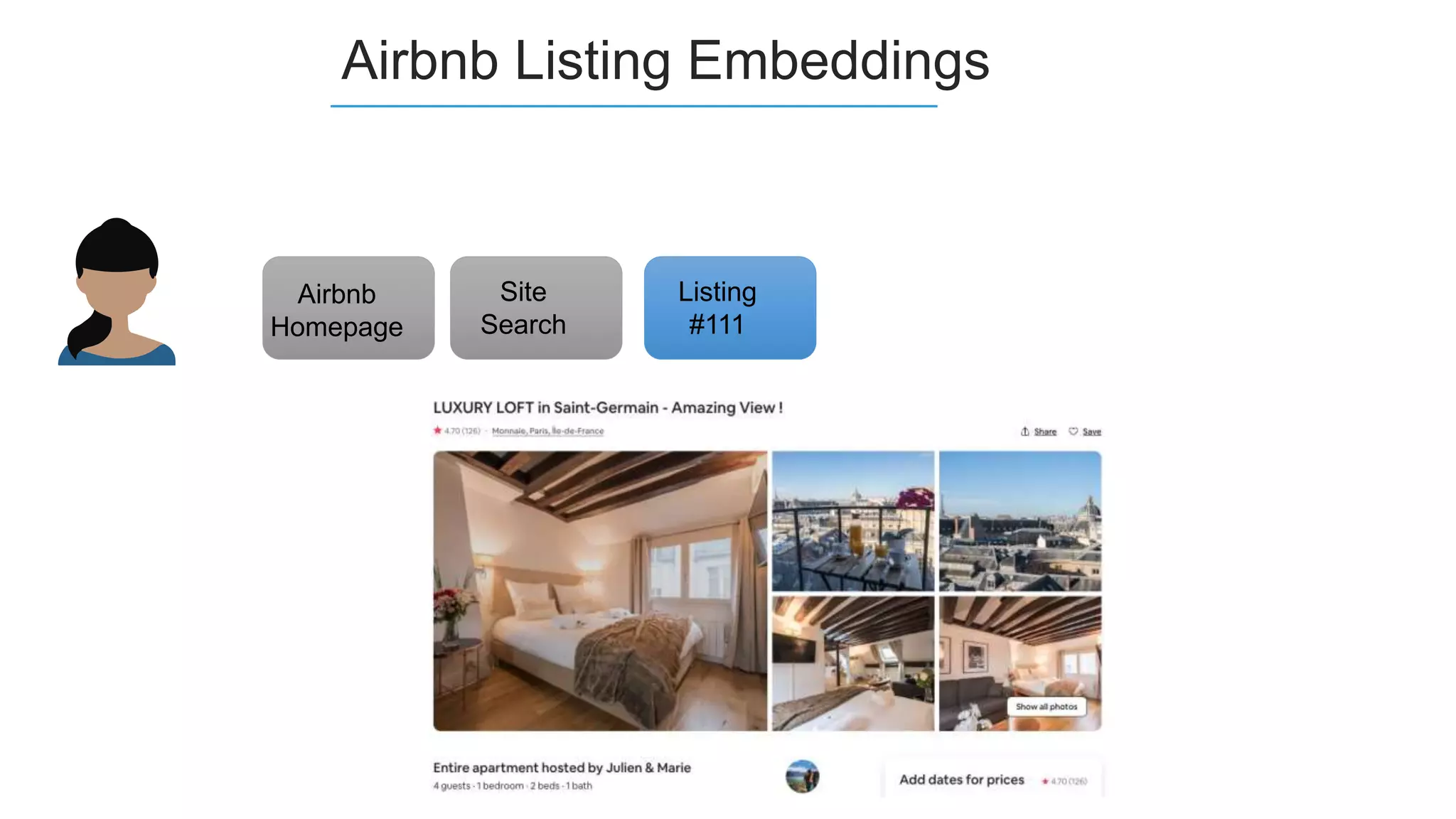

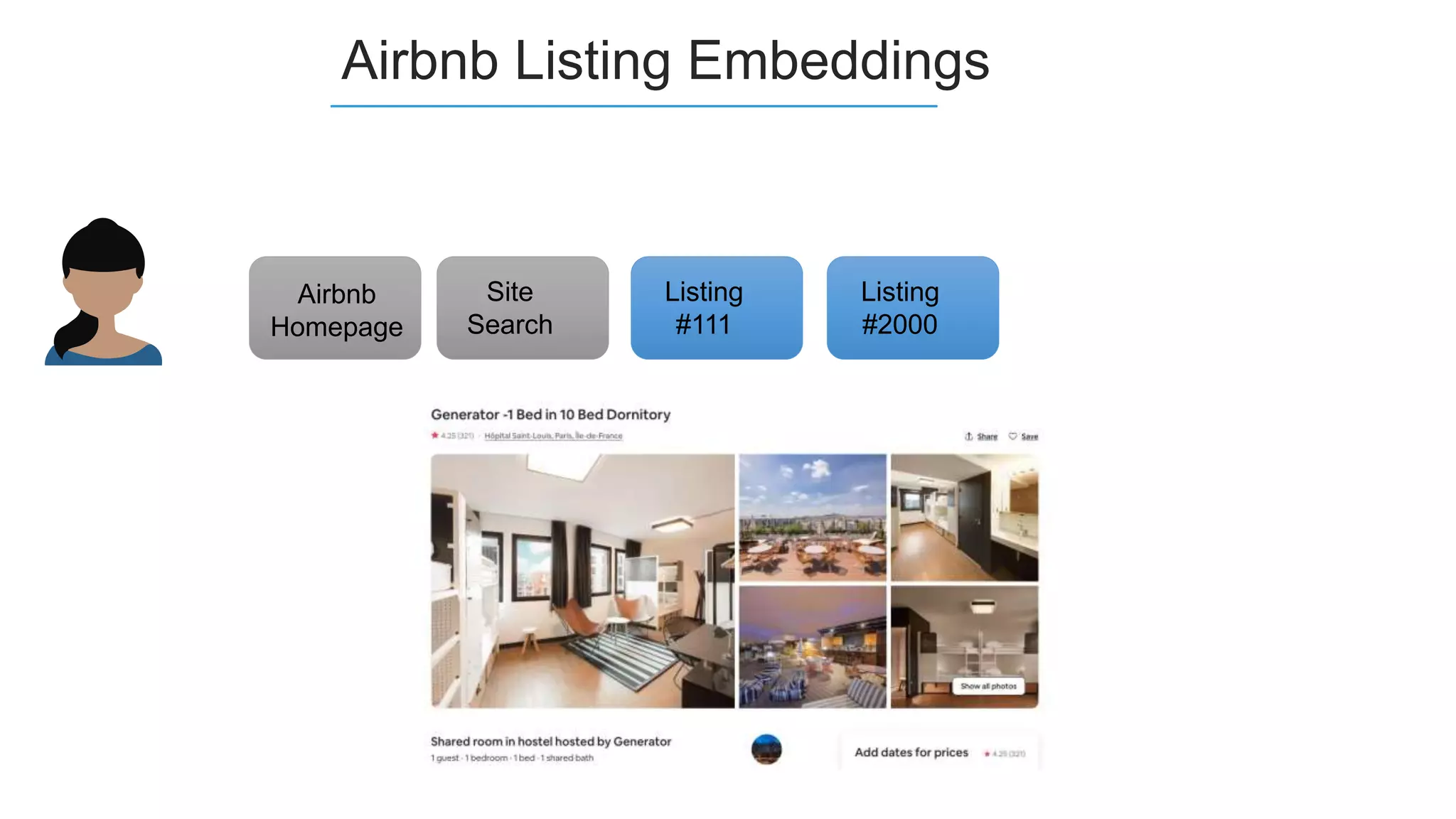

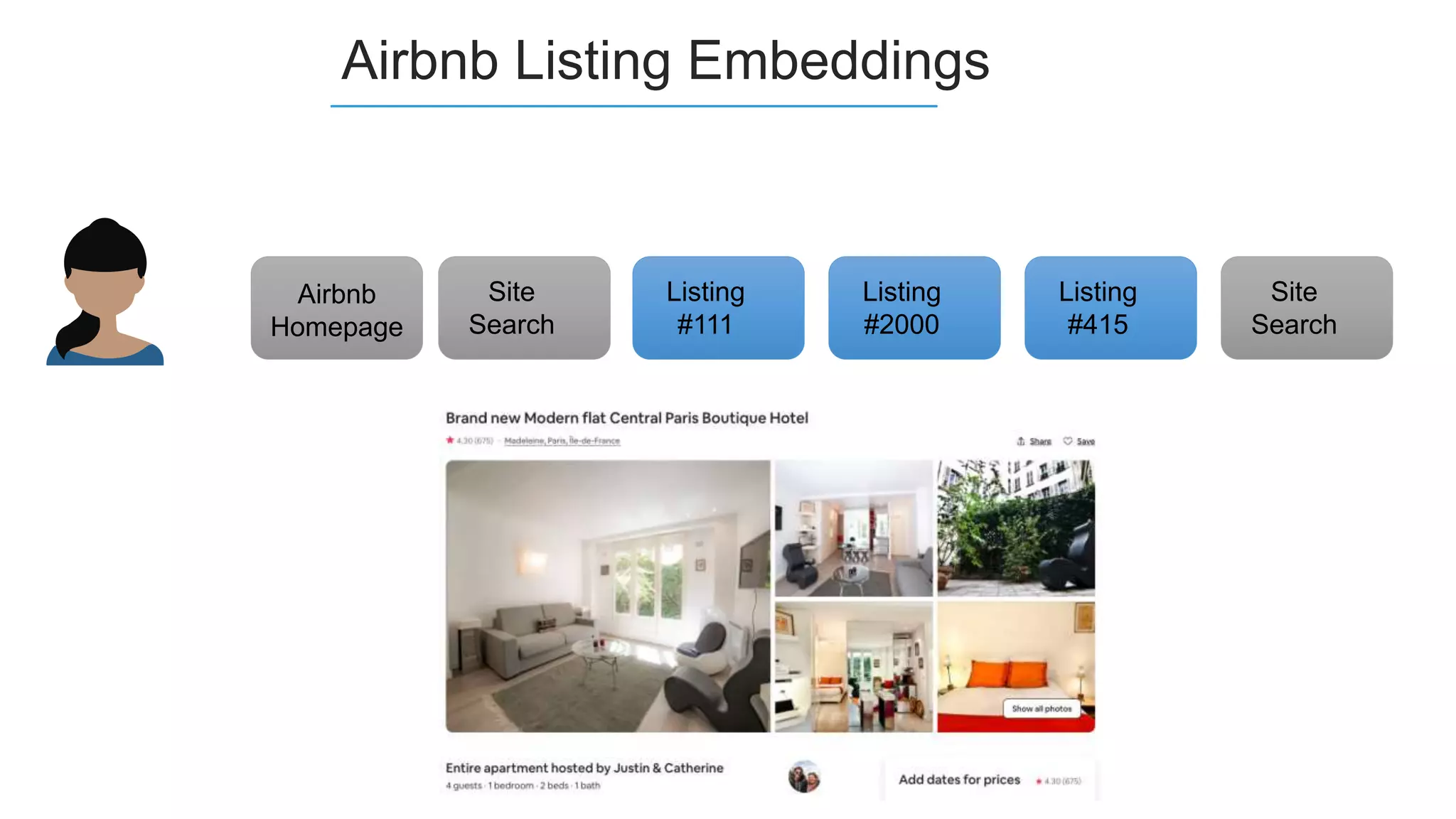

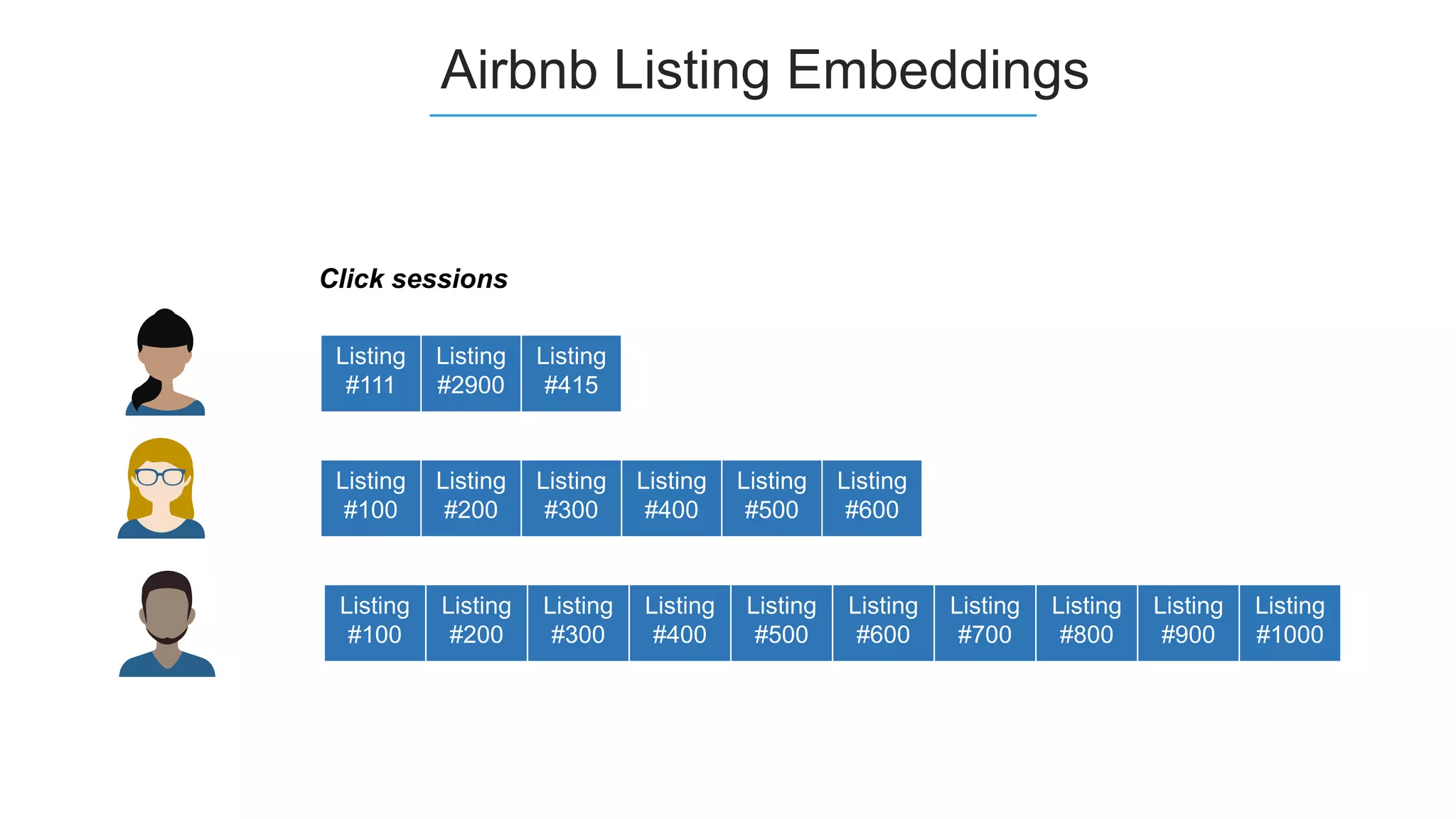

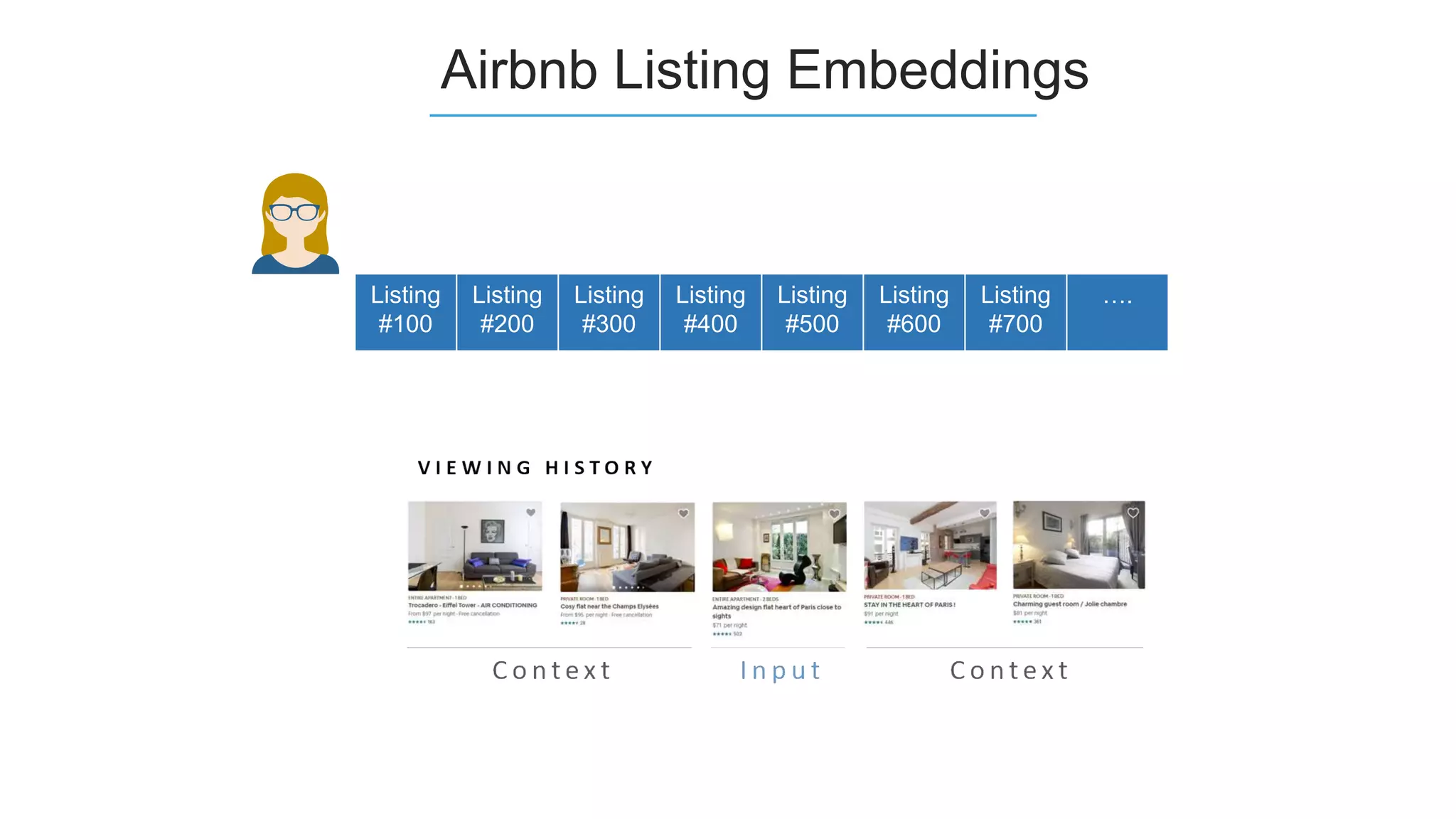

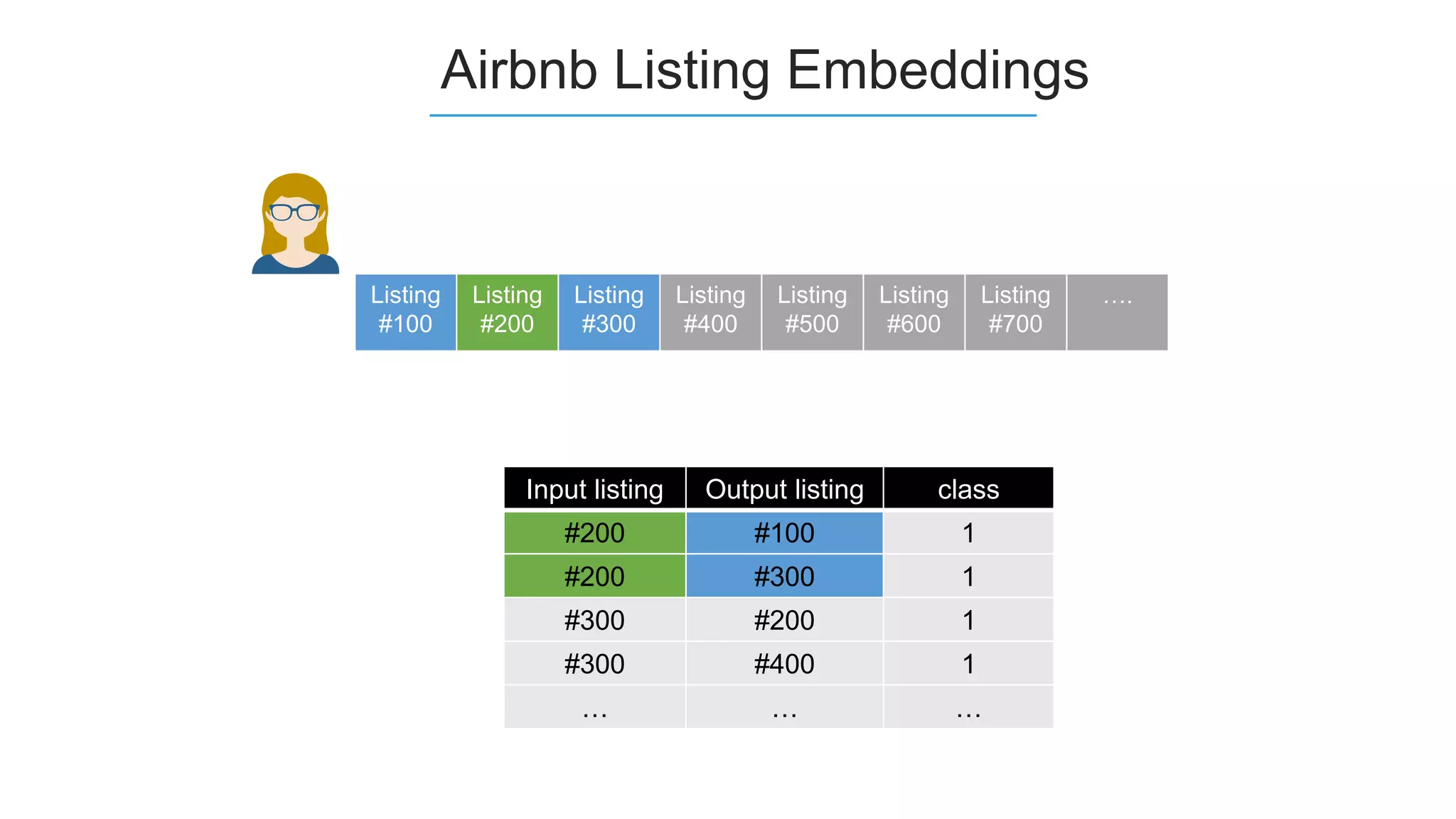

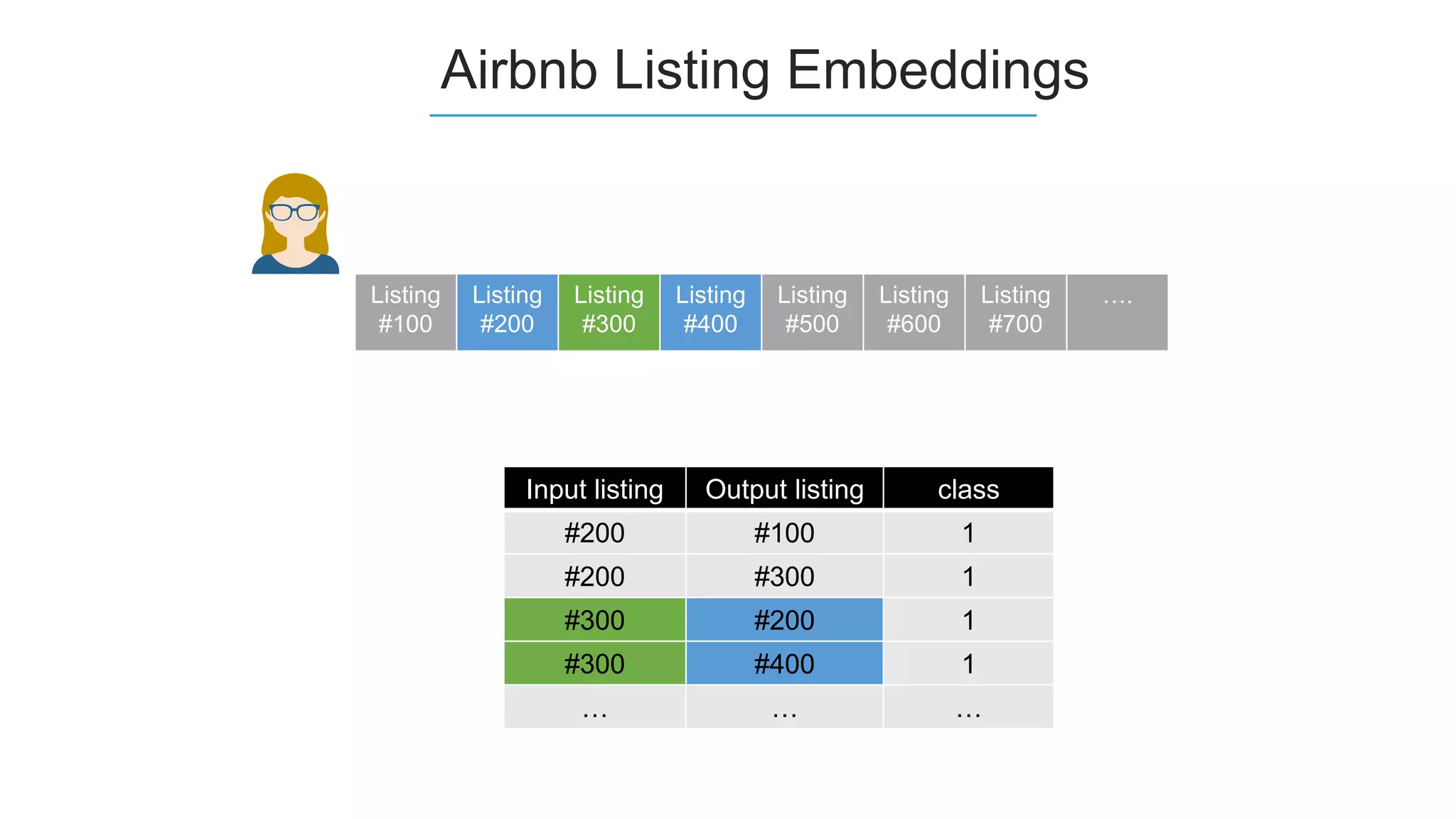

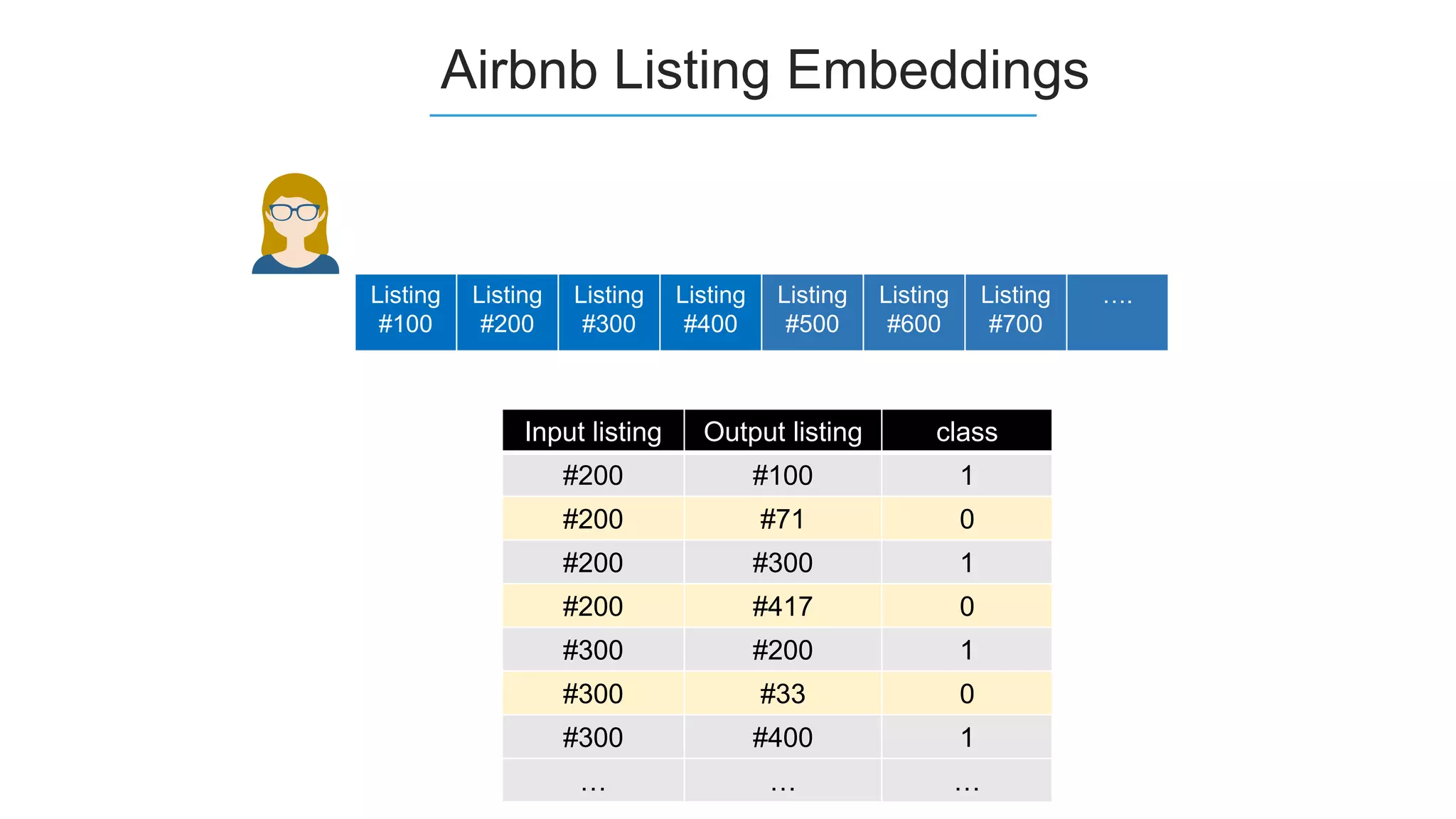

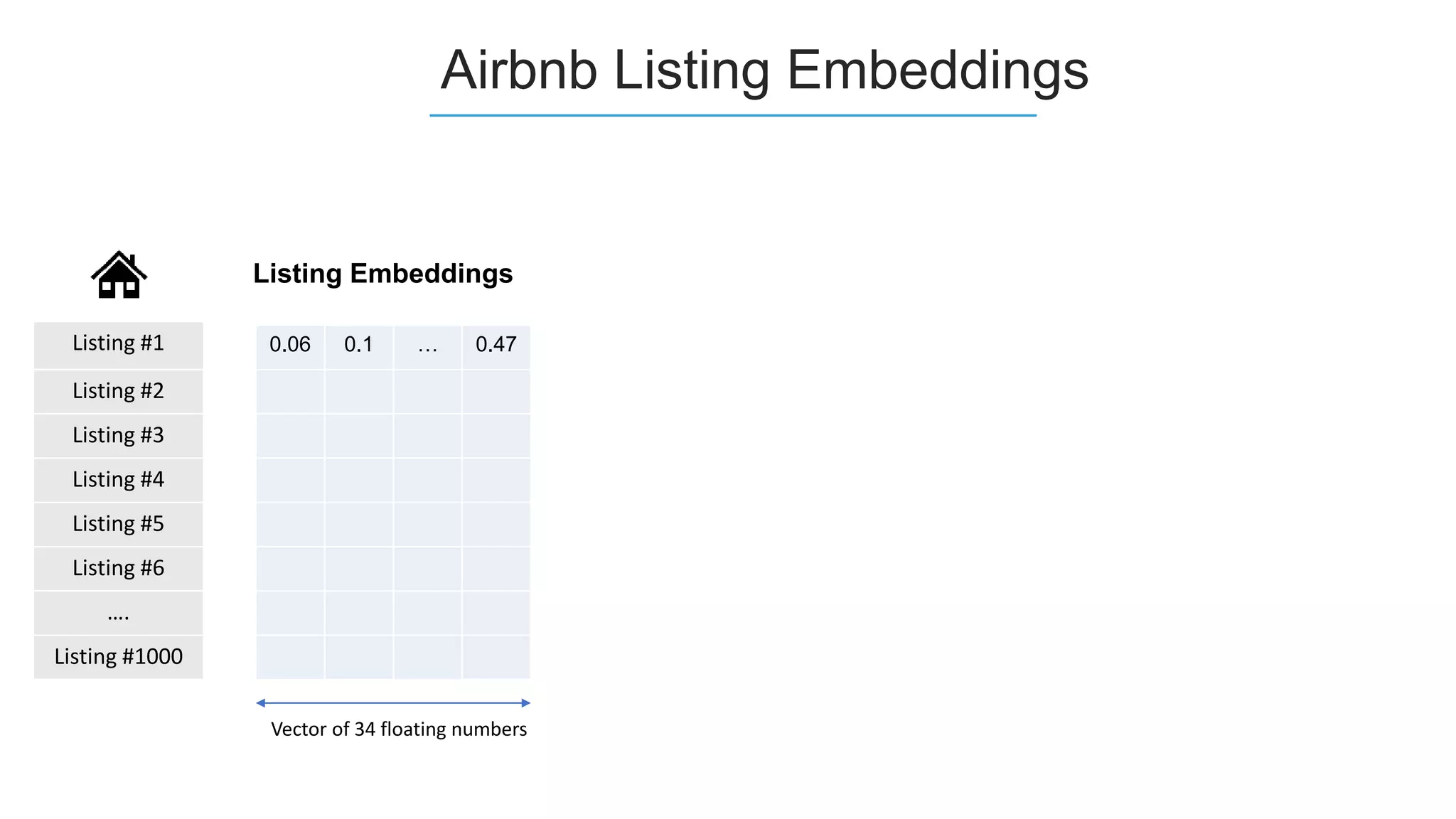

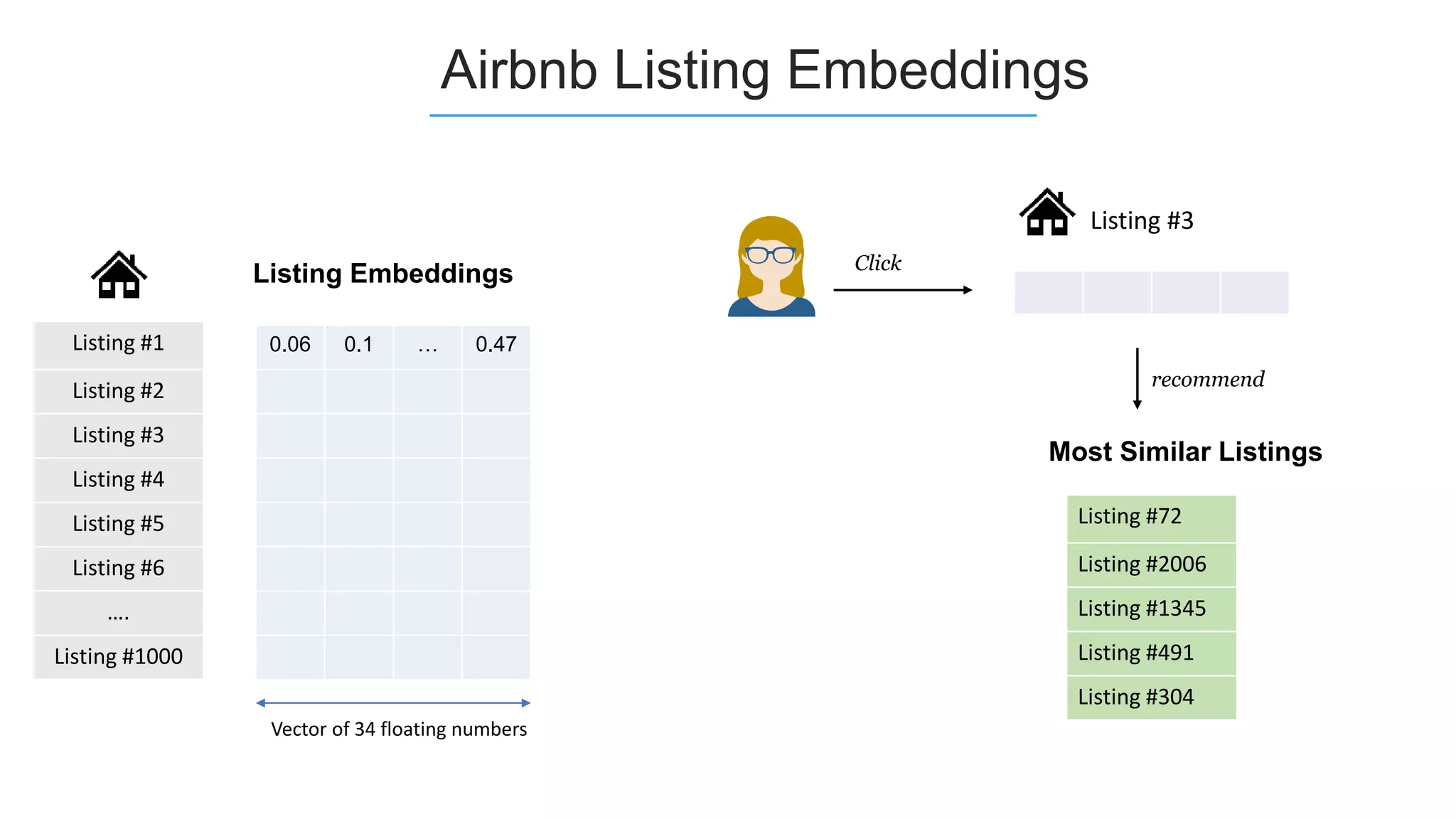

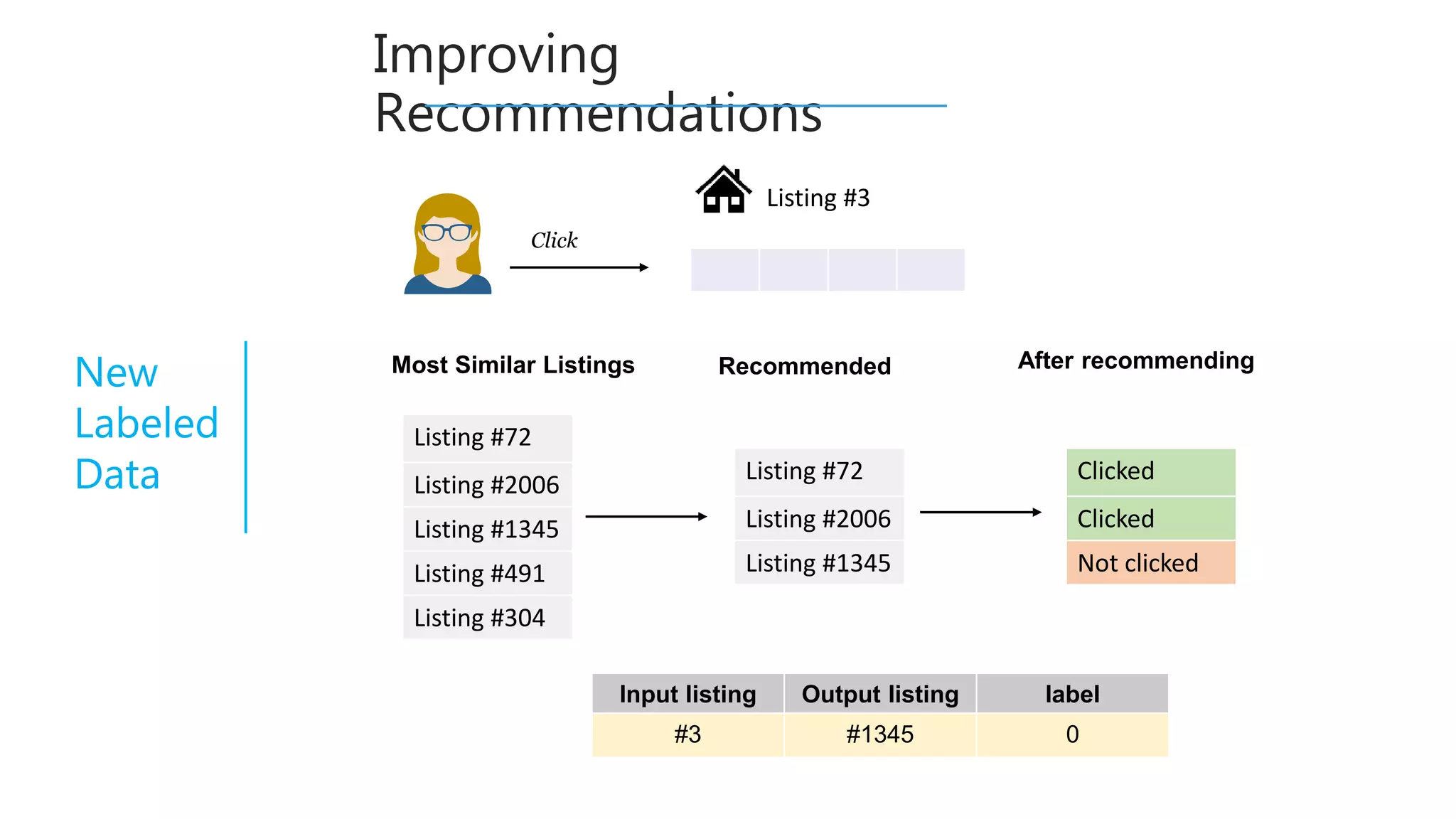

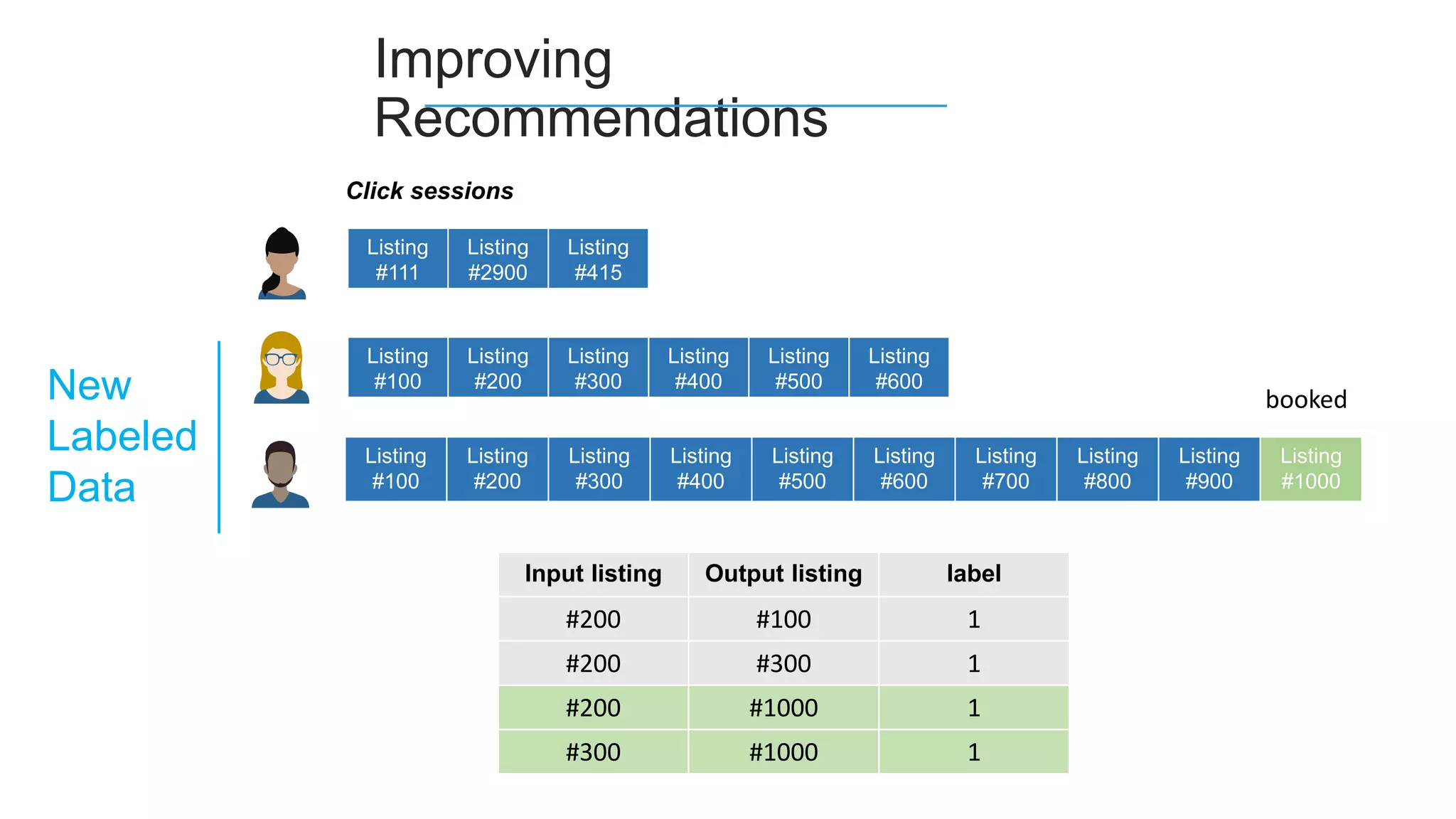

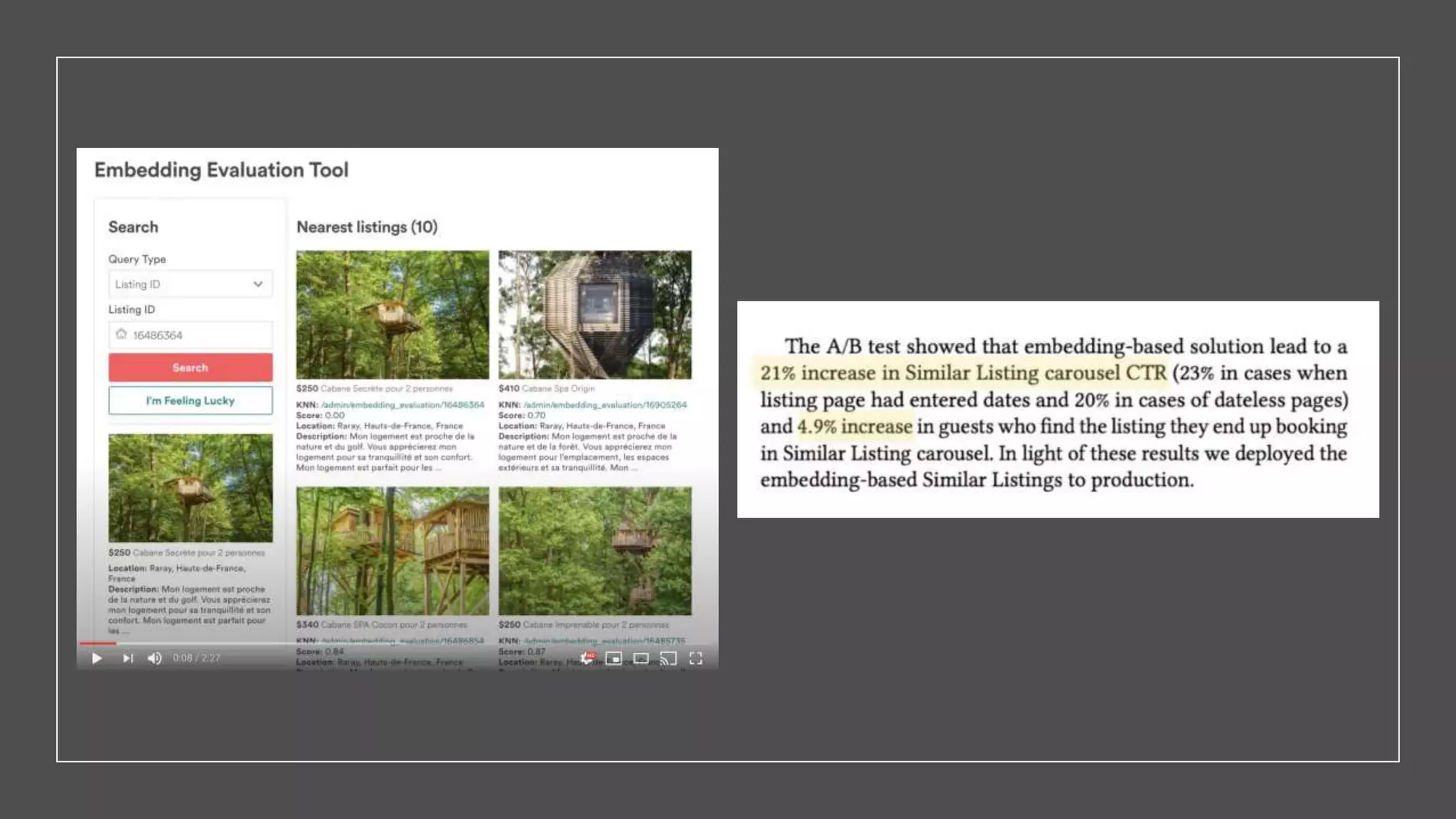

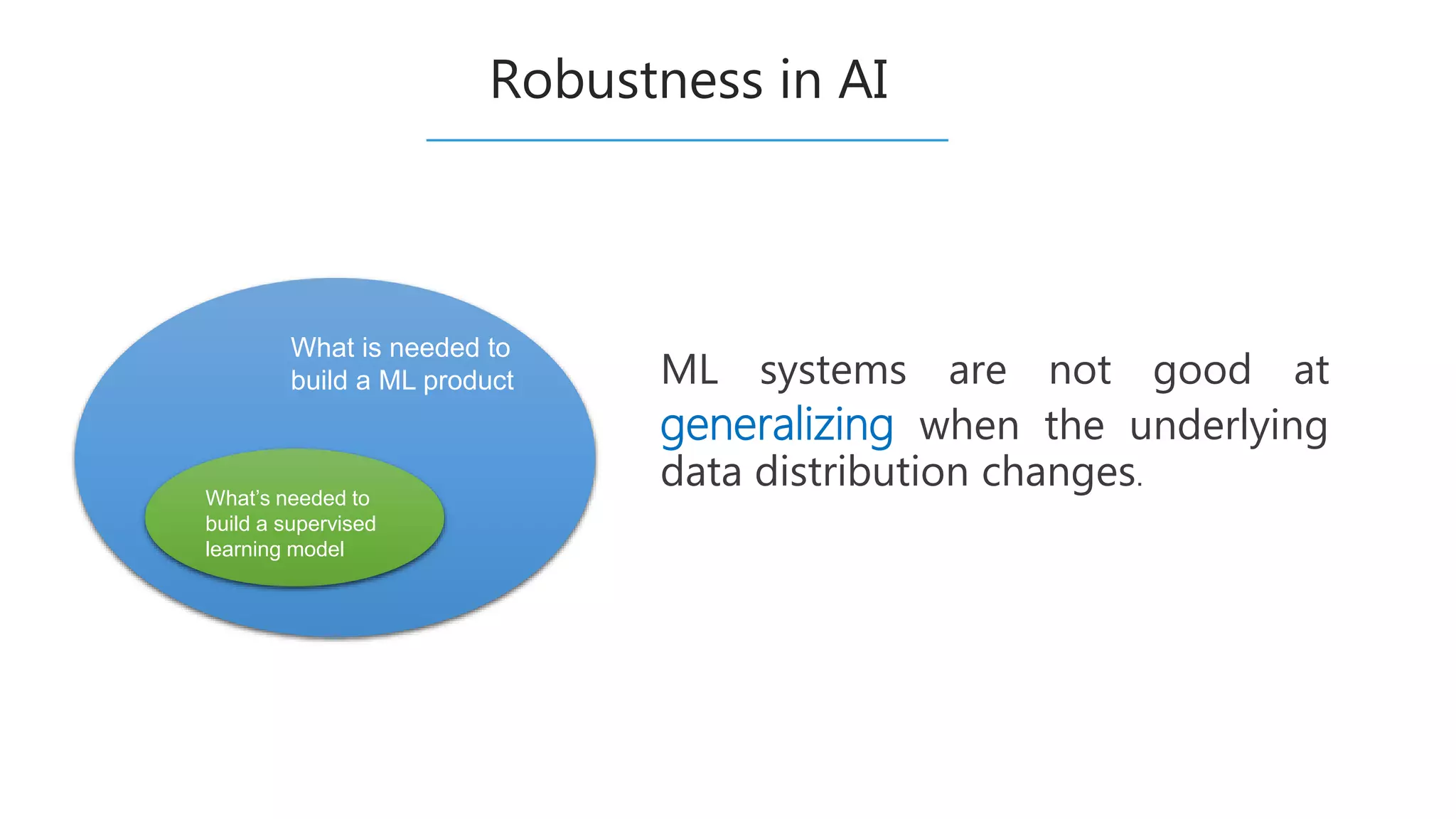

Sihem Romdhani's keynote discusses how AI-based recommender systems, particularly those built on embeddings, enhance digital marketing and personalize customer experiences on platforms such as YouTube and Airbnb. These systems use machine learning techniques such as word2vec to model user preferences and improve recommendation accuracy, while contending with challenges such as data privacy and model robustness. The talk emphasizes the need for systems that adapt to changing consumer behavior and highlights strategies for implementing AI ethically.
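As a rough illustration of the embedding idea mentioned above, the sketch below recommends items by cosine similarity between item vectors. It is not taken from the keynote: the item names, the three-dimensional vectors, and the `recommend` helper are all hypothetical, and in a real system the vectors would be learned (for example with word2vec trained on sequences of items that users interacted with) rather than written by hand.

```python
import math

# Hypothetical toy item embeddings (assumption, hand-written for illustration).
# In practice these would be learned from interaction data, e.g. with word2vec.
ITEM_EMBEDDINGS = {
    "beach_house": [0.9, 0.1, 0.0],
    "seaside_villa": [0.8, 0.2, 0.1],
    "city_loft": [0.1, 0.9, 0.2],
    "mountain_cabin": [0.0, 0.2, 0.9],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def recommend(item, embeddings, k=2):
    """Return the k items whose embeddings are closest to `item`'s embedding."""
    query = embeddings[item]
    scored = [
        (other, cosine_similarity(query, vector))
        for other, vector in embeddings.items()
        if other != item  # never recommend the item the user is already viewing
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [name for name, _ in scored[:k]]


if __name__ == "__main__":
    print(recommend("beach_house", ITEM_EMBEDDINGS, k=2))
```

With these toy vectors, `seaside_villa` ranks first for `beach_house` because the two vectors point in nearly the same direction. A production recommender would typically replace the linear scan with an approximate nearest-neighbor index.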