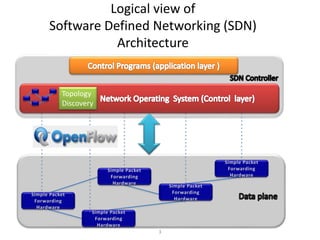

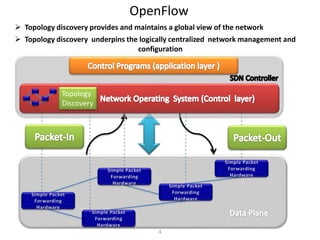

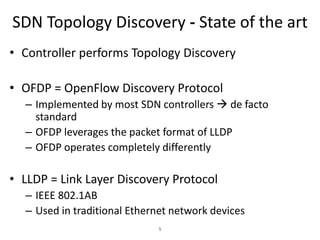

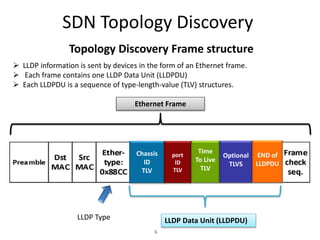

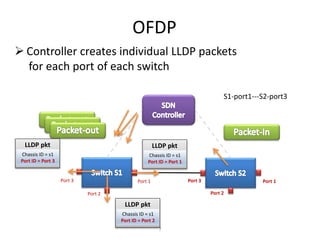

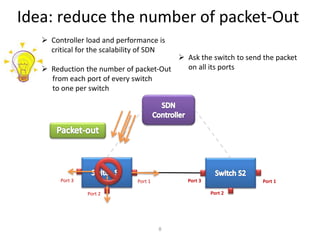

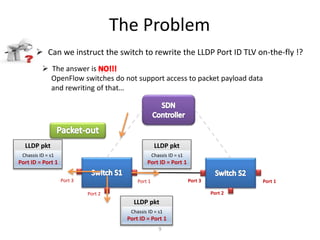

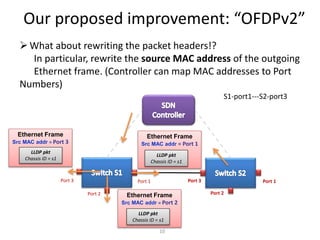

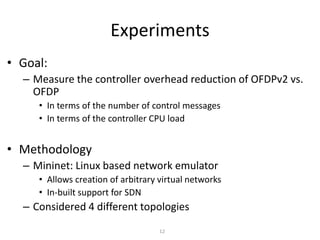

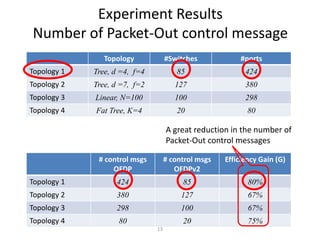

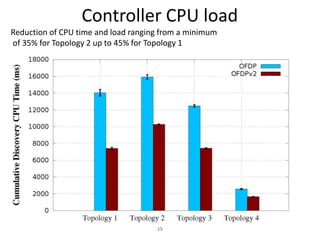

The document discusses OFDPv2, an improved topology discovery mechanism for Software Defined Networks (SDNs) that reduces controller overhead and improves network performance. It evaluates the proposed method against the traditional OpenFlow Discovery Protocol (OFDP) and reports significant reductions in the number of control messages and in controller CPU load. The experiments show that OFDPv2 can achieve up to an 80% reduction in control messages and a 45% decrease in CPU time, which improves overall network efficiency and scalability.
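
To make the message-count claim concrete, here is a minimal sketch of how such a reduction could arise. It assumes, as OFDP is commonly described, that the controller sends one LLDP Packet-Out per active switch port in each discovery round, and that OFDPv2 sends one Packet-Out per switch and lets the switch replicate it to its ports. The summary above does not state this counting model, and the topology, function names, and port counts below are hypothetical, not taken from the experiments.

```python
# Illustrative sketch only: the counting model below is an assumption,
# not something stated in the summary above.

def packet_out_counts(ports_per_switch):
    """Return (ofdp, ofdpv2) Packet-Out counts for one discovery round.

    ports_per_switch maps a switch name to its number of active ports.
    Assumed model: OFDP sends one Packet-Out per port,
    OFDPv2 sends one Packet-Out per switch.
    """
    ofdp = sum(ports_per_switch.values())
    ofdpv2 = len(ports_per_switch)
    return ofdp, ofdpv2


def reduction(ofdp, ofdpv2):
    """Fraction of Packet-Out messages saved by OFDPv2 relative to OFDP."""
    if ofdp == 0:
        return 0.0
    return 1 - ofdpv2 / ofdp


if __name__ == "__main__":
    # Hypothetical topology: four switches with five active ports each.
    topology = {"s1": 5, "s2": 5, "s3": 5, "s4": 5}
    ofdp, ofdpv2 = packet_out_counts(topology)
    saved = reduction(ofdp, ofdpv2)
    print(f"OFDP: {ofdp}, OFDPv2: {ofdpv2}, saved: {saved:.0%}")
```

Under this assumed model the saving depends only on the average number of ports per switch, so denser switches would see larger reductions.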