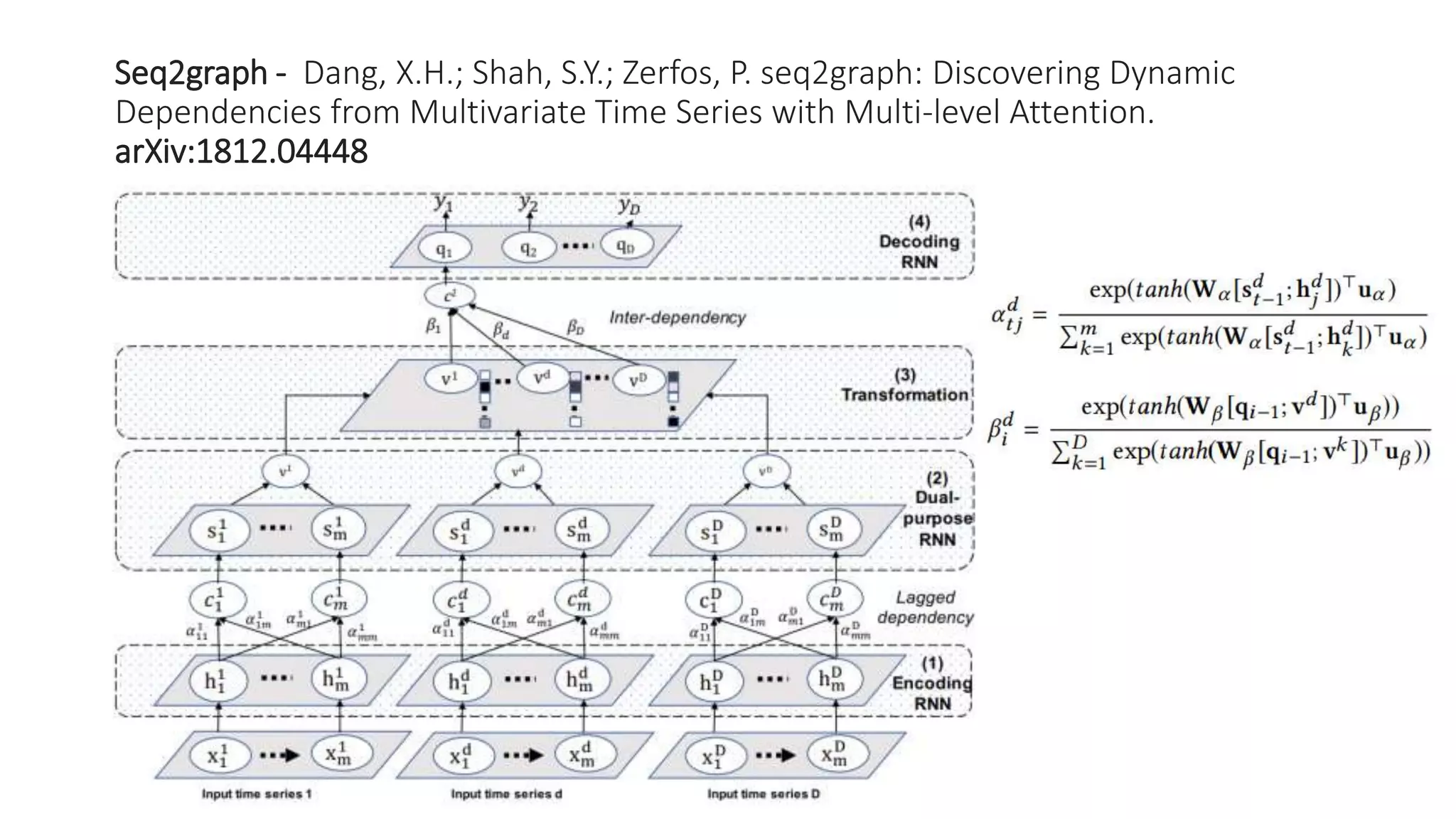

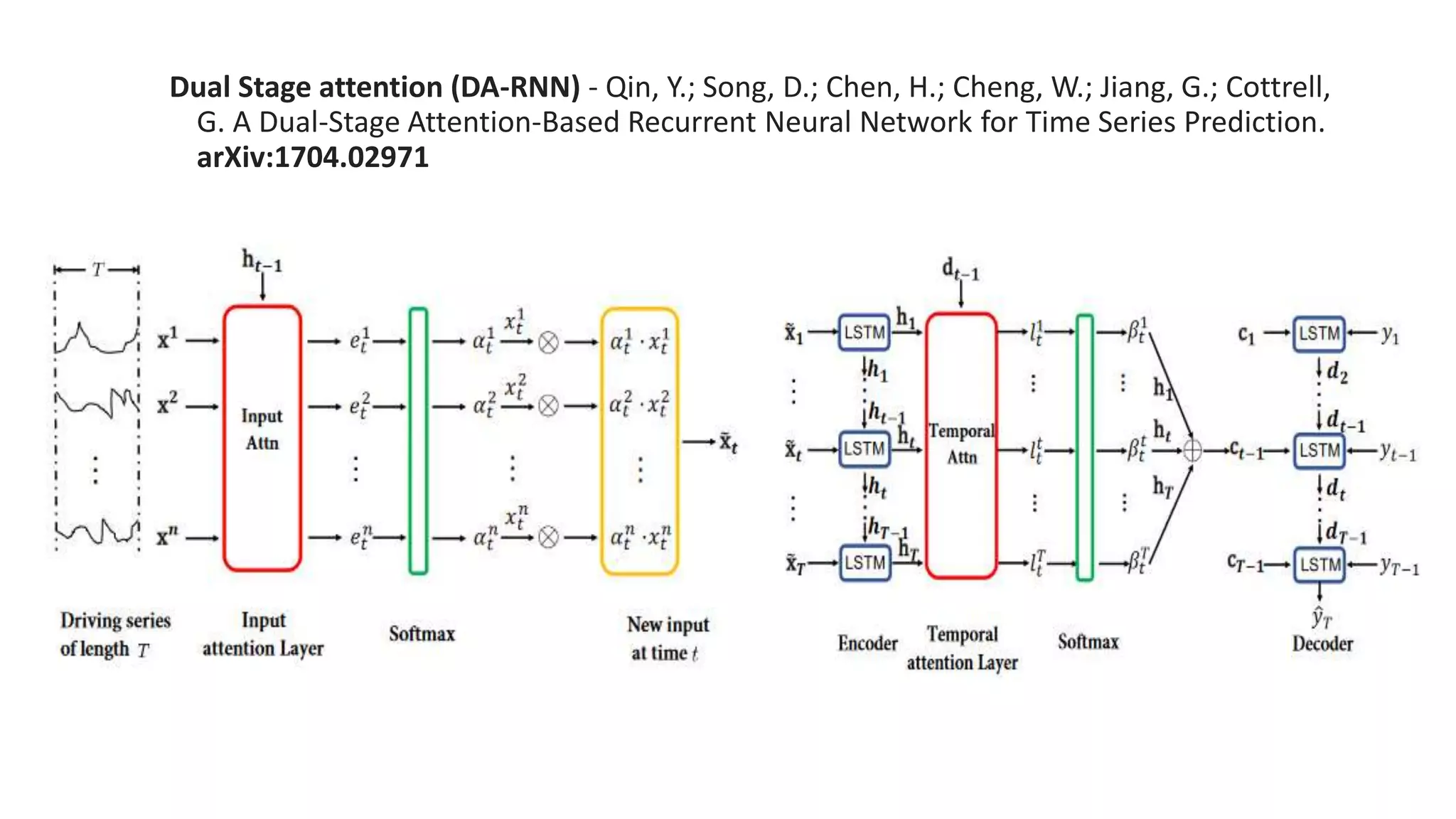

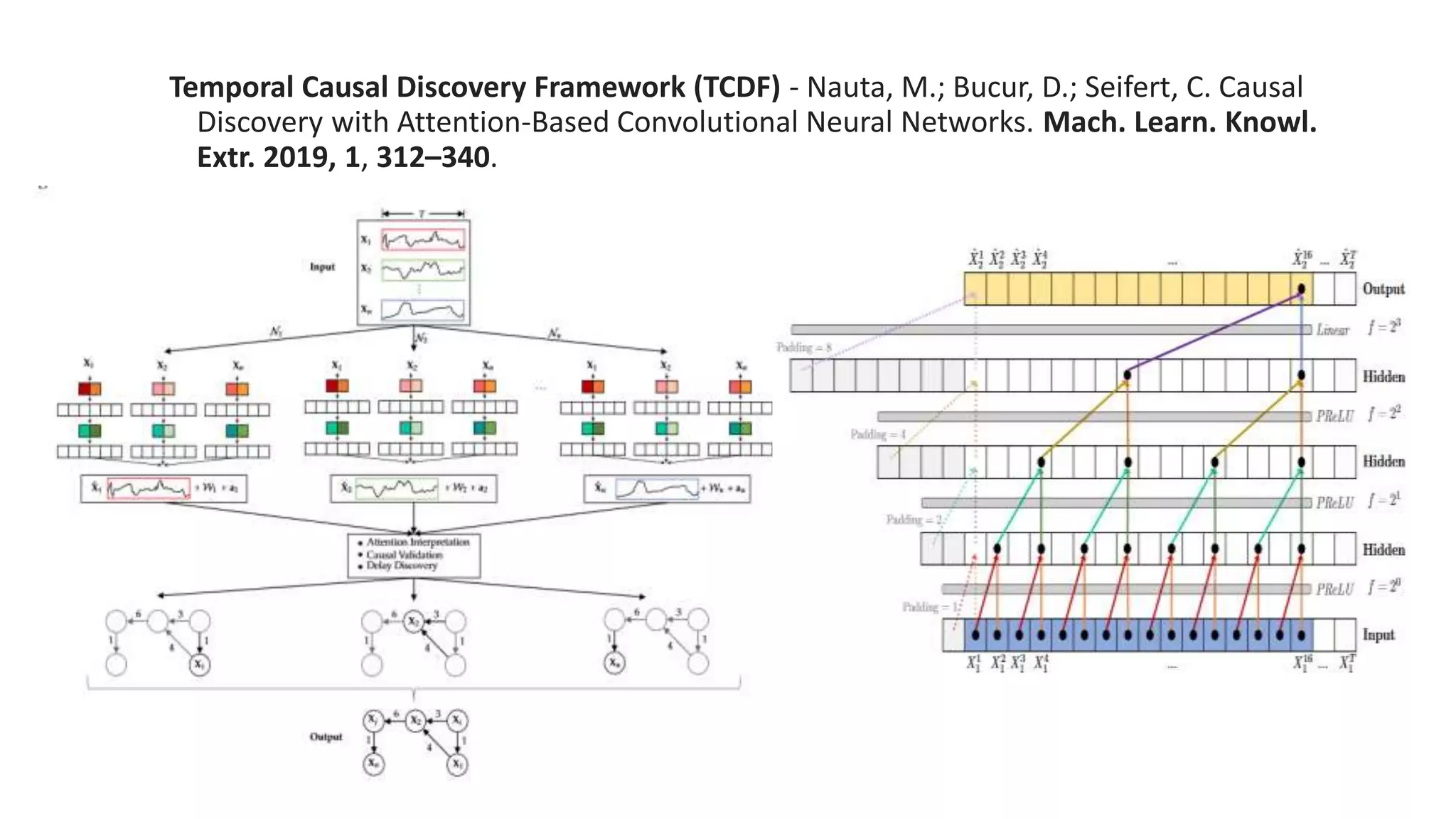

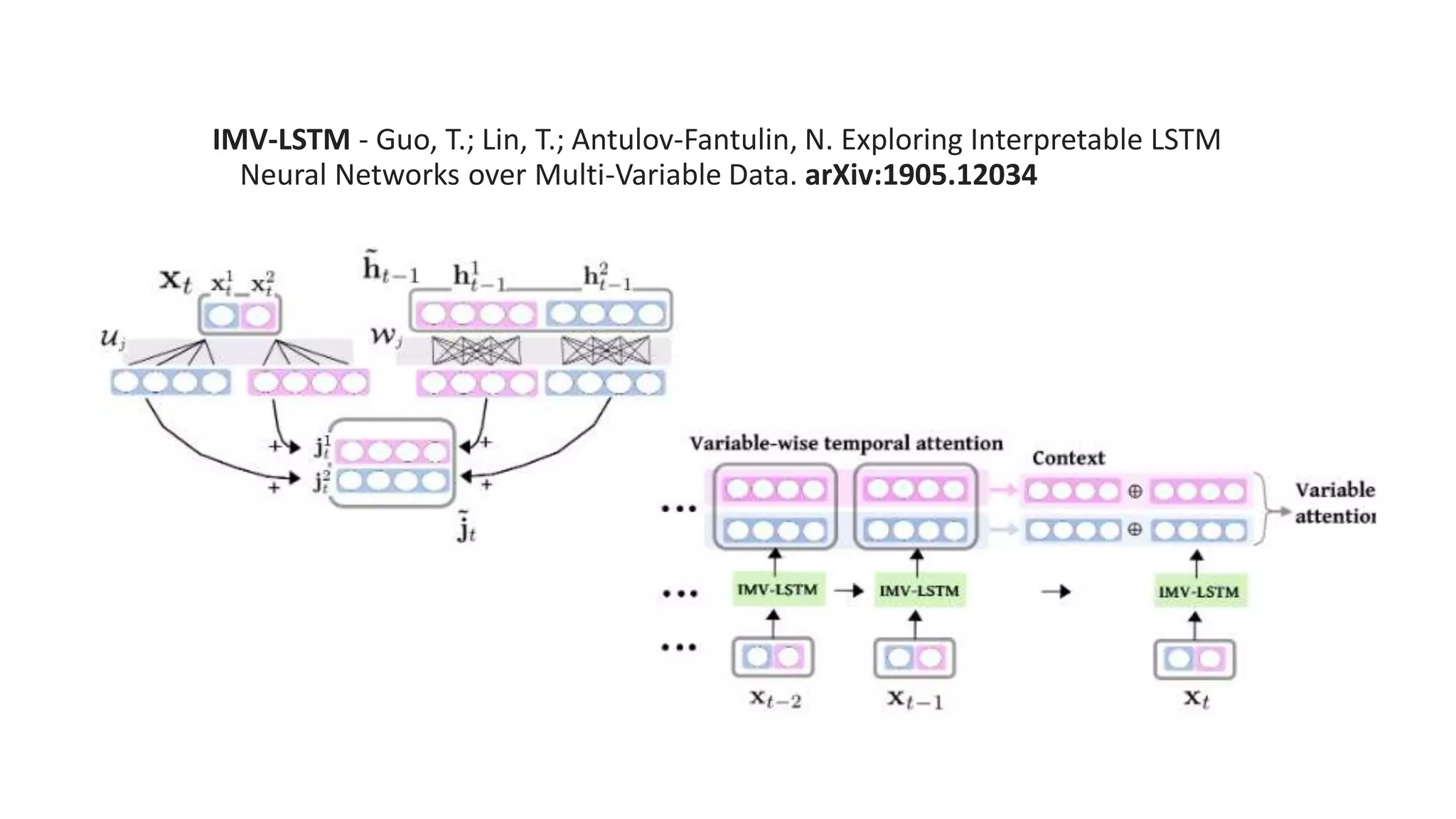

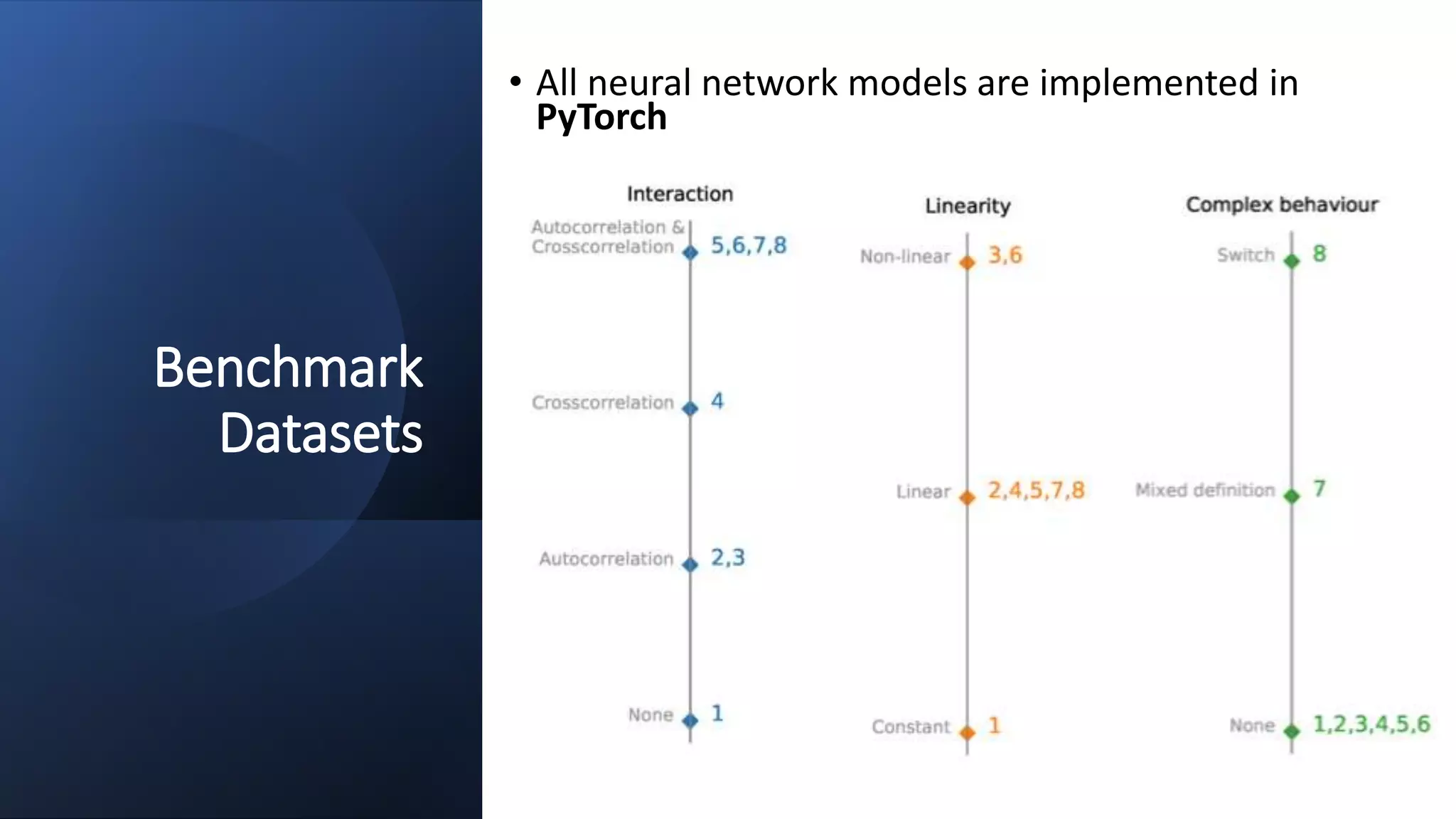

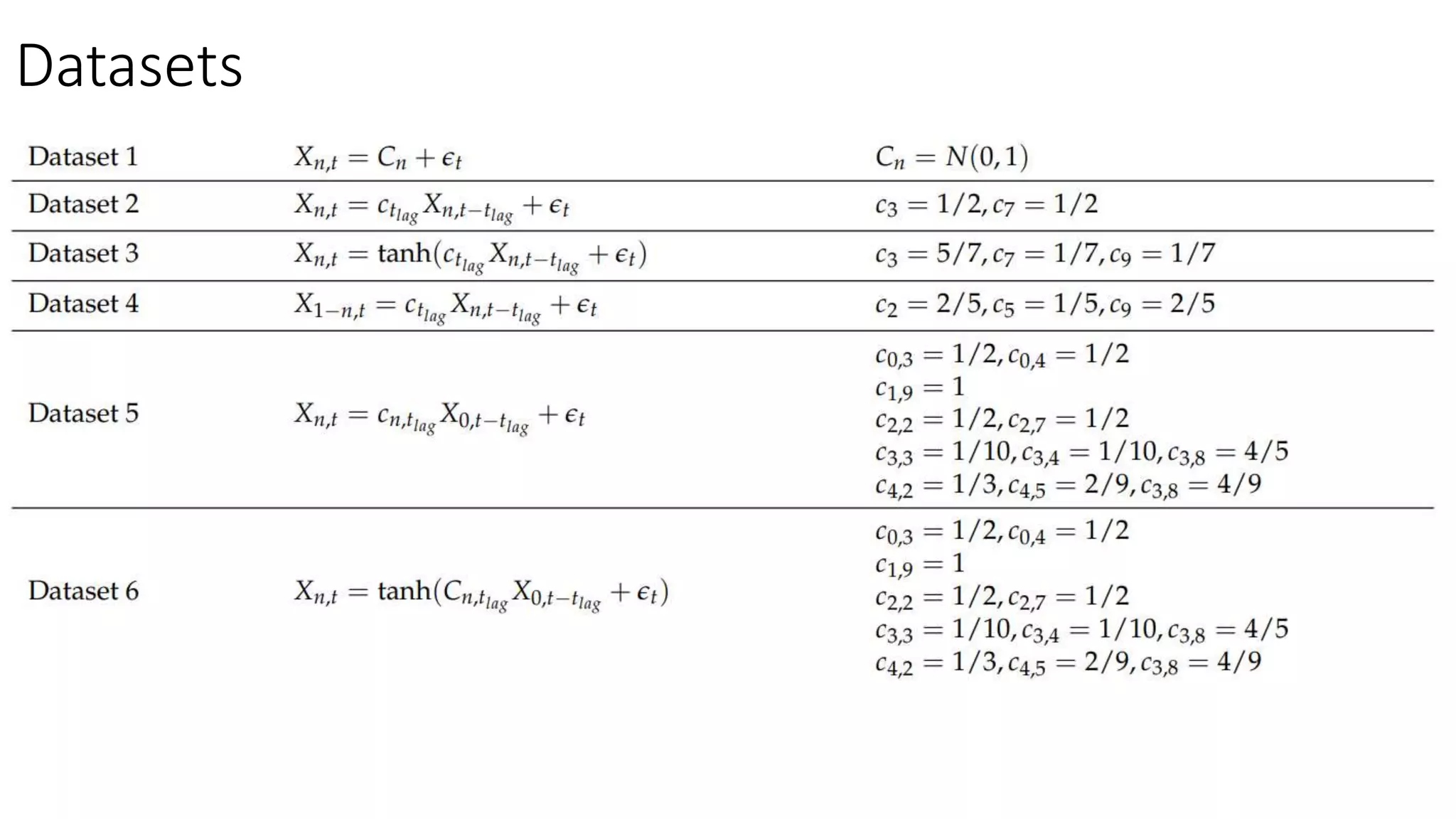

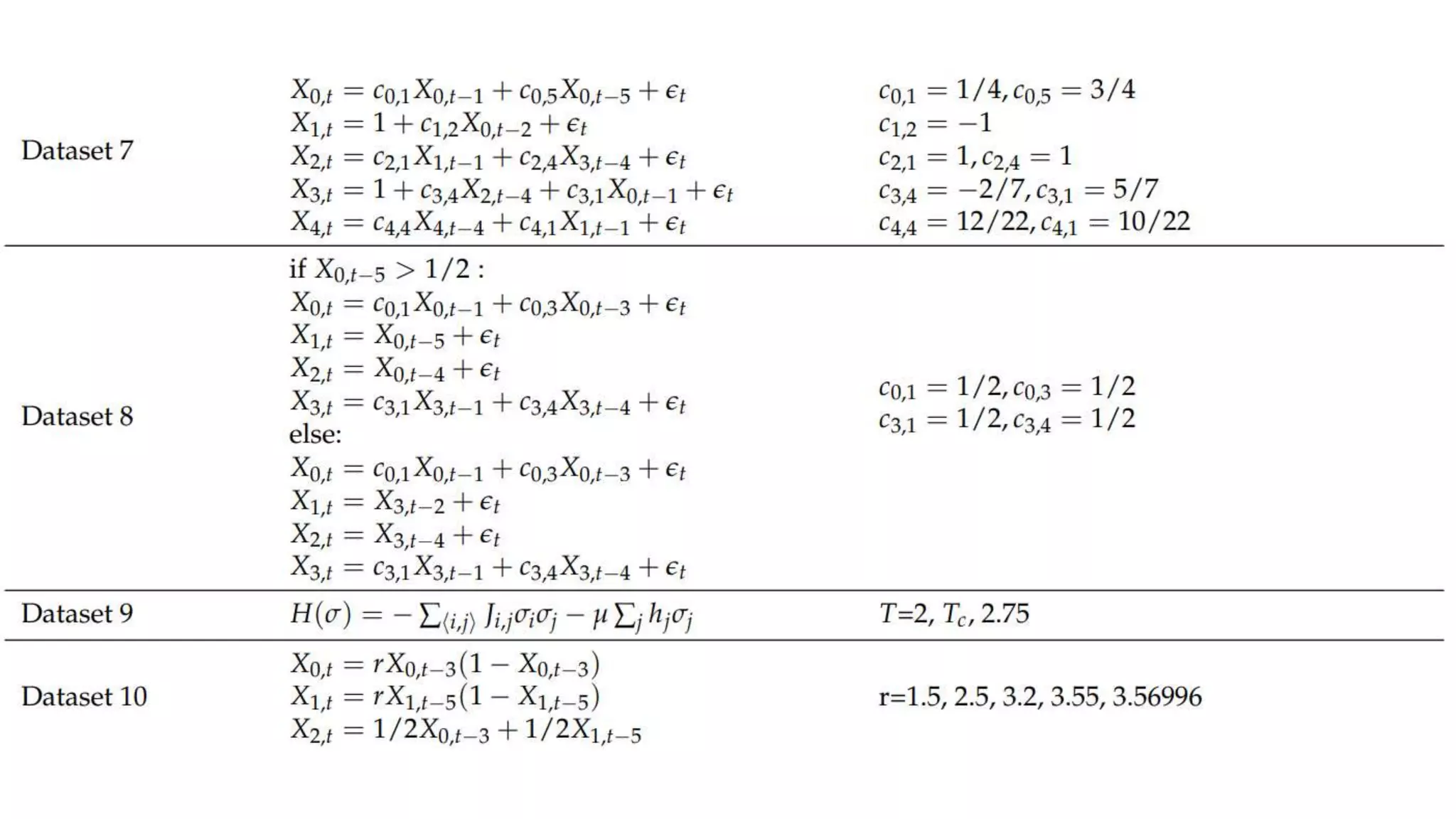

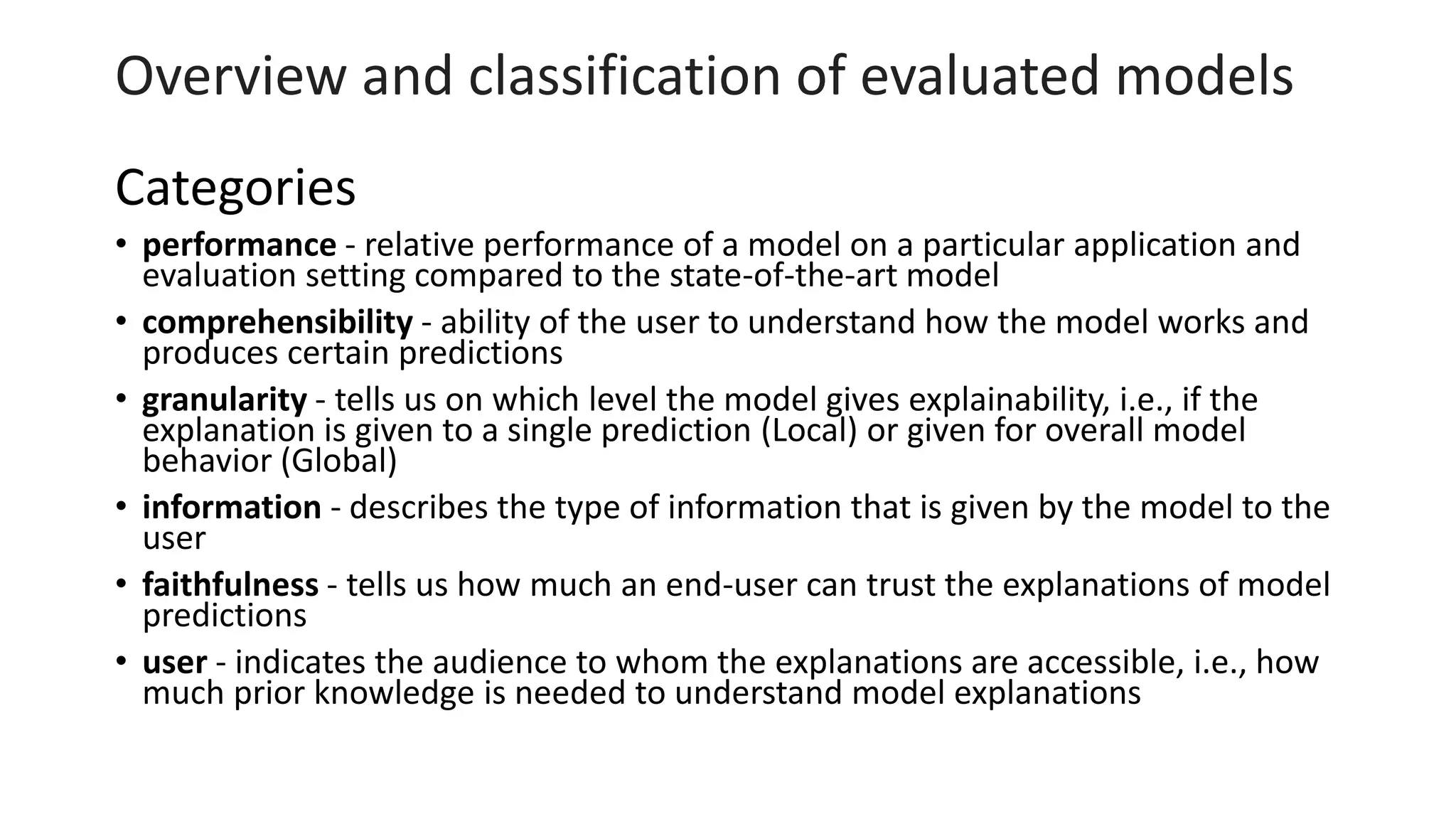

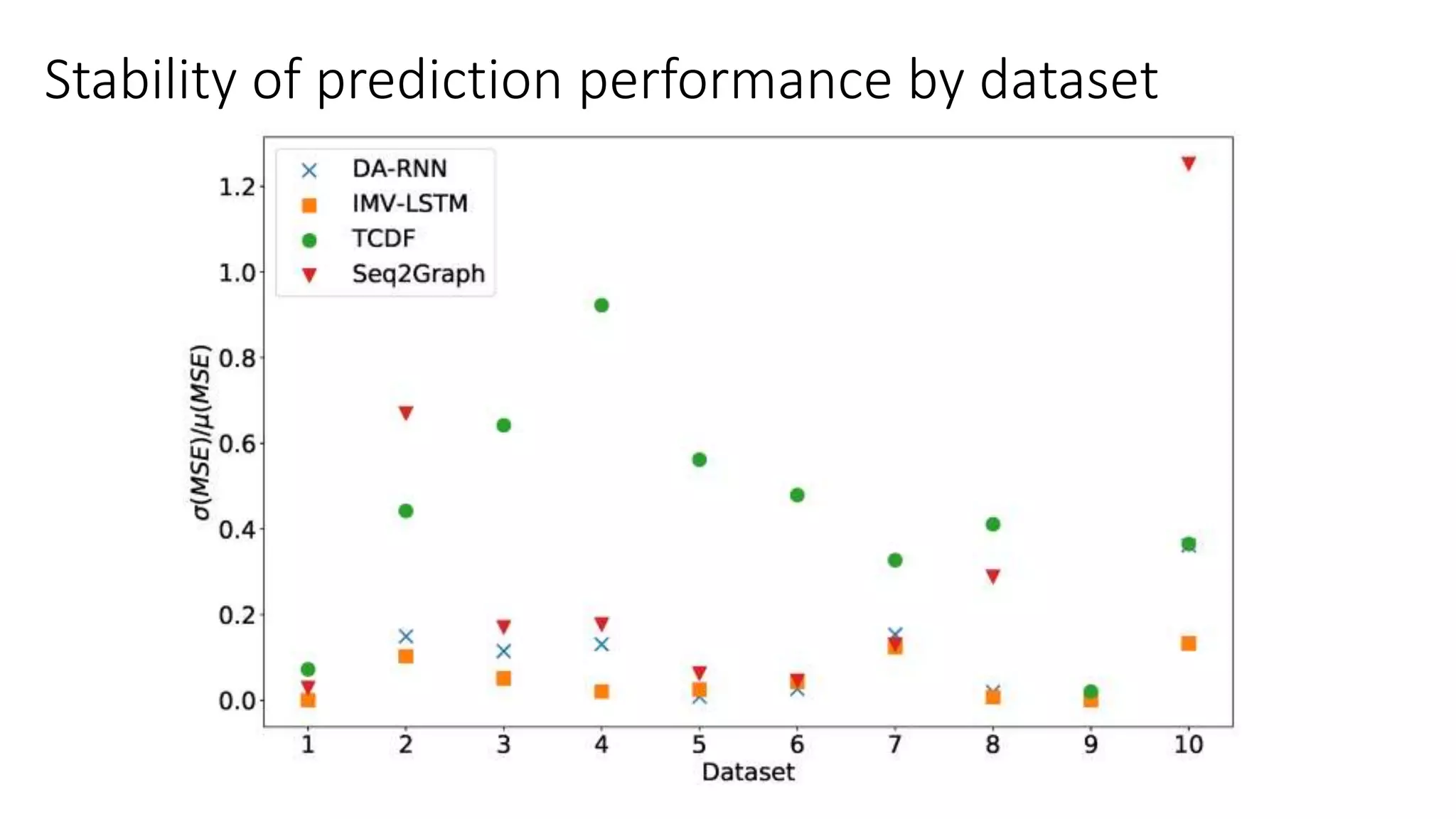

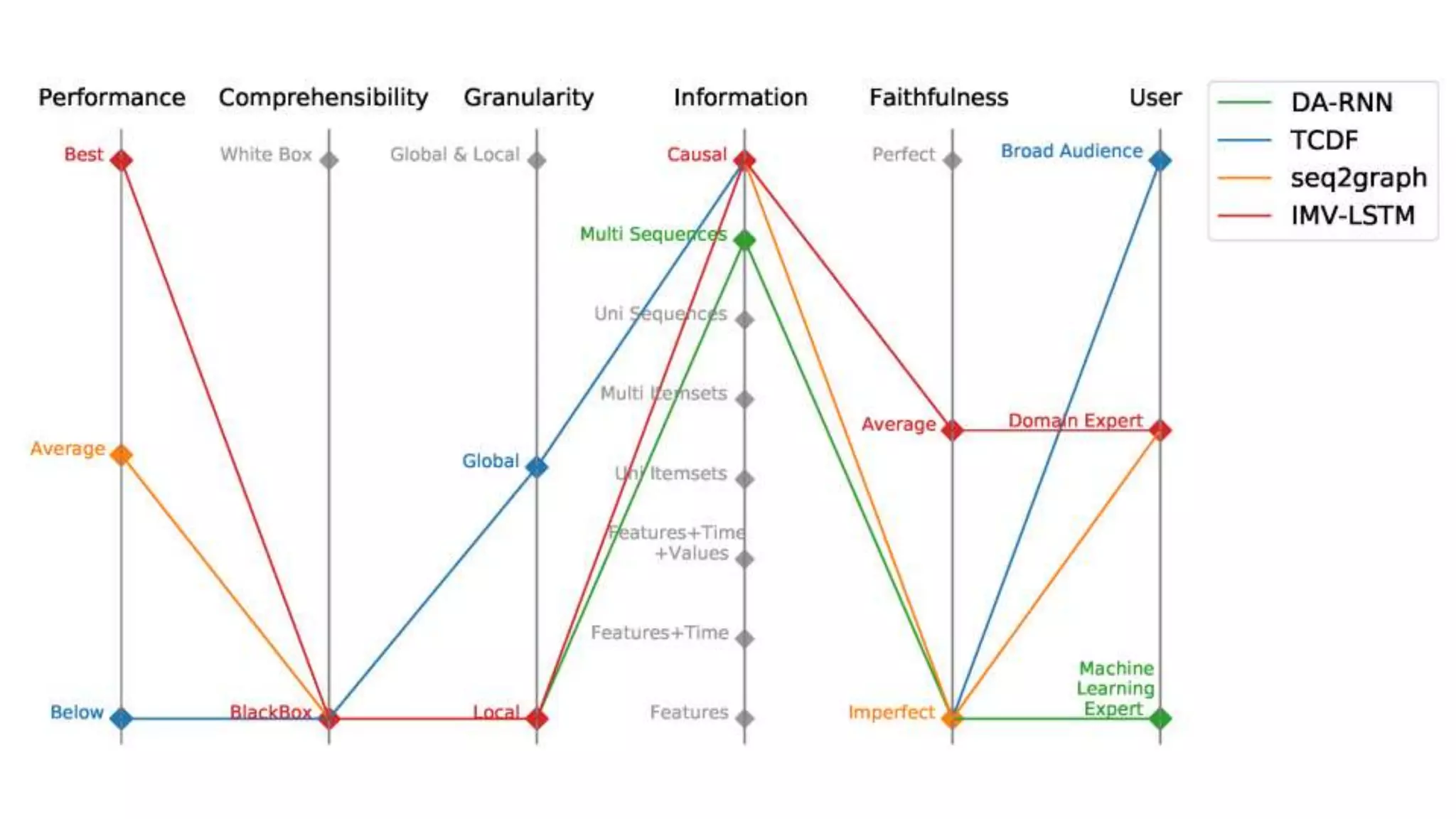

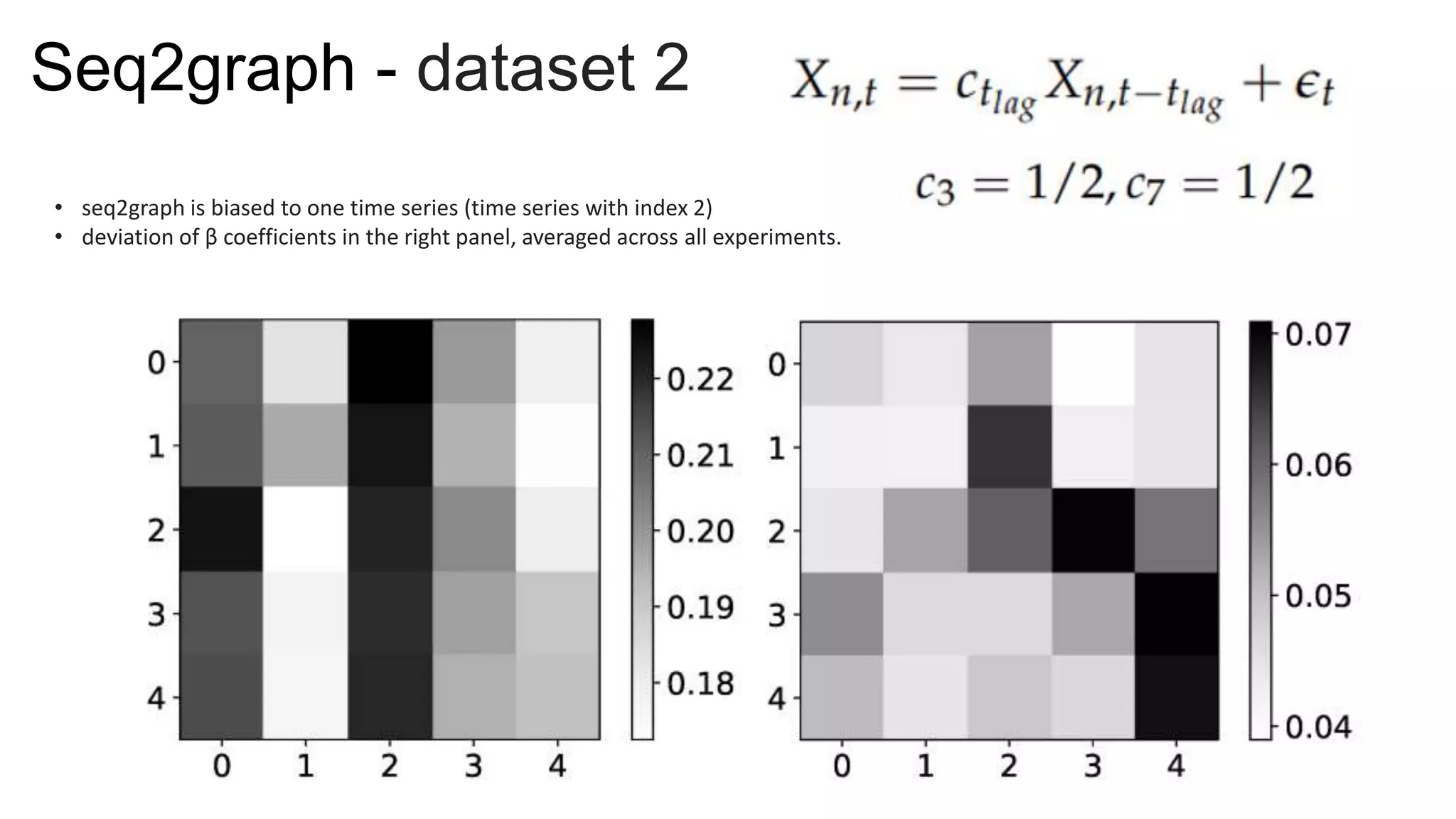

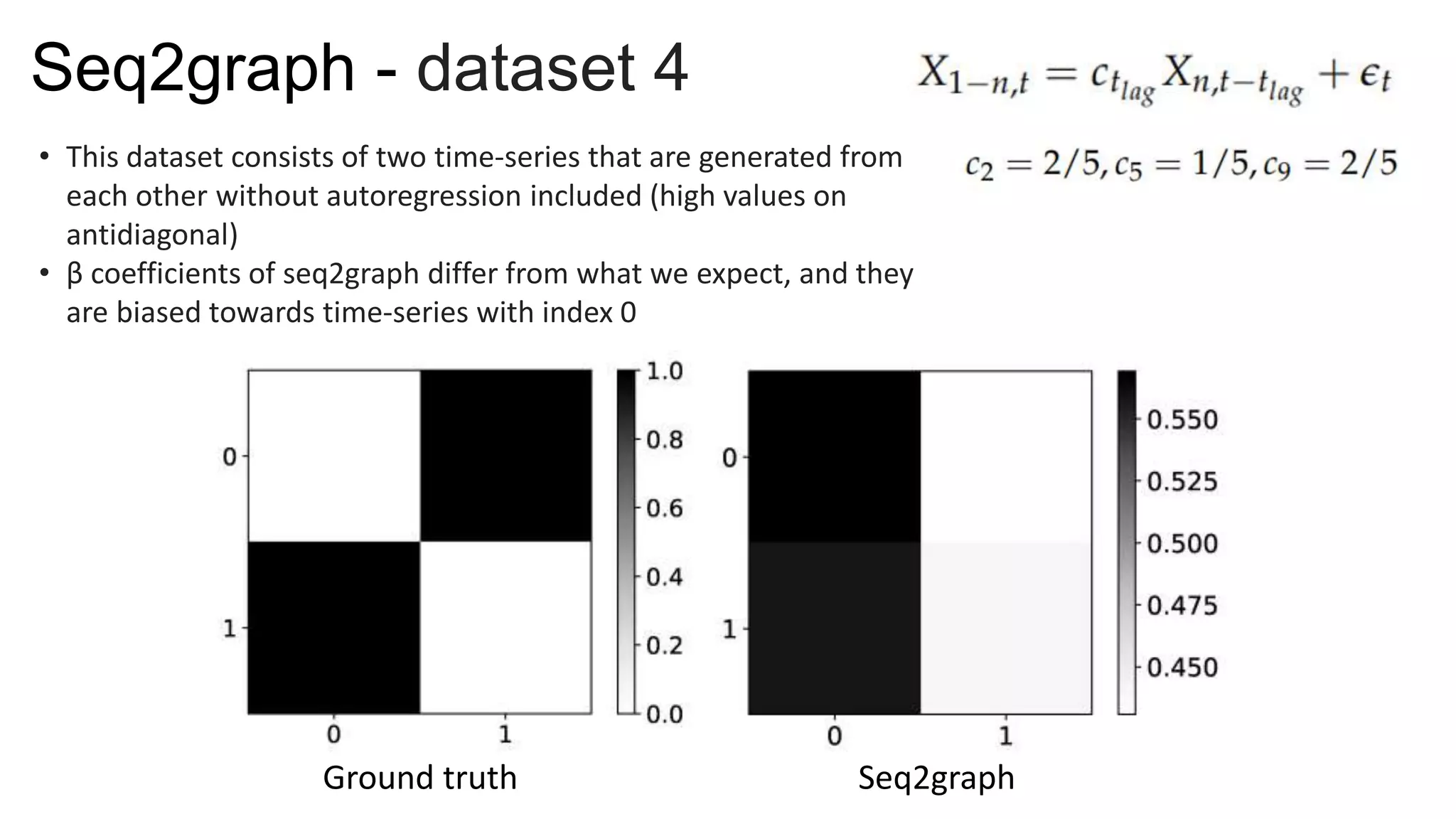

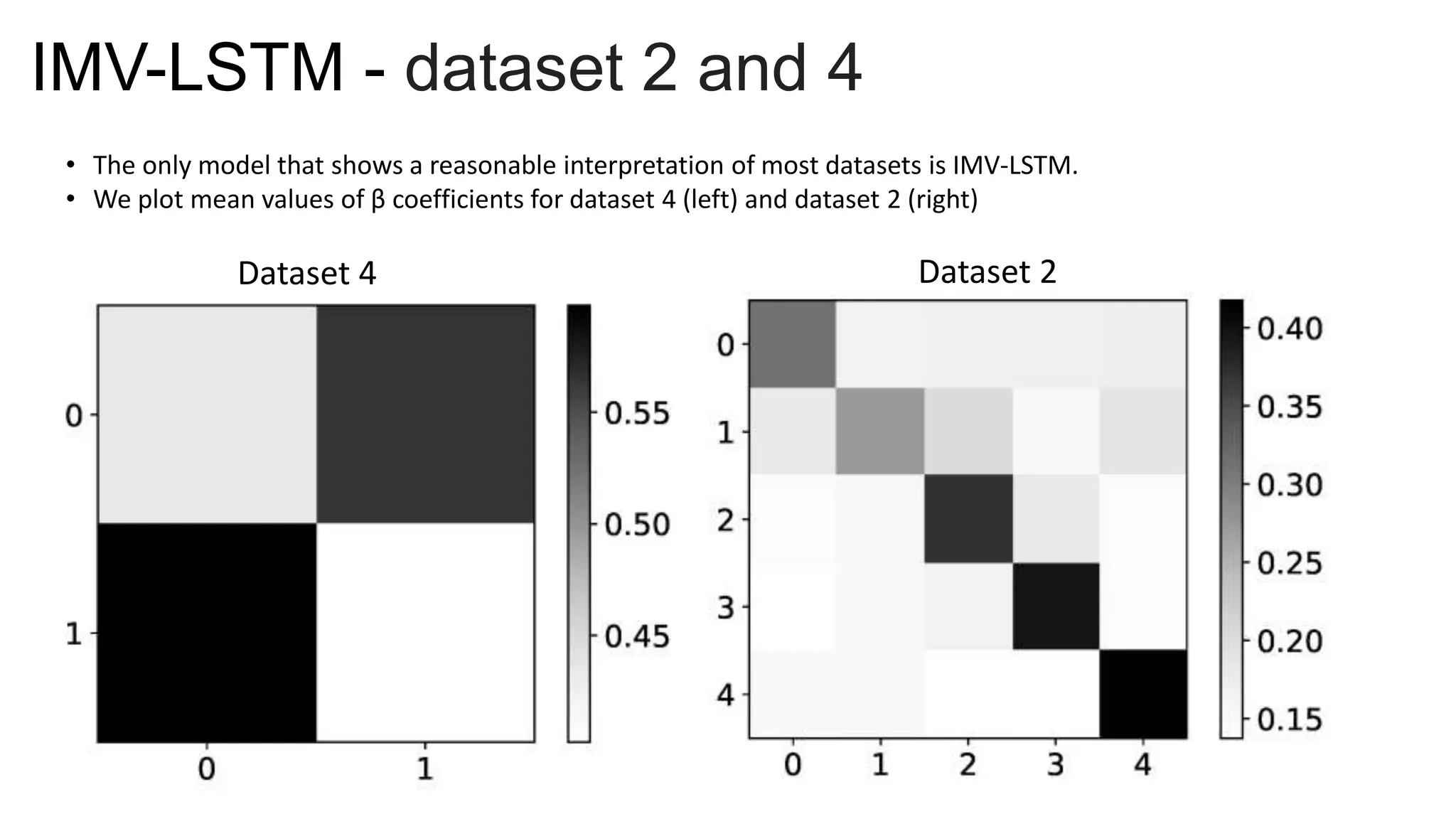

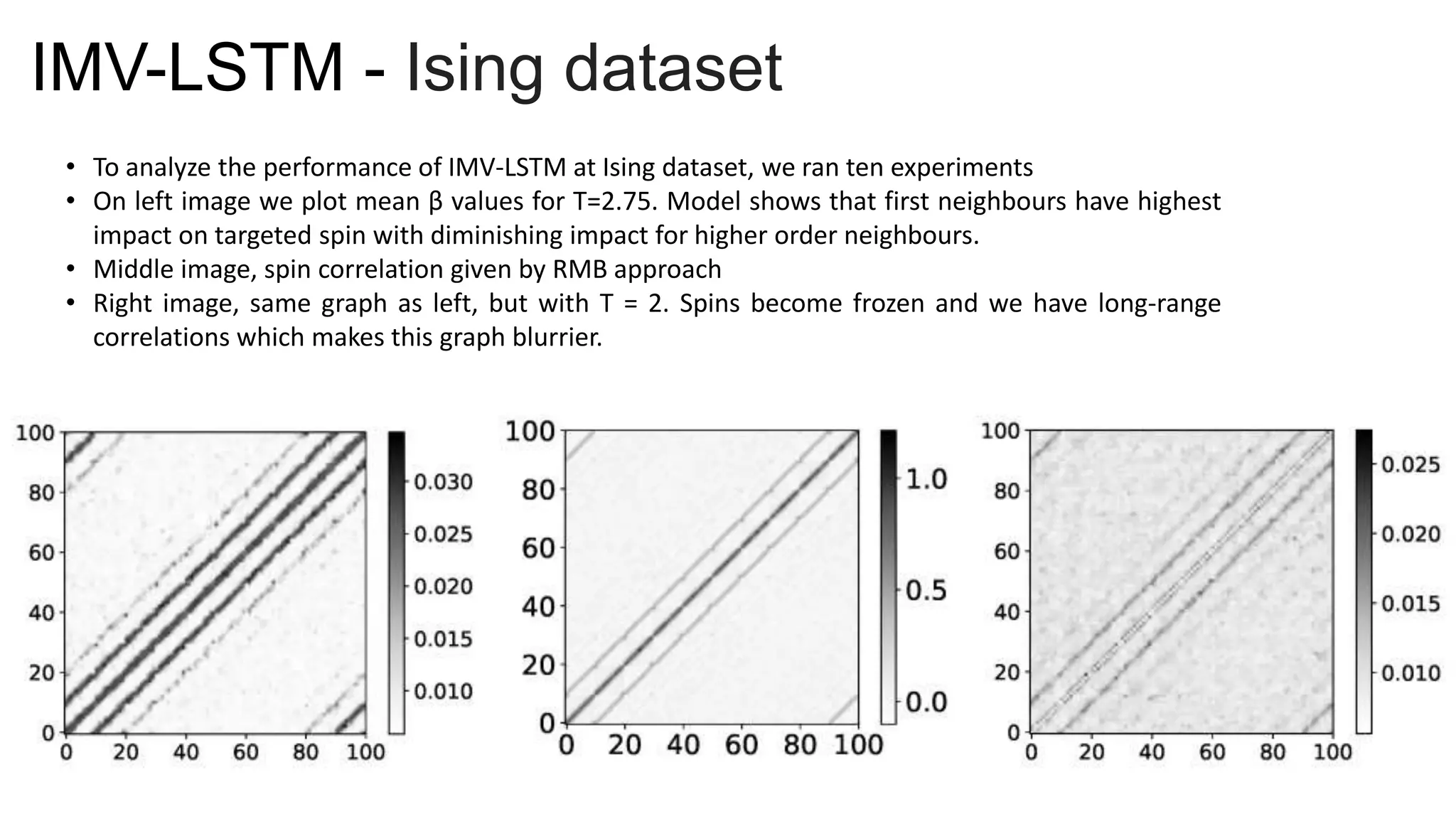

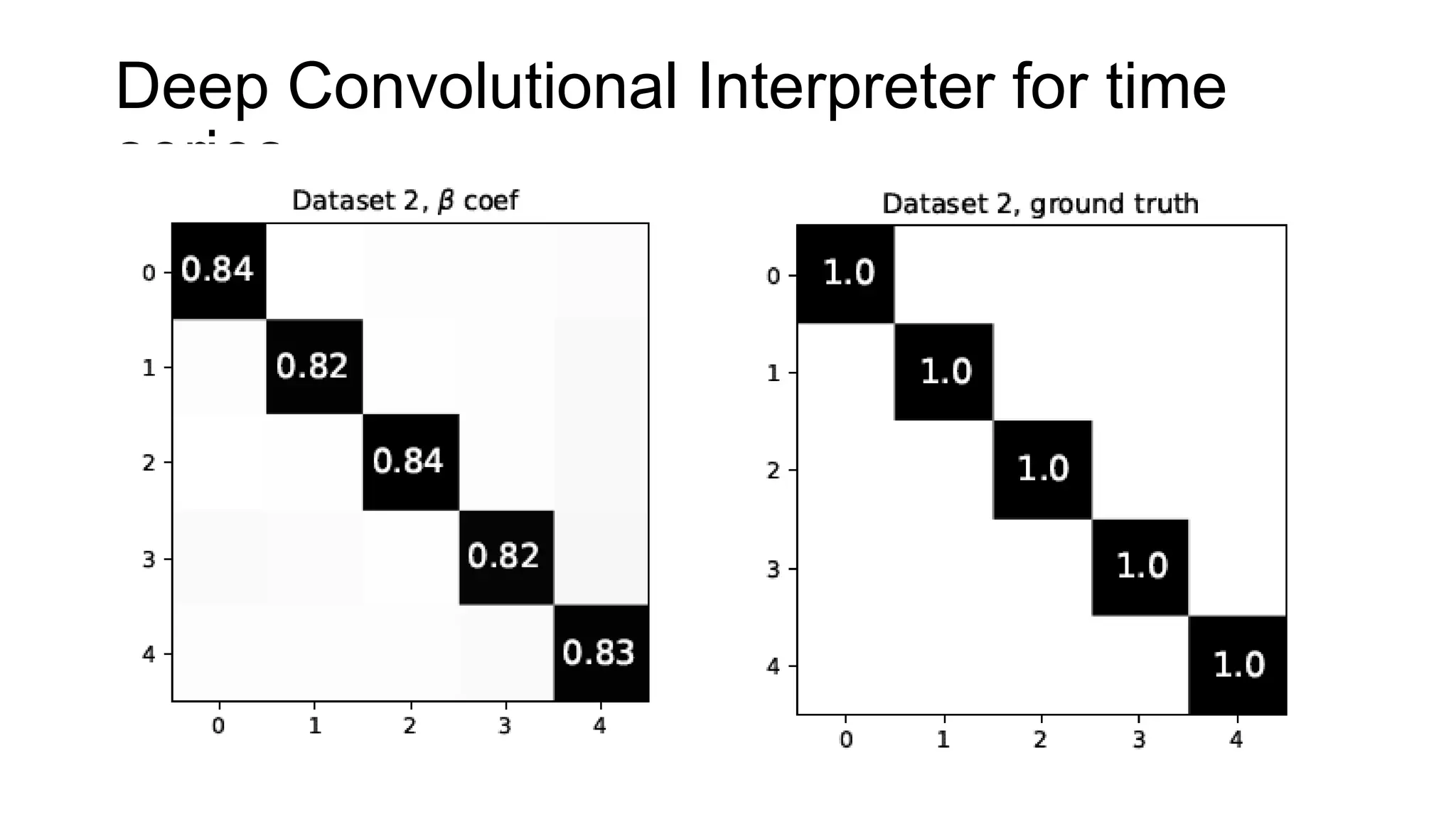

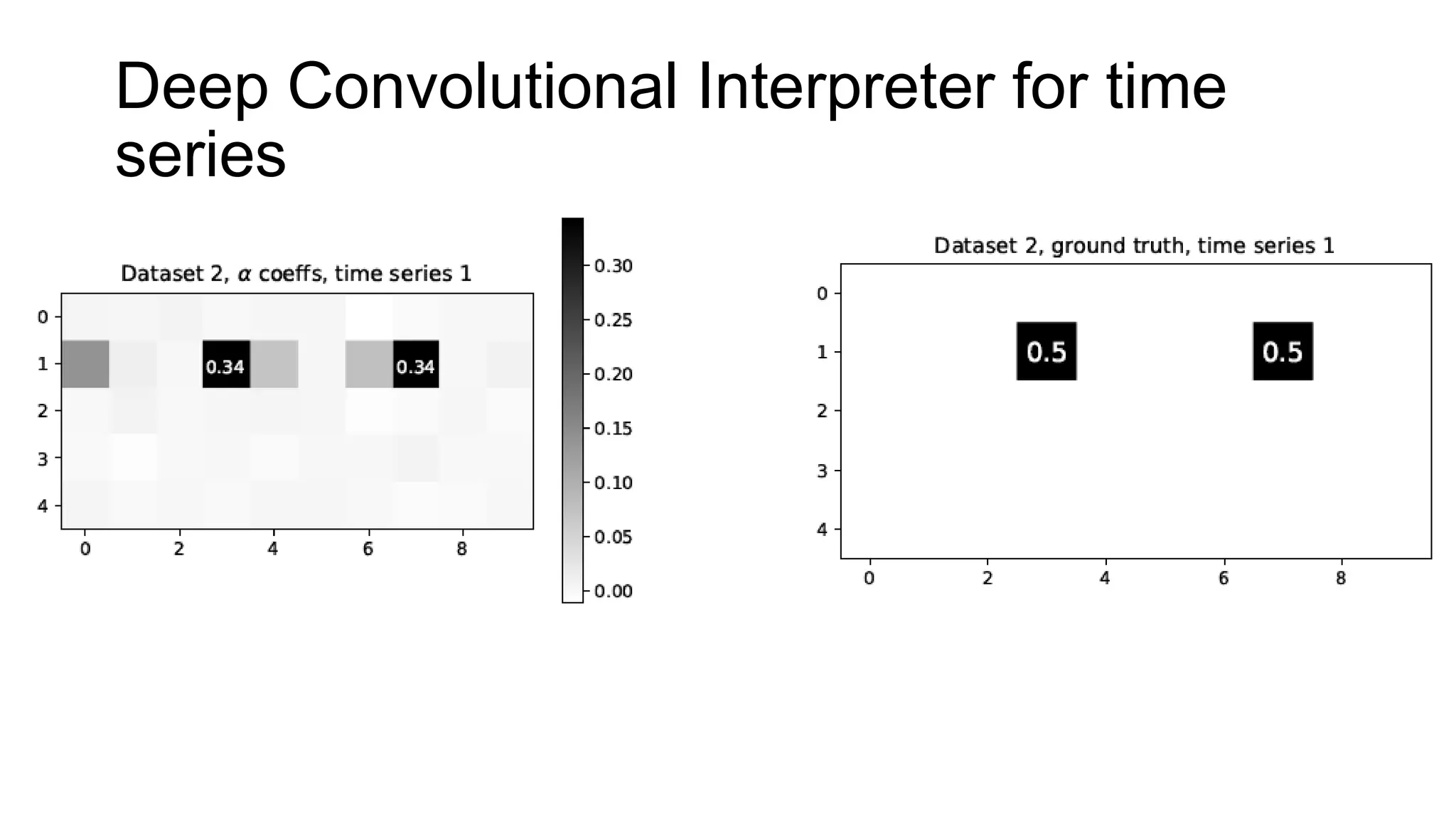

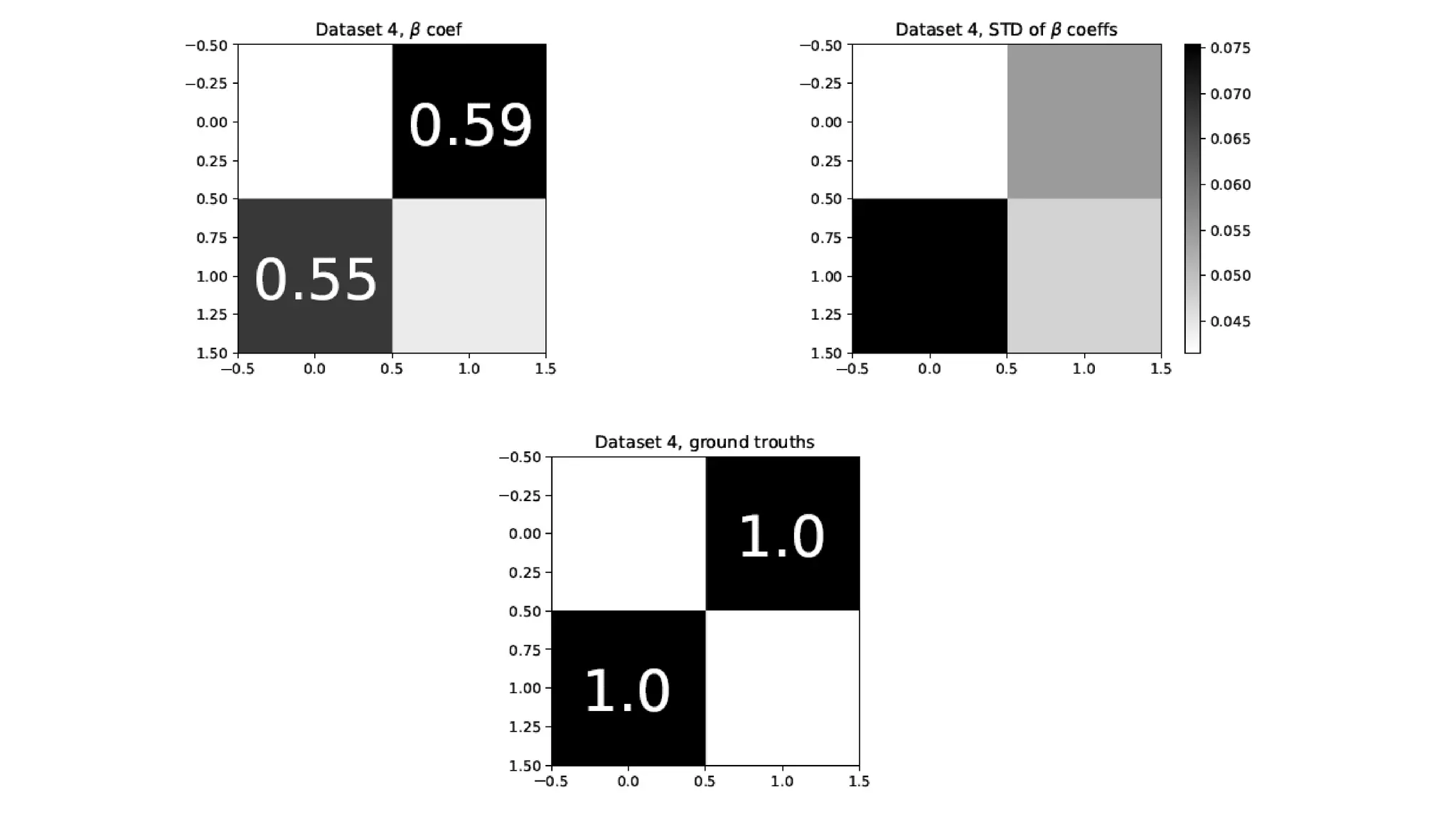

This document argues for human-centric explainable AI (HC-XAI) in multivariate time series analysis and forecasting, emphasizing that models should offer interpretations humans can understand. It reviews deep learning architectures and their interpretability, focusing on attention-based models, and outlines benchmarking criteria including performance, comprehensibility, and user accessibility. The results indicate that although prediction performance is often stable, many models fail to provide adequate interpretability, which exposes weaknesses in current attention-based approaches.