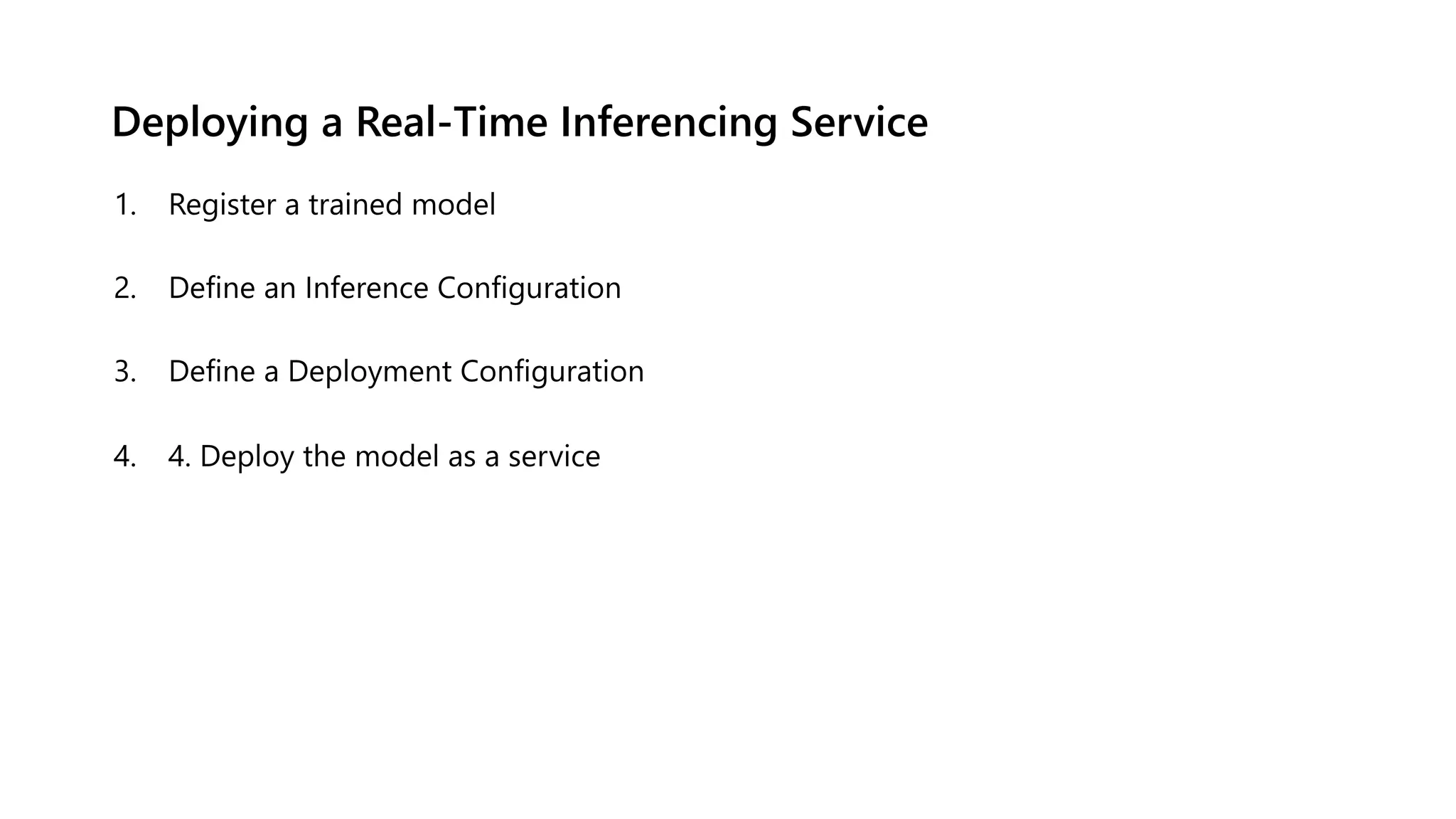

The document discusses machine learning inference and distinguishes batch from real-time methods. Batch inference generates predictions at scheduled intervals and stores the results for later use, while real-time inference returns predictions immediately for applications that need rapid responses. The document also provides best practices for implementing both methods in Azure Machine Learning, including compute options and deployment strategies.
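
To make the distinction concrete, here is a minimal, framework-agnostic sketch in plain Python. It deliberately does not use the Azure Machine Learning SDK. The names `toy_model`, `run_batch_job`, `handle_request`, and `prediction_store` are hypothetical and introduced only for illustration, and the "model" is a trivial stand-in, not a trained one.

```python
from typing import Callable, Dict, List

# Hypothetical stand-in for a trained model: any callable mapping features to a score.
Model = Callable[[List[float]], float]


def toy_model(features: List[float]) -> float:
    """Illustrative model (assumption): the mean of the input features."""
    return sum(features) / len(features)


def run_batch_job(model: Model, records: Dict[str, List[float]]) -> Dict[str, float]:
    """Batch inference: score every accumulated record in one run and
    return the predictions so they can be stored for later lookup."""
    return {record_id: model(features) for record_id, features in records.items()}


def handle_request(model: Model, features: List[float]) -> float:
    """Real-time inference: score a single request and return the result at once."""
    return model(features)


# Batch path: a scheduler (not shown) would trigger this at fixed intervals.
prediction_store = run_batch_job(toy_model, {"a": [1.0, 2.0, 3.0], "b": [4.0, 6.0]})
stored = prediction_store["a"]  # read later from storage, no model call needed

# Real-time path: the prediction is computed on demand for one input.
live = handle_request(toy_model, [10.0, 20.0])
```

In the batch path, consumers read precomputed values and never wait on the model. In the real-time path, each caller pays the model's latency but always gets a prediction for fresh input. In a managed service, the scheduling, storage, and request handling would be provided by the platform rather than written by hand as above.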