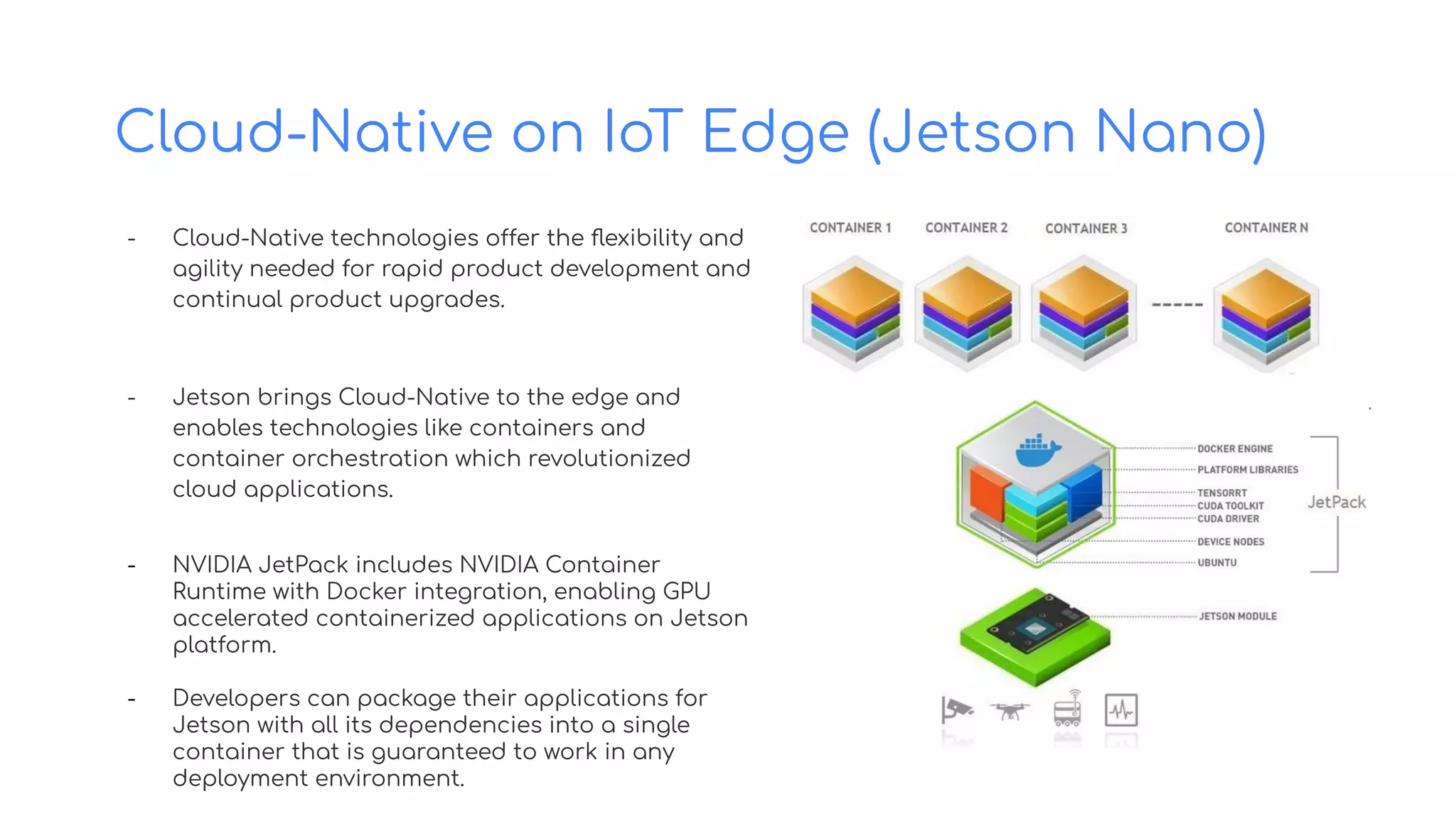

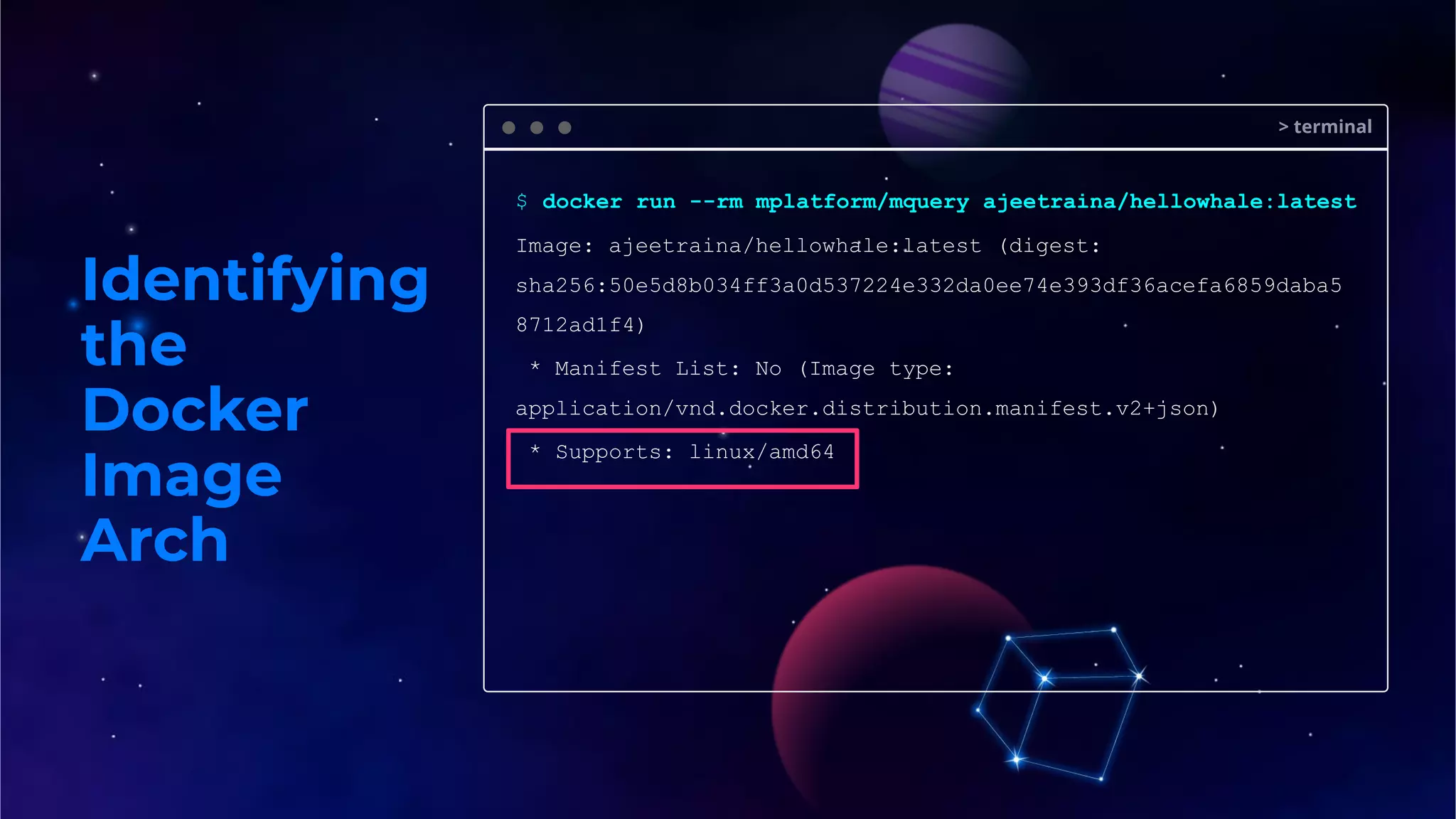

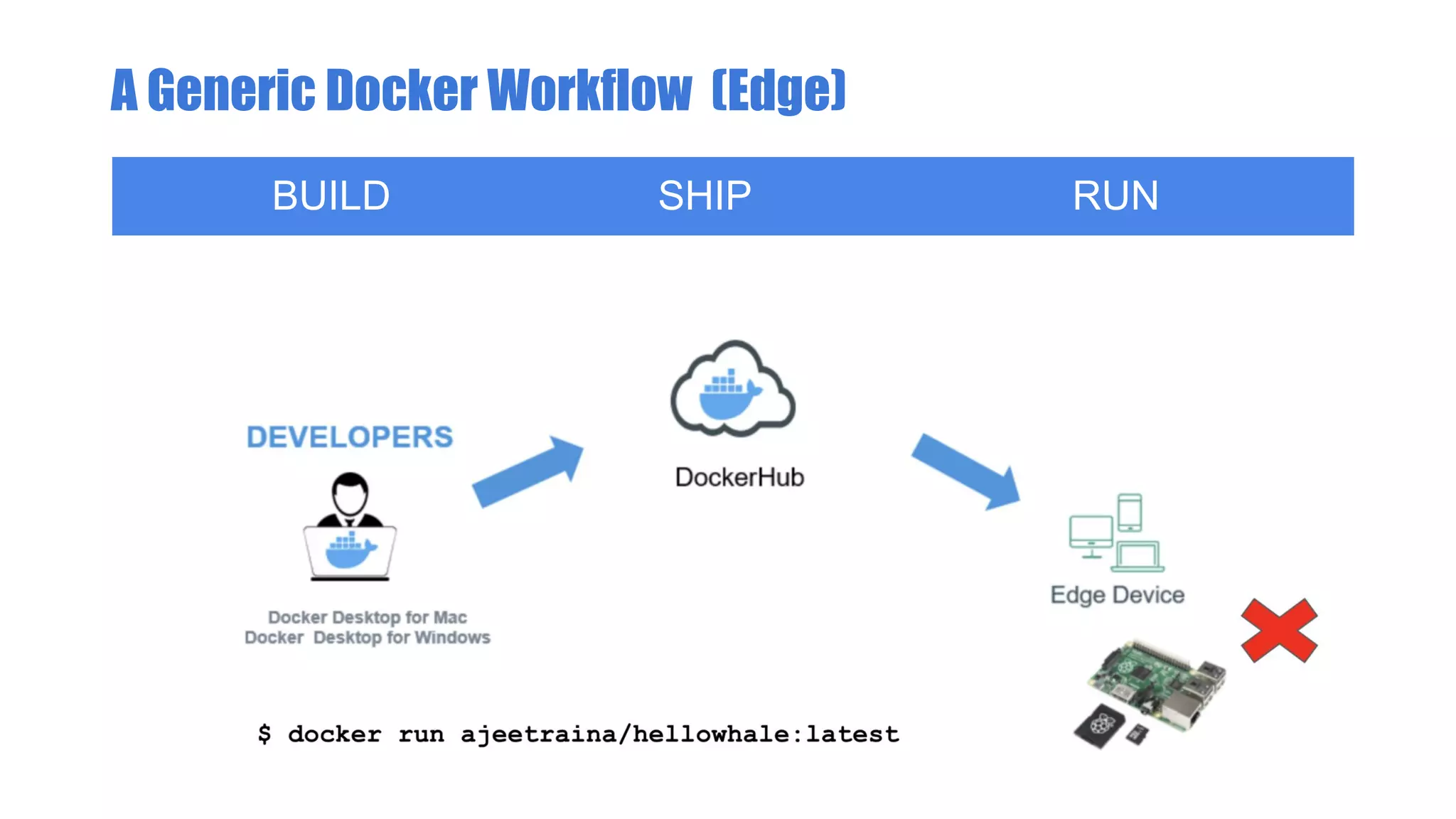

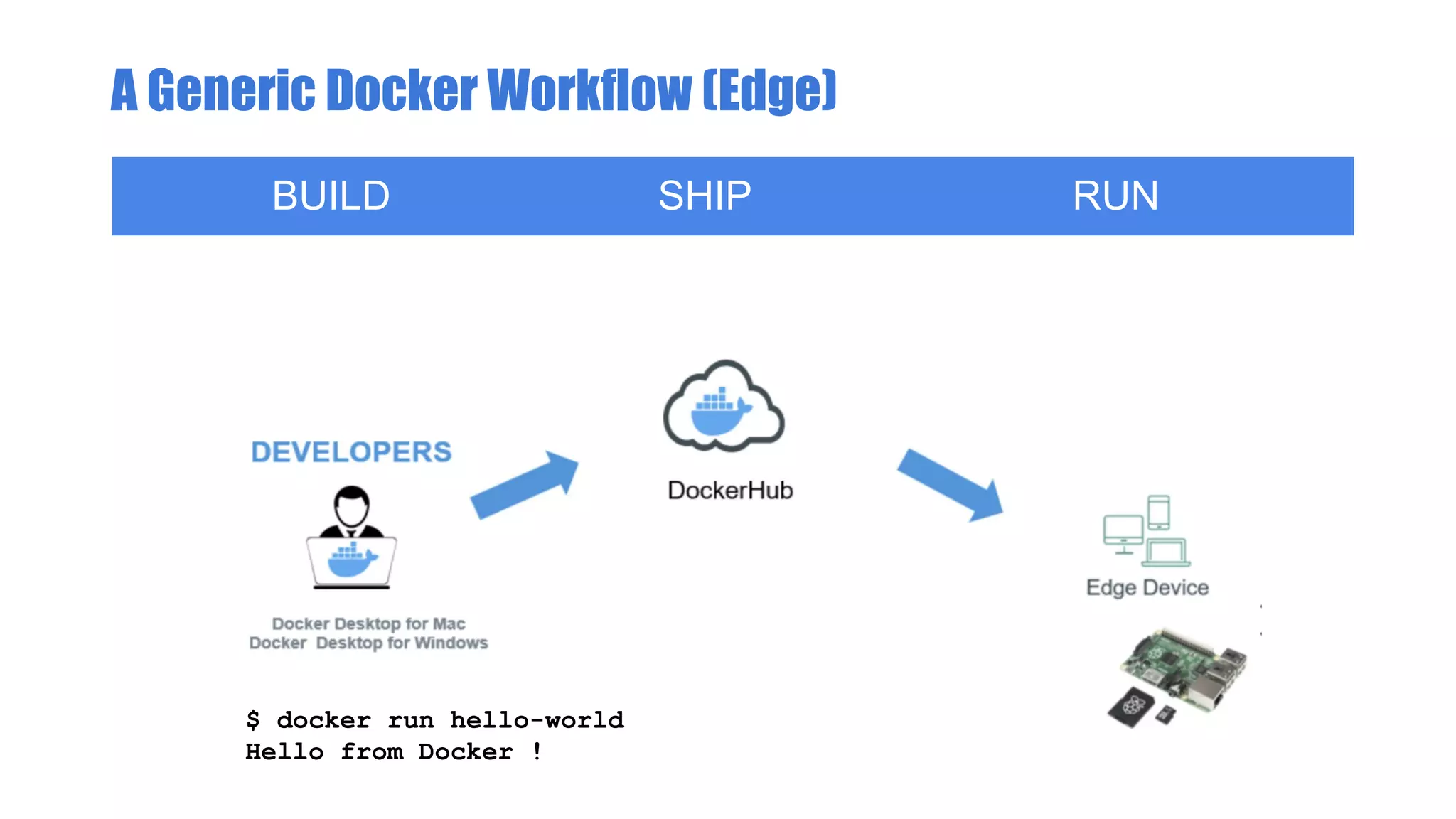

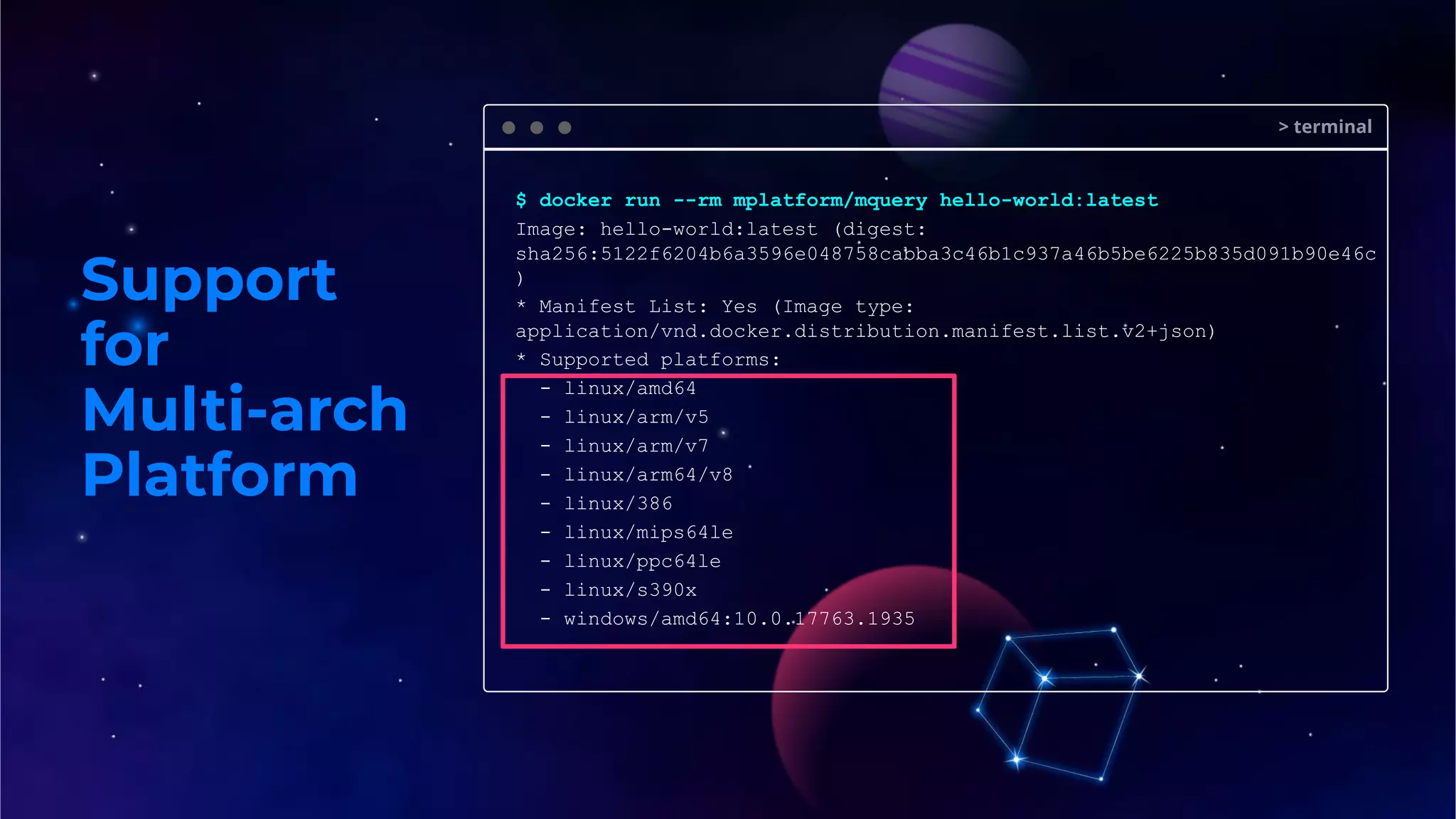

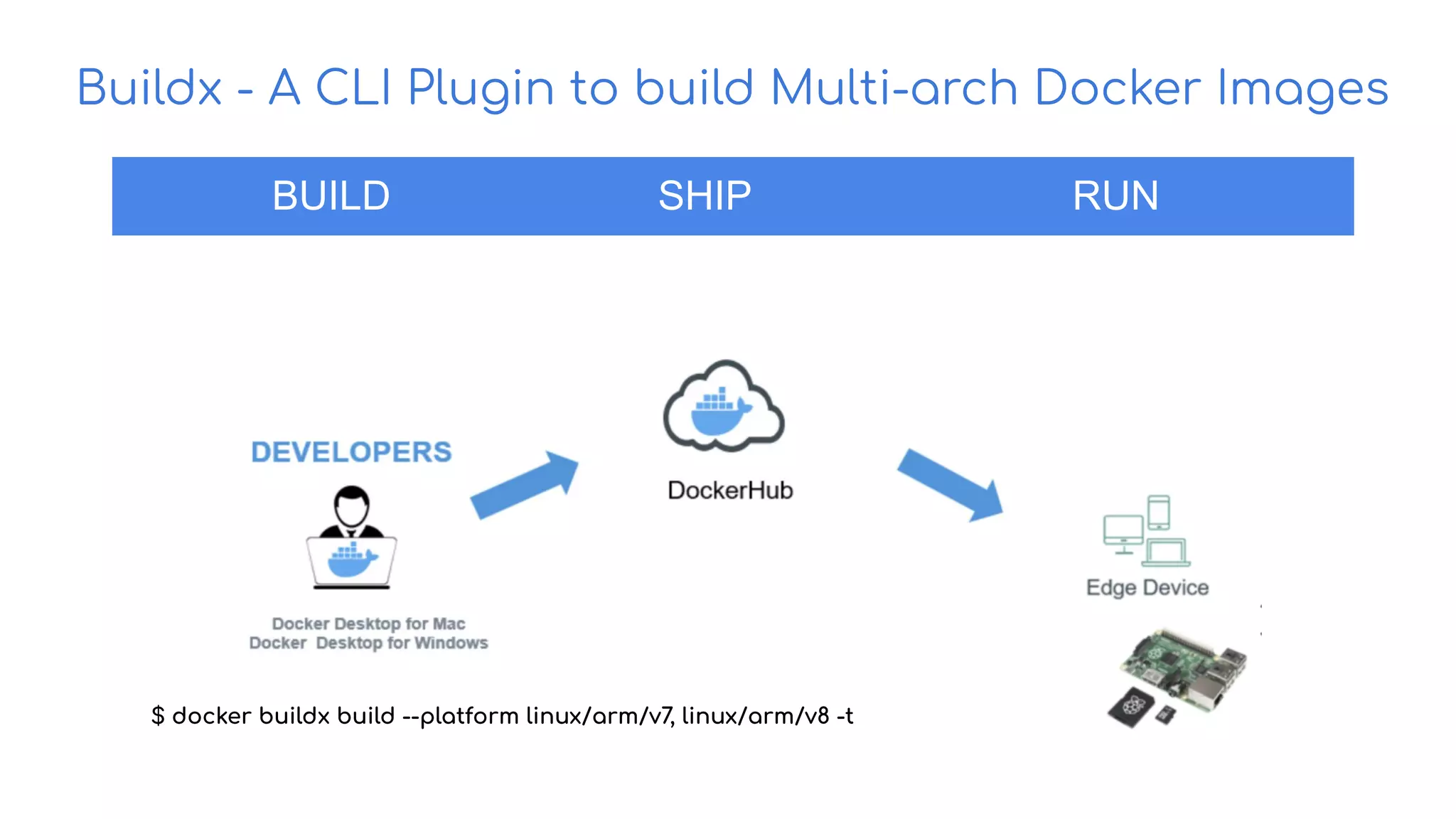

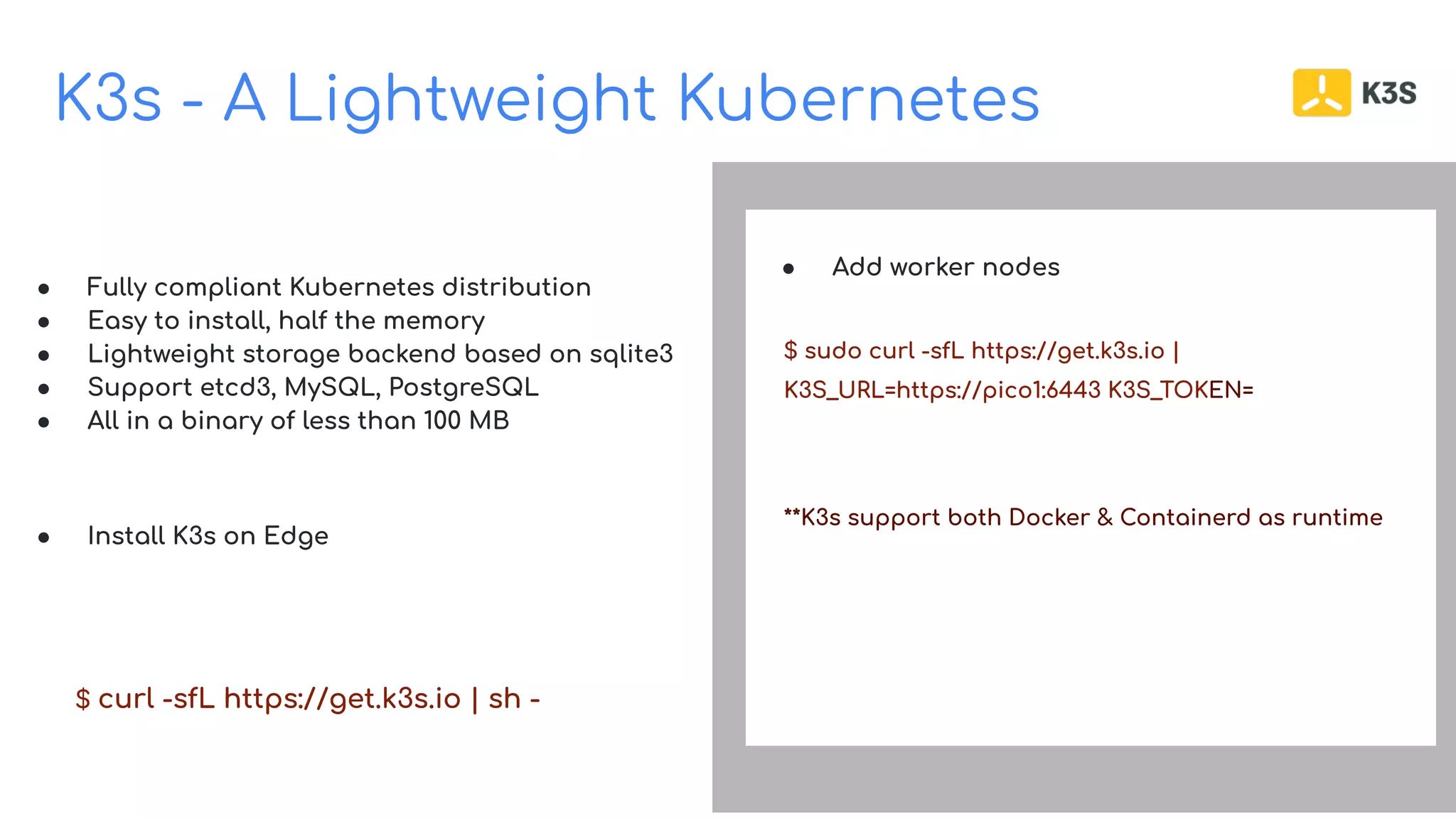

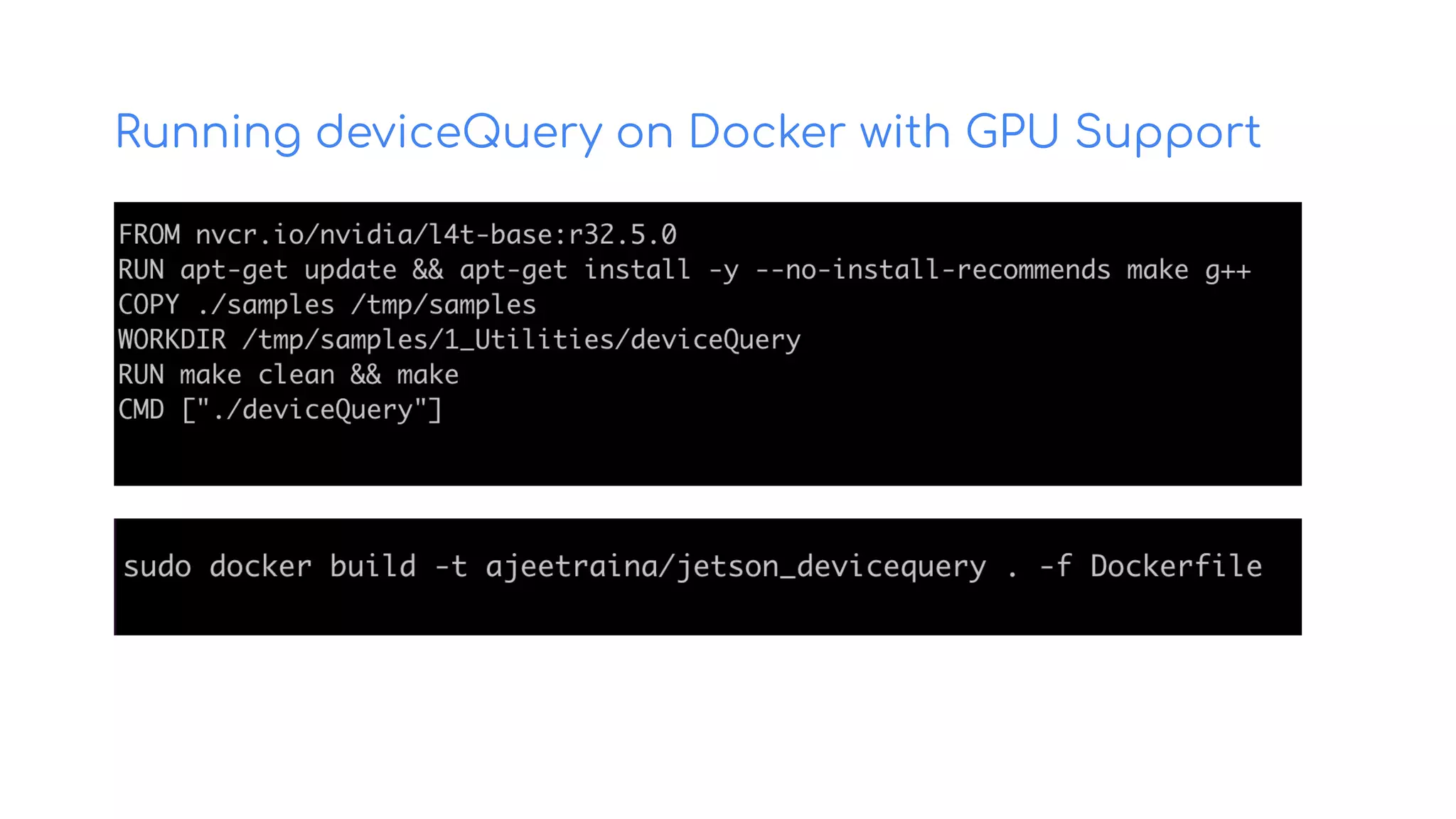

The document discusses integrating Docker and K3s with the NVIDIA Jetson Nano for IoT edge computing, highlighting the Nano's suitability for AI applications and GPU-accelerated containerized workloads. It provides installation instructions and deployment workflows, with example applications including a real-time mask detection system and an AI-powered payload delivery robot. It also covers the key features of K3s as a lightweight Kubernetes distribution and Docker's multi-architecture support.

![Enabling GPU access with Compose](https://image.slidesharecdn.com/meetupdeliveringcontainers-basedappstoiotedgedevices-210619053151/75/Delivering-Docker-K3s-worloads-to-IoT-Edge-devices-11-2048.jpg)

Compose services can define GPU device reservations. The example service `test` runs the `nvidia/cuda:10.2-base` image with the command `nvidia-smi`. Under `deploy.resources.reservations.devices`, it reserves one device (`count: 1`) with `driver: nvidia` and `capabilities: [gpu, utility]`.

Bringing up the GPU-enabled container with `docker-compose up` creates the network `gpu_default` and the container `gpu_test_1`, then attaches to it. The container prints the `nvidia-smi` table, which reports NVIDIA-SMI 450.80.02, driver version 450.80.02, and CUDA version 11.1.
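For reference, the service definition from the slide can be reassembled as a `docker-compose.yml`. This is a reconstruction from the slide text, not a file published with the talk. Device reservations need Compose 1.28.0 or later and the NVIDIA Container Toolkit on the host. The `nvidia/cuda:10.2-base` tag is taken from the slide and may no longer be published on Docker Hub.

```yaml
# docker-compose.yml, reconstructed from the slide
services:
  test:
    image: nvidia/cuda:10.2-base   # tag as shown on the slide; may need updating
    command: nvidia-smi            # prints the GPU/driver table and exits
    deploy:
      resources:
        reservations:
          devices:
            - driver: nvidia                 # request devices from the NVIDIA driver
              count: 1                       # reserve a single GPU
              capabilities: [gpu, utility]   # "utility" exposes tools such as nvidia-smi
```

Running `docker-compose up` (or `docker compose up` with Compose v2) in the same directory starts the container and streams the `nvidia-smi` output.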

![Support for Multi-arch Platform: Docker Compose v2.0](https://image.slidesharecdn.com/meetupdeliveringcontainers-basedappstoiotedgedevices-210619053151/75/Delivering-Docker-K3s-worloads-to-IoT-Edge-devices-22-2048.jpg)

With Docker Compose v2.0, invoked as `docker compose`, running `docker compose --project-name demo up` reports "[+] Running 3/4". The network `demo_default` is created (4.0s), the volume `demo_db_data` is created (0.0s), the container `demo_db_1` is started (2.2s), and the container `demo_wordpress_1` is starting.
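The slide shows only console output. A hypothetical `compose.yaml` consistent with the names in that output (project `demo`, services `db` and `wordpress`, volume `db_data`) might look like the sketch below. The image choices, credentials, and port mapping are assumptions. The per-service `platform` key illustrates pinning an architecture such as `linux/arm64`, which matches the Jetson Nano.

```yaml
# Hypothetical compose.yaml for the "demo" project; images and credentials are assumptions
services:
  db:
    image: mariadb:10.6            # assumed image; the official image publishes arm64 builds
    platform: linux/arm64          # request the arm64 variant of the image
    volumes:
      - db_data:/var/lib/mysql     # persist database files in the named volume "db_data"
    environment:
      MARIADB_ROOT_PASSWORD: example   # placeholder credentials, replace before real use
      MARIADB_DATABASE: wordpress
      MARIADB_USER: wordpress
      MARIADB_PASSWORD: example
  wordpress:
    image: wordpress:latest        # assumed image; also published for arm64
    platform: linux/arm64
    depends_on:
      - db                         # start the database container first
    ports:
      - "8000:80"                  # assumed host port for the WordPress site
    environment:
      WORDPRESS_DB_HOST: db        # service name doubles as the hostname on the project network
      WORDPRESS_DB_USER: wordpress
      WORDPRESS_DB_PASSWORD: example
      WORDPRESS_DB_NAME: wordpress
volumes:
  db_data: {}
```

With this file, `docker compose --project-name demo up` would create resources with the `demo_` prefix seen on the slide. Without the `platform` lines, Docker pulls the image variant that matches the host. Forcing `linux/arm64` on an x86 host requires QEMU emulation to be registered.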

![Mask Detection System running on Jetson Nano (Confidential)](https://image.slidesharecdn.com/meetupdeliveringcontainers-basedappstoiotedgedevices-210619053151/75/Delivering-Docker-K3s-worloads-to-IoT-Edge-devices-33-2048.jpg)

Requirements:

- A Jetson Nano Dev Kit running JetPack 4.4.1 or 4.5
- An external 5 V DC, 4 A power supply connected through the Dev Kit's barrel jack connector (J25), with barrel-jack power enabled (on the Dev Kit this requires a jumper on J48). This software makes full use of the GPU, so it will not run on USB power.
- A USB webcam attached to the Nano
- Another computer with a program that can display RTSP streams, such as VLC or QuickTime

Start the container on the Nano:

`sudo docker run --runtime nvidia --privileged --rm -it --env MASKCAM_DEVICE_ADDRESS=<your-jetson-ip> -p 1883:1883 -p 8080:8080 -p 8554:8554 maskcam/maskcam-beta`
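The `docker run` flags for the mask detection container can also be captured in a Compose file, so the service is started with `docker compose up`. This is a minimal sketch, assuming a Compose version that honors the `runtime` key. `<your-jetson-ip>` remains a placeholder that you must replace. `--rm` has no direct equivalent inside a service definition.

```yaml
# compose.yaml: sketch equivalent to the maskcam docker run command
services:
  maskcam:
    image: maskcam/maskcam-beta
    runtime: nvidia        # --runtime nvidia: use the NVIDIA container runtime for GPU access
    privileged: true       # --privileged
    stdin_open: true       # -i
    tty: true              # -t
    environment:
      MASKCAM_DEVICE_ADDRESS: "<your-jetson-ip>"   # placeholder: replace with the Nano's IP address
    ports:
      - "1883:1883"        # 1883 is the conventional MQTT port
      - "8080:8080"
      - "8554:8554"        # 8554 is a common RTSP port
```

Running `docker compose down` afterwards removes the container, which covers what `--rm` did. For a one-off run closer to the original command, `docker compose run --rm --service-ports maskcam` also publishes the listed ports.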

![CherryBot Systems - AI-Powered Payload Delivery System](https://image.slidesharecdn.com/meetupdeliveringcontainers-basedappstoiotedgedevices-210619053151/75/Delivering-Docker-K3s-worloads-to-IoT-Edge-devices-35-2048.jpg)

- AI-based payload delivery robot
- Low-cost autonomous robot system
- Equipped with an NVIDIA Jetson Nano board (a low-power AI computer deployed as the edge device), a sensor suite that includes multiple cameras and GPS, and swappable batteries
- Uses deep learning to correctly interpret the data gathered by its sensors and to make decisions that keep delivery fast, safe, and cost-efficient
- Identifies objects and people and detects obstacles so that it can avoid collisions safely and reliably

![CherryBot Systems - AI-Powered Payload Delivery System](https://image.slidesharecdn.com/meetupdeliveringcontainers-basedappstoiotedgedevices-210619053151/75/Delivering-Docker-K3s-worloads-to-IoT-Edge-devices-36-2048.jpg)

![CherryBot Systems: food delivery, swag distribution, medicine delivery](https://image.slidesharecdn.com/meetupdeliveringcontainers-basedappstoiotedgedevices-210619053151/75/Delivering-Docker-K3s-worloads-to-IoT-Edge-devices-37-2048.jpg)