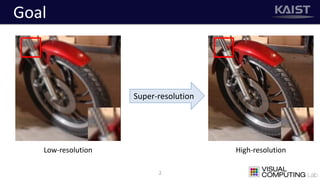

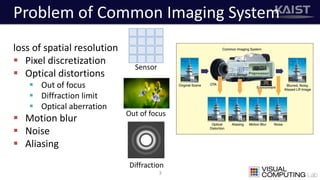

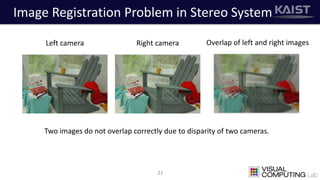

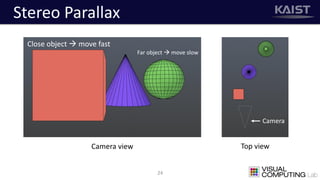

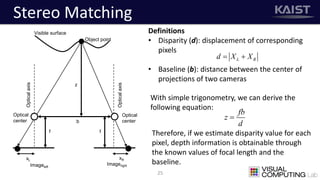

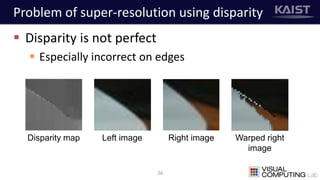

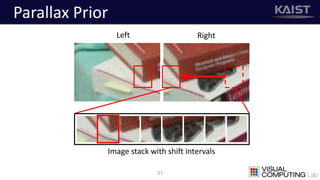

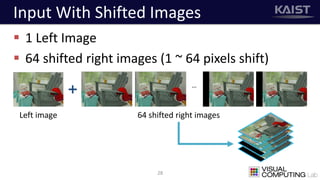

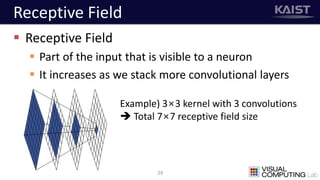

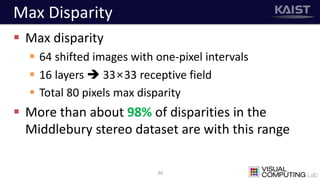

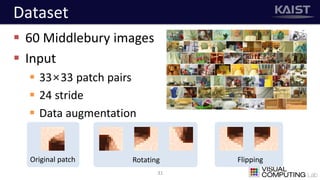

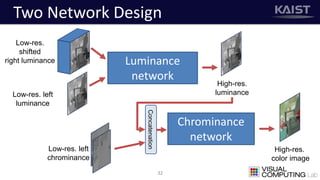

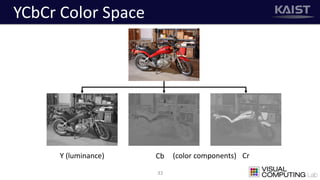

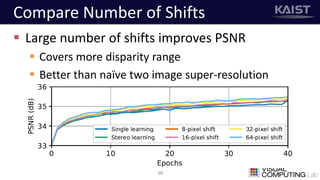

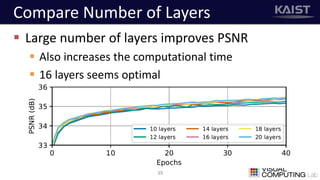

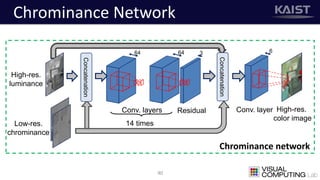

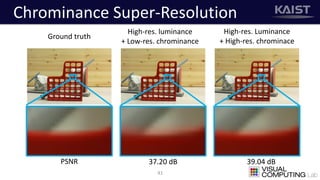

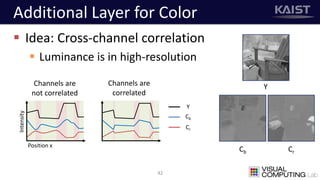

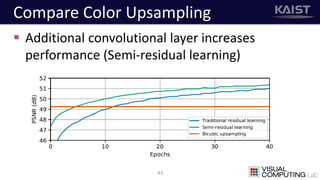

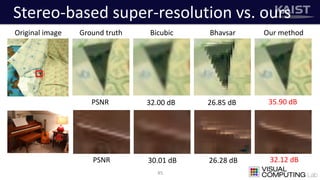

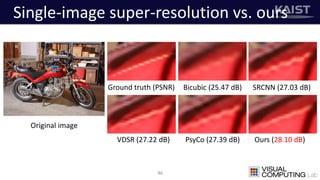

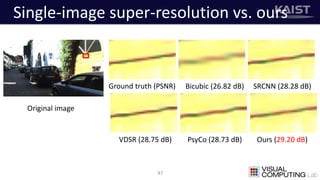

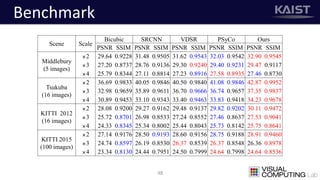

The document presents a deep learning approach to stereo super-resolution that uses a parallax prior to enhance the spatial resolution of stereo images. The method addresses common imaging system issues and reconstructs high-resolution images without computing disparity directly. The reported results indicate that it outperforms both traditional techniques and existing state-of-the-art approaches in stereo imaging applications.
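
The summary does not say how the network sees parallax without a disparity map. One common way to do this, shown here only as a minimal sketch and not as the document's confirmed method, is to stack the left view with several horizontally shifted copies of the right view and let the network learn which shift matches at each pixel. The function name `shifted_stack`, the `max_shift` parameter, and the assumption of a rectified grayscale pair are all hypothetical choices made for illustration.

```python
import numpy as np

def shifted_stack(left, right, max_shift):
    """Stack the left view with horizontally shifted copies of the right view.

    left, right: 2-D arrays of shape (H, W), assumed to be a rectified pair.
    Returns an array of shape (max_shift + 1, H, W): channel 0 is the left
    view, channel s (1..max_shift) is the right view shifted by s pixels.
    """
    if left.shape != right.shape:
        raise ValueError("left and right views must have the same shape")
    channels = [left]
    for s in range(1, max_shift + 1):
        shifted = np.zeros_like(right)
        # Move content toward larger column indices; columns with no source stay zero.
        shifted[:, s:] = right[:, :-s]
        channels.append(shifted)
    return np.stack(channels, axis=0)

# Hypothetical toy input: a 3x4 pair with identical views.
left = np.arange(12, dtype=np.float32).reshape(3, 4)
right = left.copy()
stack = shifted_stack(left, right, max_shift=2)
```

A stack like this would be fed to a convolutional network as a multi-channel input, so no explicit disparity estimate is computed at any stage. The shift range is a design choice that would have to cover the largest disparity expected in the data.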