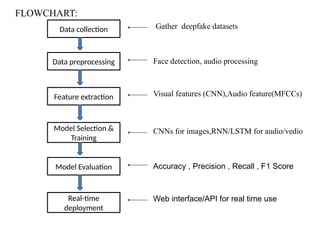

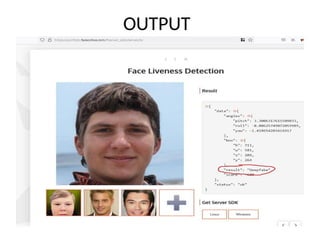

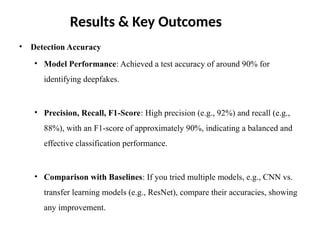

The document outlines a summer internship project at Dhanalakshmi Srinivasan College that develops an AI-based deepfake detection system using machine learning. The project aims to identify manipulated media accurately in order to combat misinformation, strengthen the integrity of digital content, and support applications in cybersecurity and media verification. It details the methodology, covering data collection, preprocessing, feature extraction, model training, and performance evaluation. The resulting model reaches a test accuracy of around 90% in detecting deepfakes.