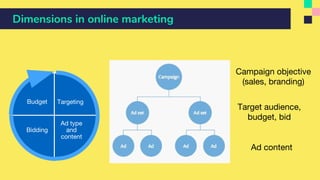

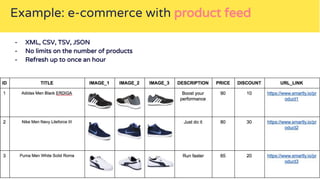

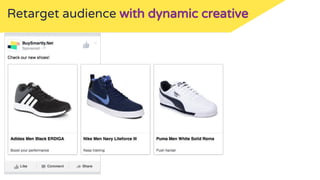

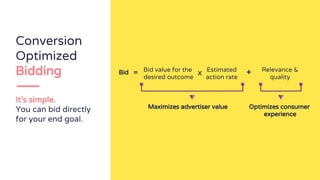

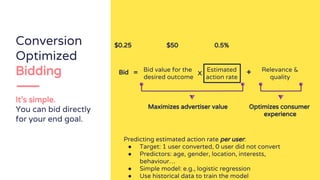

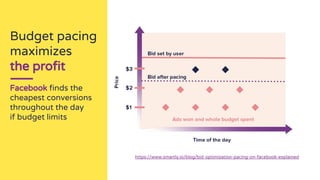

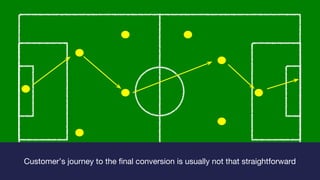

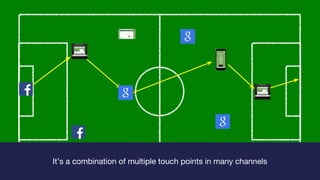

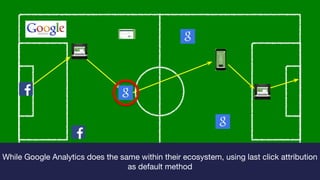

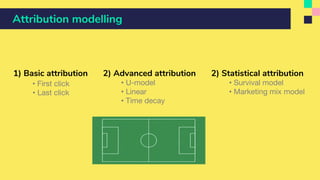

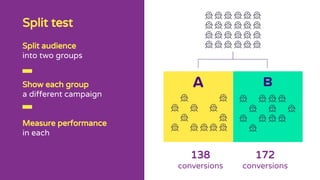

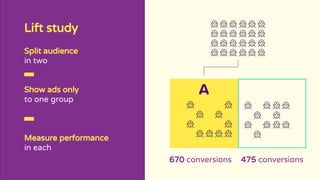

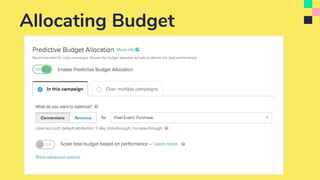

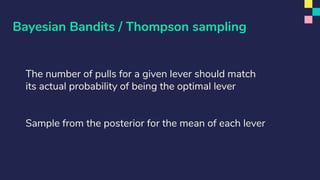

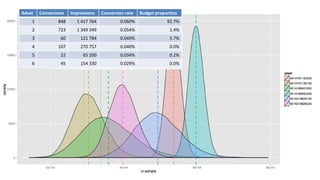

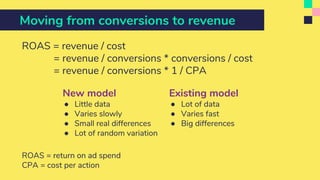

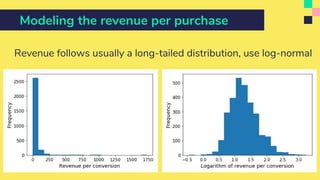

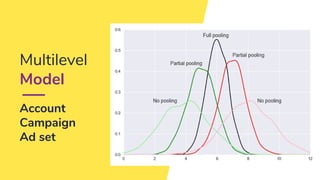

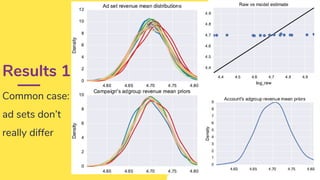

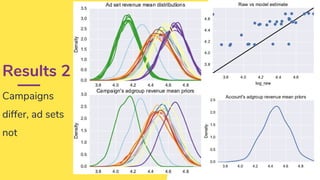

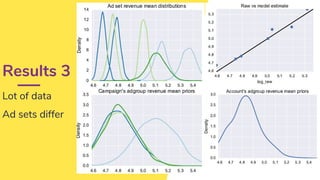

The document covers data science applications in online marketing, focusing on campaign optimization, tracking, attribution, and budget allocation. It stresses the importance of analyzing customer journeys, testing strategies, and using statistical models to improve ad performance. It also discusses predictive budget allocation and why advertising effectiveness is difficult to measure.