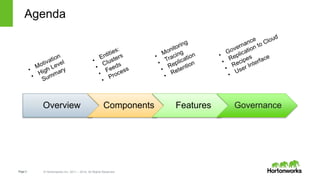

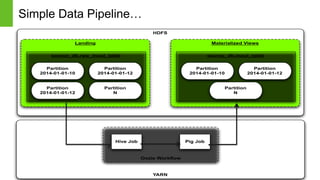

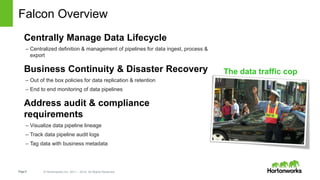

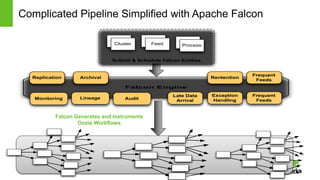

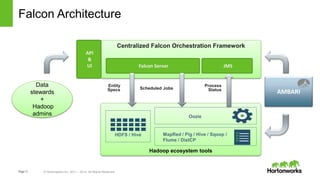

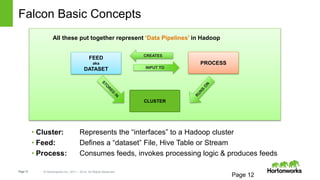

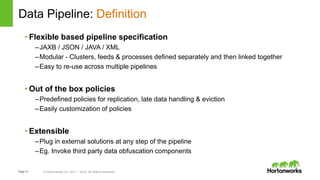

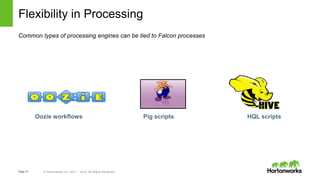

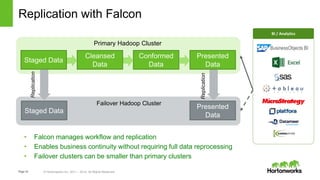

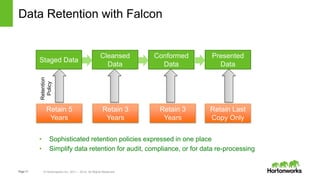

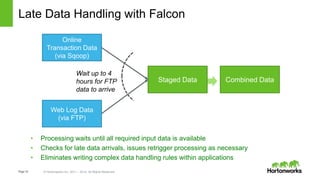

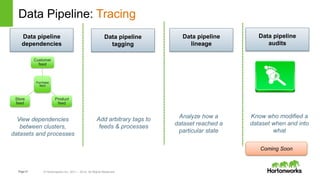

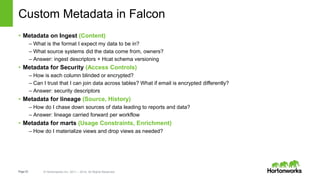

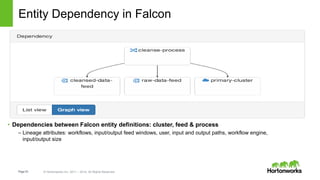

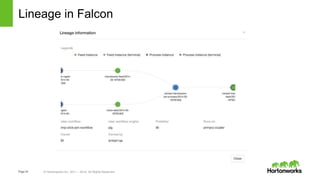

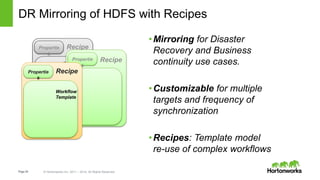

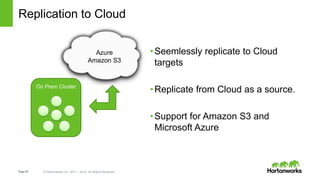

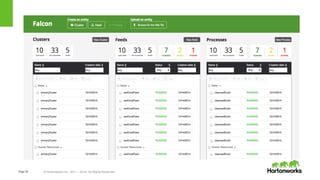

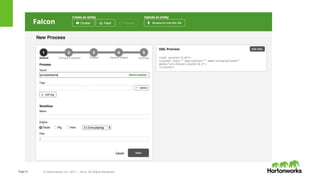

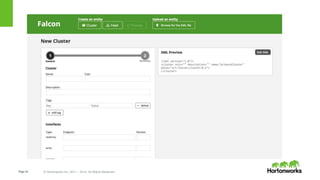

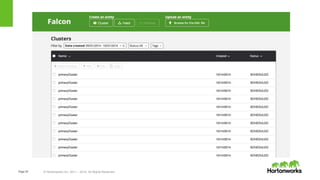

Apache Falcon is a data management platform for centrally managing data lifecycles across Hadoop clusters. Users declare three kinds of entities (clusters, feeds, and processes) that together describe a data pipeline. From these definitions and their attached policies for replication, retention, and late-data handling, Falcon automatically generates the workflows that orchestrate data movement. It also provides data governance features such as lineage tracing, auditing, and tagging. The latest version of Falcon adds capabilities for disaster-recovery mirroring and for replication to cloud storage services.
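
To make the idea of policy-driven lifecycle management concrete, here is a minimal Python sketch of how a retention limit and a late-data cut-off might be evaluated for the instances of a feed. It is an illustration only, not Falcon's API: the `FeedPolicy` class, its fields, and both helper functions are hypothetical names, and the exact semantics of the late-data window are an assumption.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class FeedPolicy:
    """Hypothetical, simplified stand-in for a feed's policy on one cluster."""
    cluster: str
    retention: timedelta    # instances older than this become eligible for eviction
    late_cutoff: timedelta  # assumed window in which late-arriving data is still accepted


def instances_to_evict(policy, instance_times, now):
    """Return the feed instance times that fall outside the retention window."""
    return [t for t in instance_times if now - t > policy.retention]


def is_late_but_accepted(policy, instance_time, arrival_time):
    """True if data arrived after its instance time but within the late cut-off."""
    delay = arrival_time - instance_time
    return timedelta(0) < delay <= policy.late_cutoff


if __name__ == "__main__":
    policy = FeedPolicy("primary", timedelta(days=3), timedelta(hours=6))
    now = datetime(2024, 1, 10)
    instances = [datetime(2024, 1, day) for day in (5, 7, 9)]
    print(instances_to_evict(policy, instances, now))
    print(is_late_but_accepted(policy, datetime(2024, 1, 9), datetime(2024, 1, 9, 4)))
```

In a real deployment these rules would be declared in the feed entity rather than coded by hand; the sketch only shows the kind of decision that the generated workflows automate.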