1) Automated decision-making systems are often opaque and can encode hidden biases, but human decisions are also biased. When groups have different base rates, no imperfect classifier can achieve equal positive predictive value (PPV), equal false positive rates (FPR), and equal false negative rates (FNR) across those groups at the same time; at least one of these parity conditions must fail.

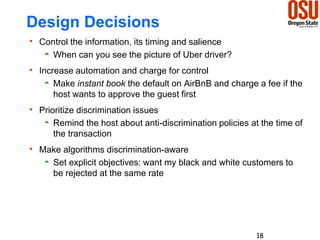
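The impossibility result in point 1 can be made concrete with an identity that follows directly from the confusion-matrix definitions. For base rate $p$, $TP = (1 - FNR)\,p N$ and $FP = FPR\,(1-p) N$, so $PPV = TP/(TP+FP)$ rearranges to $FPR = \frac{p}{1-p}\cdot\frac{1-PPV}{PPV}\cdot(1-FNR)$. The minimal sketch below assumes two hypothetical groups (the names, base rates, and the shared PPV/FNR values are illustrative, not taken from any study) and shows that holding PPV and FNR equal forces the FPRs apart whenever the base rates differ.

```python
def implied_fpr(base_rate: float, ppv: float, fnr: float) -> float:
    """Return the false positive rate forced by the base rate once PPV and FNR are fixed.

    Derived from TP = (1 - FNR) * p * N, FP = FPR * (1 - p) * N, PPV = TP / (TP + FP).
    """
    if not (0 < base_rate < 1 and 0 < ppv <= 1 and 0 <= fnr <= 1):
        raise ValueError("base_rate must be in (0, 1), ppv in (0, 1], fnr in [0, 1]")
    return (base_rate / (1 - base_rate)) * ((1 - ppv) / ppv) * (1 - fnr)


# Hypothetical groups: identical PPV and FNR, different base rates.
shared_ppv, shared_fnr = 0.7, 0.2
for name, p in [("group A", 0.3), ("group B", 0.5)]:
    fpr = implied_fpr(p, shared_ppv, shared_fnr)
    print(f"{name}: base rate={p:.2f}, implied FPR={fpr:.3f}")
# group A: base rate=0.30, implied FPR=0.147
# group B: base rate=0.50, implied FPR=0.343
```

Equalizing the FPRs instead would require the groups' PPV or FNR to differ; only a perfect predictor (PPV = 1, FNR = 0, hence FPR = 0) escapes the trade-off.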

2) Studies have documented discrimination on online platforms: on Airbnb, booking requests from guests with distinctively Black-sounding names were less likely to be accepted by hosts, and on Google, searches for Black-sounding names were more likely to display ads suggesting an arrest record.

3) Gerrymandering, drawing electoral district boundaries to benefit one party, can violate the equal treatment of voters, but defining and preventing partisan bias in redistricting is challenging, as natural