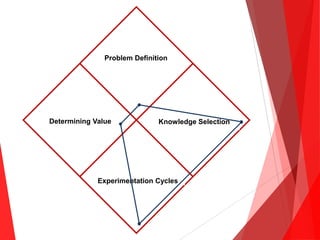

The document analyzes the crowdsourcing approach to innovation through the example of the Netflix Prize, an open competition in which Netflix solicited outside solutions for improving its recommendation algorithm. The contest produced extensive collaboration among participants and a measurable result: a 10.05% improvement over Netflix's existing algorithm. The implementation, however, faced challenges, including misalignment with the company's goals and a contest that ran for nearly three years. Key takeaways include the need to set clearer goals and to manage collaboration actively in order to maximize the benefits of crowdsourcing in R&D.
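The headline figure is a relative error reduction. The Netflix Prize scored entries by root-mean-square error (RMSE) on held-out ratings and expressed progress as a percentage reduction relative to Netflix's existing algorithm. The sketch below illustrates only that arithmetic. The function names and the toy ratings are hypothetical and are not contest data.

```python
import math


def rmse(predicted, actual):
    """Root-mean-square error between two equal-length rating sequences."""
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("need two non-empty sequences of equal length")
    squared = sum((p - a) ** 2 for p, a in zip(predicted, actual))
    return math.sqrt(squared / len(actual))


def percent_improvement(baseline_rmse, new_rmse):
    """Relative RMSE reduction of a new model over a baseline, in percent."""
    return 100.0 * (baseline_rmse - new_rmse) / baseline_rmse


# Hypothetical toy data (NOT Netflix Prize data): true ratings on a 1-5 scale
actual = [4, 3, 5, 2, 4]
baseline_pred = [3.5, 3.5, 4.0, 3.0, 3.5]  # stand-in for the incumbent algorithm
new_pred = [4.0, 3.0, 4.5, 2.5, 3.5]       # stand-in for a contest entry

base = rmse(baseline_pred, actual)
new = rmse(new_pred, actual)
print(f"baseline RMSE={base:.4f}, new RMSE={new:.4f}, "
      f"improvement={percent_improvement(base, new):.2f}%")
```

Because the score is relative, a 10% improvement means the new model's RMSE is 90% of the baseline's, whatever the baseline's absolute value.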