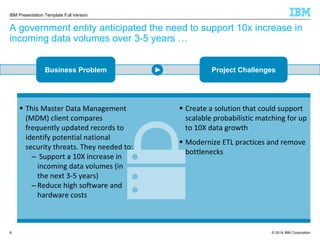

The document outlines the challenges of, and requirements for, successful big data integration. It emphasizes that 80% of the development effort in big data projects goes into integration rather than analysis. It highlights the limitations of Hadoop and MapReduce for data integration tasks and argues that organizations need scalable, efficient solutions such as IBM's InfoSphere Information Server. Several case studies illustrate how companies reduced costs and improved capabilities by replacing hand-coded integration with IBM's data integration solutions.