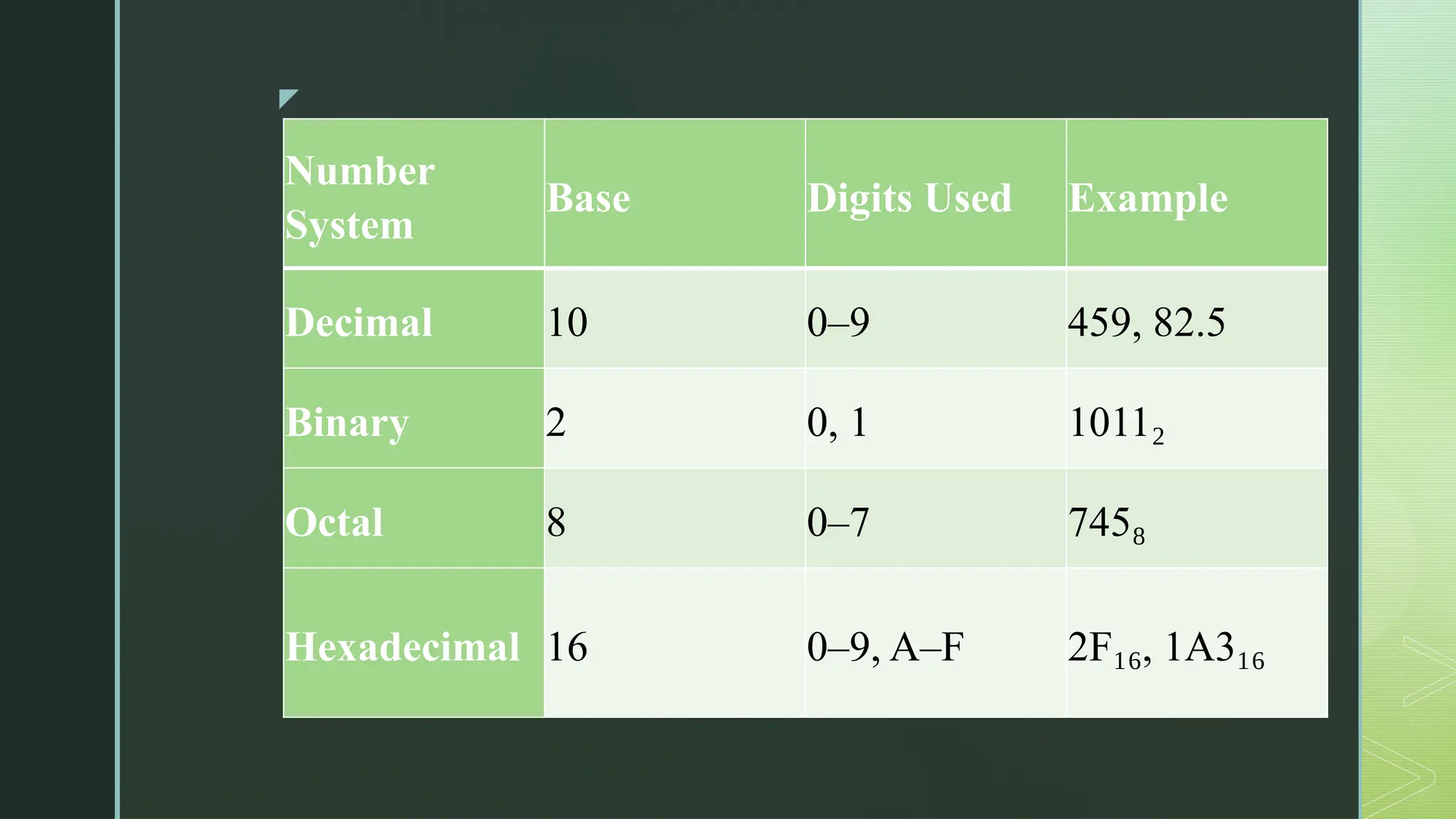

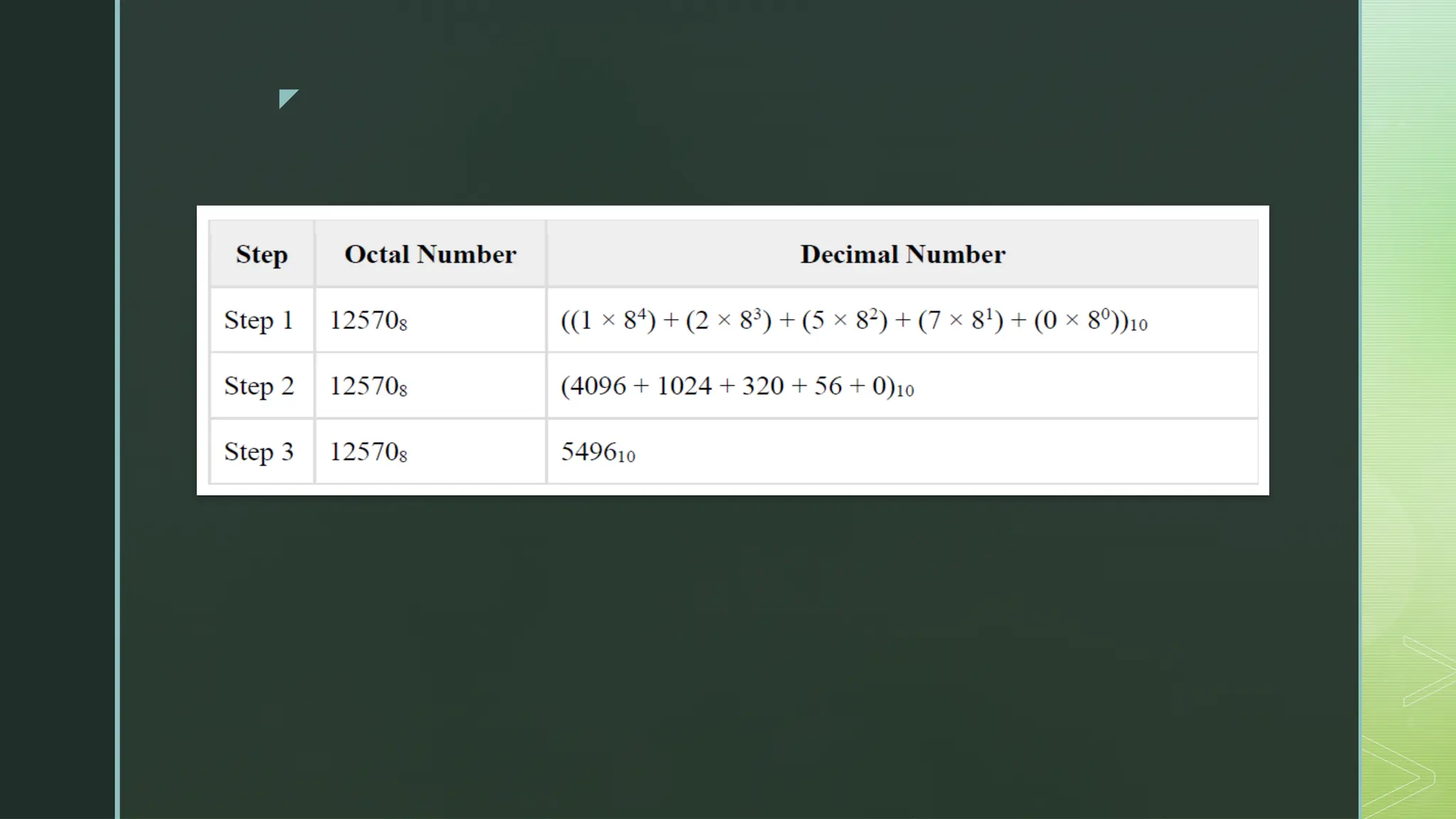

Digital Logic and Computer Architecture are foundational areas of computer science and electronics that underpin the design, development, and analysis of digital systems and computing machines. At the heart of digital logic lies the binary number system, which uses only two digits, 0 and 1, to represent and process all types of data. Computers rely on binary because digital electronic devices are most reliable when operated as two-state (bistable) elements, with each state mapped to a distinct voltage level. Octal and hexadecimal serve as compact notations for binary values, while decimal remains the form most familiar to humans. Logic gates (AND, OR, NOT, NAND, NOR, XOR, and XNOR) are the basic building blocks of digital circuits, each implementing a specific Boolean function. Gates are combined to form combinational circuits such as adders, multiplexers, encoders, and decoders, whose outputs depend solely on the current inputs. Boolean algebra and simplification techniques such as Karnaugh maps and the Quine-McCluskey method are used to minimize logic expressions, which reduces hardware complexity.

Sequential circuits add memory elements to combinational logic, so their outputs depend on the stored state as well as the current inputs. These elements include flip-flops, registers, and counters, which makes sequential circuits essential for timing, control, and memory functions. Flip-flops of the SR, D, JK, and T types are used to build higher-level components such as registers and synchronous and asynchronous counters, which are critical for timekeeping and control applications.
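To make the step from gates to combinational circuits concrete, here is a minimal sketch of a full adder built from AND, OR, and XOR, then chained into a ripple-carry adder. The function names (`full_adder`, `ripple_carry_add`) and the default 4-bit width are illustrative choices, not taken from the text; bits are modeled as Python integers 0 and 1.

```python
# Basic gates modeled as functions on single bits (0 or 1).
def AND(a, b):
    return a & b

def OR(a, b):
    return a | b

def XOR(a, b):
    return a ^ b

def full_adder(a, b, carry_in):
    """Add three bits and return (sum_bit, carry_out)."""
    partial = XOR(a, b)
    sum_bit = XOR(partial, carry_in)
    # A carry is produced if both inputs are 1, or if exactly one
    # input is 1 and there is an incoming carry.
    carry_out = OR(AND(a, b), AND(partial, carry_in))
    return sum_bit, carry_out

def ripple_carry_add(x, y, width=4):
    """Add two unsigned integers by chaining full adders, LSB first.

    Returns (result, carry_out); result is truncated to `width` bits.
    """
    carry = 0
    result = 0
    for i in range(width):
        a = (x >> i) & 1
        b = (y >> i) & 1
        s, carry = full_adder(a, b, carry)
        result |= s << i
    return result, carry
```

For example, `ripple_carry_add(5, 6)` returns `(11, 0)`, while `ripple_carry_add(9, 8)` returns `(1, 1)`: the 4-bit result wraps around and the final carry signals the overflow. The output depends only on the current inputs, which is what makes the circuit combinational.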

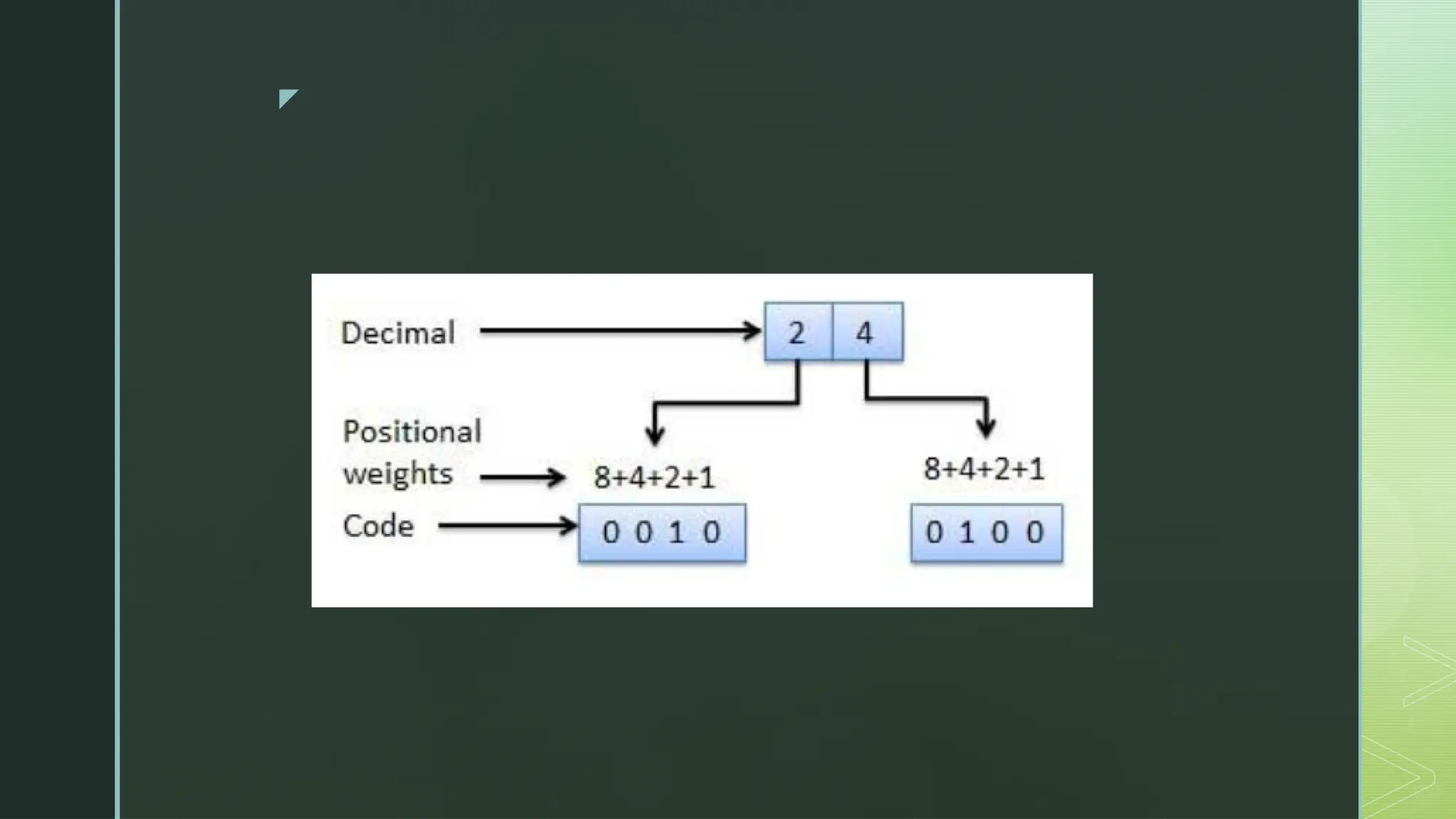

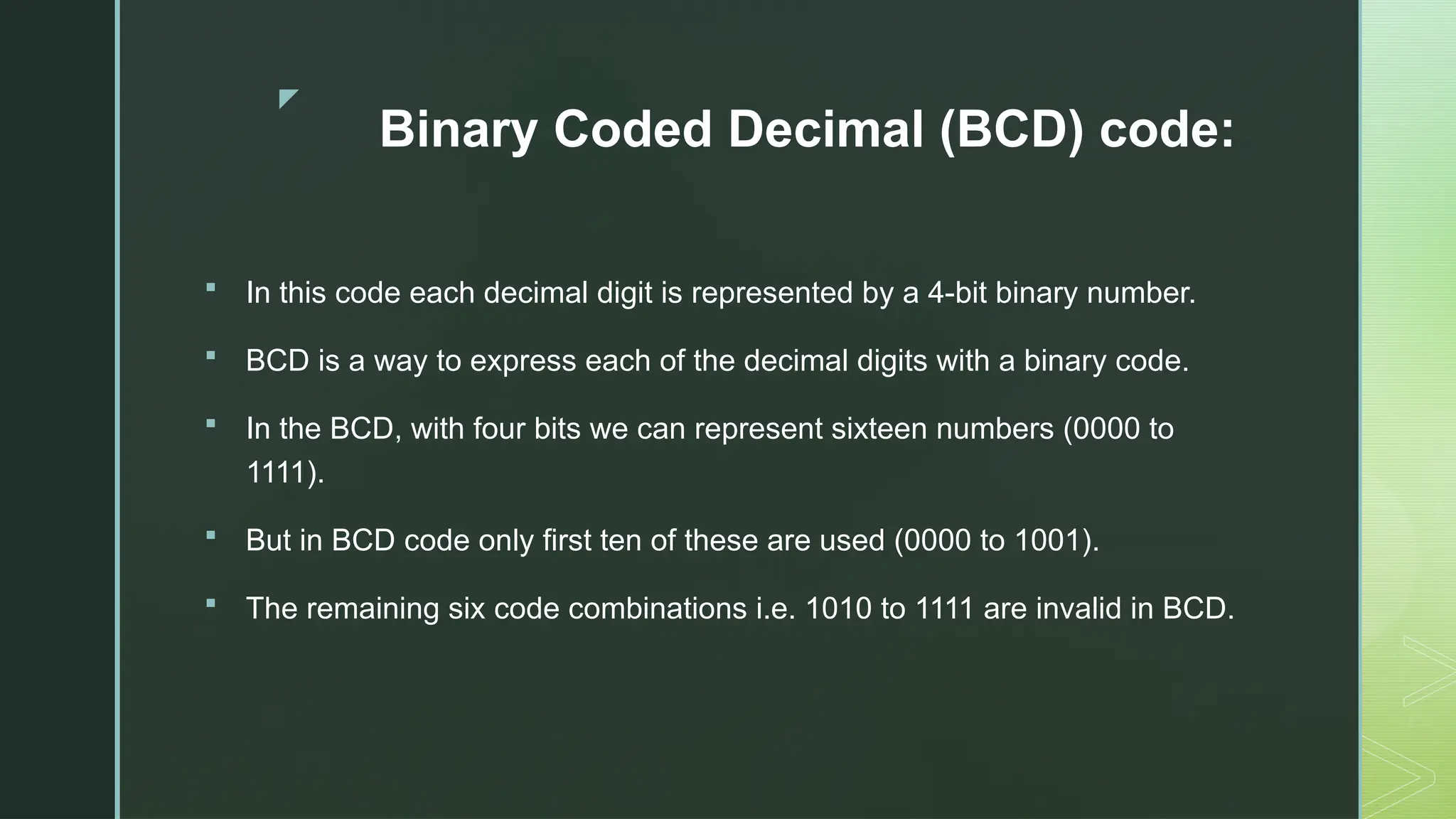

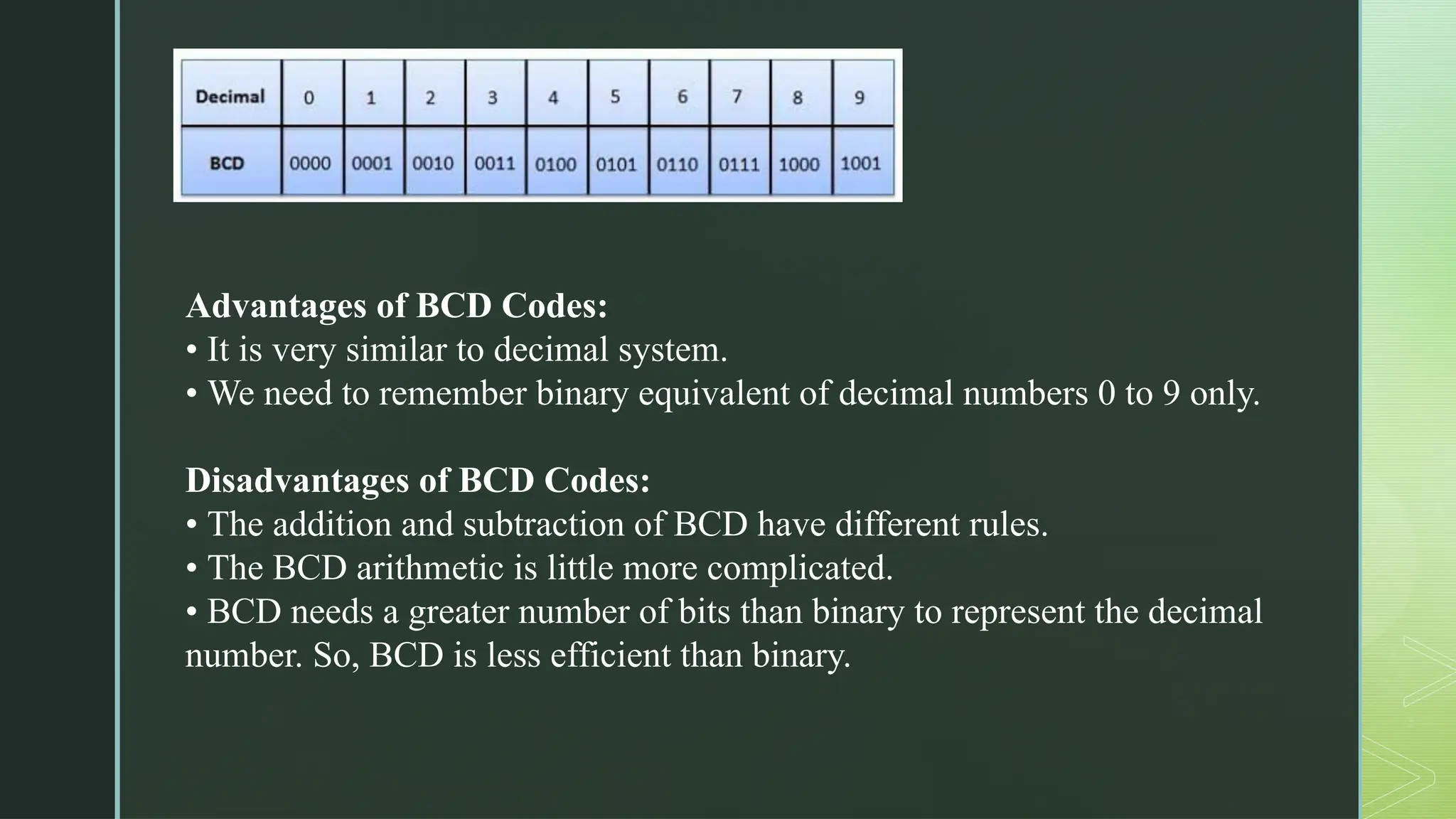

A central theme in digital logic is the representation of data. Computers handle various data types (integers, floating-point numbers, characters, and instructions) using binary encoding. Signed integers are usually stored in two's complement format, which lets the same adder circuit handle both addition and subtraction, while floating-point numbers follow the IEEE 754 standard to represent very large or very small values. Characters are encoded using standards such as ASCII and Unicode. Arithmetic operations such as addition, subtraction, multiplication, and division are implemented using specialized algorithms and circuits within the Arithmetic Logic Unit (ALU), a key component of the CPU. The ALU performs both arithmetic and logical operations on the operands supplied to it.

Data transfer among components relies on a bus system, and memory units form a hierarchy ordered by speed and size. The hierarchy starts with processor registers, followed by cache memory (L1, L2, L3), main memory (RAM), and secondary storage (hard disks, SSDs), and extends to tertiary storage such as optical discs. Each level further from the processor is slower but larger and cheaper per bit; the goal is to balance cost, speed, and storage capacity. Cache memory improves performance by keeping frequently used data and instructions close to the processor, and it places memory blocks using direct mapping, fully associative mapping, or set-associative mapping.
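Two of the ideas above lend themselves to a short sketch: two's complement encoding, and how a direct-mapped cache splits an address into tag, index, and offset fields. The function names, the 8-bit default width, and the cache geometry (16-byte blocks, 64 lines) are assumptions chosen for illustration, not values from the text.

```python
def to_twos_complement(value, bits=8):
    """Return the unsigned bit pattern that encodes a signed value."""
    low, high = -(1 << (bits - 1)), (1 << (bits - 1)) - 1
    if not low <= value <= high:
        raise ValueError("value does not fit in the given width")
    return value & ((1 << bits) - 1)

def from_twos_complement(pattern, bits=8):
    """Interpret an unsigned bit pattern as a signed two's complement value."""
    if pattern & (1 << (bits - 1)):  # sign bit set: value is negative
        return pattern - (1 << bits)
    return pattern

def split_address(address, block_size=16, num_lines=64):
    """Split an address into (tag, index, offset) for a direct-mapped cache.

    Assumes block_size and num_lines are powers of two.
    """
    offset_bits = block_size.bit_length() - 1
    index_bits = num_lines.bit_length() - 1
    offset = address & (block_size - 1)
    index = (address >> offset_bits) & (num_lines - 1)
    tag = address >> (offset_bits + index_bits)
    return tag, index, offset
```

With these definitions, `to_twos_complement(-5)` gives `251` (`0xFB`), and adding the patterns for 7 and -5 modulo 256 yields 2, which illustrates why subtraction can reuse the adder. Under the assumed geometry, `split_address(0x1234)` returns `(4, 35, 4)`: the block maps to cache line 35, and the tag 4 is what the cache would compare to detect a hit.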

Modern computers often employ multicore processors, which integrate multiple processor cores on a single chip, to e