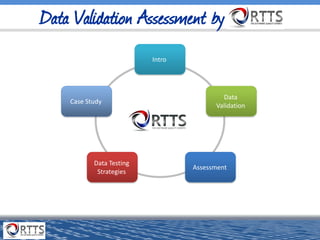

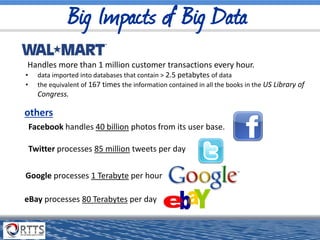

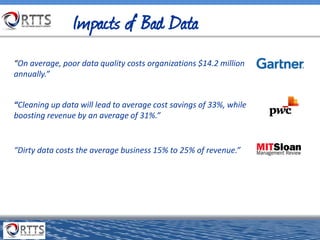

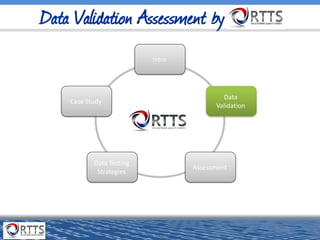

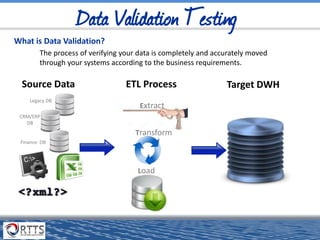

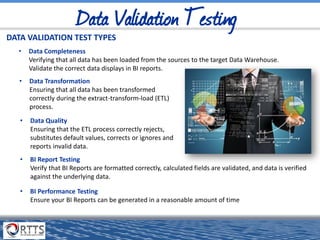

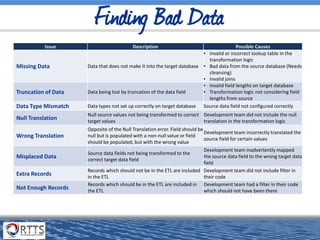

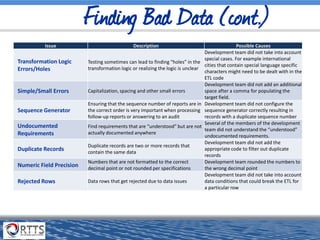

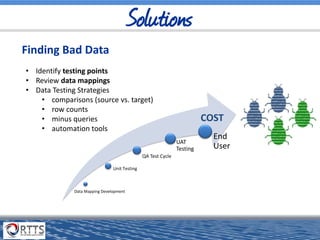

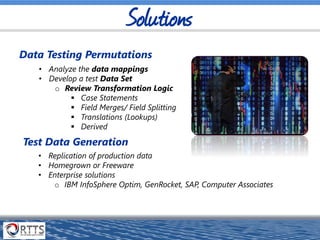

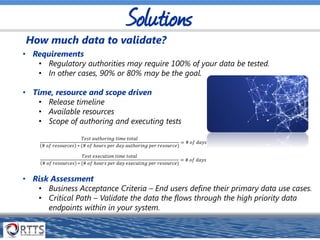

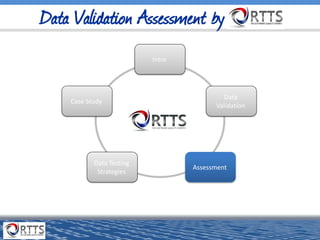

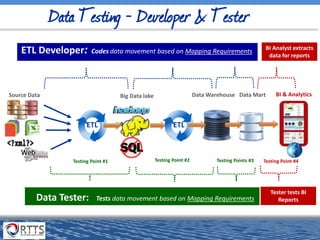

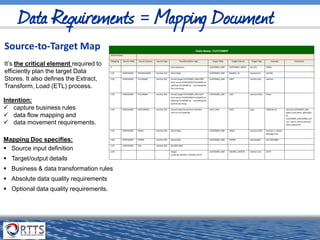

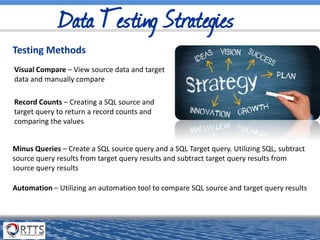

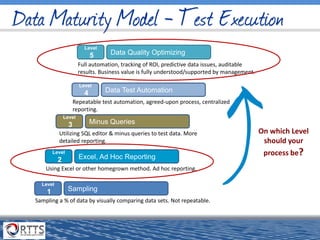

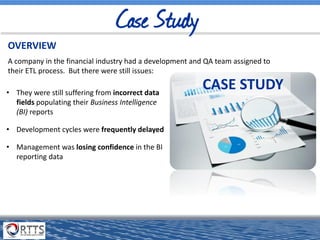

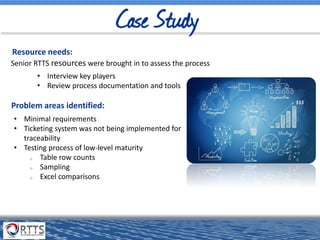

This presentation discusses strategies for building an effective data validation and testing process. It gives examples of data issues commonly found during testing, such as missing data, incorrect translations, and duplicate records. The solutions it covers include identifying the important test points, reviewing data mappings, combining automated and manual testing, and deciding how much of the data needs validation. It closes with a case study of a company that improved its process by centralizing documentation, improving communication, and automating more of its testing.
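As an illustration of what an automated check for the three issue types named above (missing data, incorrect translations, duplicate records) might look like, here is a minimal Python sketch. It is not taken from the presentation: the function name `validate_records`, the field names, and the country-code mapping are all hypothetical, and it assumes records arrive as a list of dictionaries.

```python
from collections import Counter


def validate_records(records, required_fields, key_field, translations=None):
    """Return a dict of issues found in `records` (a list of dicts).

    All names here are hypothetical; this is a sketch, not a reference tool.
    `translations` maps (source_field, target_field) to the expected
    source-value -> target-value mapping.
    """
    translations = translations or {}
    issues = {"missing": [], "duplicates": [], "bad_translations": []}

    # Missing data: a required field is absent, None, or an empty string.
    for index, record in enumerate(records):
        for field in required_fields:
            if record.get(field) in (None, ""):
                issues["missing"].append((index, field))

    # Duplicate records: the same key value appears more than once.
    key_counts = Counter(record.get(key_field) for record in records)
    issues["duplicates"] = sorted(
        key for key, count in key_counts.items() if key is not None and count > 1
    )

    # Incorrect translations: the target value disagrees with the mapping.
    for index, record in enumerate(records):
        for (source_field, target_field), mapping in translations.items():
            source_value = record.get(source_field)
            if source_value in mapping and record.get(target_field) != mapping[source_value]:
                issues["bad_translations"].append((index, target_field))

    return issues


# Hypothetical sample data containing one issue of each kind.
records = [
    {"id": 1, "country_code": "US", "country_name": "United States"},
    {"id": 2, "country_code": "DE", "country_name": "Denmark"},  # wrong translation
    {"id": 2, "country_code": "", "country_name": "France"},     # duplicate id, missing code
]
translations = {
    ("country_code", "country_name"): {"US": "United States", "DE": "Germany"},
}
report = validate_records(
    records, ["id", "country_code", "country_name"], "id", translations
)
print(report)
```

Checks like these cover only the mechanical part of validation. Deciding which fields are required and which mappings are authoritative still depends on the reviewed data mappings and test points the presentation describes.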