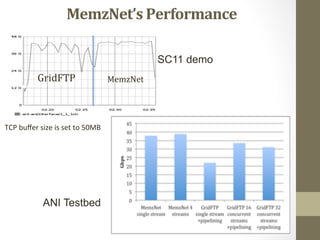

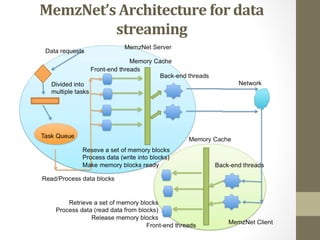

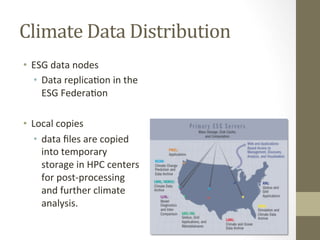

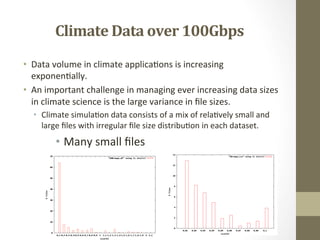

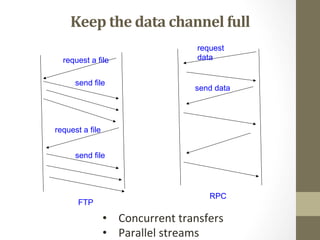

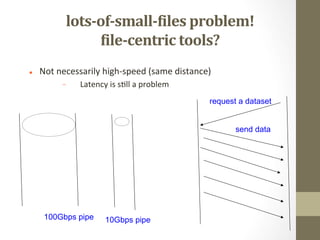

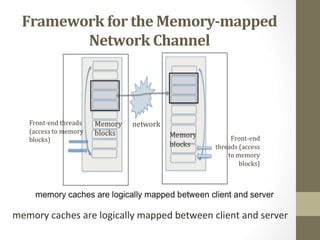

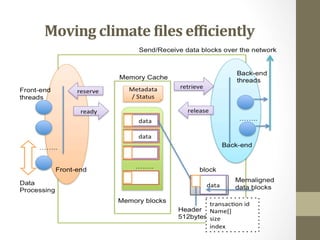

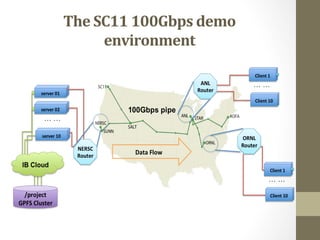

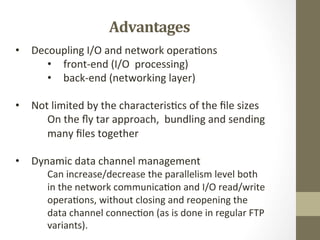

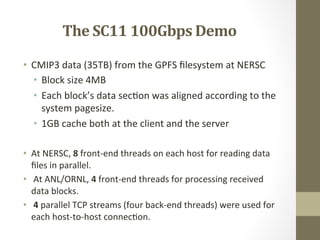

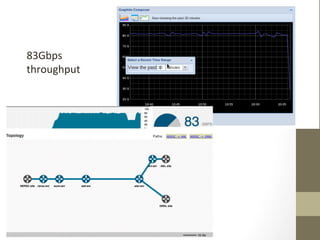

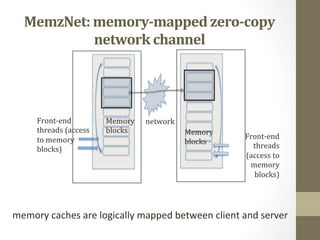

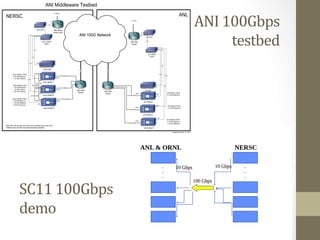

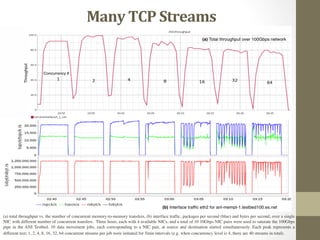

This document discusses streaming exascale data over 100Gbps networks. It summarizes a demonstration at SC11 in which climate simulation data was transferred from NERSC to ANL and ORNL at 83Gbps using MemzNet, a memory-mapped zero-copy network channel. The demonstration showed that large datasets containing many small files can be transferred efficiently over high-bandwidth networks by using parallel streams, decoupling I/O from network operations, and managing the data channel dynamically. High performance was achieved by keeping the data channel full through concurrent transfers and by leveraging high-speed networking testbeds such as ANI.
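The idea of decoupling I/O from network operations for datasets with many small files can be illustrated with a minimal sketch. This is not MemzNet's code: the names `pack_blocks` and `transfer`, the `BLOCK_SIZE` value, and the in-memory dictionary standing in for the remote end are all assumptions made for illustration. A reader thread packs file contents into fixed-size tagged blocks and places them on a bounded queue, while several sender threads drain the queue concurrently.

```python
import queue
import threading

BLOCK_SIZE = 4096  # hypothetical block size, chosen only for this sketch


def pack_blocks(files, block_size=BLOCK_SIZE):
    """Pack many small files into blocks of at most block_size payload bytes.

    Each block is a list of (name, offset, payload) chunks, so a receiver can
    place every chunk correctly regardless of the order blocks arrive in.
    """
    block, used = [], 0
    for name, data in files.items():
        if not data:
            block.append((name, 0, b""))  # keep empty files visible
        offset = 0
        while offset < len(data):
            take = min(block_size - used, len(data) - offset)
            block.append((name, offset, data[offset:offset + take]))
            used += take
            offset += take
            if used == block_size:
                yield block
                block, used = [], 0
    if block:
        yield block


def transfer(files, n_streams=4, queue_depth=8):
    """Move files through a bounded block queue drained by parallel senders."""
    # Bounded queue: the reader blocks when senders fall behind, and senders
    # wait when the reader falls behind, so neither side dictates the pace.
    blocks = queue.Queue(maxsize=queue_depth)
    received = {}  # stand-in for the remote side (an assumption)
    lock = threading.Lock()

    def reader():
        for block in pack_blocks(files):
            blocks.put(block)
        for _ in range(n_streams):
            blocks.put(None)  # one stop marker per sender

    def sender():
        while True:
            block = blocks.get()
            if block is None:
                return
            with lock:
                for name, offset, payload in block:
                    buf = received.setdefault(name, bytearray())
                    end = offset + len(payload)
                    if len(buf) < end:
                        buf.extend(bytes(end - len(buf)))
                    buf[offset:end] = payload

    threads = [threading.Thread(target=reader)]
    threads += [threading.Thread(target=sender) for _ in range(n_streams)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return {name: bytes(buf) for name, buf in received.items()}
```

Because every chunk carries its file name and offset, the senders need no knowledge of file boundaries, and a block may hold pieces of several small files or a slice of one large file. In a real deployment the senders would write to network sockets instead of a shared dictionary.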

![ANI testbed 100Gbps (10x10 NICs, three hosts): Interrupts/CPU vs. the number of concurrent transfers [1, 2, 4, 8, 16, 32, 64 concurrent jobs, 5-min intervals]; TCP buffer size is 50M. Effects of many streams](https://image.slidesharecdn.com/crd-allhands-balman-120716174519-phpapp01/85/Streaming-exa-scale-data-over-100Gbps-networks-18-320.jpg)