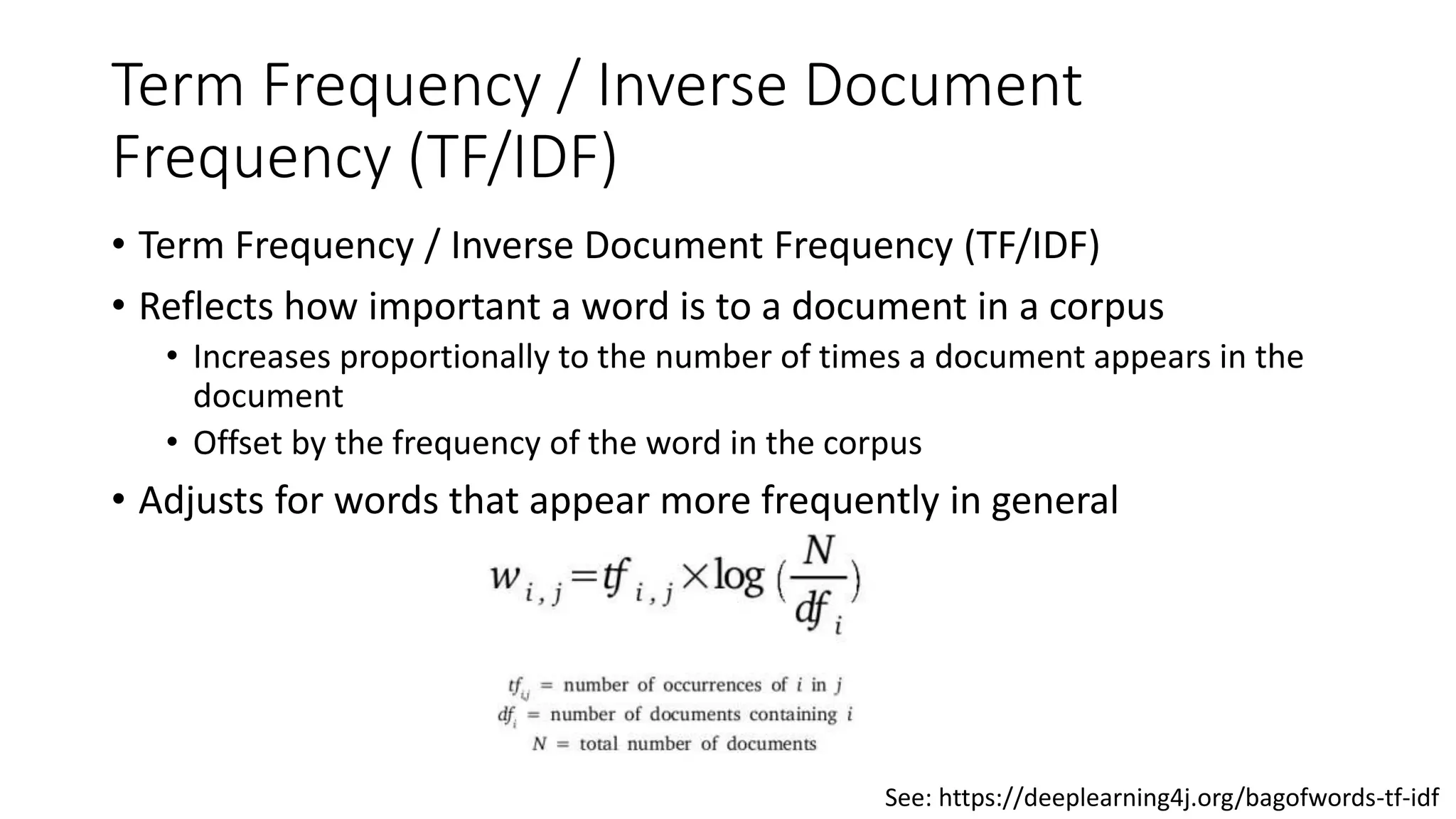

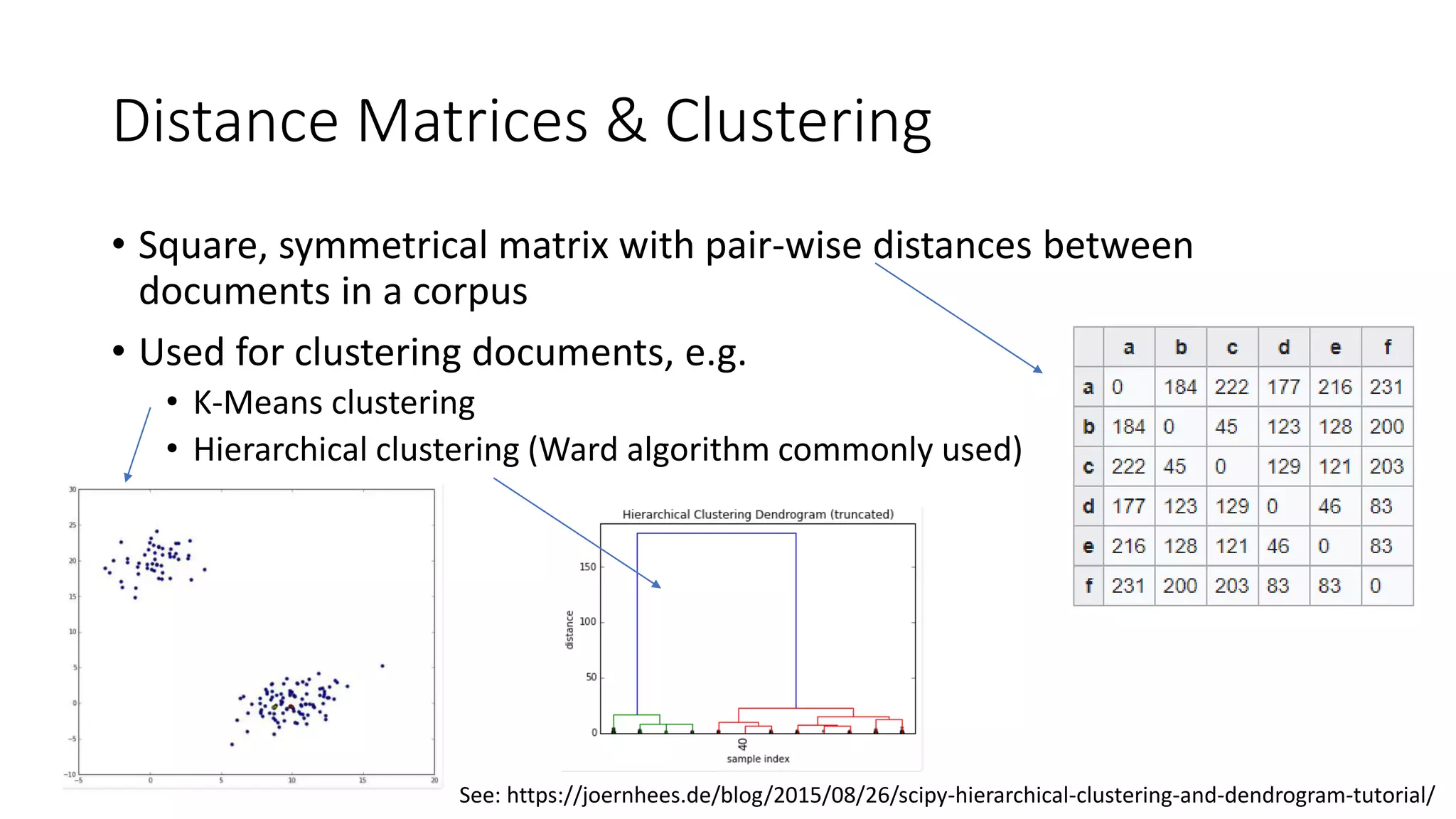

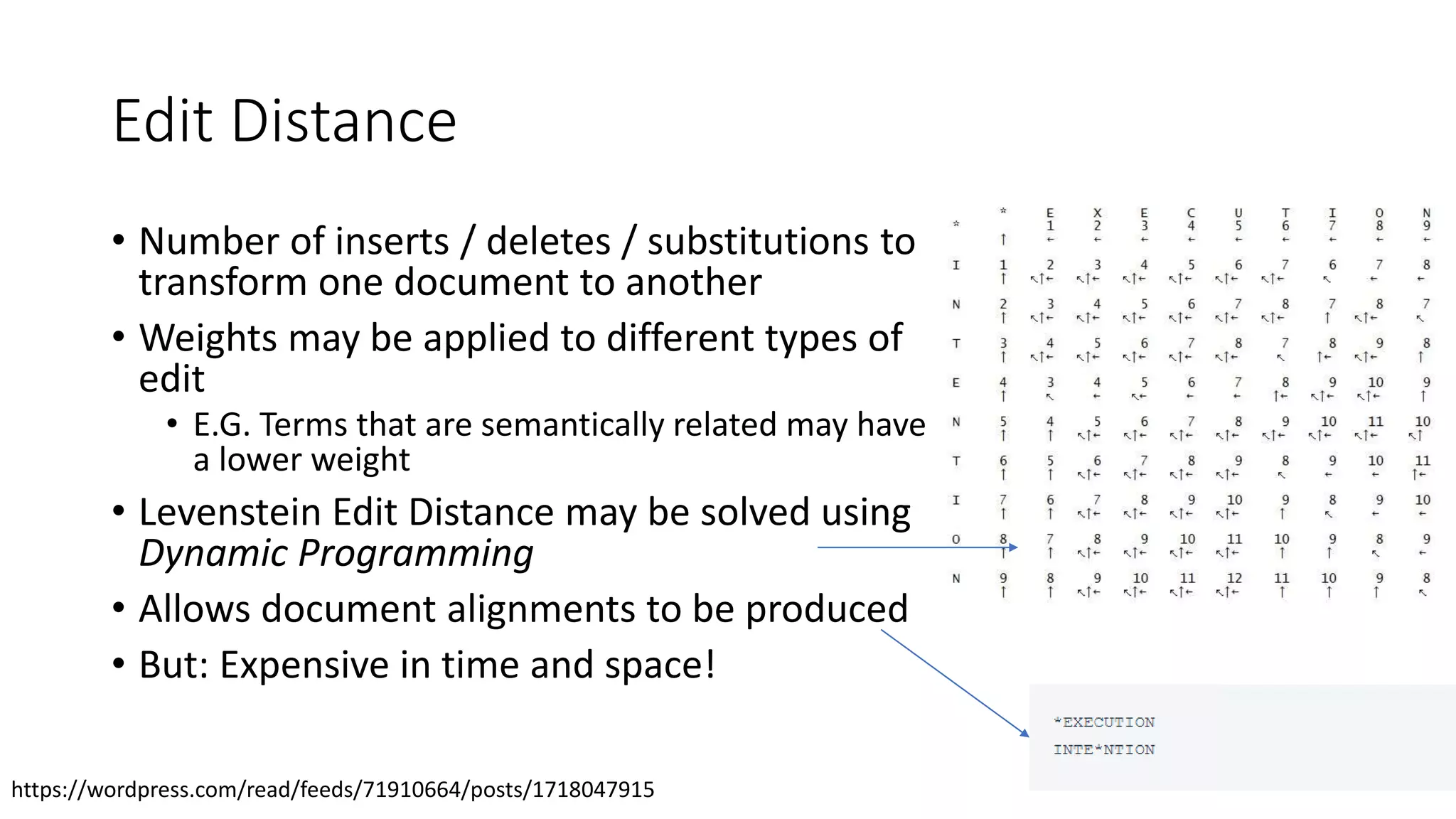

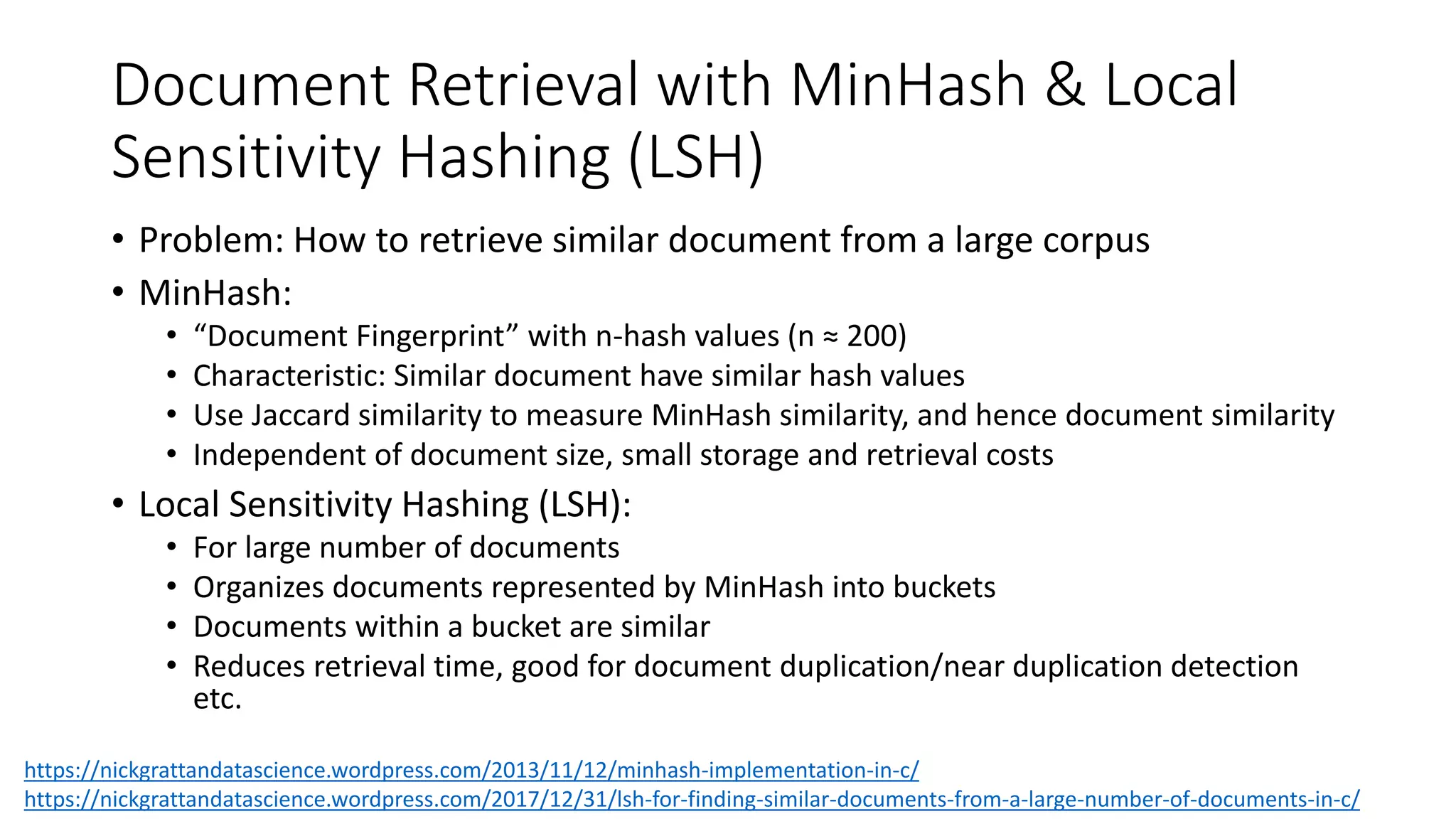

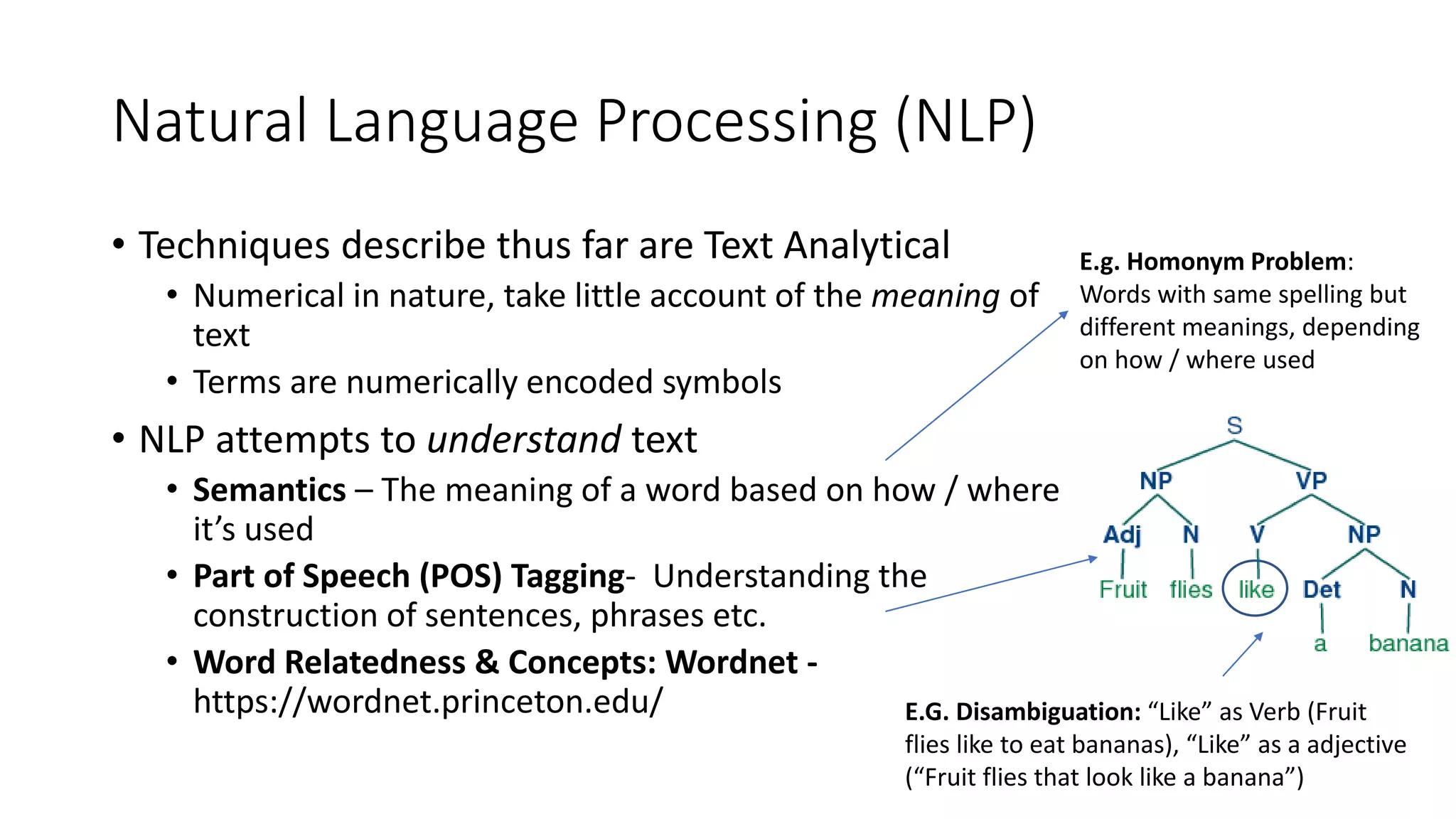

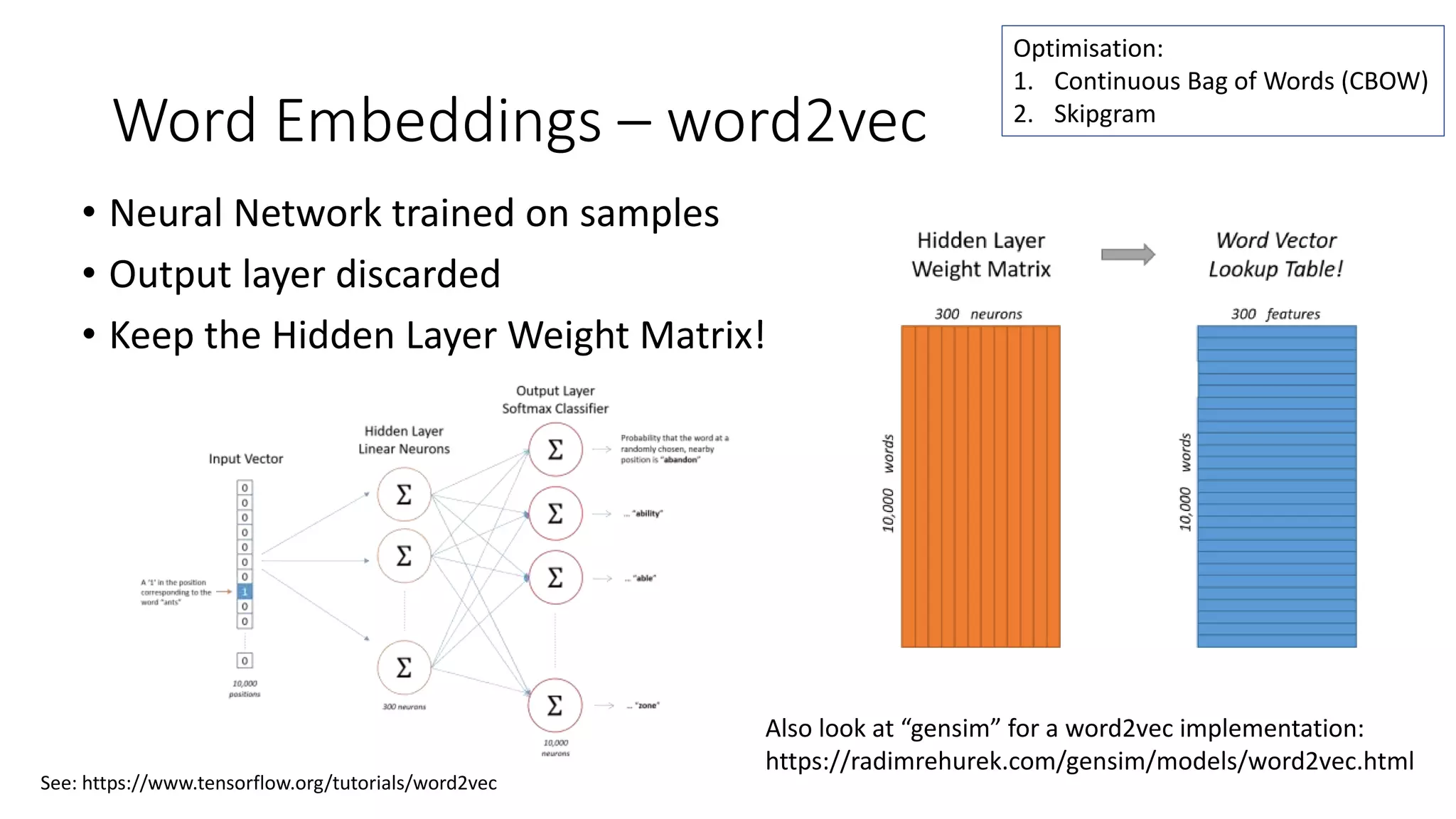

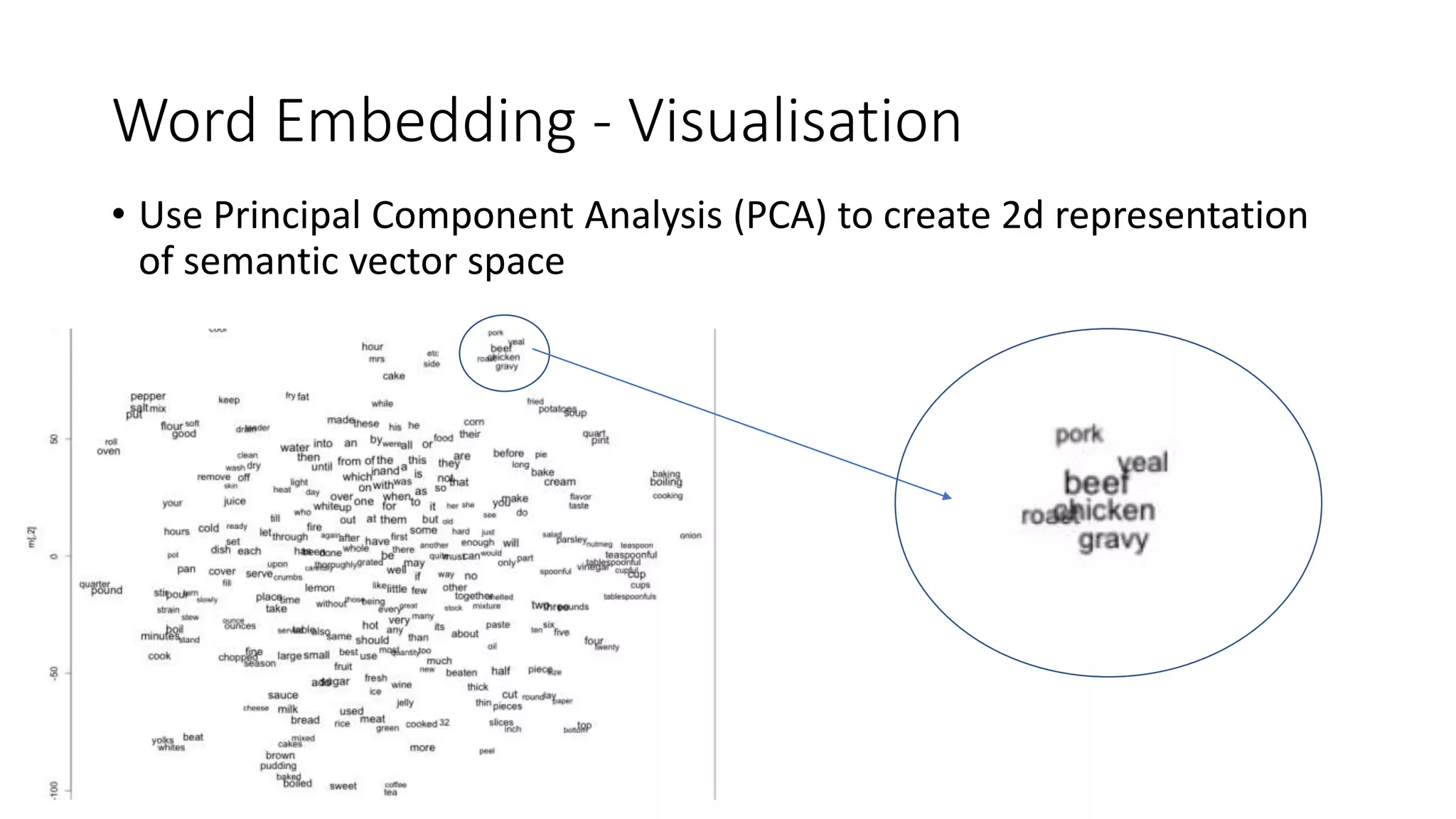

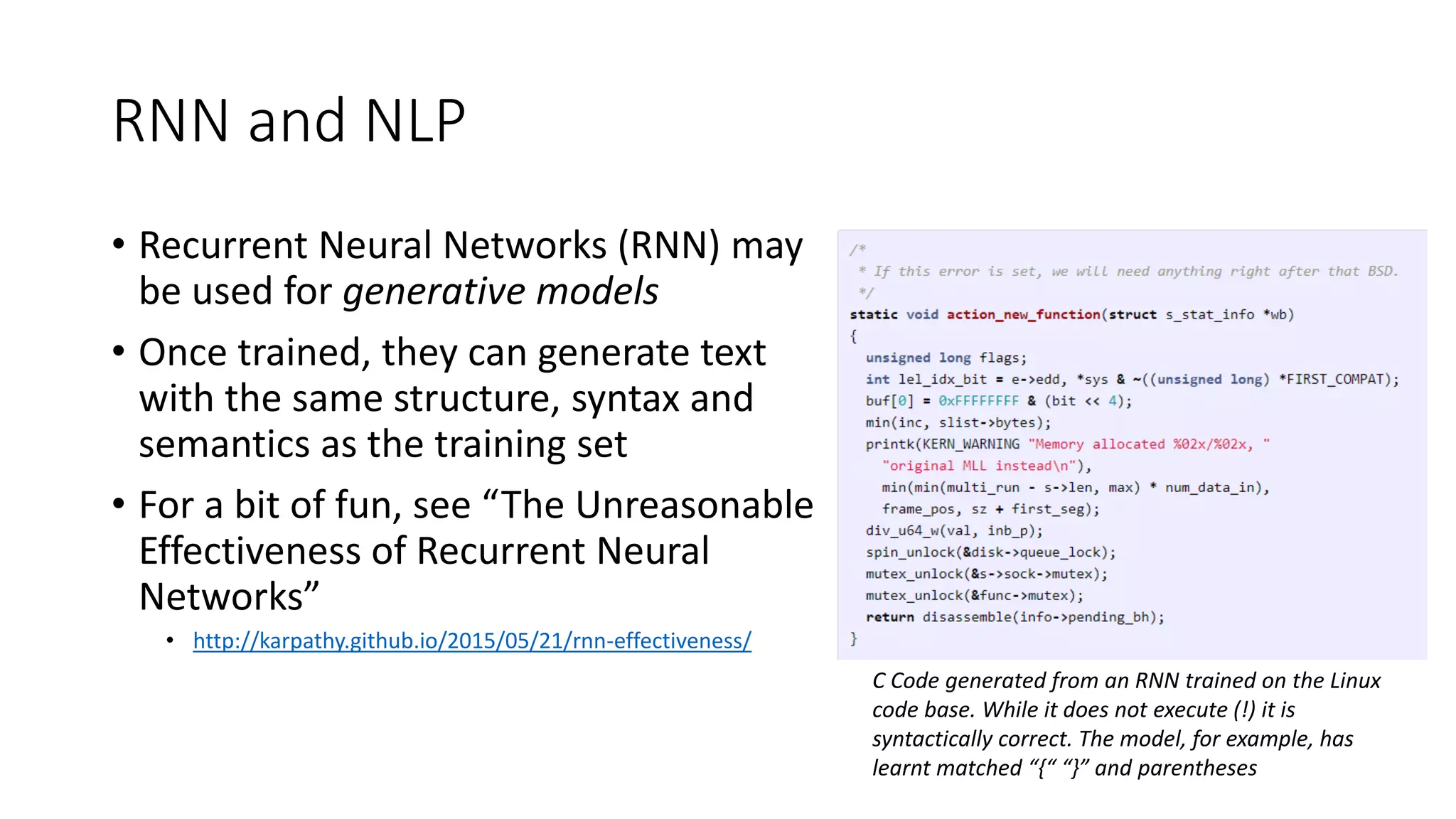

This document provides an introduction to text analytics and natural language processing techniques. It discusses bag-of-words models, term frequency-inverse document frequency (TF-IDF), vector space models, distance measures, document clustering, word embeddings using word2vec, and recurrent neural networks. The agenda covers traditional "frequentist" text analysis methods as well as deep learning techniques for semantic analysis. Hands-on examples in Python are provided to illustrate document clustering, creating word embeddings, and generating text with recurrent neural networks.

![Natural Language Processing and Text Analytics](https://image.slidesharecdn.com/corkmeetup3-v3-180315133037/75/Cork-AI-Meetup-Number-3-4-2048.jpg)

Natural Language Processing and Text Analytics

- Natural Language Processing (NLP)
  - Area of AI concerned with interactions between computers and human natural language, to process or “understand” natural language
  - Common tasks: speech recognition, natural language understanding & generation, automatic summarization, part-of-speech tagging, disambiguation, named entity recognition …
  - To fully understand and represent the meaning of language is a difficult goal (AI-complete) [1]
- Text Analytics (Text Mining)
  - The process or practice of examining large collections of written resources in order to generate new information (Oxford English Dictionary)
  - Transforms text to data for information discovery, establishing relationships, often using NLP
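The idea of transforming text to data can be illustrated with a minimal bag-of-words sketch. It is not taken from the slides: the function names `tokenize` and `bag_of_words` and the two sample sentences are assumptions made for illustration, and the tokenizer is deliberately naive (lowercasing plus a simple regular expression).

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into simple word tokens (naive, illustrative)."""
    return re.findall(r"[a-z']+", text.lower())


def bag_of_words(docs):
    """Return (vocabulary, vectors) with one term-count vector per document."""
    counts = [Counter(tokenize(doc)) for doc in docs]
    # The vocabulary is the sorted set of all terms seen in any document.
    vocabulary = sorted(set().union(*counts))
    # Each document becomes a vector of term counts aligned with the vocabulary.
    vectors = [[count[term] for term in vocabulary] for count in counts]
    return vocabulary, vectors


# Hypothetical sample documents, made up for this sketch.
docs = ["The cat sat on the mat.", "The dog sat on the log."]
vocabulary, vectors = bag_of_words(docs)
print(vocabulary)
print(vectors)
```

Each document ends up as a fixed-length numeric vector, which is the form that distance measures and clustering algorithms operate on. Word order is discarded, which is the main limitation of this representation.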

![Resources and References](https://image.slidesharecdn.com/corkmeetup3-v3-180315133037/75/Cork-AI-Meetup-Number-3-18-2048.jpg)

Resources and References

[1] “Natural Language Processing with Deep Learning”, Christopher Manning et al., Stanford University. https://www.youtube.com/watch?v=OQQ-W_63UgQ

- Lecture series including an excellent description of backpropagation, word2vec and GloVe

[2] “Hands-On Machine Learning with Scikit-Learn & TensorFlow”, Aurélien Géron (O'Reilly Media, 2017)

- Excellent introduction, Jupyter Notebooks available here: https://github.com/ageron

[3] “Speech and Language Processing”, Daniel Jurafsky & James H. Martin (2nd Edition, Pearson Education, 2009)

- In-depth introduction to NLP

[4] “Introduction to Information Retrieval”, Christopher Manning et al. (Cambridge University Press, 2008)

- Probabilistic models for text retrieval, TF-IDF, Vector Space, Support Vector Machines…