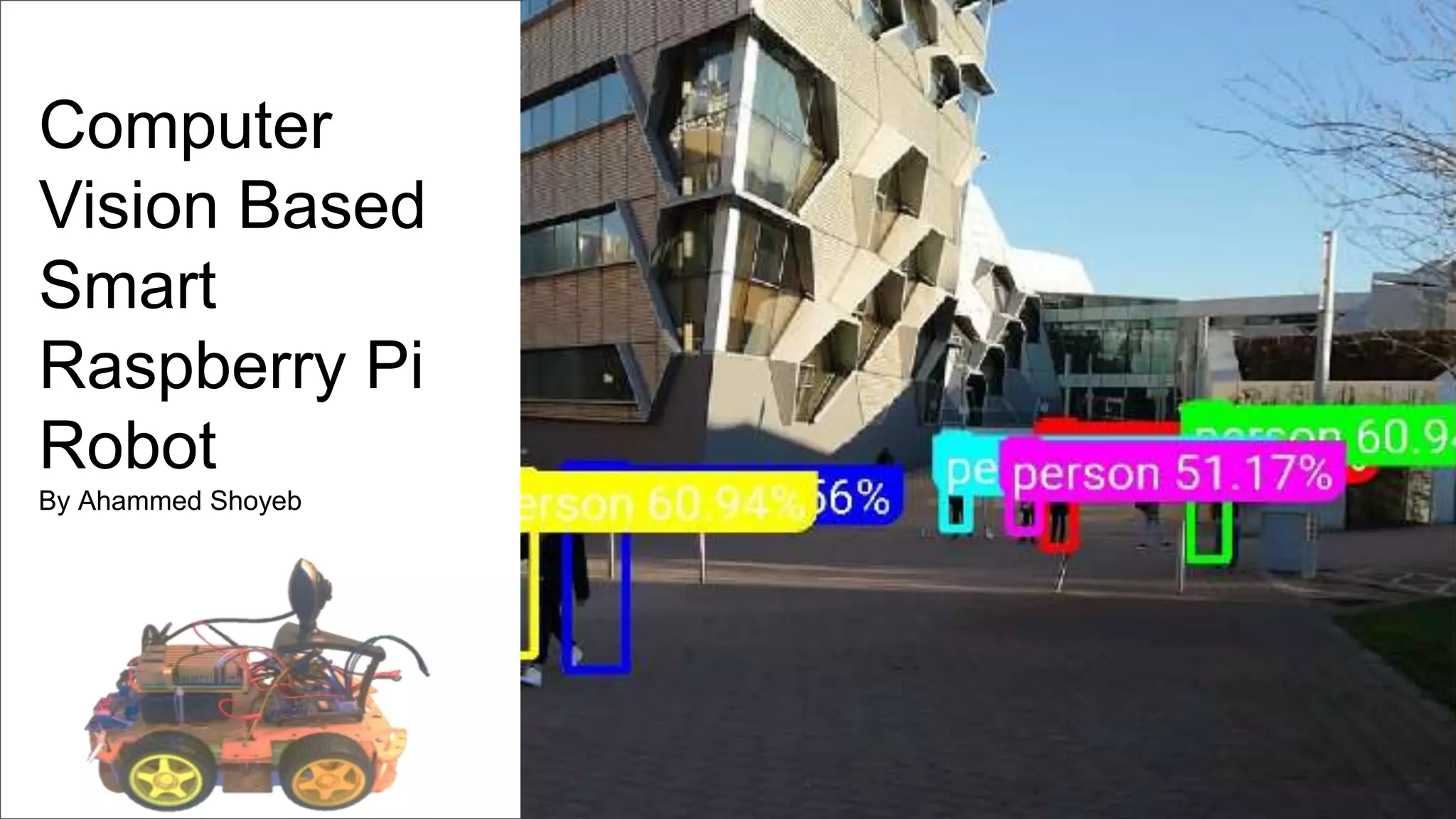

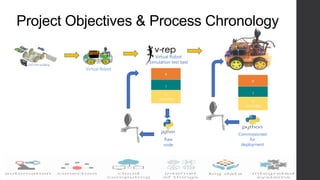

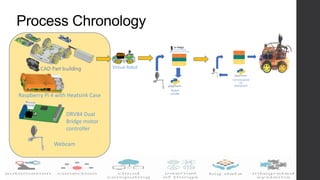

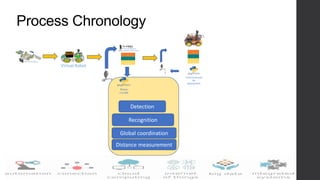

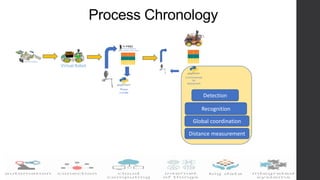

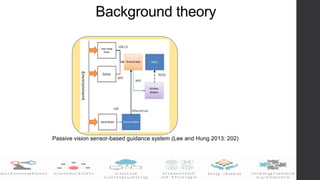

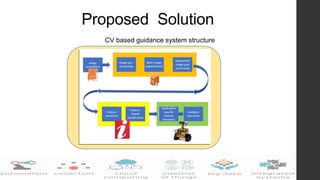

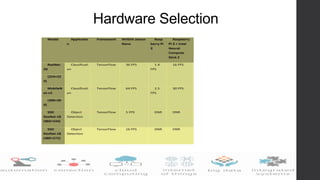

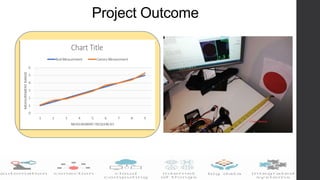

This presentation describes the development of a computer-vision-based autonomous robot built on a Raspberry Pi 4, emphasizing efficiency and cost-effectiveness. The robot uses a convolutional neural network to classify objects and guide navigation, identifying targets and obstacles while maintaining specified distances. The project met its objectives: a functional robotic guidance system with documented performance and plans for future enhancements.

![List of References](https://image.slidesharecdn.com/wmgpresentation-200330195821/85/Computer-Vision-CV-based-Raspberry-Pi-robot-vehicle-30-320.jpg)