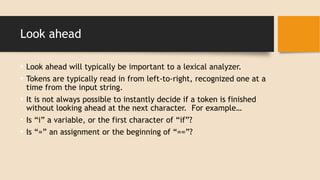

The document discusses the role and functioning of a lexical analyzer, which is the first phase of a compiler responsible for converting a stream of characters into a sequence of tokens for the parser. It details how tokens are identified, the use of lexemes and patterns, and the importance of lookahead in determining token boundaries. The separation of lexical analysis from parsing is highlighted to improve design simplicity and compiler efficiency.
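The role of lookahead can be illustrated with a small sketch. It assumes a scanner that must decide whether a `=` character is a complete token or the start of `==`, which it can only do by peeking at the next character. The function name `scan_operator` and the token names `ASSIGN` and `EQ` are hypothetical and chosen only for this illustration.

```python
def scan_operator(text, pos):
    """Scan an operator starting at text[pos].

    Returns (token_name, lexeme, next_pos). Token names are illustrative.
    """
    ch = text[pos]
    # One character of lookahead: peek without consuming it.
    nxt = text[pos + 1] if pos + 1 < len(text) else ""

    if ch == "=" and nxt == "=":
        # The lookahead character belongs to this token, so consume both.
        return ("EQ", "==", pos + 2)
    if ch == "=":
        # The lookahead character starts the next token; leave it in place.
        return ("ASSIGN", "=", pos + 1)
    raise ValueError(f"unexpected character {ch!r} at position {pos}")
```

In the second branch the peeked character is not consumed, so the scanner resumes from it when it looks for the next token.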

![Example of Token](https://image.slidesharecdn.com/3-241128194632-211bbc92/85/Compiler-Construction-lexical-analyzer-pptx-15-320.jpg)

As an example, consider the following line of code, which could be part of a C program:

`a[index] = 4 + 2`

![Example](https://image.slidesharecdn.com/3-241128194632-211bbc92/85/Compiler-Construction-lexical-analyzer-pptx-16-320.jpg)

| Lexeme | Token |
| --- | --- |
| `a` | ID |
| `[` | Left bracket |
| `index` | ID |
| `]` | Right bracket |
| `=` | Assign |
| `4` | Num |
| `+` | Plus sign |
| `2` | Num |
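The lexeme and token pairs listed above can be reproduced with a minimal pattern-based scanner. This is a sketch, not the method used in the slides: the regular expressions, the `TOKEN_SPEC` table, and the `tokenize` function are assumptions made for illustration, and only the token names mirror the listing.

```python
import re

# (token name, compiled pattern) pairs. The patterns are assumptions;
# a name of None marks text that is matched but not reported (whitespace).
TOKEN_SPEC = [
    ("ID", re.compile(r"[A-Za-z_][A-Za-z0-9_]*")),
    ("Num", re.compile(r"[0-9]+")),
    ("Left bracket", re.compile(r"\[")),
    ("Right bracket", re.compile(r"\]")),
    ("Assign", re.compile(r"=")),
    ("Plus sign", re.compile(r"\+")),
    (None, re.compile(r"\s+")),
]


def tokenize(source):
    """Return a list of (lexeme, token name) pairs for the source string."""
    tokens = []
    pos = 0
    while pos < len(source):
        for name, pattern in TOKEN_SPEC:
            match = pattern.match(source, pos)
            if match:
                if name is not None:
                    tokens.append((match.group(), name))
                pos = match.end()
                break
        else:
            # No pattern matched at this position.
            raise SyntaxError(
                f"unexpected character {source[pos]!r} at position {pos}"
            )
    return tokens


for lexeme, token in tokenize("a[index] = 4 + 2"):
    print(lexeme, token)
```

Each pattern describes the set of lexemes that belong to one token, so `a` and `index` are different lexemes that both match the `ID` pattern. Whitespace is matched and discarded, which is why it never reaches the parser.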