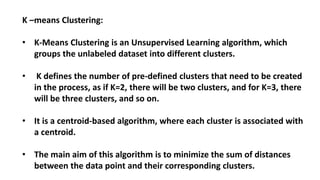

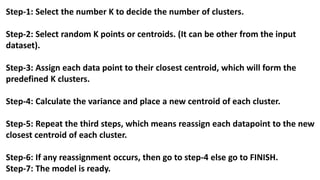

The document covers unsupervised learning, focusing on two clustering techniques: k-means and hierarchical clustering. K-means partitions unlabeled data into a predefined number of clusters, k, by assigning each point to its nearest centroid. Hierarchical clustering instead builds a tree-like structure (a dendrogram) that represents how data points relate and merge. The document also covers the elbow method for choosing the number of clusters, and the linkage methods used to measure the distance between clusters in hierarchical clustering.
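
To make these ideas concrete, here is a minimal sketch in plain Python (standard library only). It is not taken from the document: the function names `kmeans` and `linkage_distance`, the toy `points`, and the choice of Euclidean distance with random initialization are all illustrative assumptions. It shows the k-means assign/update loop, the within-cluster sum of squares that the elbow method plots against k, and three common linkage rules.

```python
import math
import random


def kmeans(points, k, iters=100, seed=0):
    """Hypothetical helper: basic k-means, returns (centroids, labels, inertia)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # assumption: start from k random data points
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: each point joins its nearest centroid.
        labels = [min(range(k), key=lambda j: math.dist(p, centroids[j]))
                  for p in points]
        # Update step: each centroid moves to the mean of its members.
        new_centroids = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                new_centroids.append(
                    tuple(sum(c) / len(members) for c in zip(*members)))
            else:
                new_centroids.append(centroids[j])  # empty cluster: keep old centroid
        if new_centroids == centroids:
            break  # converged: centroids stopped moving
        centroids = new_centroids
    # Inertia: sum of squared distances from each point to its assigned centroid.
    inertia = sum(math.dist(p, centroids[lab]) ** 2
                  for p, lab in zip(points, labels))
    return centroids, labels, inertia


def linkage_distance(cluster_a, cluster_b, method="single"):
    """Hypothetical helper: distance between two clusters under a linkage rule."""
    dists = [math.dist(p, q) for p in cluster_a for q in cluster_b]
    if method == "single":    # closest pair of points
        return min(dists)
    if method == "complete":  # farthest pair of points
        return max(dists)
    if method == "average":   # mean over all cross-cluster pairs
        return sum(dists) / len(dists)
    raise ValueError(f"unknown linkage method: {method}")


# Toy data (made up for illustration): two well-separated groups.
points = [(0, 0), (0, 1), (1, 0), (1, 1),
          (10, 10), (10, 11), (11, 10), (11, 11)]

# Elbow method: record inertia for several k, keeping the best of a few
# random restarts, then look for the k where the curve stops dropping sharply.
inertias = {k: min(kmeans(points, k, seed=s)[2] for s in range(5))
            for k in range(1, 5)}
for k, value in inertias.items():
    print(k, round(value, 2))

# Linkage distances between the two groups.
group_a, group_b = points[:4], points[4:]
for method in ("single", "complete", "average"):
    print(method, round(linkage_distance(group_a, group_b, method), 3))
```

Because the starting centroids are random, a single run can settle in a poor local optimum, which is why the sketch keeps the lowest inertia over several seeds. In practice a library implementation such as scikit-learn's `KMeans` or SciPy's `scipy.cluster.hierarchy.linkage` would normally be used instead.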