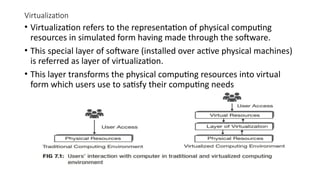

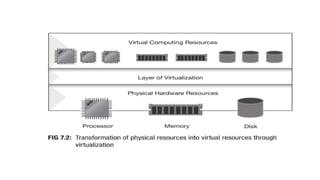

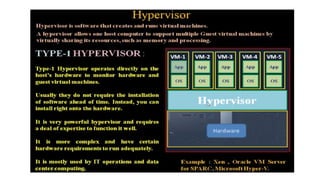

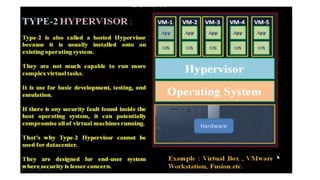

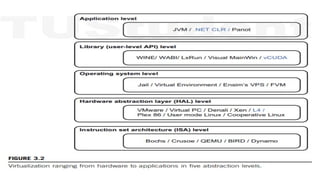

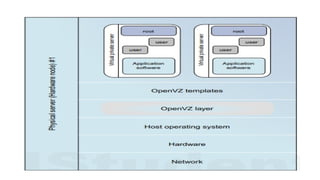

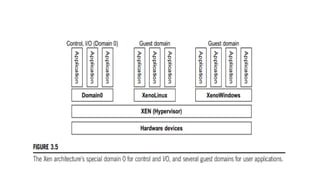

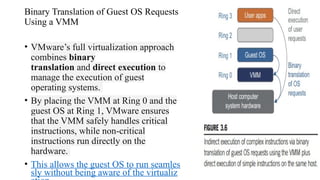

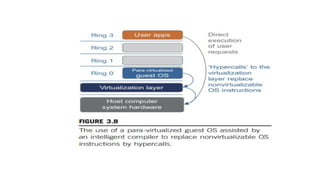

The document discusses virtualization, a technology that uses software layers to simulate physical computing resources so that multiple virtual machines (VMs) can run on a single hardware platform. It outlines the levels and types of virtualization, including hardware-level, operating system-level, and application-level virtualization, along with the benefits and drawbacks of each. It also covers the architecture and functionality of hypervisors, also known as virtual machine monitors (VMMs), and the interactions between guest operating systems and the underlying hardware.