Cloud Native Data Platform at Fitbit

- Fitbit collects 100 TB of user data daily from 30 million users across fitness trackers, smartwatches, and apps. The data serves internal teams such as data science, research, and customer support, as well as enterprise wellness programs.

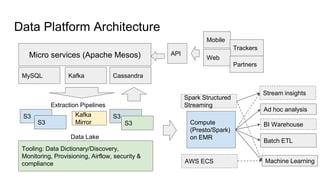

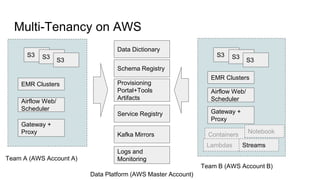

- The data platform includes MySQL, Kafka, Cassandra, S3, EMR, and Presto/Spark. It supports both batch and real-time workflows and runs across multiple AWS accounts for compliance.

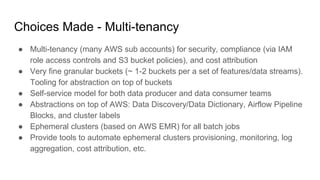

- Key challenges included diverse user needs, multiple compliance requirements, and a lean team. A multi-tenant architecture in AWS, with fine-grained S3 buckets and IAM roles, helps address them.
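
To make the "fine-grained S3 buckets and IAM roles" idea concrete, here is a minimal sketch of how a per-tenant IAM policy document might be generated. It is not Fitbit's actual setup: the tenant names, the bucket names, and the helper `tenant_bucket_policy` are all hypothetical, and the only assumption is one bucket per tenant. The script only builds the JSON documents; attaching them to IAM roles is left out.

```python
import json


def tenant_bucket_policy(bucket: str) -> dict:
    """Build an IAM policy document that allows access to a single bucket only."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing applies to the bucket itself.
                "Sid": "ListTenantBucket",
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [f"arn:aws:s3:::{bucket}"],
            },
            {
                # Reading and writing apply to the objects inside the bucket.
                "Sid": "ReadWriteTenantObjects",
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/*"],
            },
        ],
    }


# Hypothetical tenants and bucket names, for illustration only.
TENANT_BUCKETS = {
    "data-science": "example-dp-data-science",
    "research": "example-dp-research",
    "customer-support": "example-dp-customer-support",
}

# One policy document per tenant; each would back that tenant's IAM role.
policies = {
    tenant: tenant_bucket_policy(bucket)
    for tenant, bucket in TENANT_BUCKETS.items()
}

if __name__ == "__main__":
    print(json.dumps(policies["research"], indent=2))
```

Because every policy names exactly one bucket, a role for one tenant carries no permissions on another tenant's data, which is the kind of isolation that compliance boundaries typically call for. A lean team can also add a tenant by adding one entry to the mapping rather than hand-writing a new policy.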