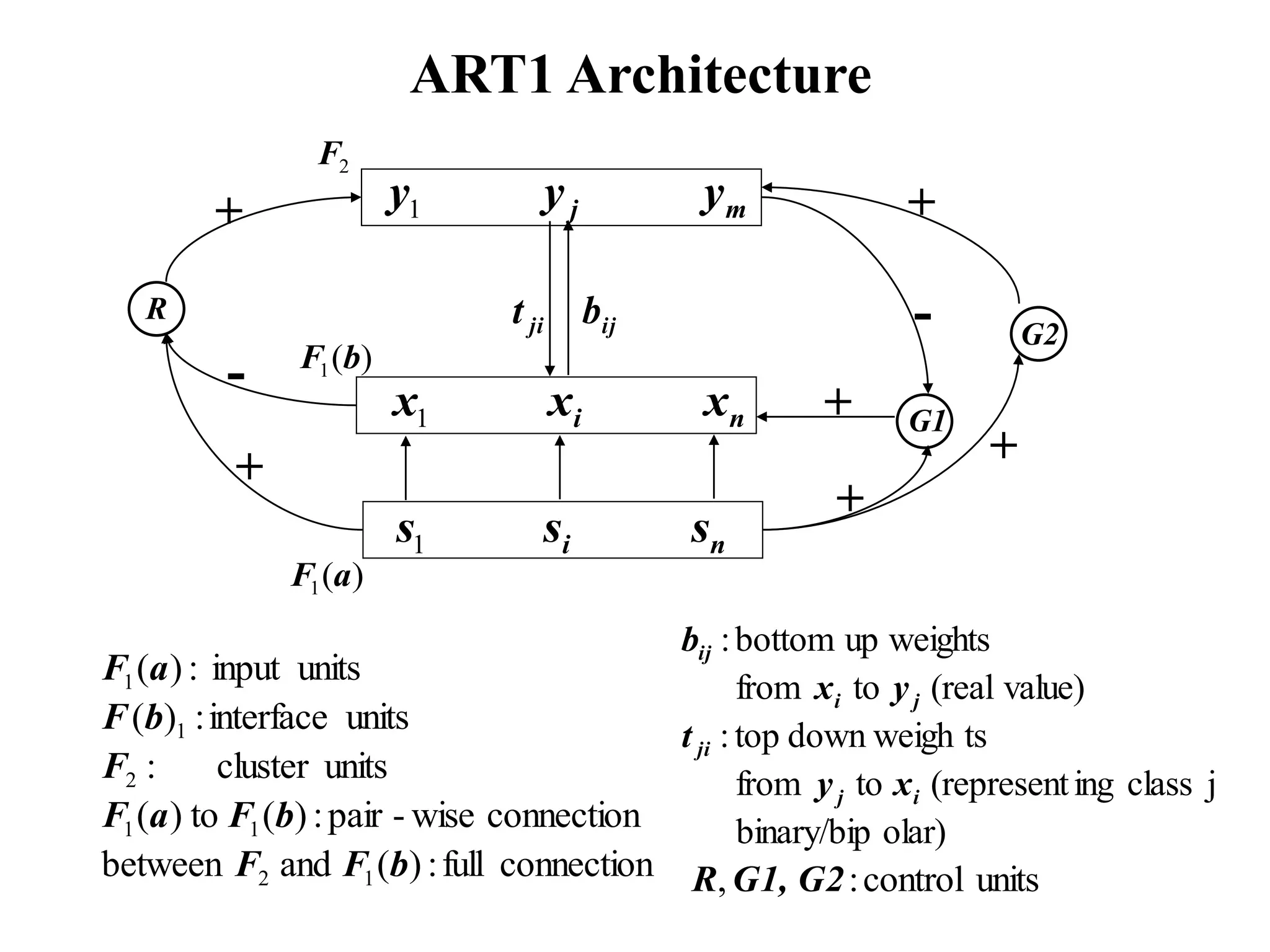

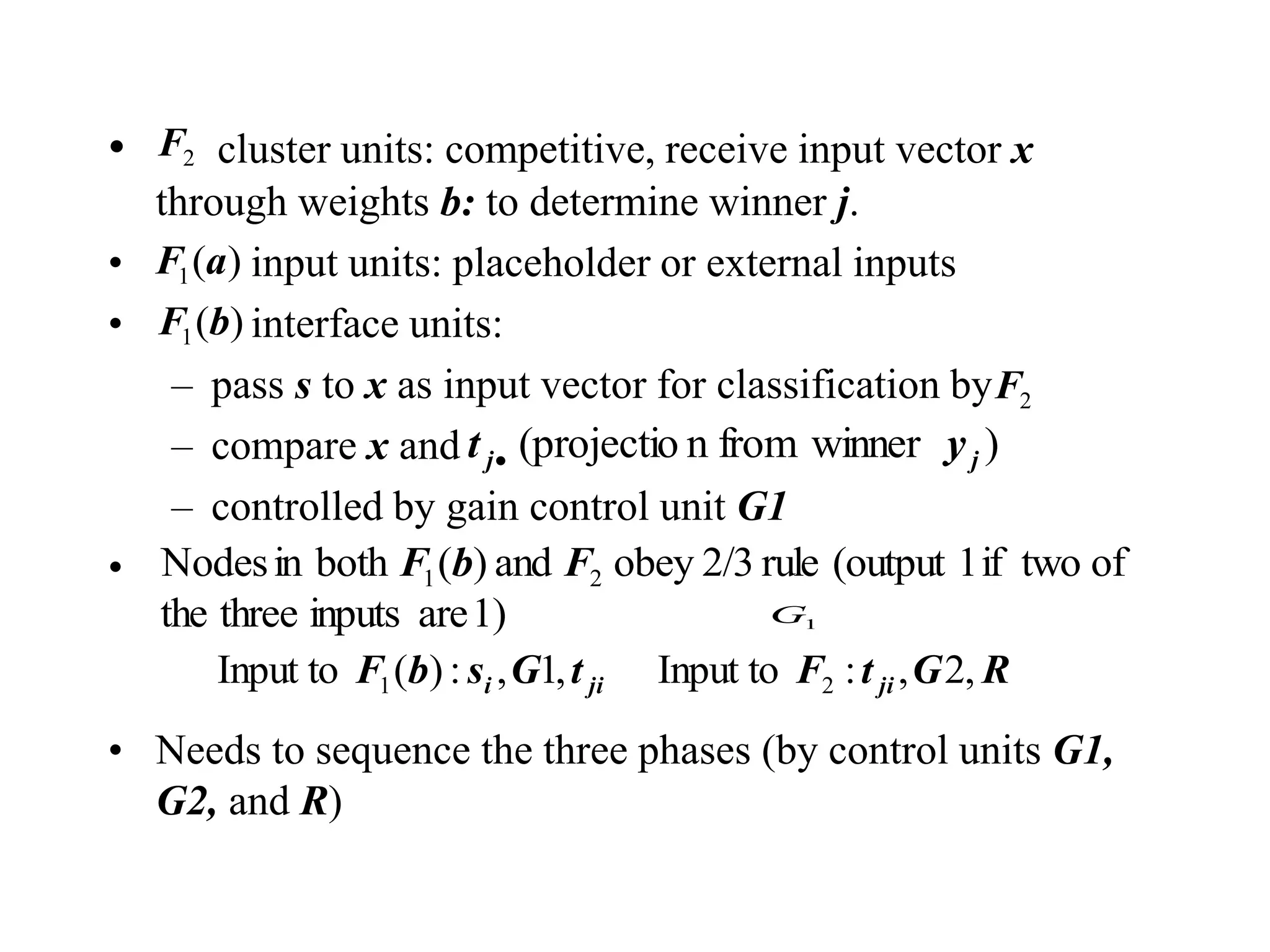

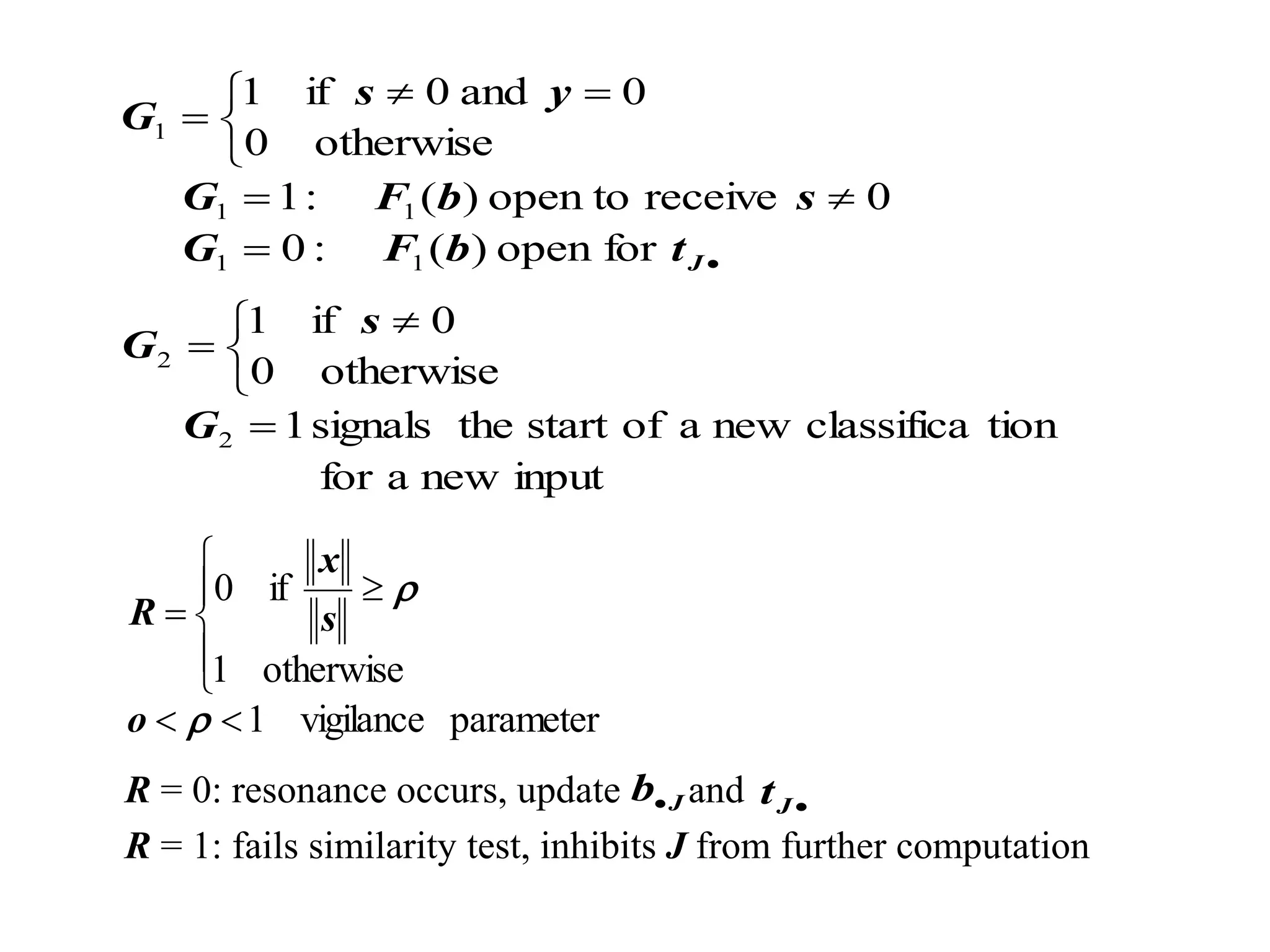

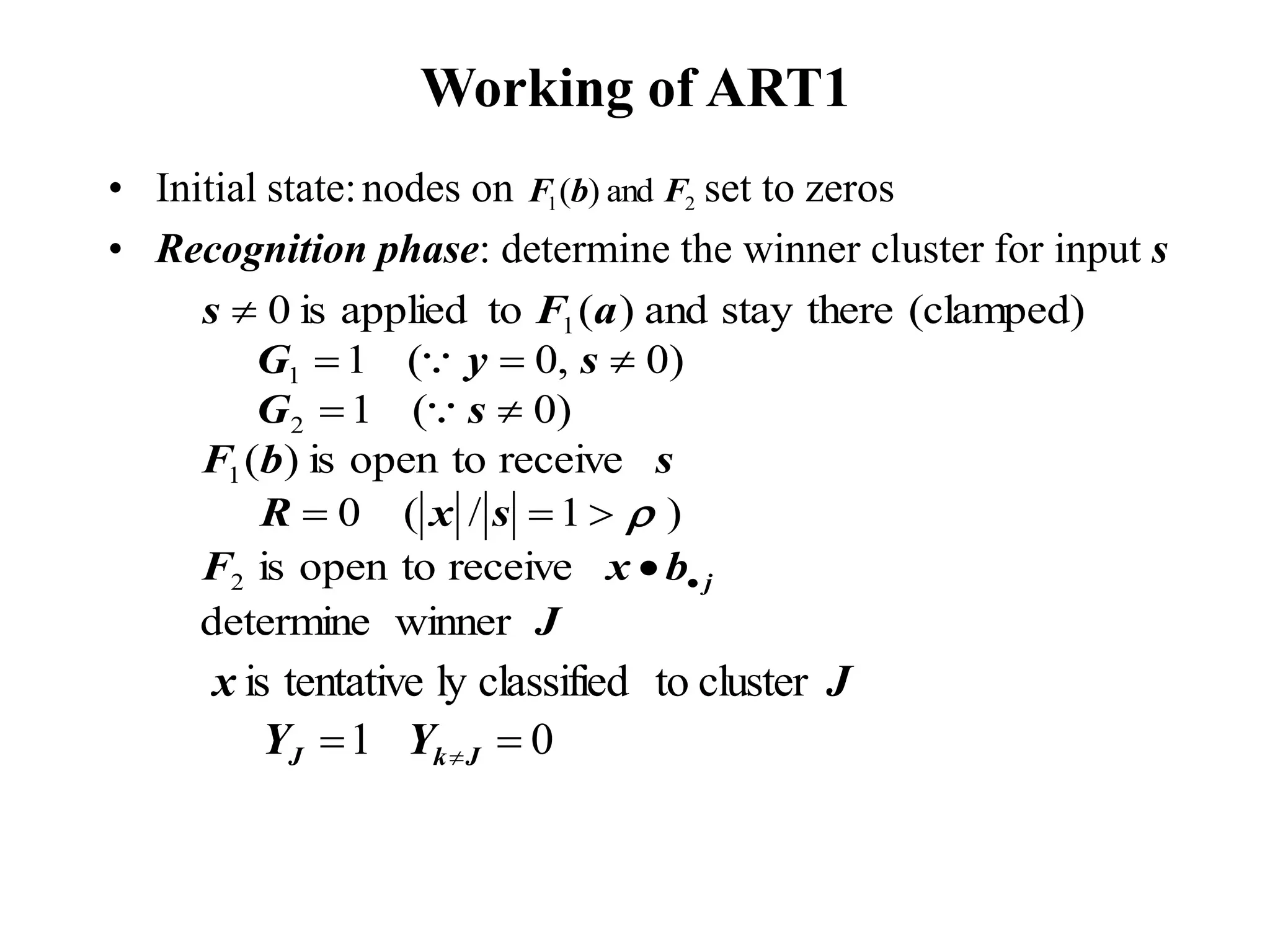

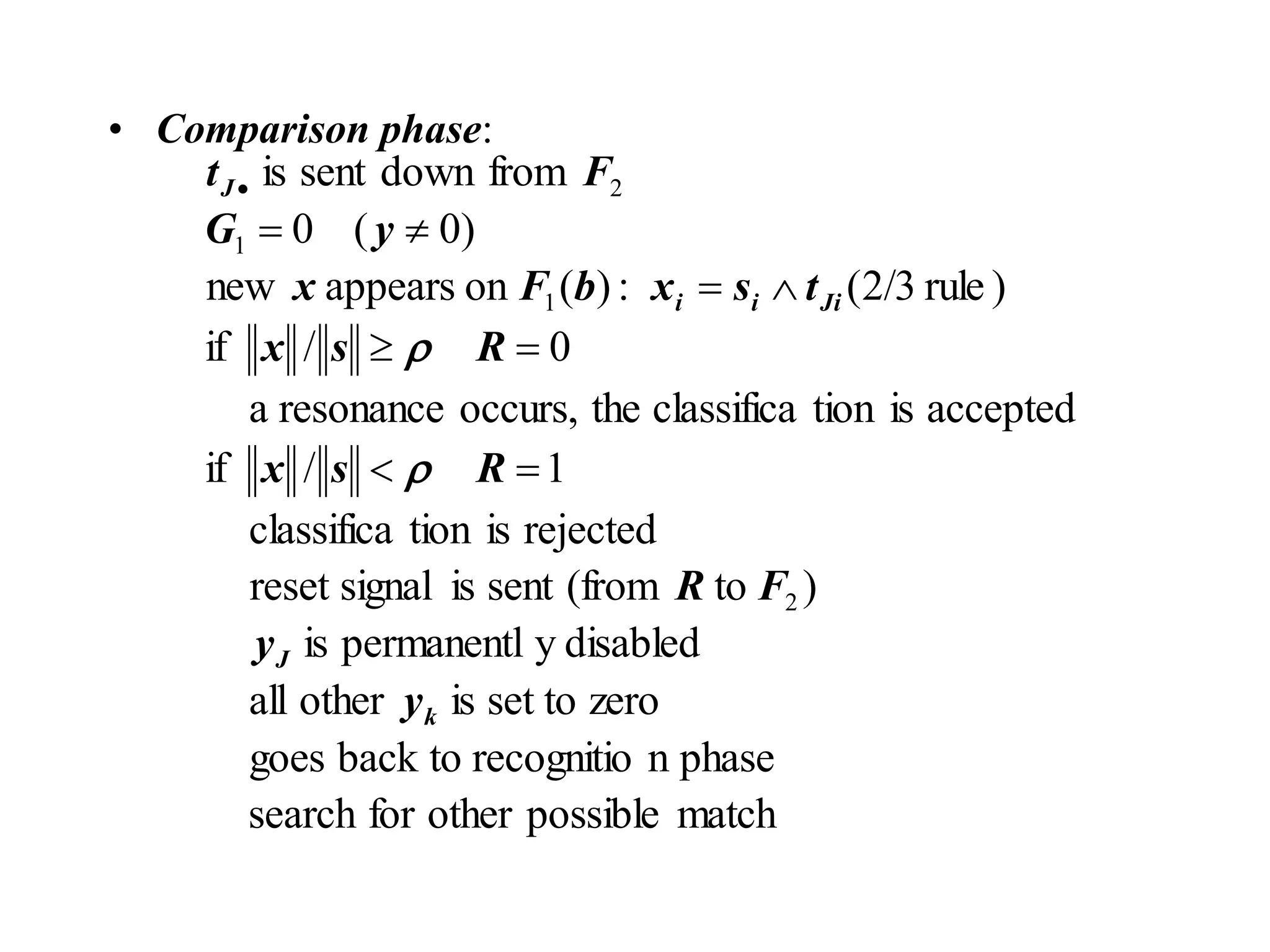

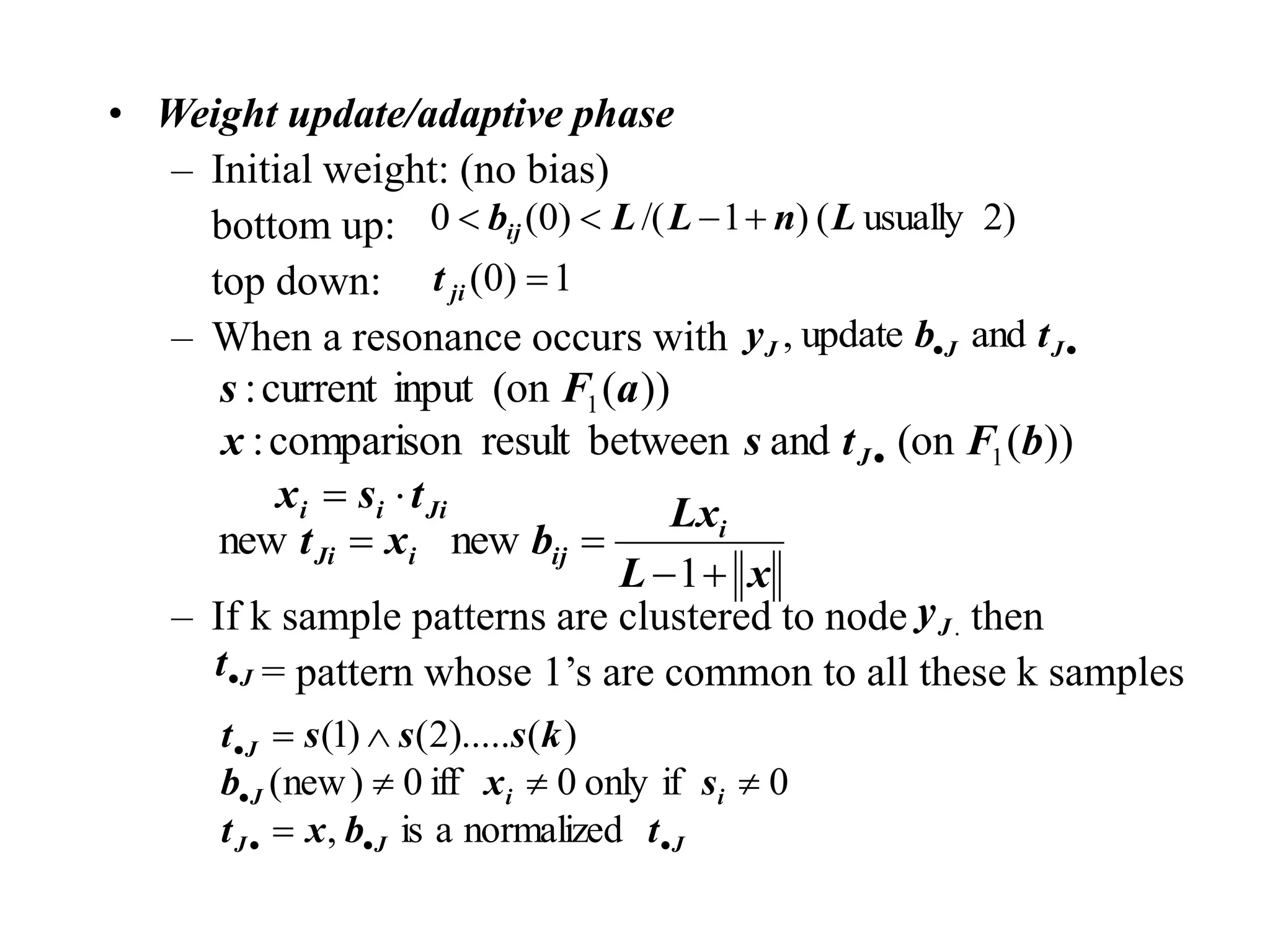

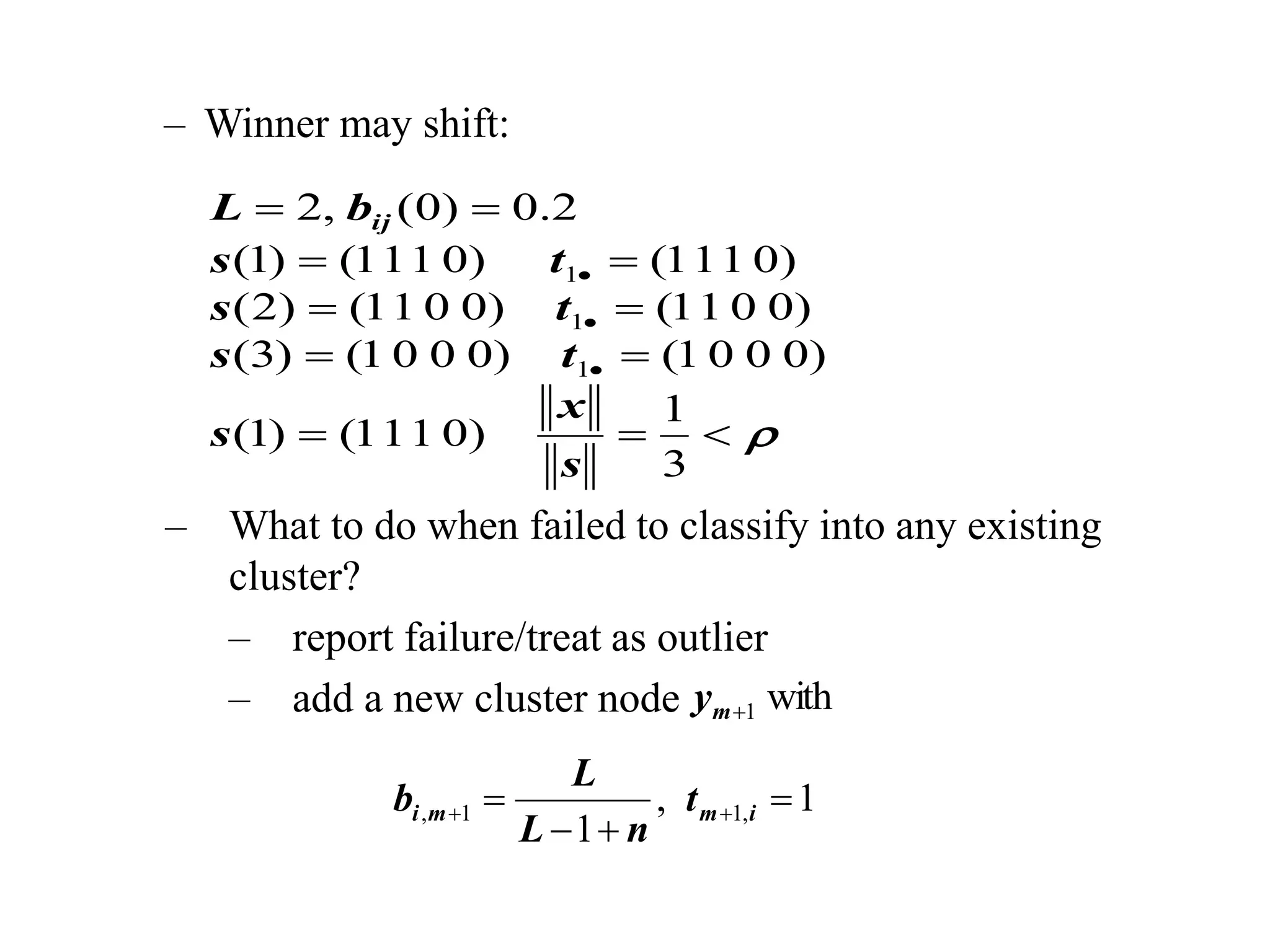

This document summarizes Adaptive Resonance Theory (ART), which was developed to let neural networks learn new patterns incrementally without forgetting previously learned ones (the stability-plasticity dilemma). ART1 handles binary input patterns; ART2 handles continuous-valued patterns. The core idea is to find the existing cluster most similar to a new input and, if that match does not meet a similarity threshold (the vigilance parameter), to try the next-best cluster or create a new one. The architecture connects input, interface, and cluster units through adaptive bottom-up and top-down weights. An input is classified when it resonates with a cluster whose prototype passes the vigilance test, and a new cluster is created when no existing cluster does.
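The search-then-commit cycle described above can be sketched for the binary (ART1) case. This is a minimal sketch, not a reference implementation. It assumes fast learning, where a winning prototype is replaced by its overlap with the input. It also assumes a pool of cluster units that grows on demand rather than a fixed number of units. The function name `art1_cluster`, the vigilance parameter `rho`, and the bottom-up weight parameter `L` (required to be greater than 1) are illustrative choices. ART2's continuous-valued dynamics are not covered.

```python
from typing import List, Tuple


def art1_cluster(
    patterns: List[List[int]], rho: float = 0.7, L: float = 2.0
) -> Tuple[List[int], List[List[int]]]:
    """Cluster binary patterns with a simplified ART1 (fast learning, growable pool).

    rho: vigilance in (0, 1]; higher values create more, tighter clusters.
    L:   bottom-up weight parameter, assumed > 1.
    Returns (labels, prototypes); prototypes are the top-down weights t_J.
    """
    prototypes: List[List[int]] = []  # one binary prototype per committed cluster unit
    labels: List[int] = []
    for s in patterns:
        norm_s = sum(s)
        if norm_s == 0:
            raise ValueError("ART1 needs at least one active bit per input")

        def activation(j: int) -> float:
            # With fast learning, b_J = L * t_J / (L - 1 + |t_J|), so the
            # bottom-up input to cluster J is L * |s AND t_J| / (L - 1 + |t_J|).
            t = prototypes[j]
            overlap = sum(a & b for a, b in zip(s, t))
            return L * overlap / (L - 1 + sum(t))

        # Activations do not change during the search, so visiting clusters in
        # decreasing order is equivalent to inhibiting each rejected winner in turn.
        chosen = None
        for j in sorted(range(len(prototypes)), key=activation, reverse=True):
            x = [a & b for a, b in zip(s, prototypes[j])]  # interface units: s AND t_J
            if sum(x) / norm_s >= rho:  # vigilance test passed: resonance
                prototypes[j] = x  # fast learning: prototype becomes the overlap
                chosen = j
                break
            # Otherwise reset: cluster j stays inhibited for this input.

        if chosen is None:  # no committed cluster resonates: commit a new one
            prototypes.append(list(s))
            chosen = len(prototypes) - 1
        labels.append(chosen)
    return labels, prototypes


patterns = [[1, 1, 0, 0], [0, 0, 0, 1], [1, 0, 0, 0], [0, 0, 1, 1]]
print(art1_cluster(patterns, rho=0.4))  # ([0, 1, 0, 1], [[1, 0, 0, 0], [0, 0, 0, 1]])
print(art1_cluster(patterns, rho=0.7))  # ([0, 1, 0, 2], [[1, 0, 0, 0], [0, 0, 0, 1], [0, 0, 1, 1]])
```

Raising the vigilance from 0.4 to 0.7 in this small illustrative run causes `[0, 0, 1, 1]` to fail the match against the `[0, 0, 0, 1]` prototype (overlap ratio 0.5), so a third cluster is committed instead. Earlier clusters are left unchanged, which reflects how ART adds new categories without overwriting old ones.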