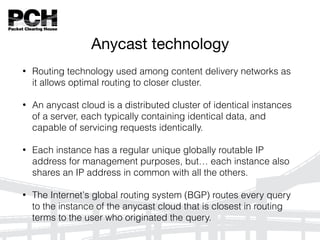

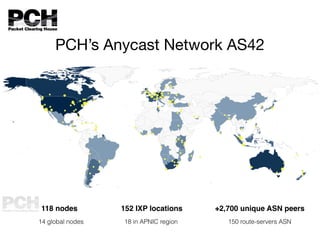

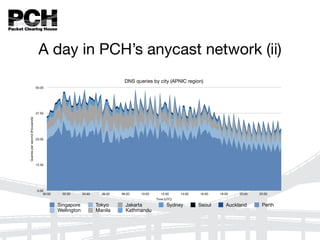

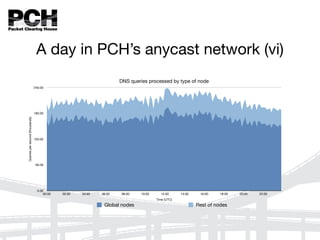

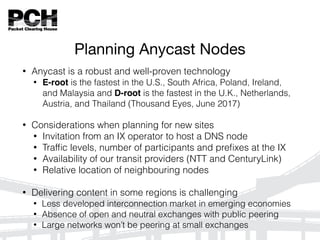

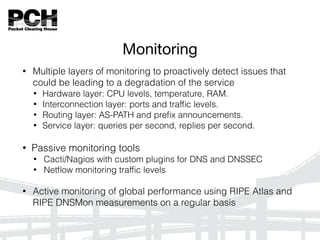

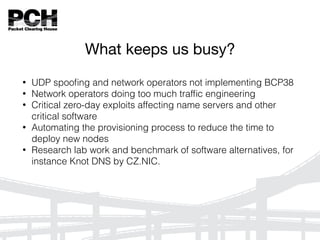

This document discusses the global anycast network that Packet Clearing House (PCH) uses to deliver DNS services. PCH operates 118 nodes across 14 global locations and 152 internet exchange points. With anycast, multiple server instances announce the same IP address, and routing delivers each DNS query to the topologically nearest instance. PCH has operated anycast DNS services since 1997, and its network has evolved to support the growth of the internet. The document describes considerations for planning new anycast nodes and how PCH monitors and operates its global anycast network.
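The way anycast steers a query to a nearby instance can be illustrated with a minimal sketch. It assumes a much-simplified model in which a client's network prefers the announcement with the shortest AS path; real BGP route selection also weighs local preference, MED, and other attributes. The node labels, AS numbers, and prefix below are hypothetical placeholders taken from documentation ranges, not PCH's actual topology.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Announcement:
    node: str       # hypothetical label for an anycast instance
    as_path: tuple  # AS numbers between the client's network and the node


def select_instance(announcements):
    """Pick the announcement with the shortest AS path (ties broken by node name).

    Simplified stand-in for BGP best-path selection, for illustration only.
    """
    if not announcements:
        raise ValueError("no announcements for the anycast prefix")
    return min(announcements, key=lambda a: (len(a.as_path), a.node))


# Every node announces the same prefix (documentation range, RFC 5737).
ANYCAST_PREFIX = "192.0.2.0/24"

# AS numbers are from the documentation range (RFC 5398).
announcements = [
    Announcement("node-a", (64496, 64497, 64498)),
    Announcement("node-b", (64496, 64499)),
    Announcement("node-c", (64496, 64500, 64501, 64502)),
]

best = select_instance(announcements)
print(f"{ANYCAST_PREFIX} -> {best.node} via AS path {best.as_path}")

# If node-b withdraws its announcement, traffic shifts to the next-nearest node.
remaining = [a for a in announcements if a.node != "node-b"]
fallback = select_instance(remaining)
print(f"after withdrawal: {ANYCAST_PREFIX} -> {fallback.node}")
```

The second call shows why anycast also provides failover in this model: when one instance stops announcing the prefix, queries reach the next-nearest instance without any change on the client side.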