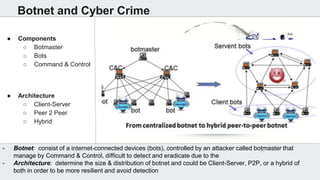

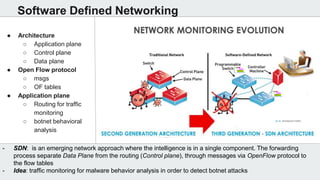

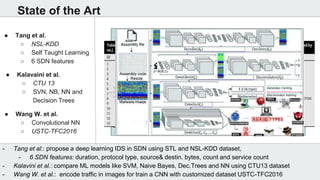

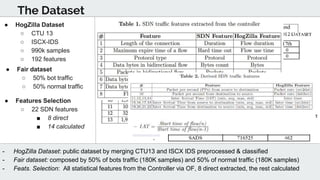

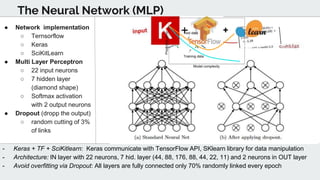

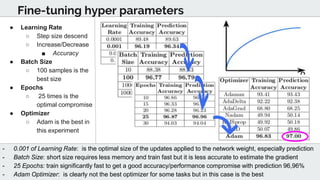

The document discusses deep learning techniques for botnet detection in software-defined networks (SDN) and highlights the challenges posed by different botnet architectures. It describes a multi-layer perceptron neural network, trained on specific datasets with tuned parameters, that reaches a prediction accuracy of 97%. Future work includes building a new unbiased dataset and performing deeper traffic analysis with the proposed SDN framework.
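
To make the multi-layer perceptron idea concrete, here is a minimal sketch of a one-hidden-layer MLP that labels a flow as benign or botnet. It is not the network from the slides: the two features (a normalised packet rate and a normalised mean packet size), the synthetic data generator, the layer size, and the learning rate are all assumptions chosen for illustration, and the names `TinyMLP` and `make_synthetic_flows` are hypothetical.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class TinyMLP:
    """One hidden tanh layer and a sigmoid output, trained with plain SGD."""

    def __init__(self, n_in, n_hidden, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)]
                   for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
        self.b2 = 0.0

    def forward(self, x):
        # Hidden activations, then the probability that the flow is botnet.
        h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        p = sigmoid(sum(w * hj for w, hj in zip(self.w2, h)) + self.b2)
        return h, p

    def train_step(self, x, y, lr):
        h, p = self.forward(x)
        d_out = p - y  # gradient of cross-entropy loss w.r.t. the output logit
        for j, hj in enumerate(h):
            # Backpropagate through tanh before updating the output weight.
            d_hid = d_out * self.w2[j] * (1.0 - hj * hj)
            self.w2[j] -= lr * d_out * hj
            for i, xi in enumerate(x):
                self.w1[j][i] -= lr * d_hid * xi
            self.b1[j] -= lr * d_hid
        self.b2 -= lr * d_out

    def predict(self, x):
        return 1 if self.forward(x)[1] >= 0.5 else 0


def make_synthetic_flows(n, seed):
    """ASSUMPTION: toy data, not a real botnet dataset.

    Label 1 ("botnet") clusters at high packet rate and small packets,
    label 0 ("benign") at the opposite corner.
    """
    rng = random.Random(seed)
    flows = []
    for _ in range(n):
        label = rng.randint(0, 1)
        centre = (0.8, 0.2) if label else (0.2, 0.8)
        flows.append(([rng.gauss(c, 0.1) for c in centre], label))
    return flows


train = make_synthetic_flows(200, seed=1)
held_out = make_synthetic_flows(100, seed=2)

model = TinyMLP(n_in=2, n_hidden=4)
for _ in range(50):  # epochs
    for x, y in train:
        model.train_step(x, y, lr=0.1)

accuracy = sum(model.predict(x) == y for x, y in held_out) / len(held_out)
print(f"held-out accuracy on synthetic data: {accuracy:.2f}")
```

The accuracy printed here only reflects how easy the toy data is to separate and is not comparable to the 97% reported in the document. A realistic pipeline would extract flow features from SDN controller statistics and would more likely use an established library than hand-written backpropagation.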