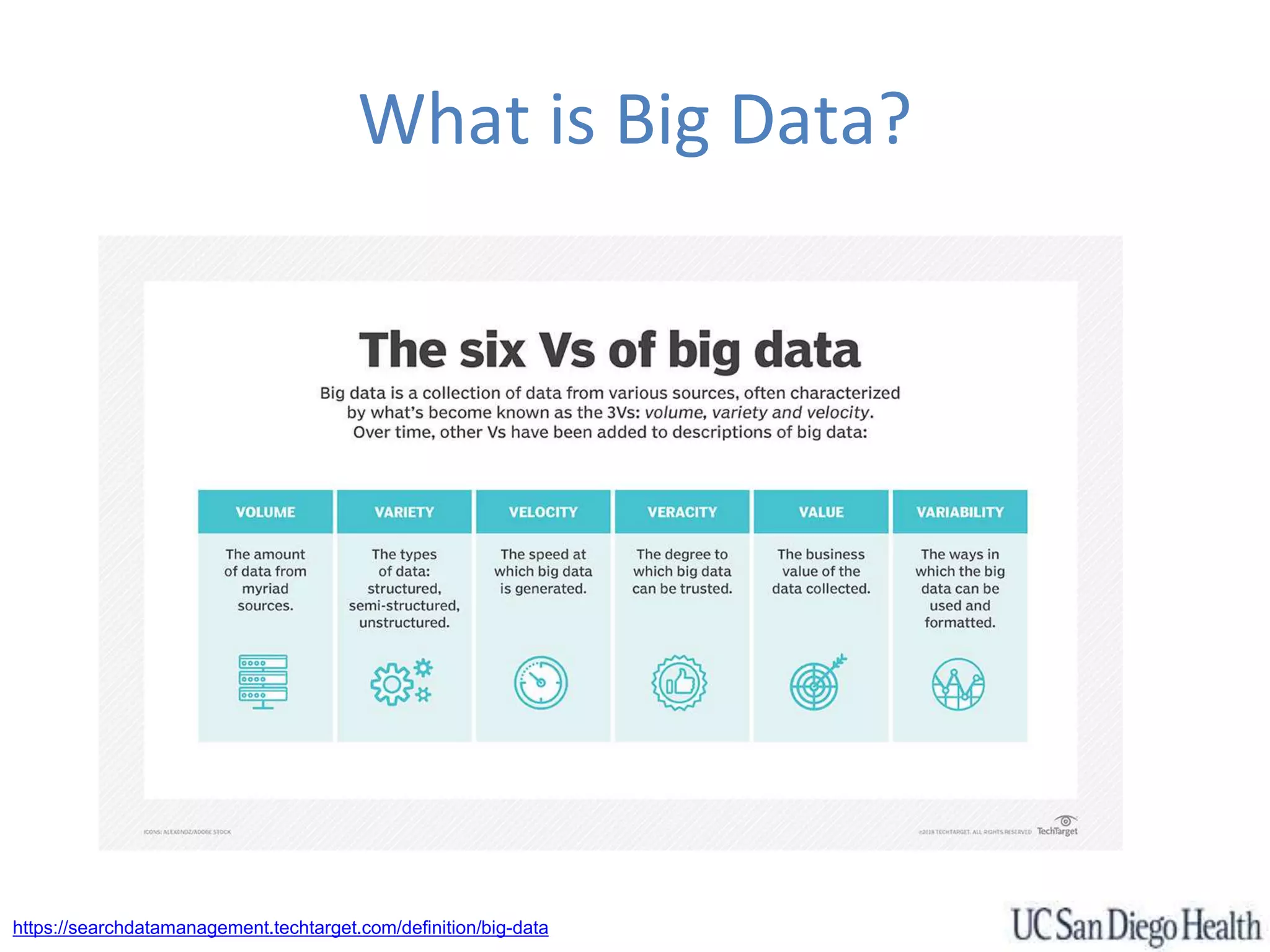

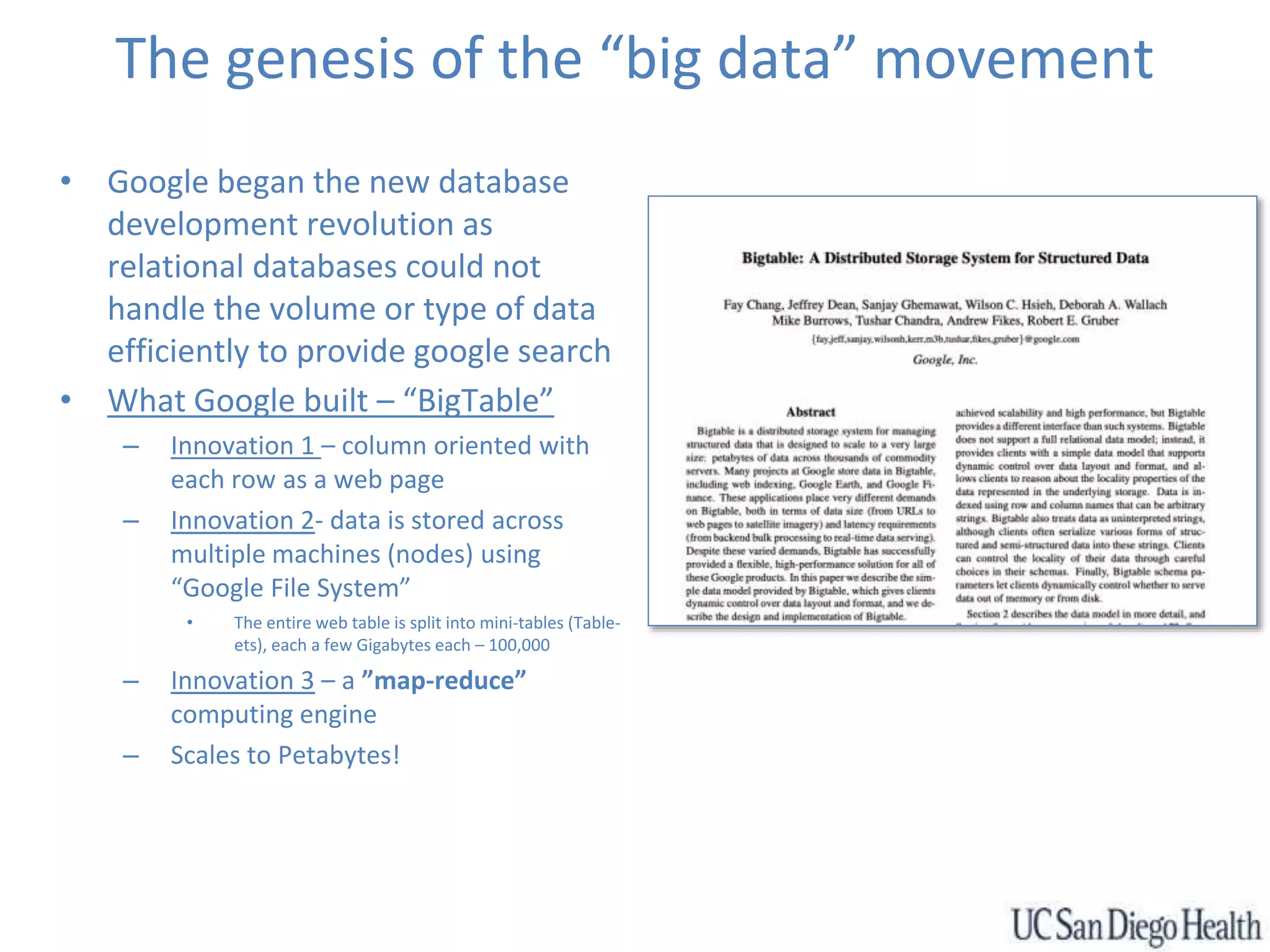

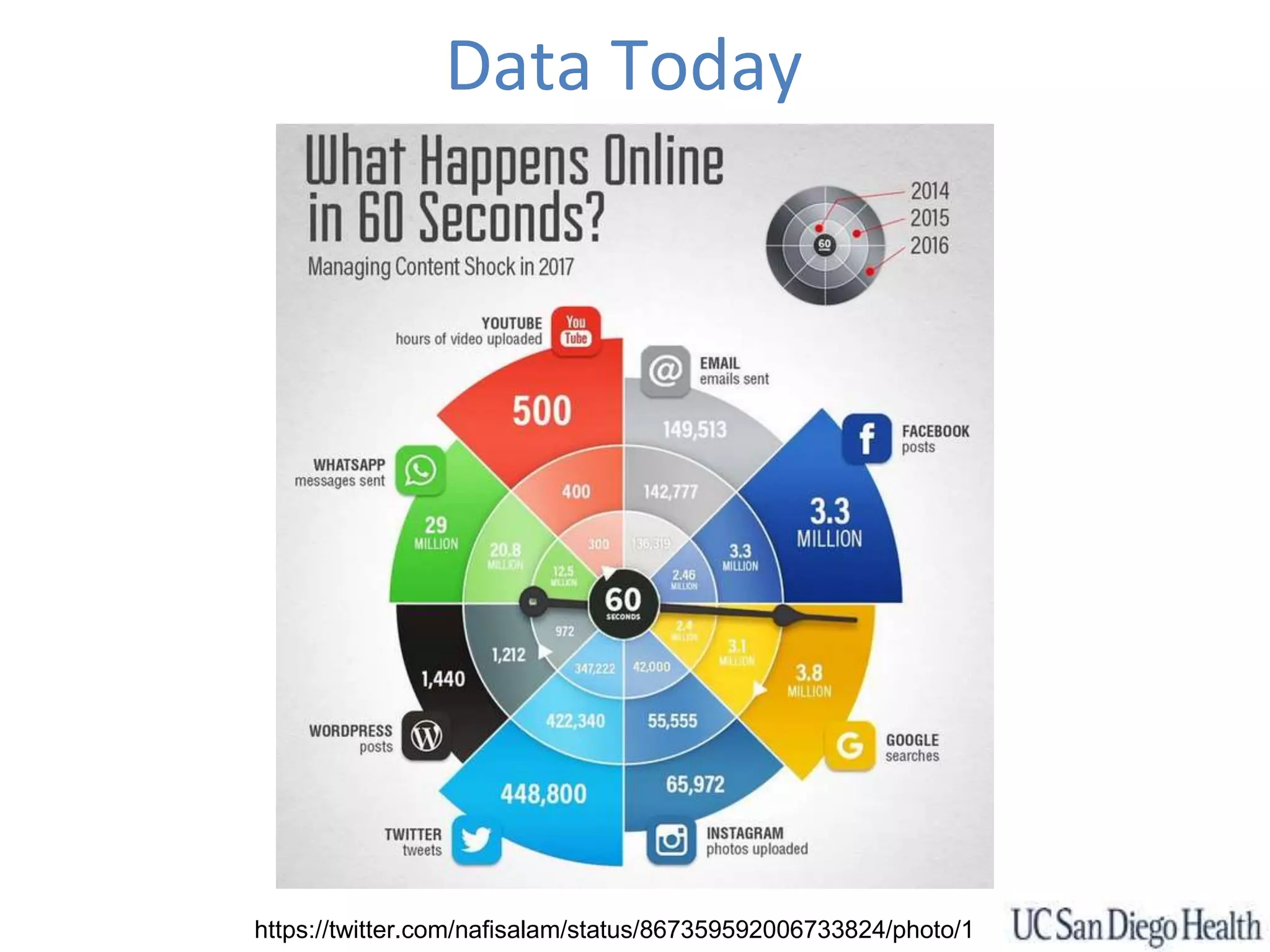

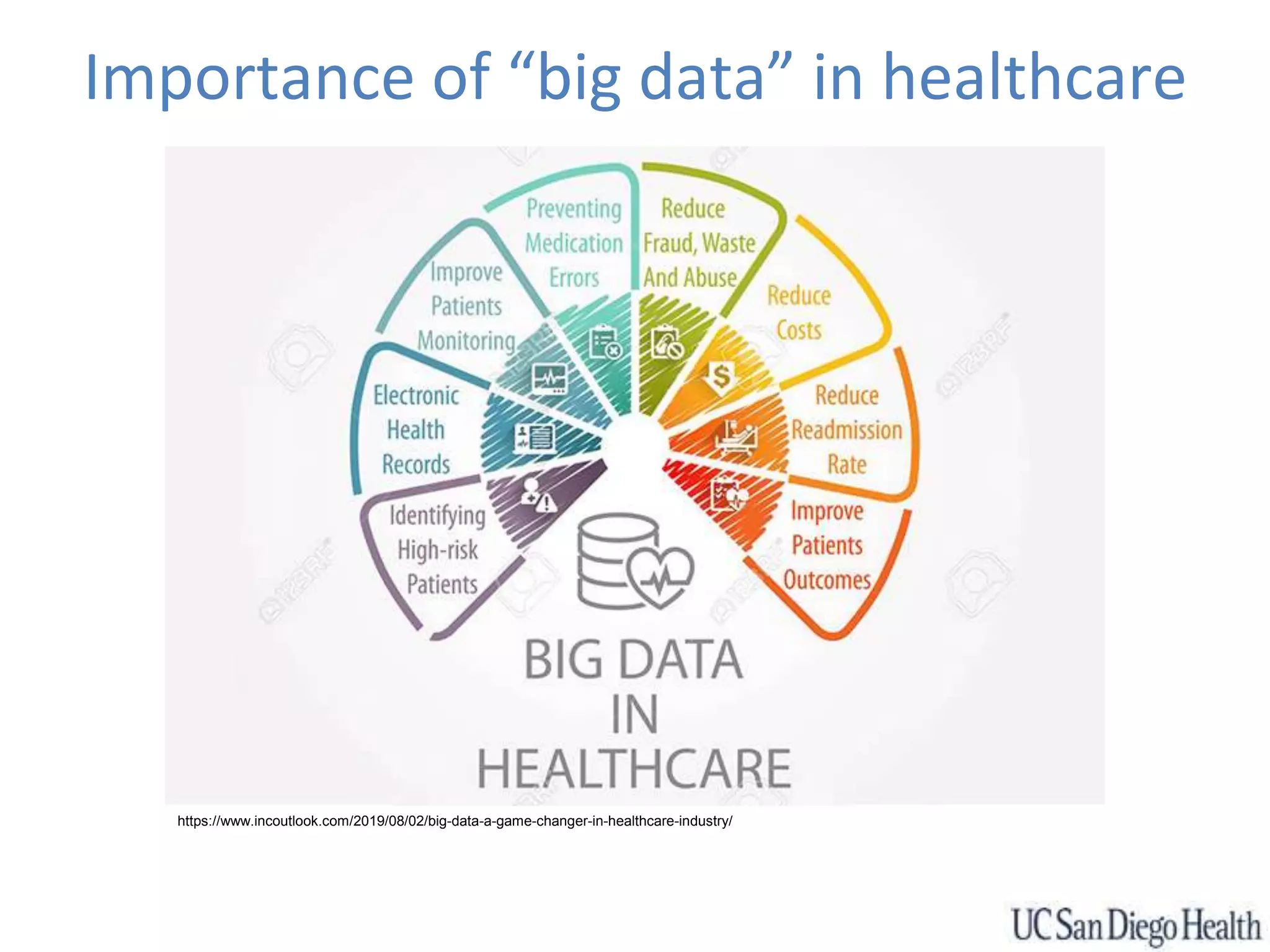

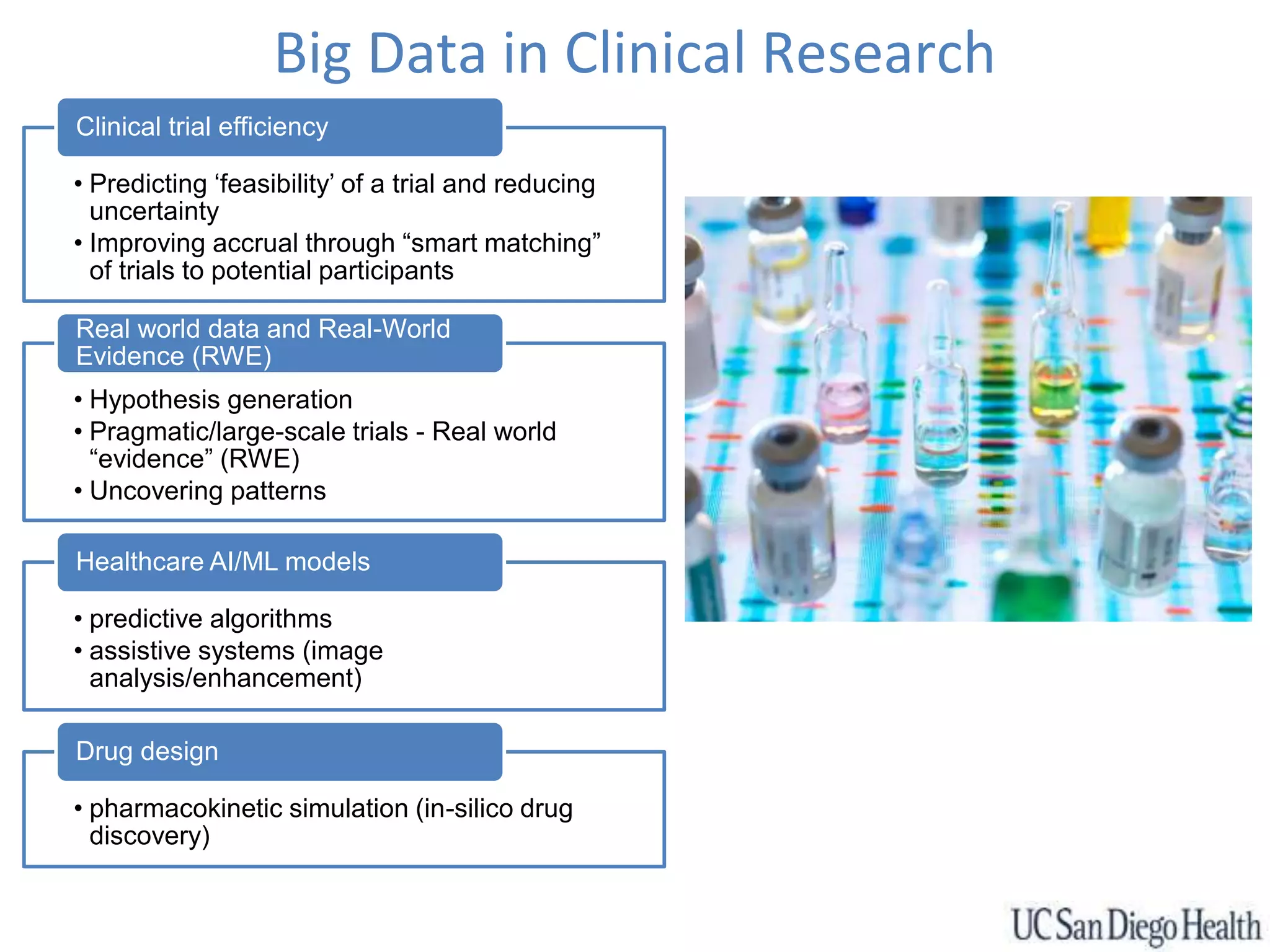

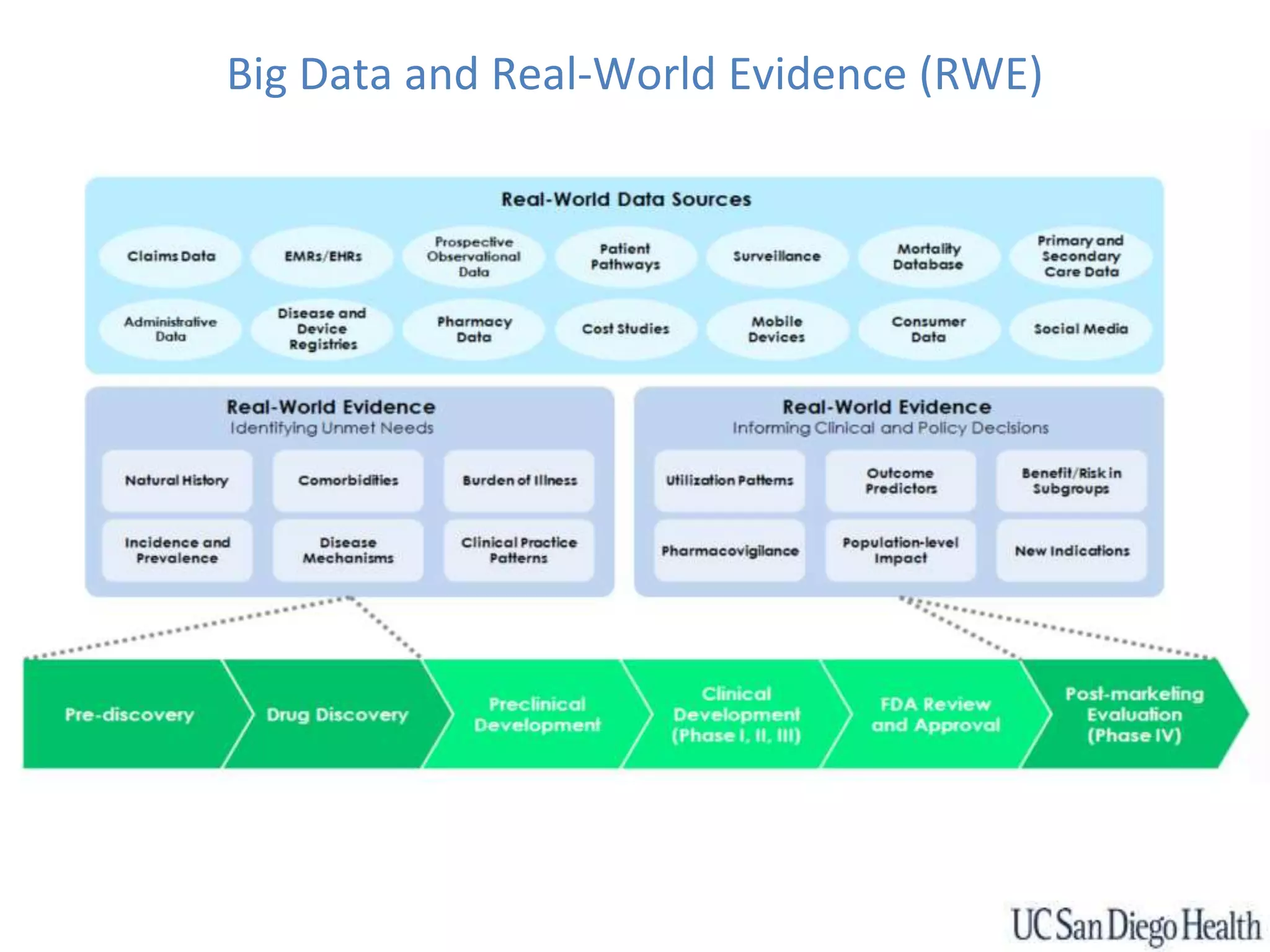

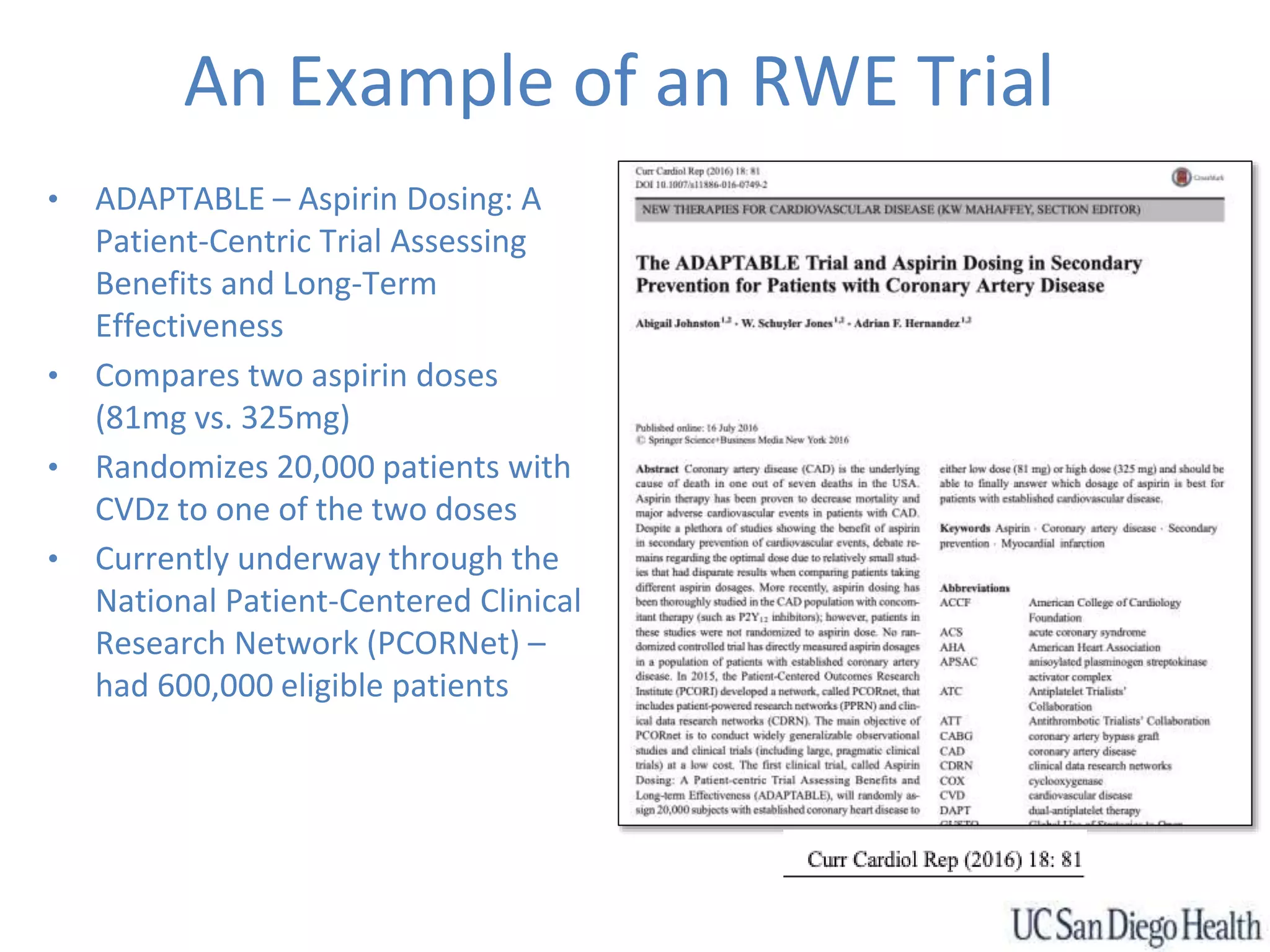

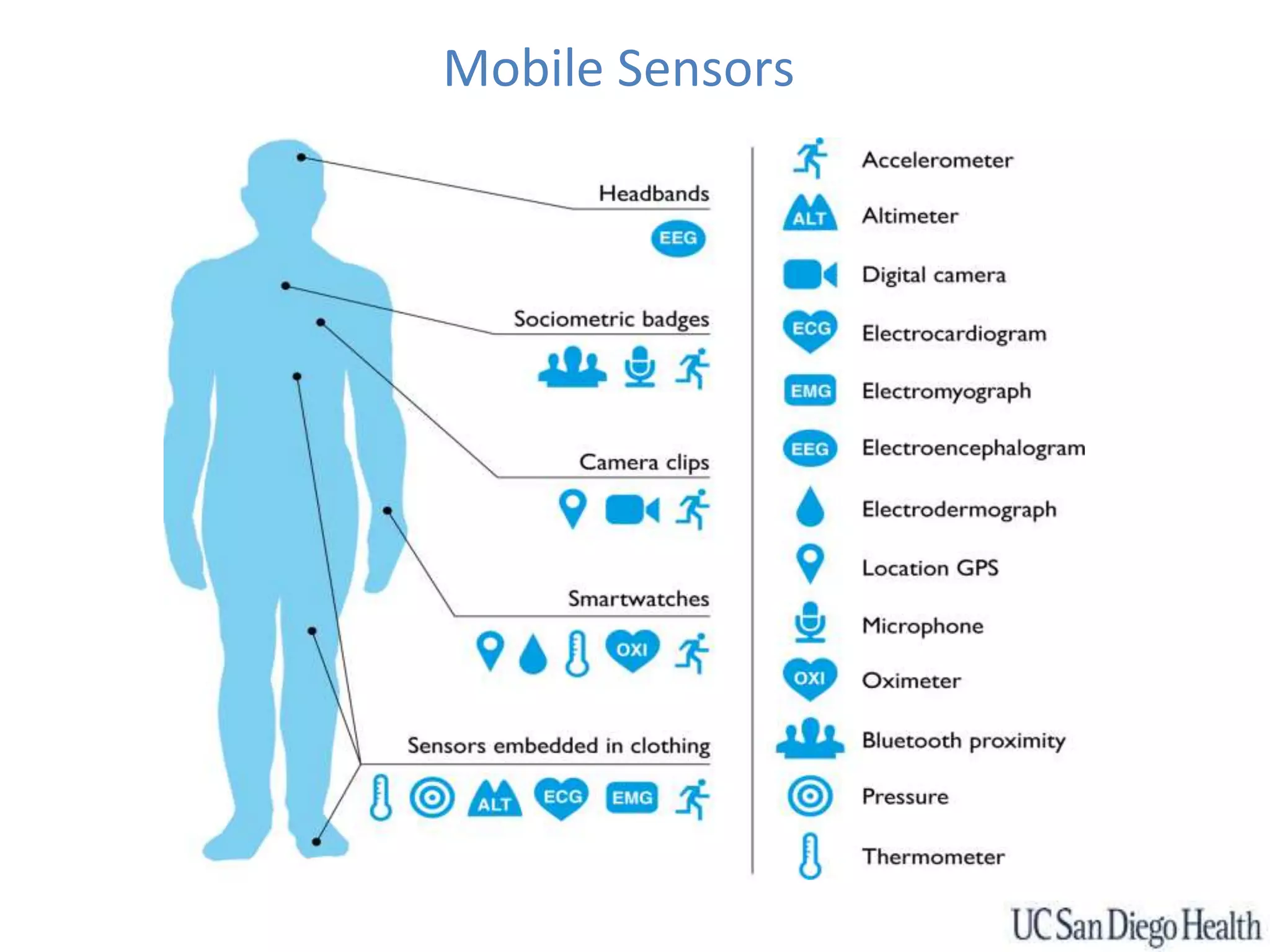

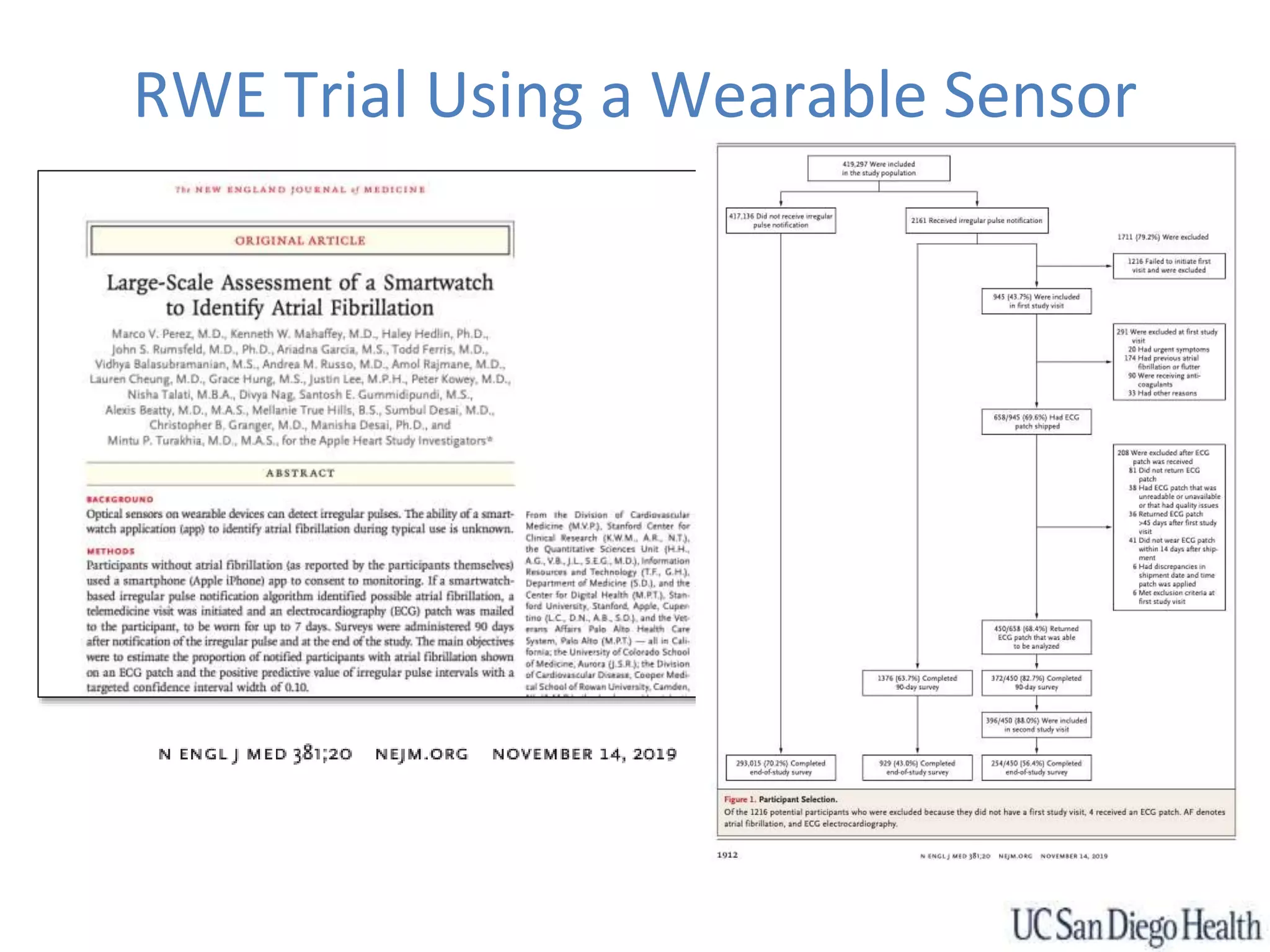

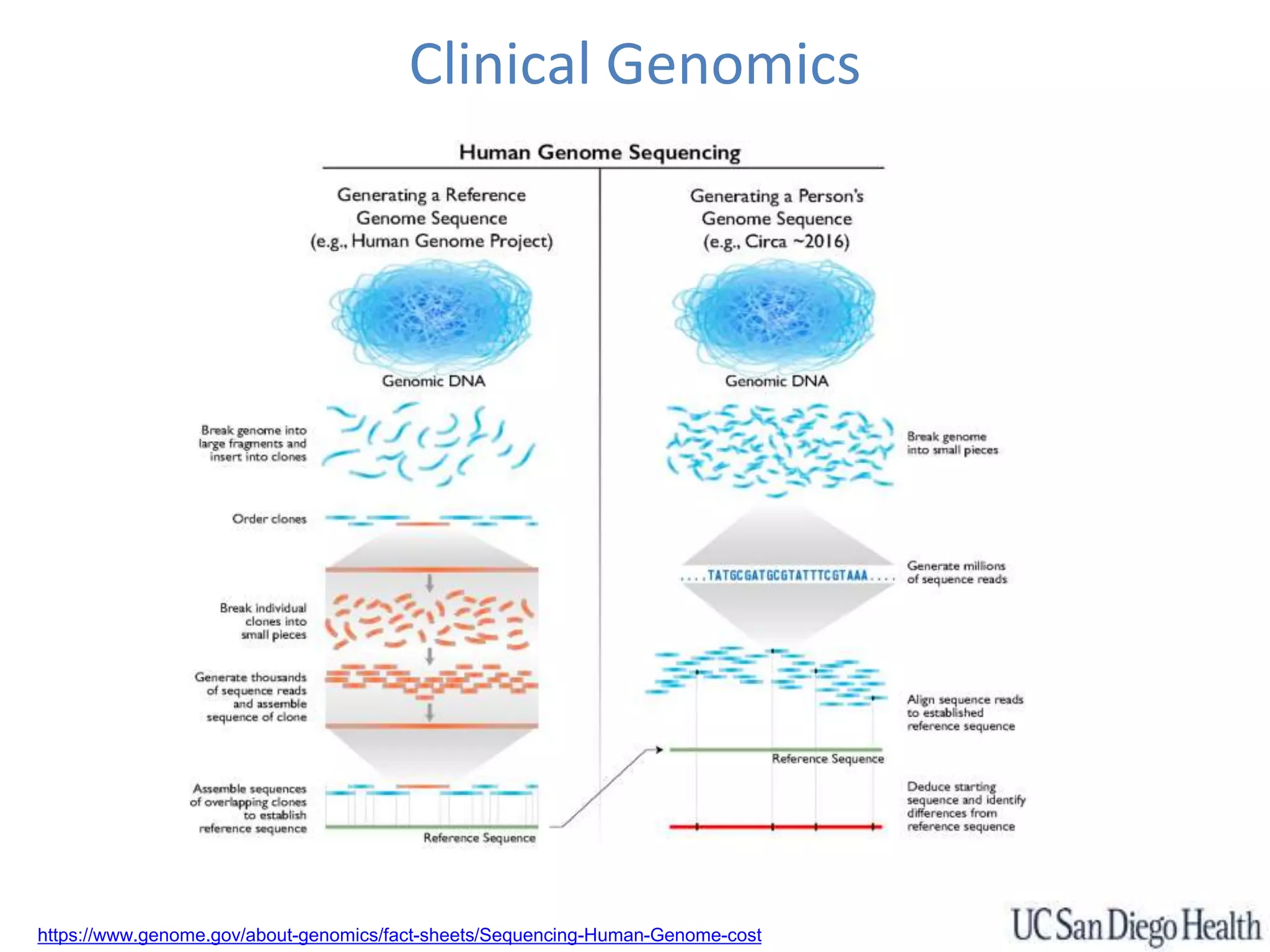

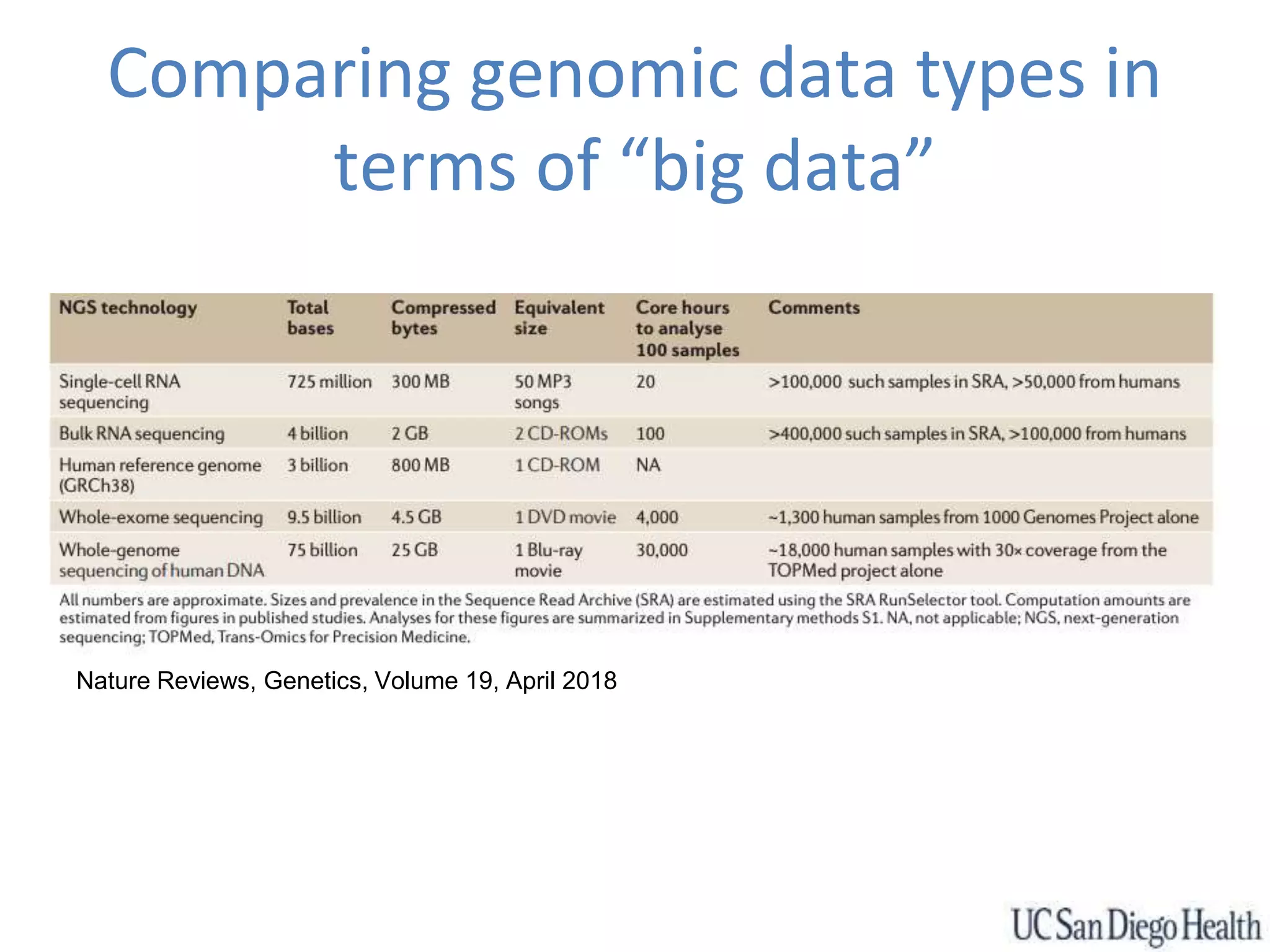

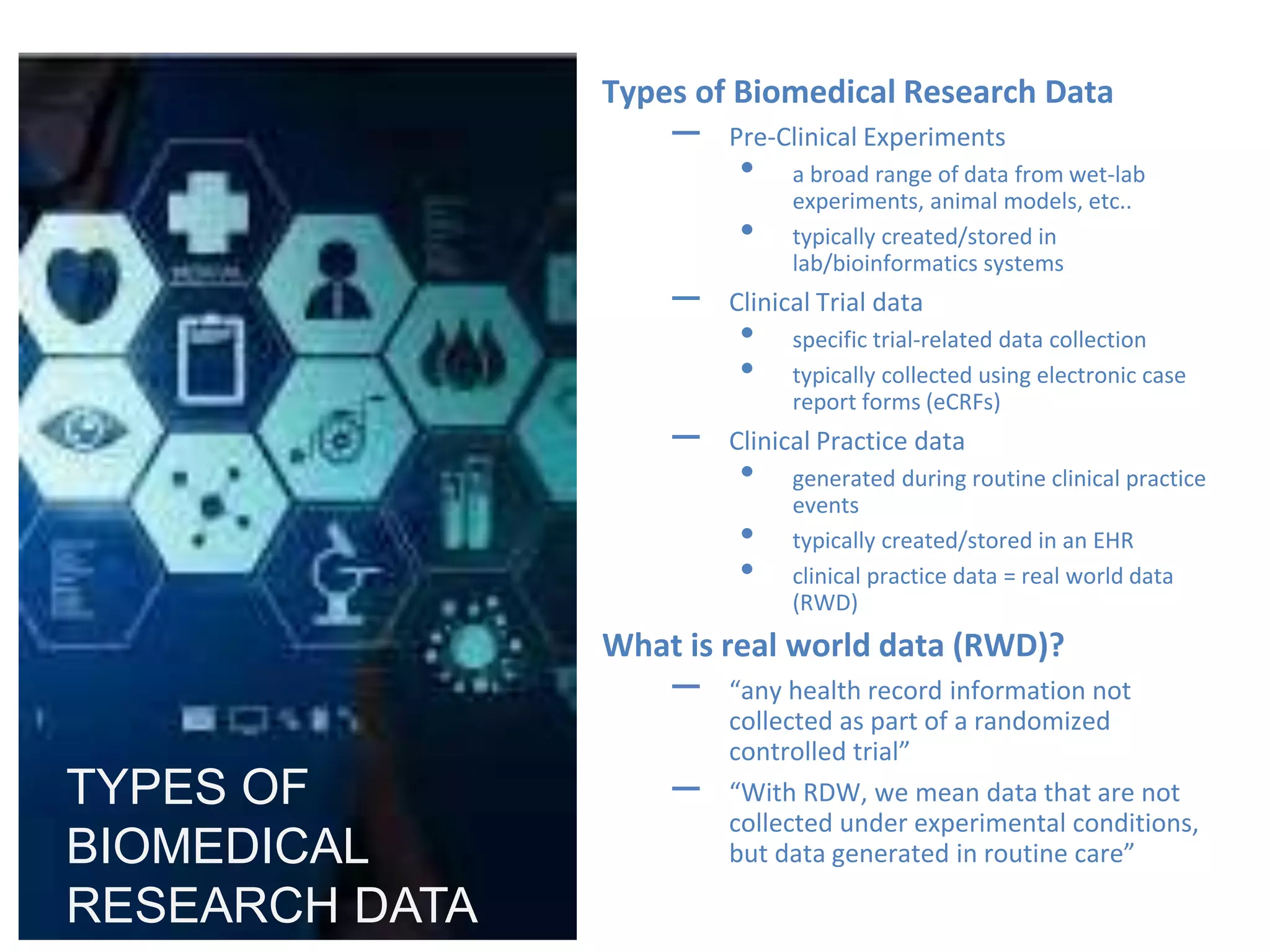

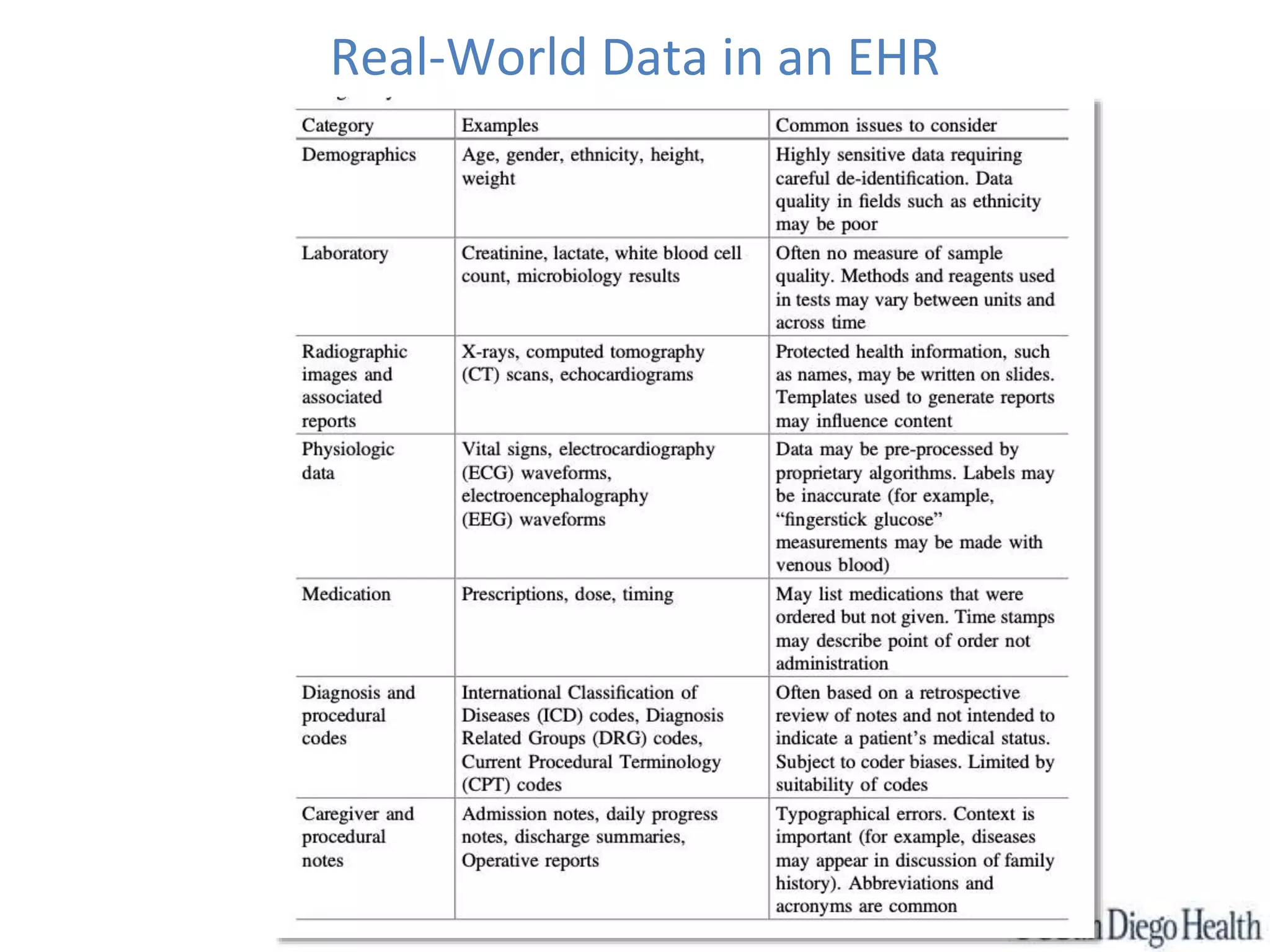

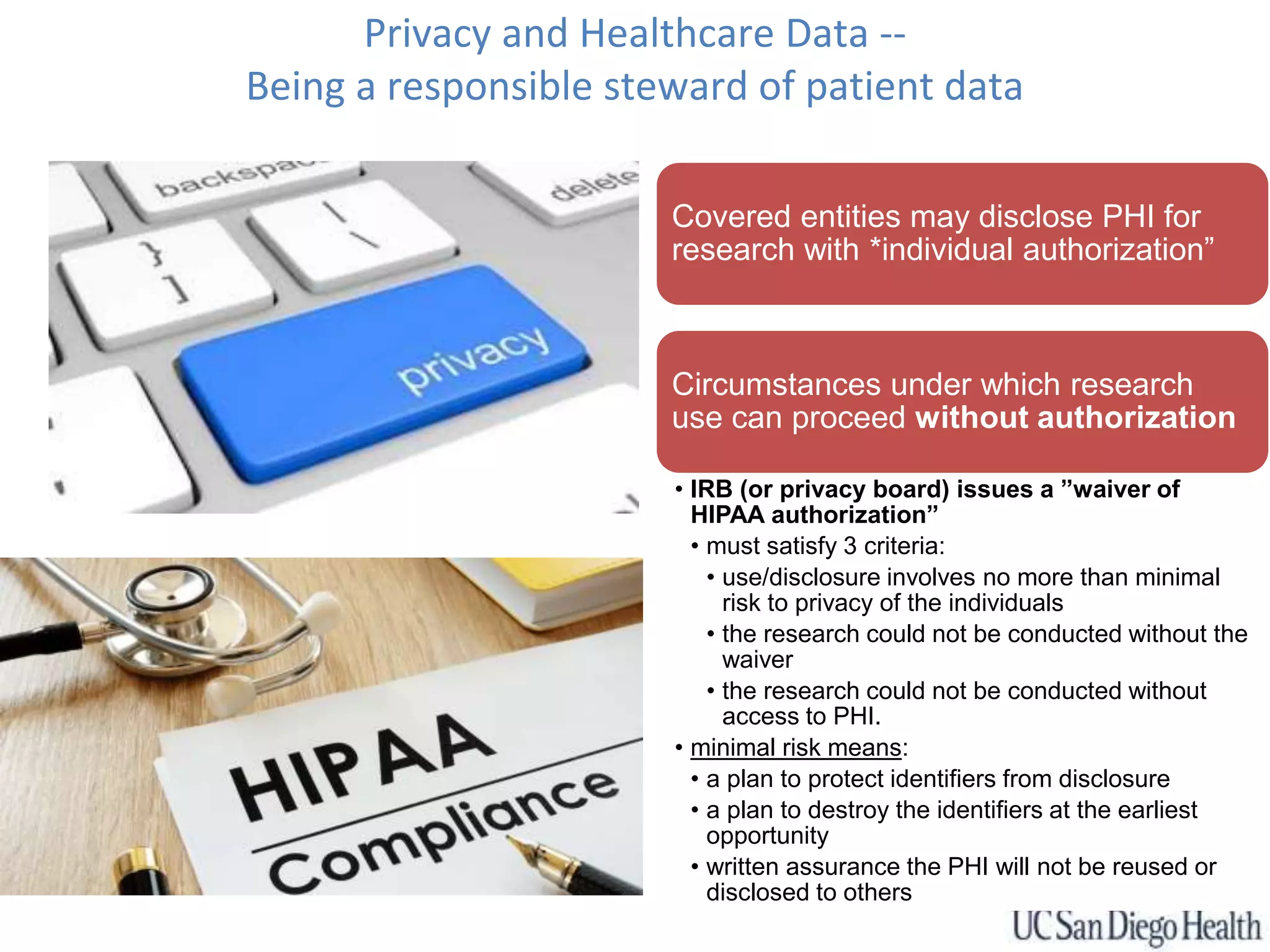

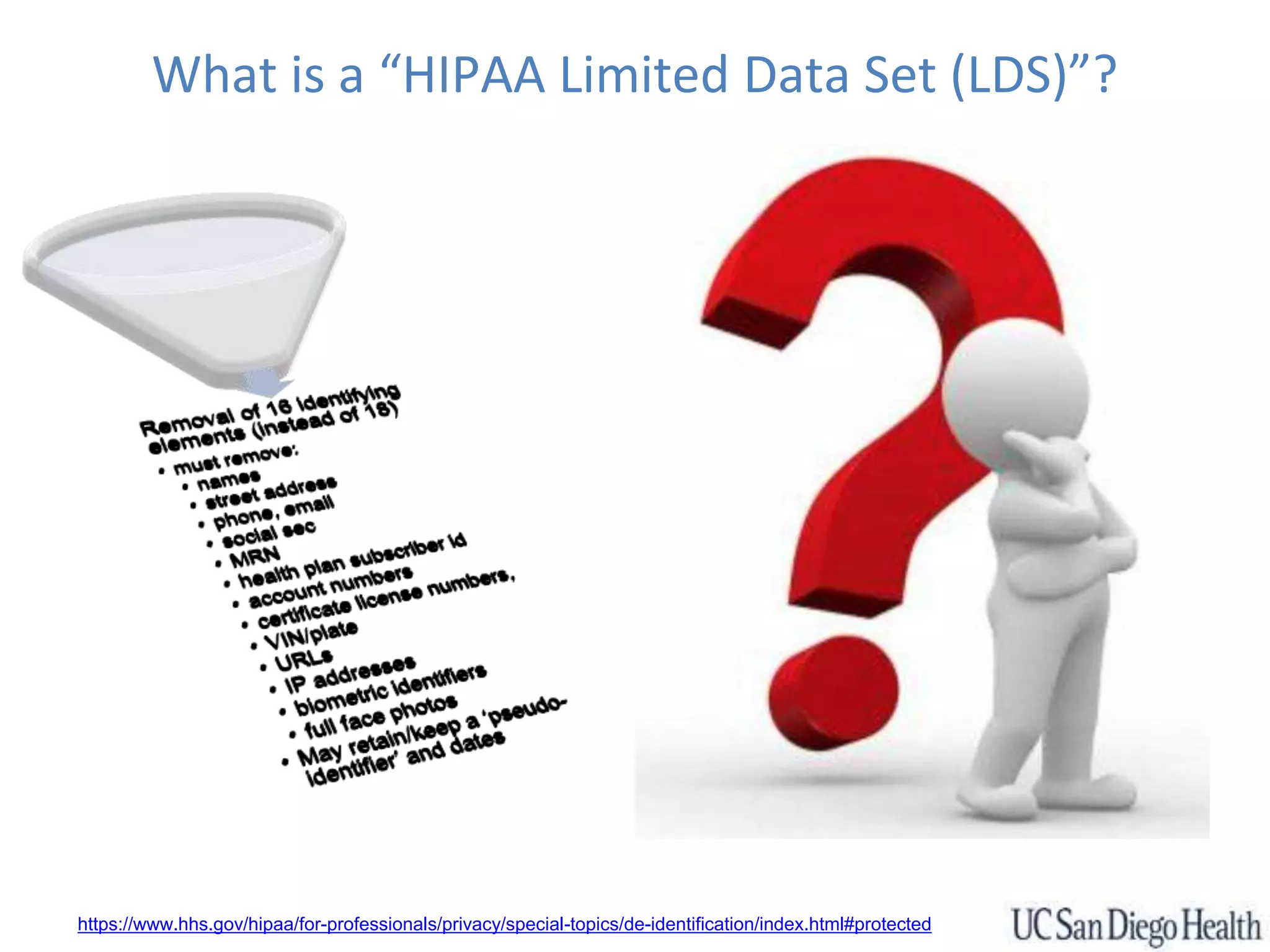

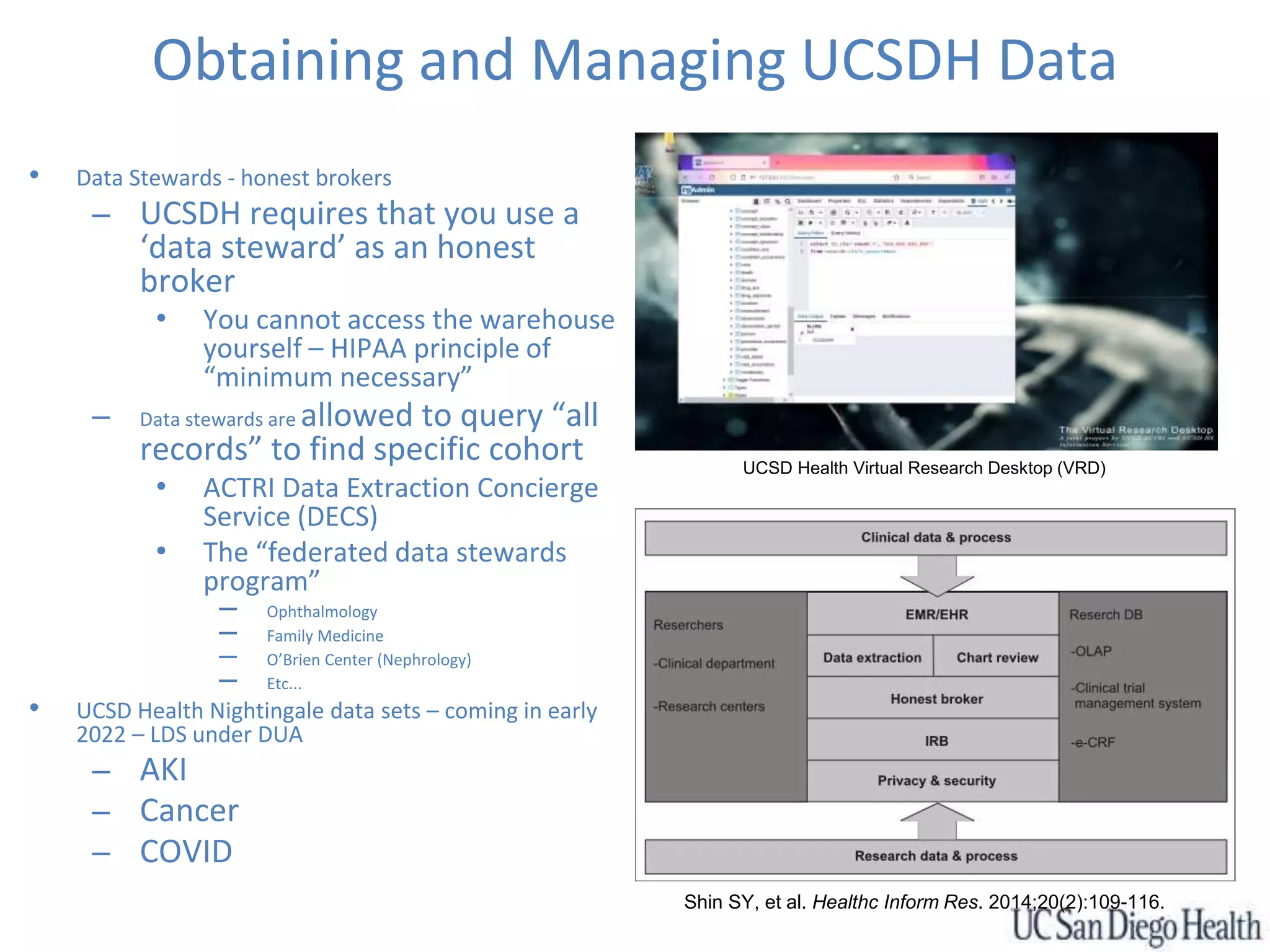

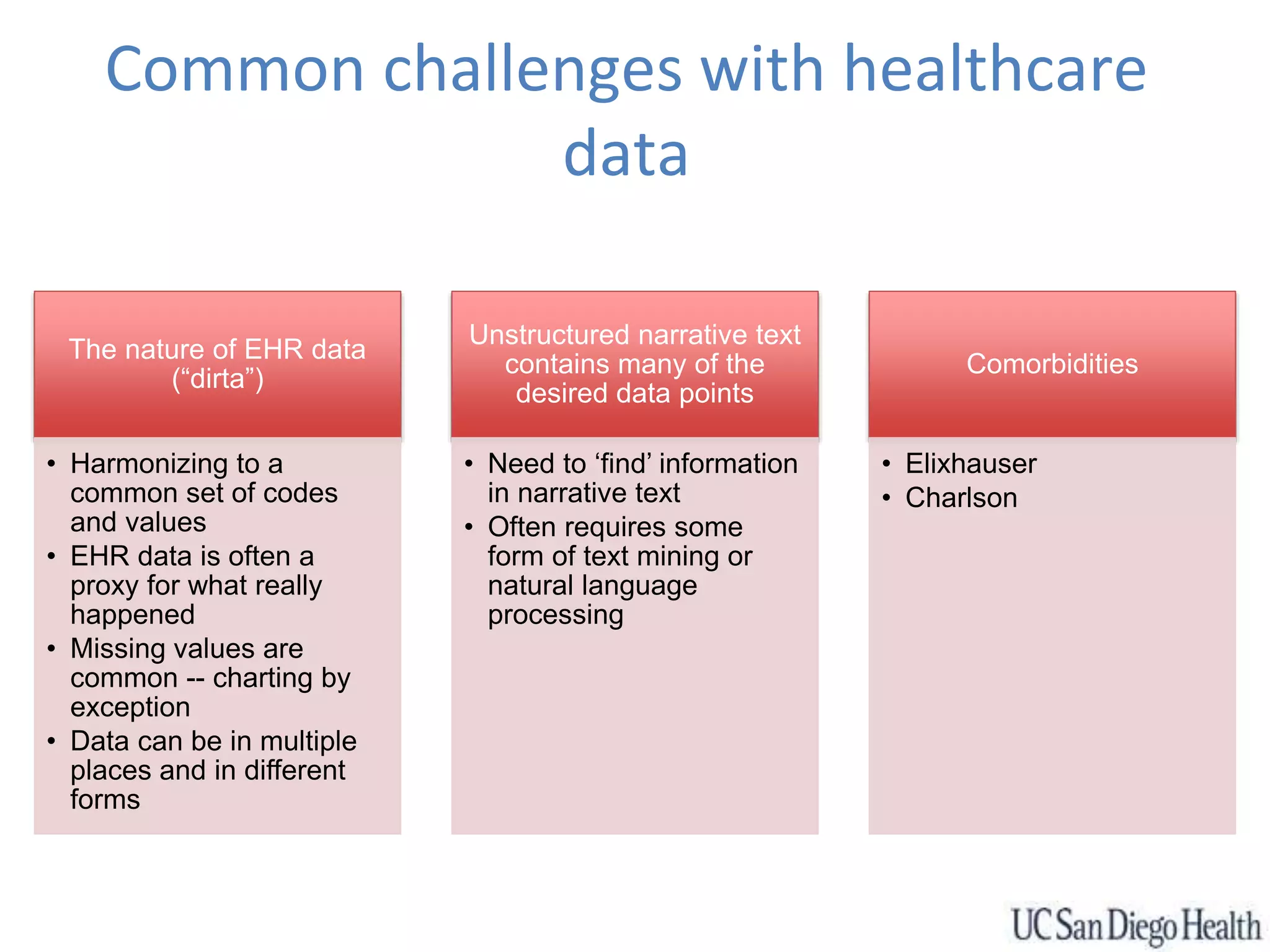

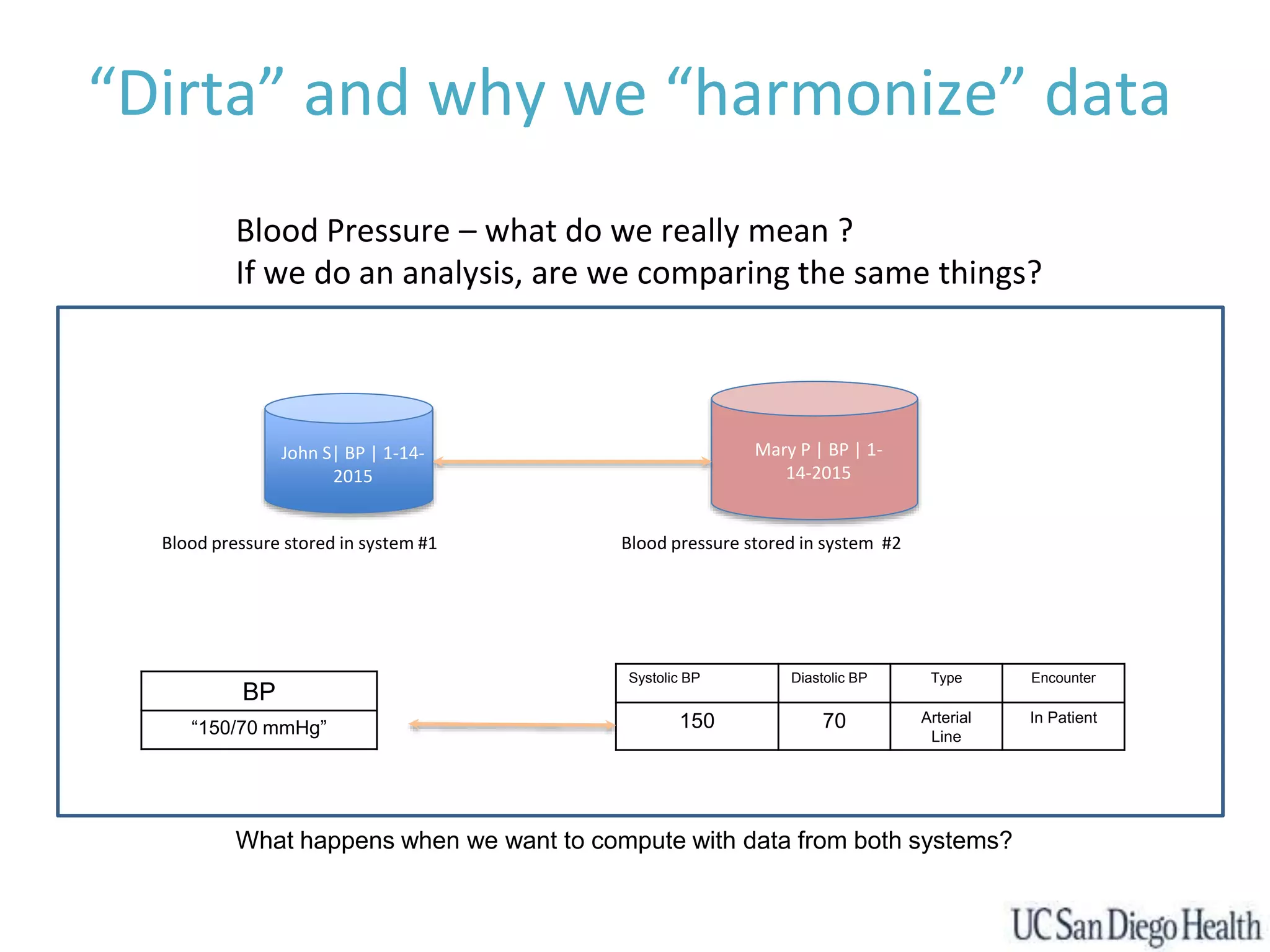

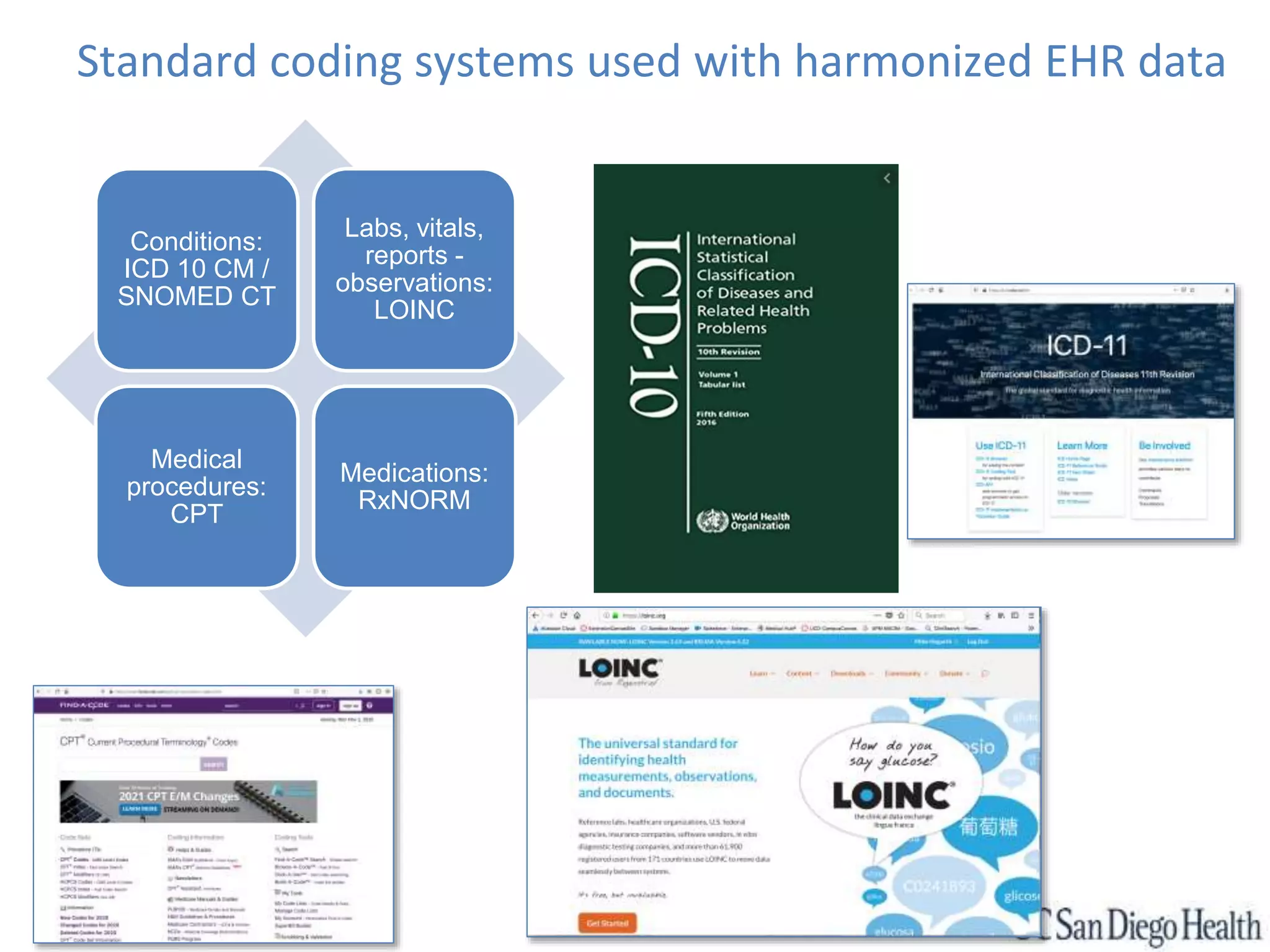

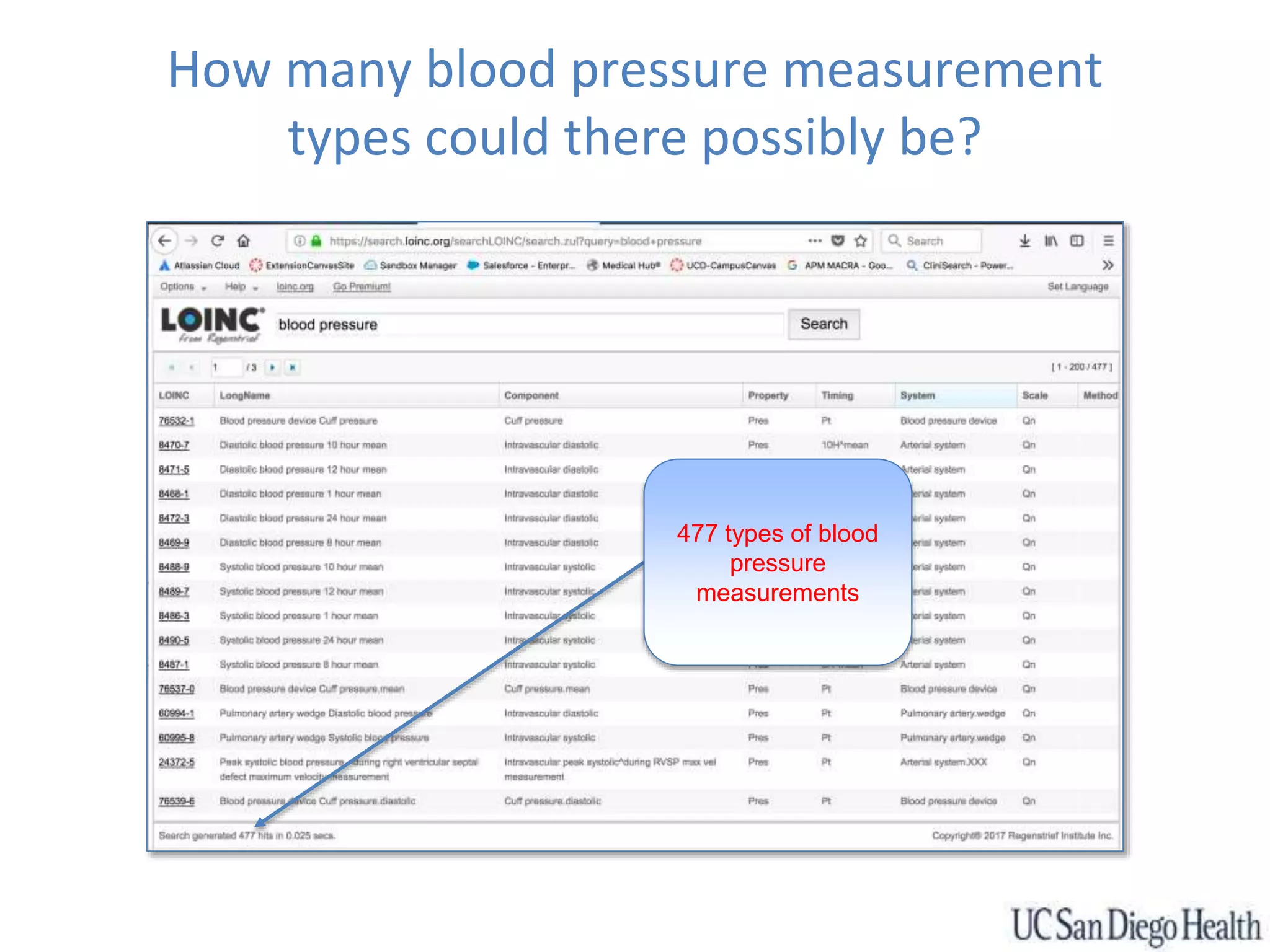

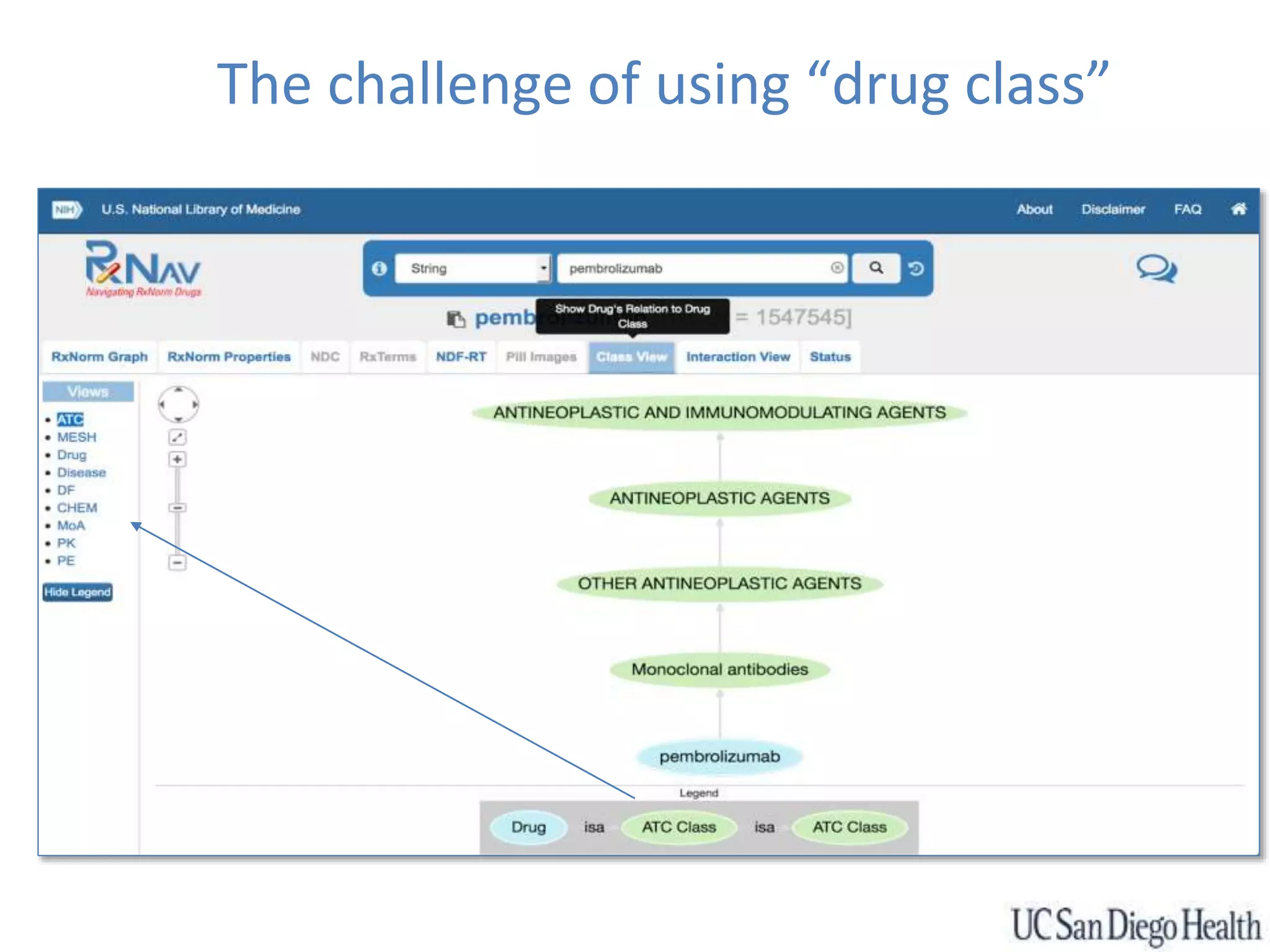

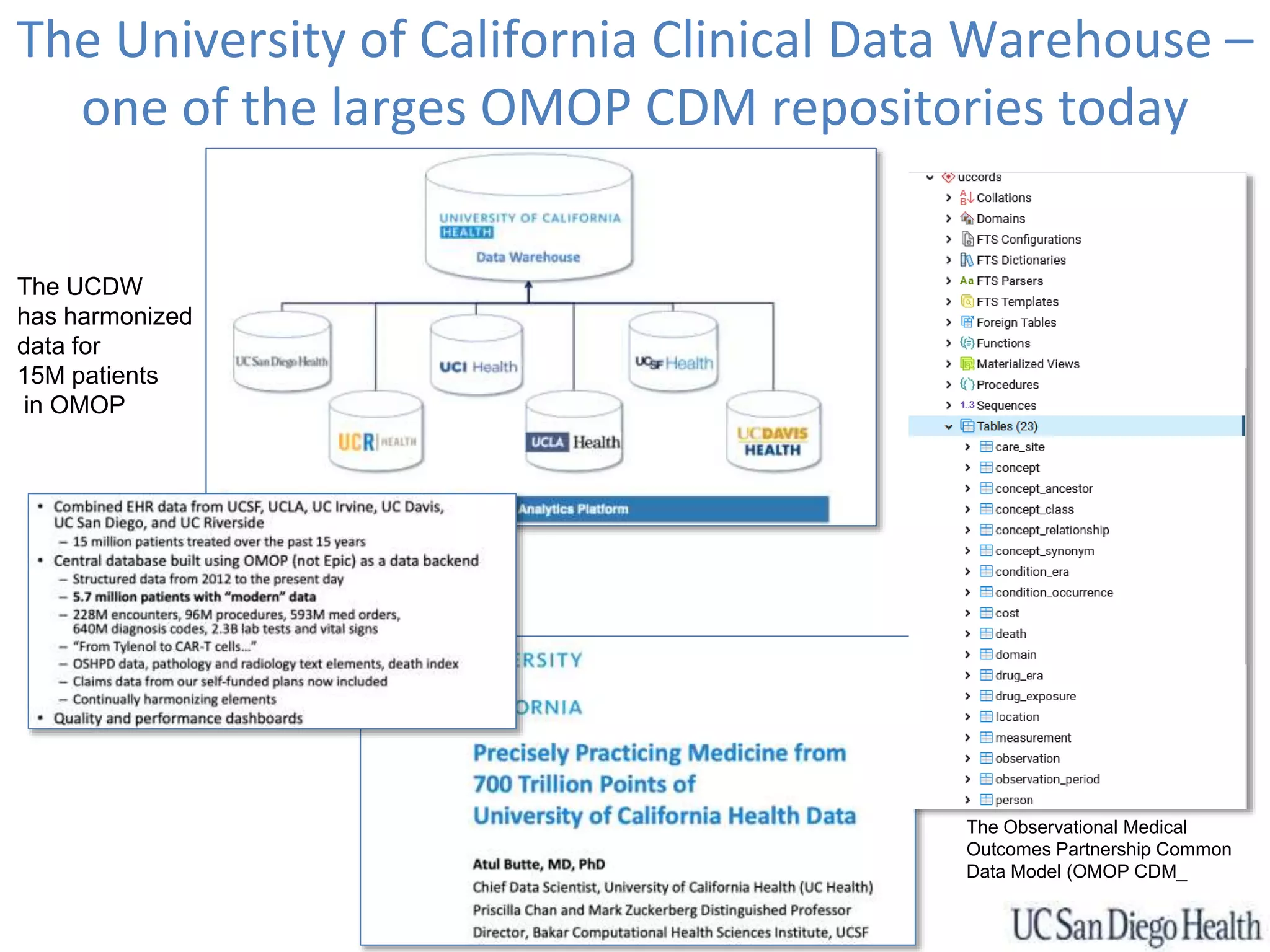

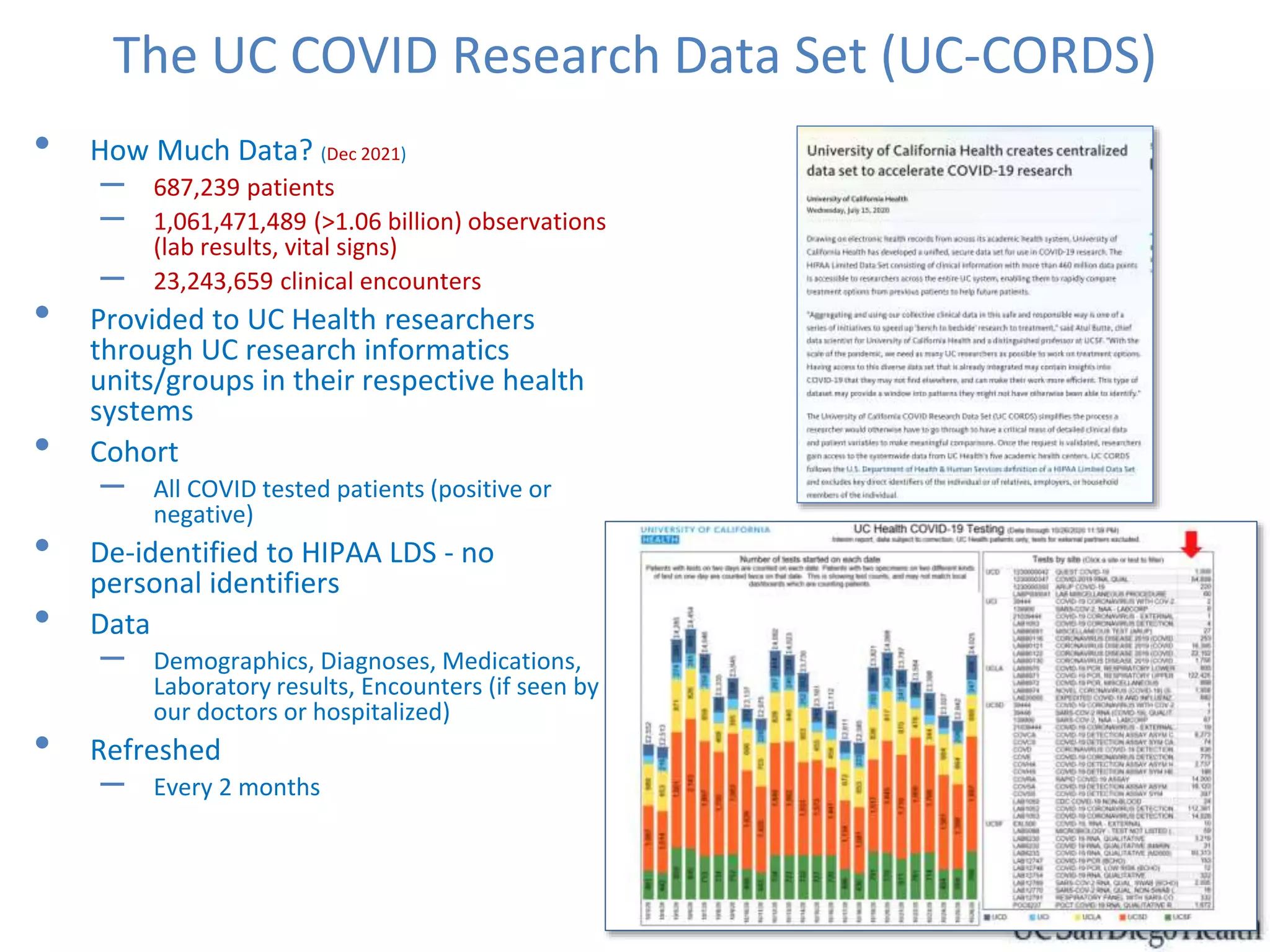

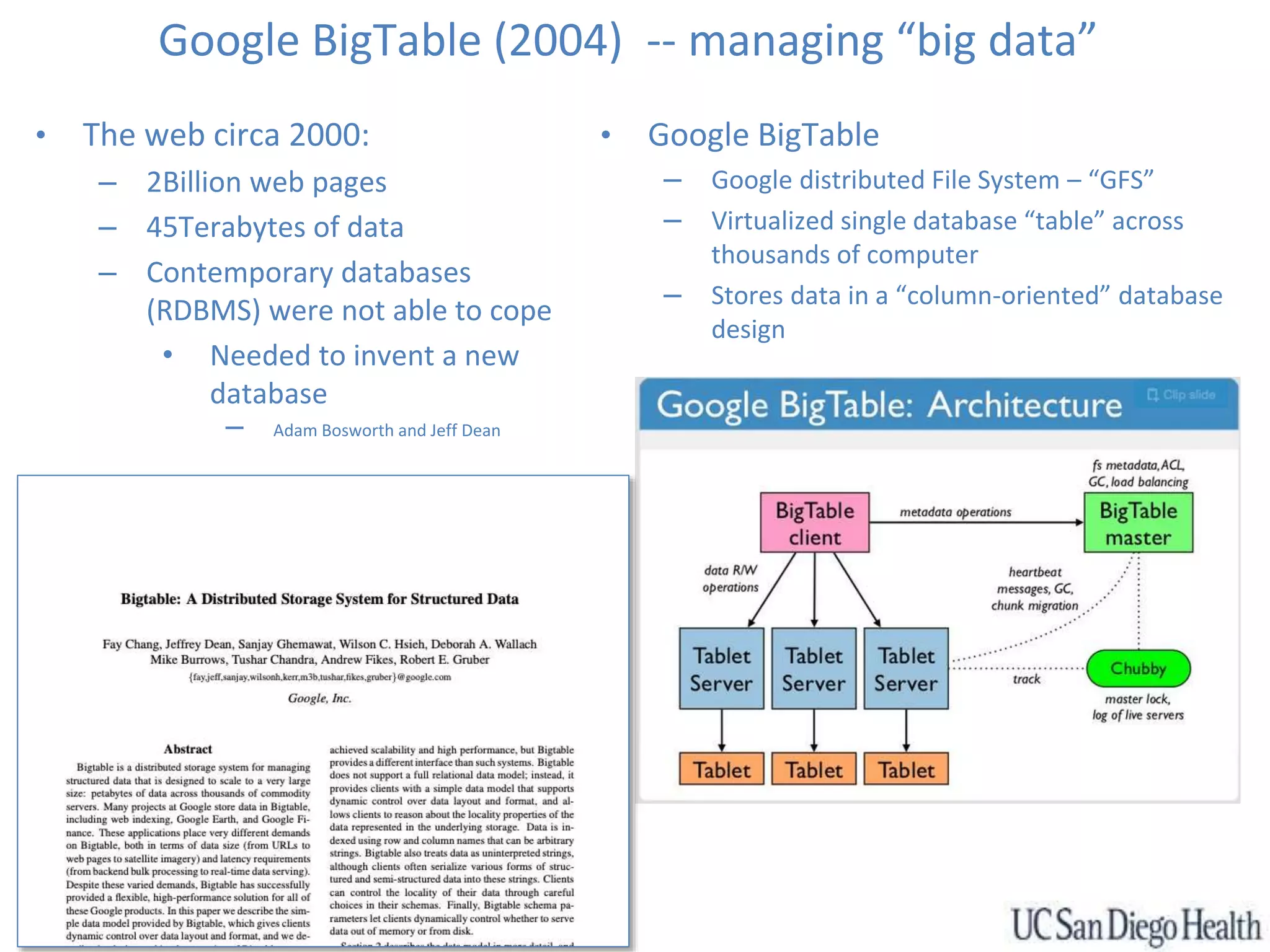

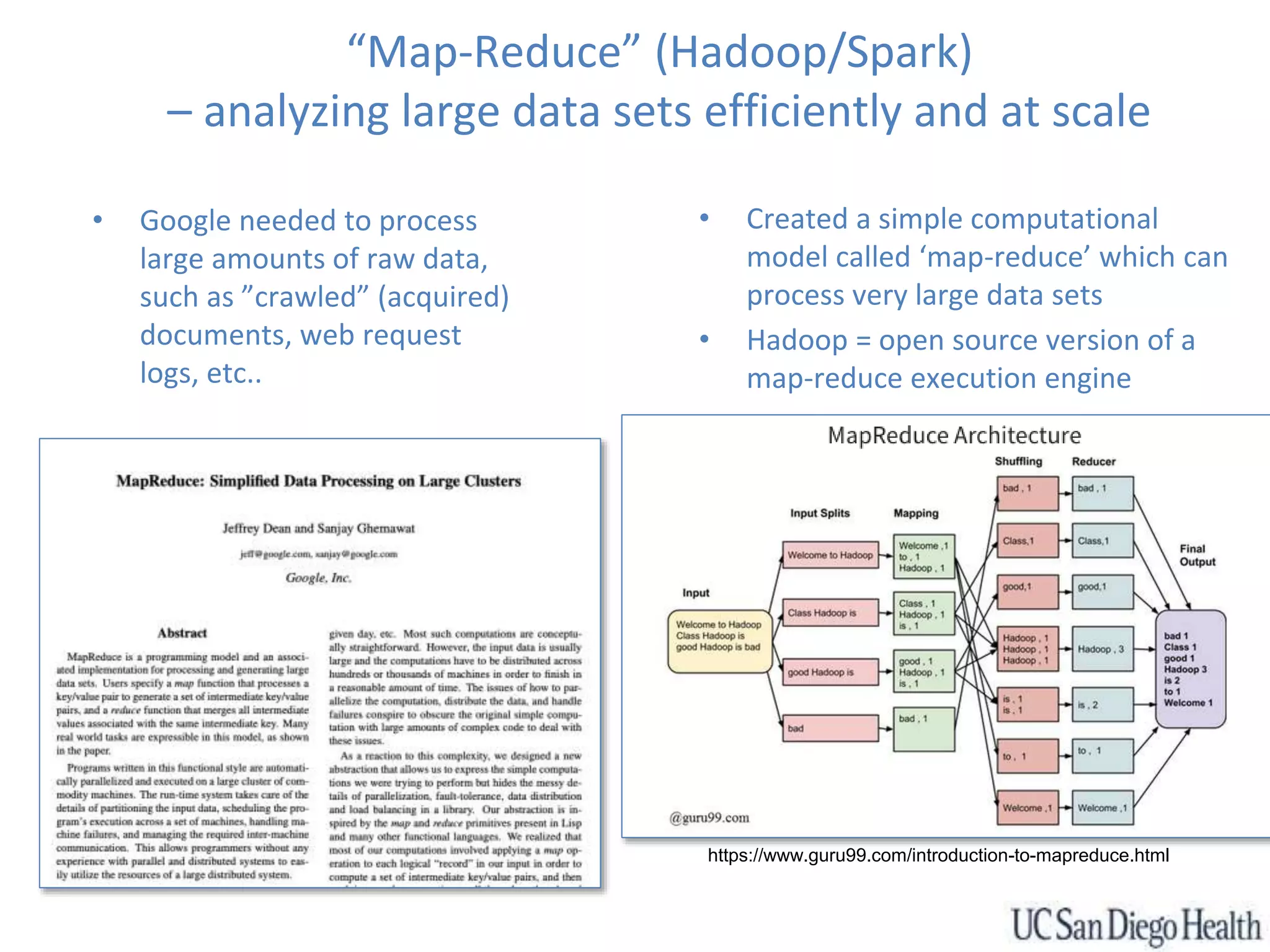

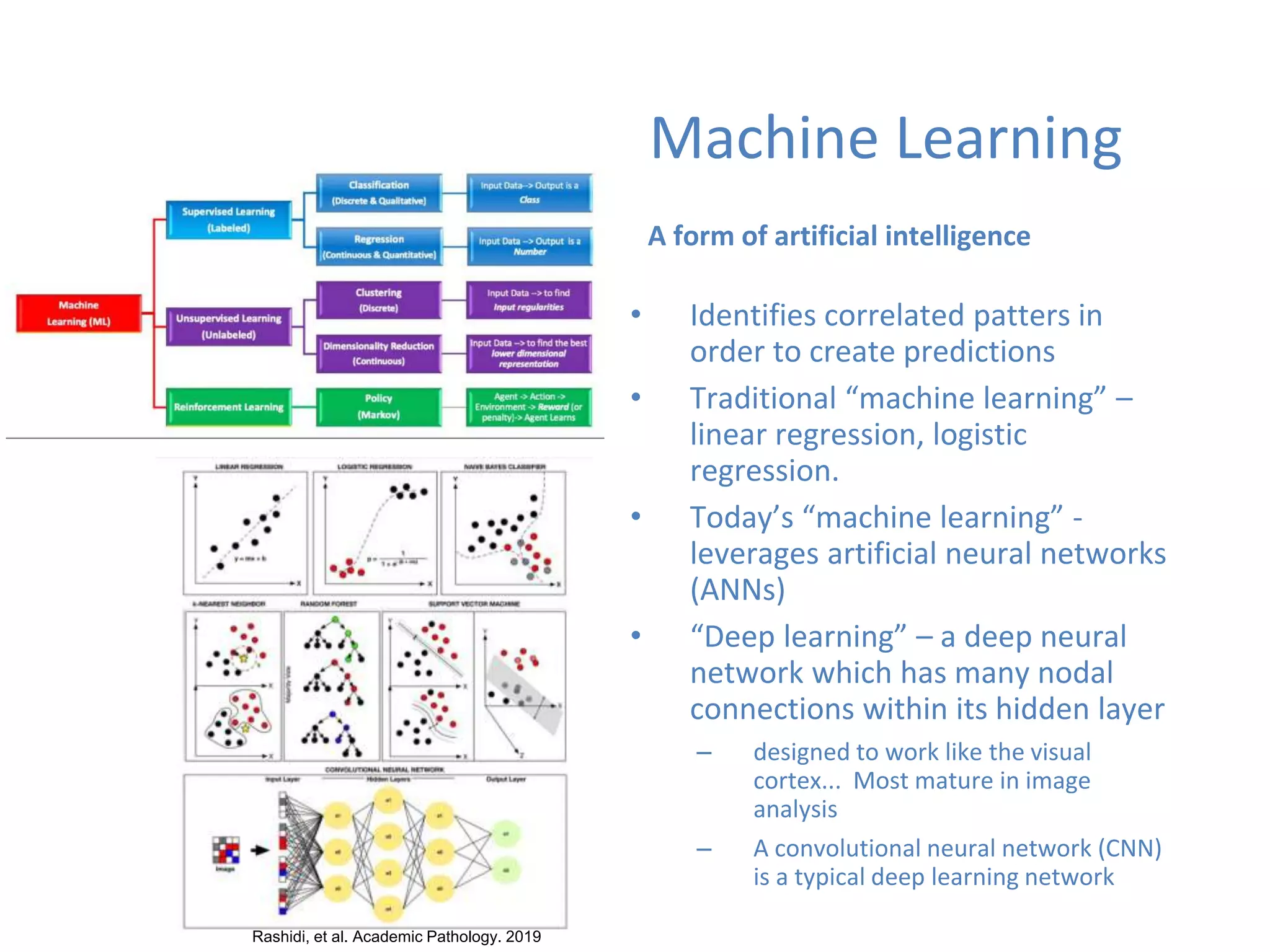

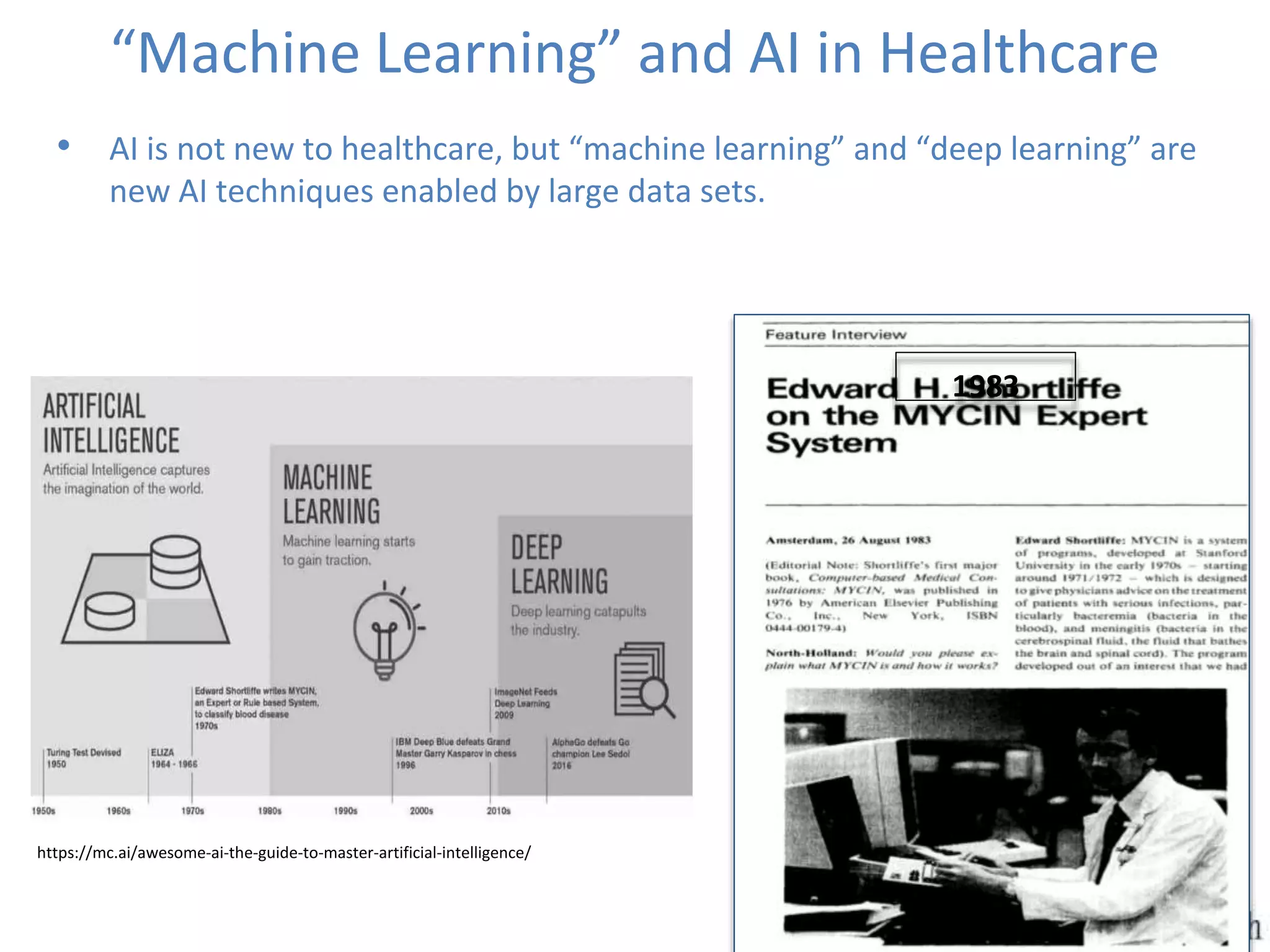

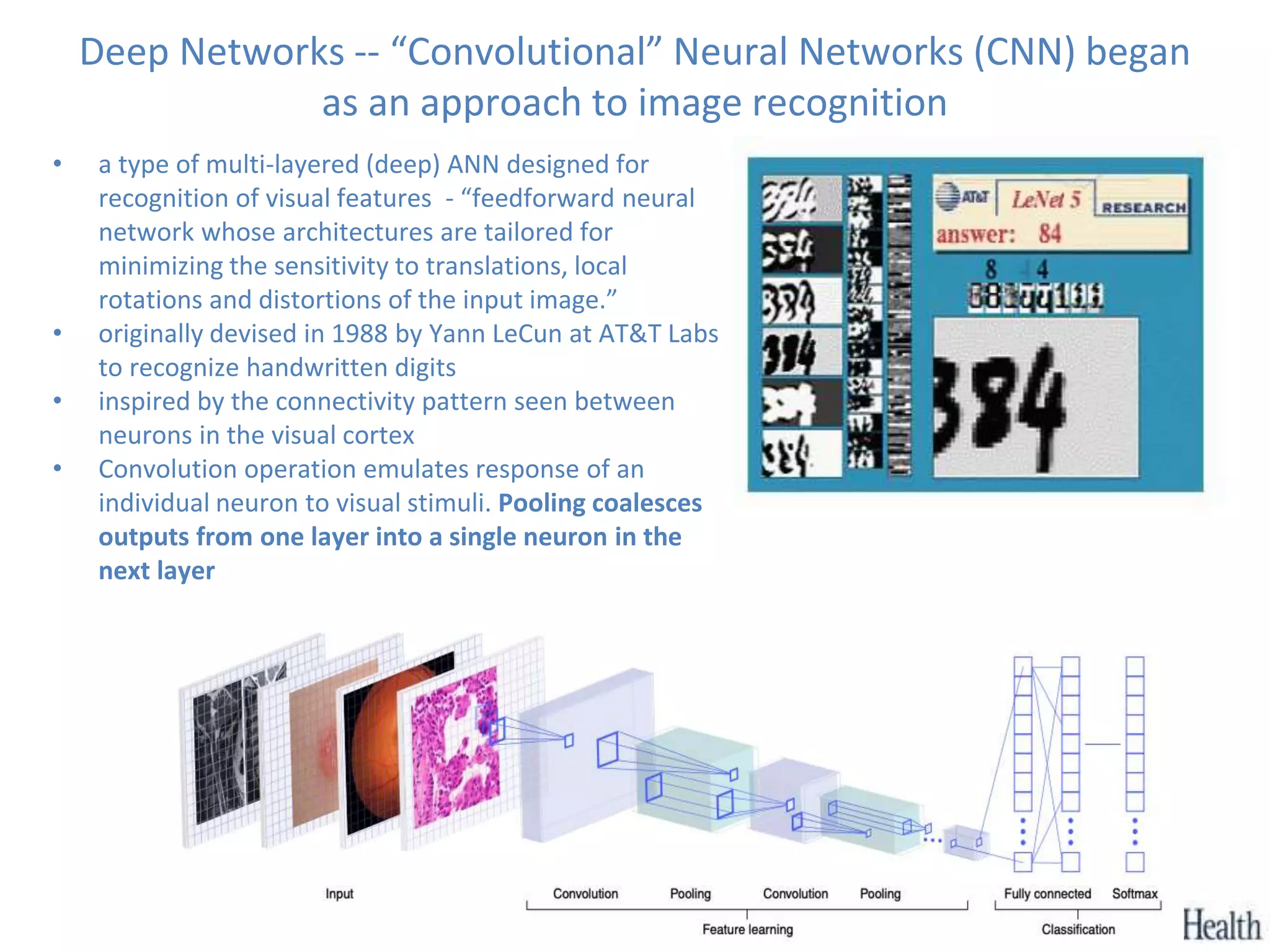

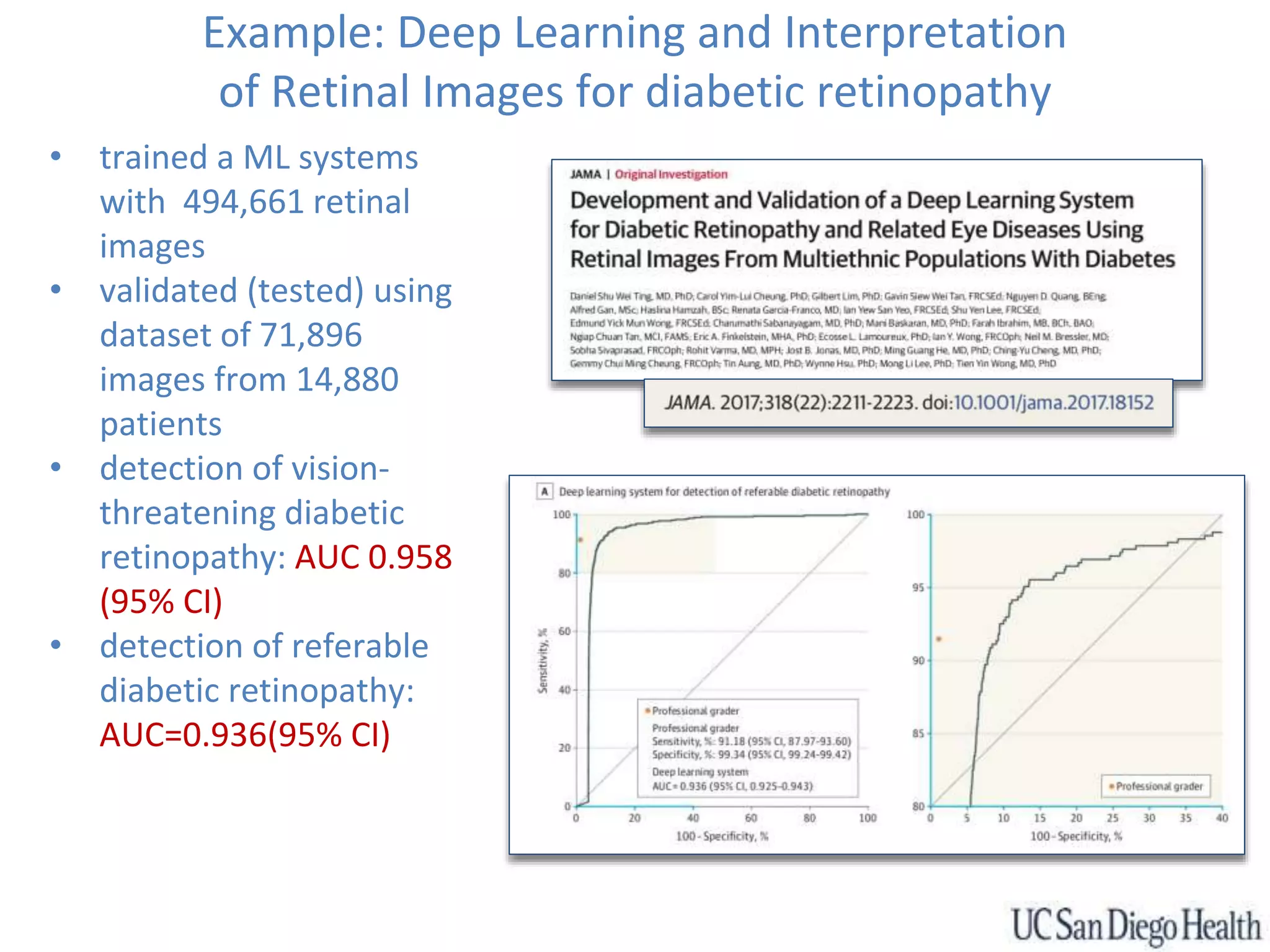

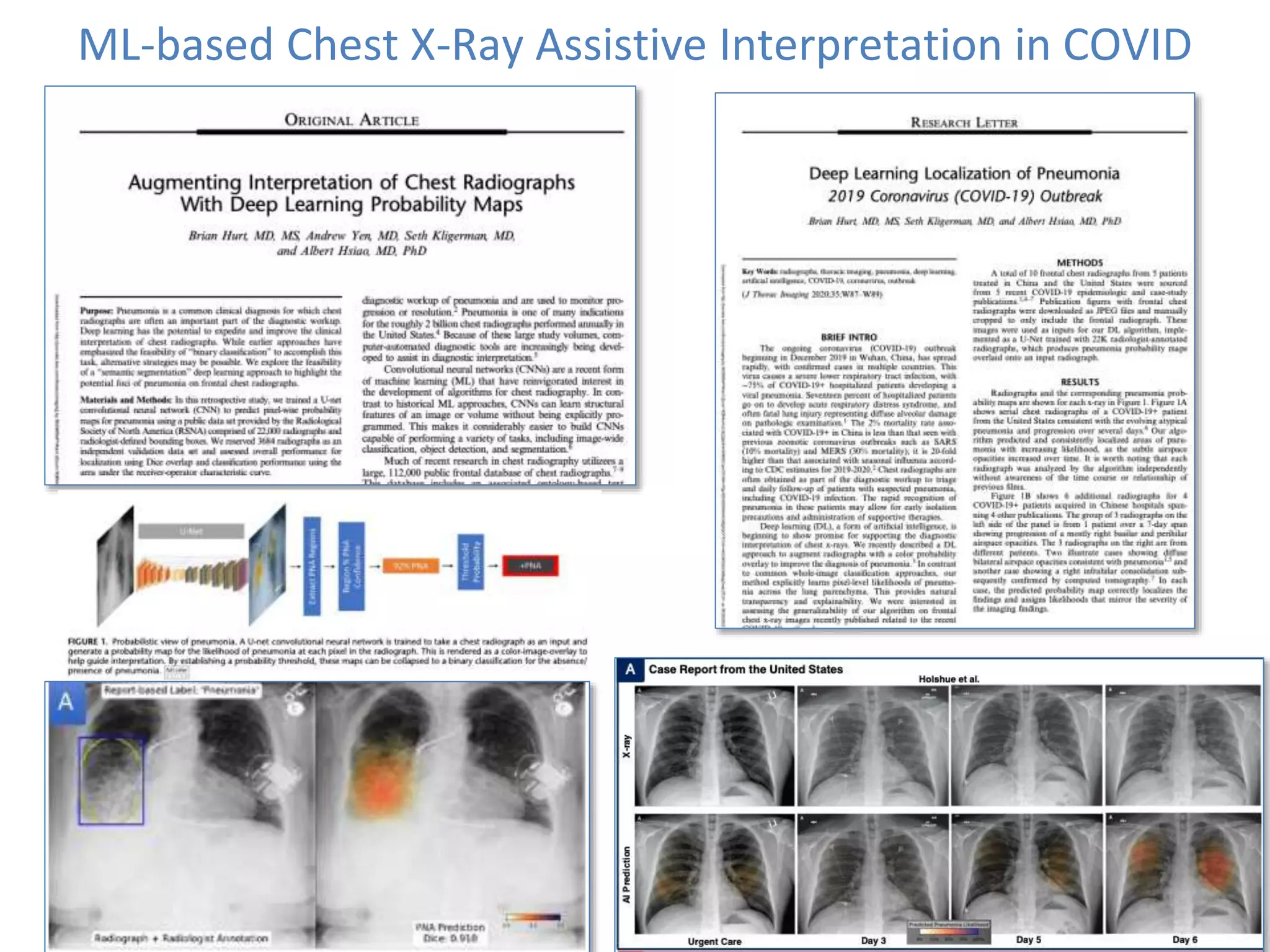

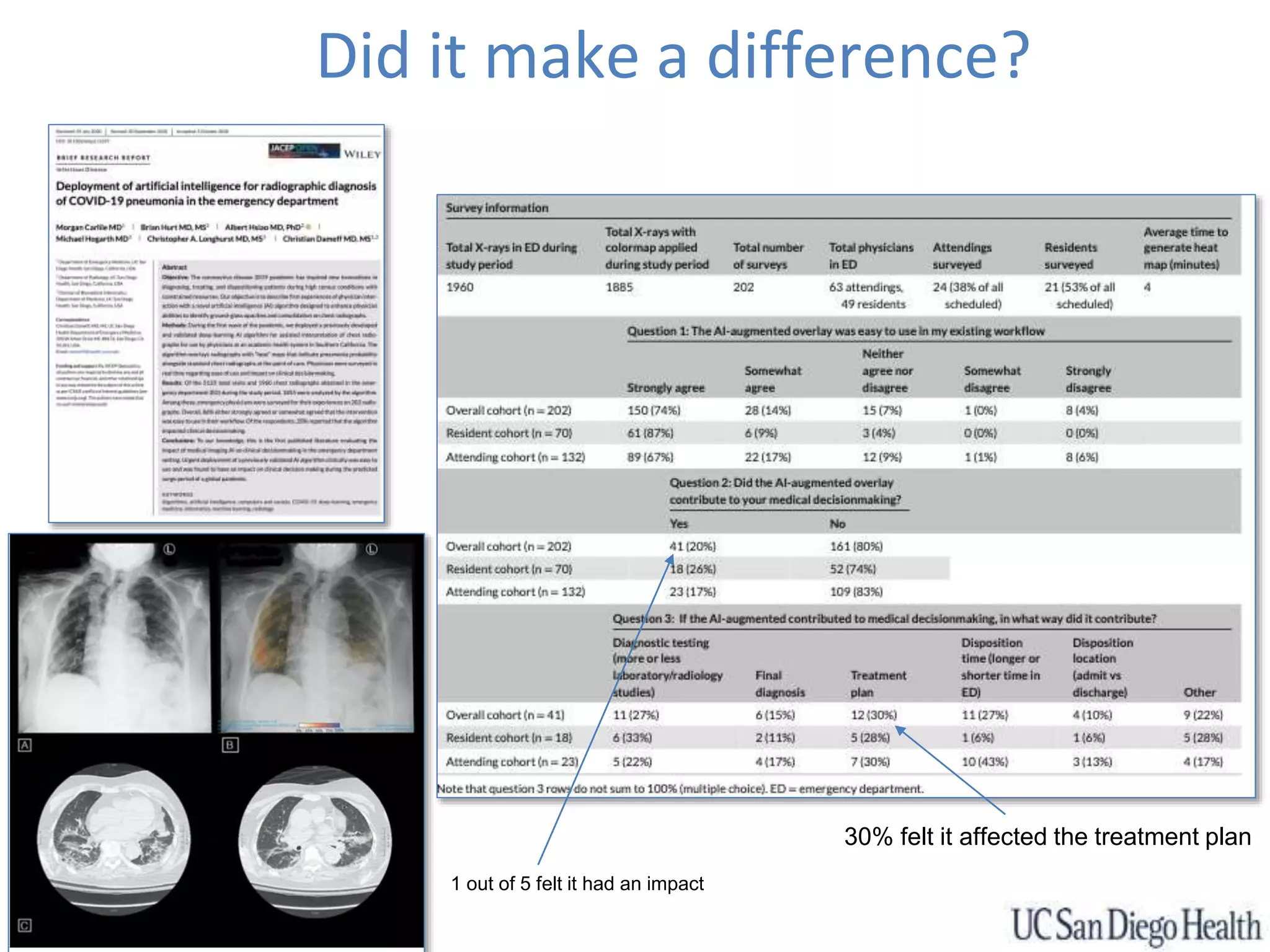

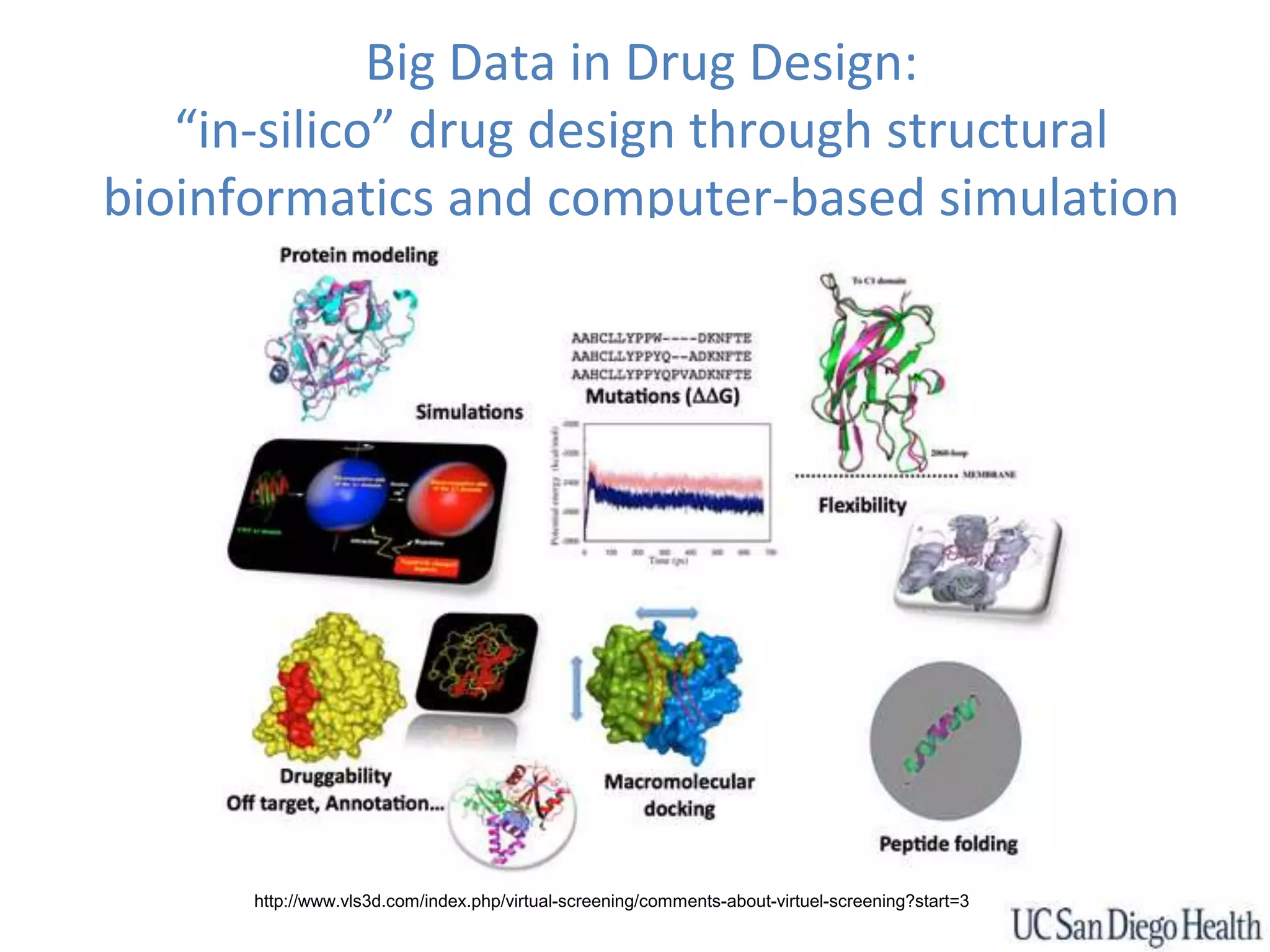

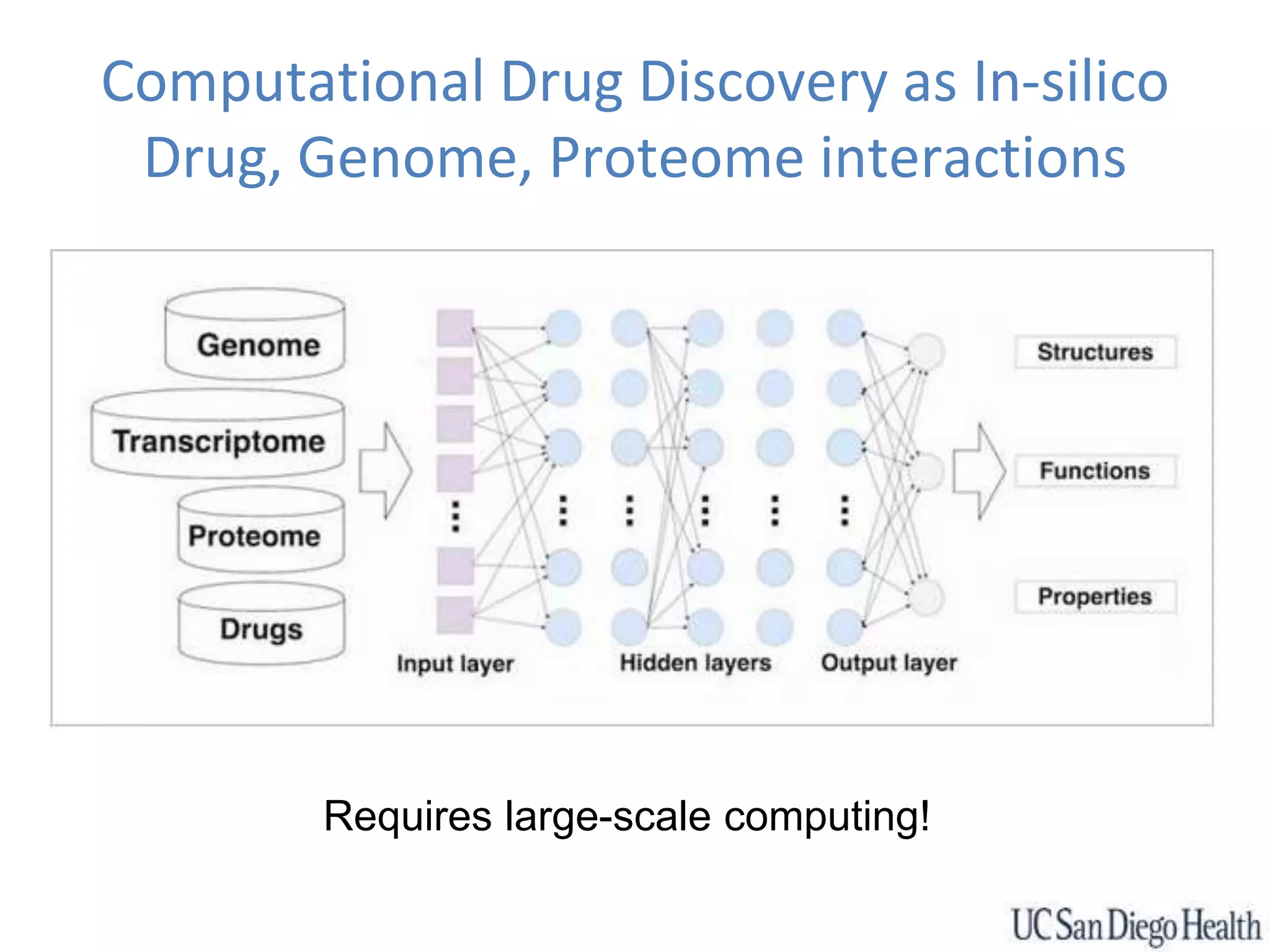

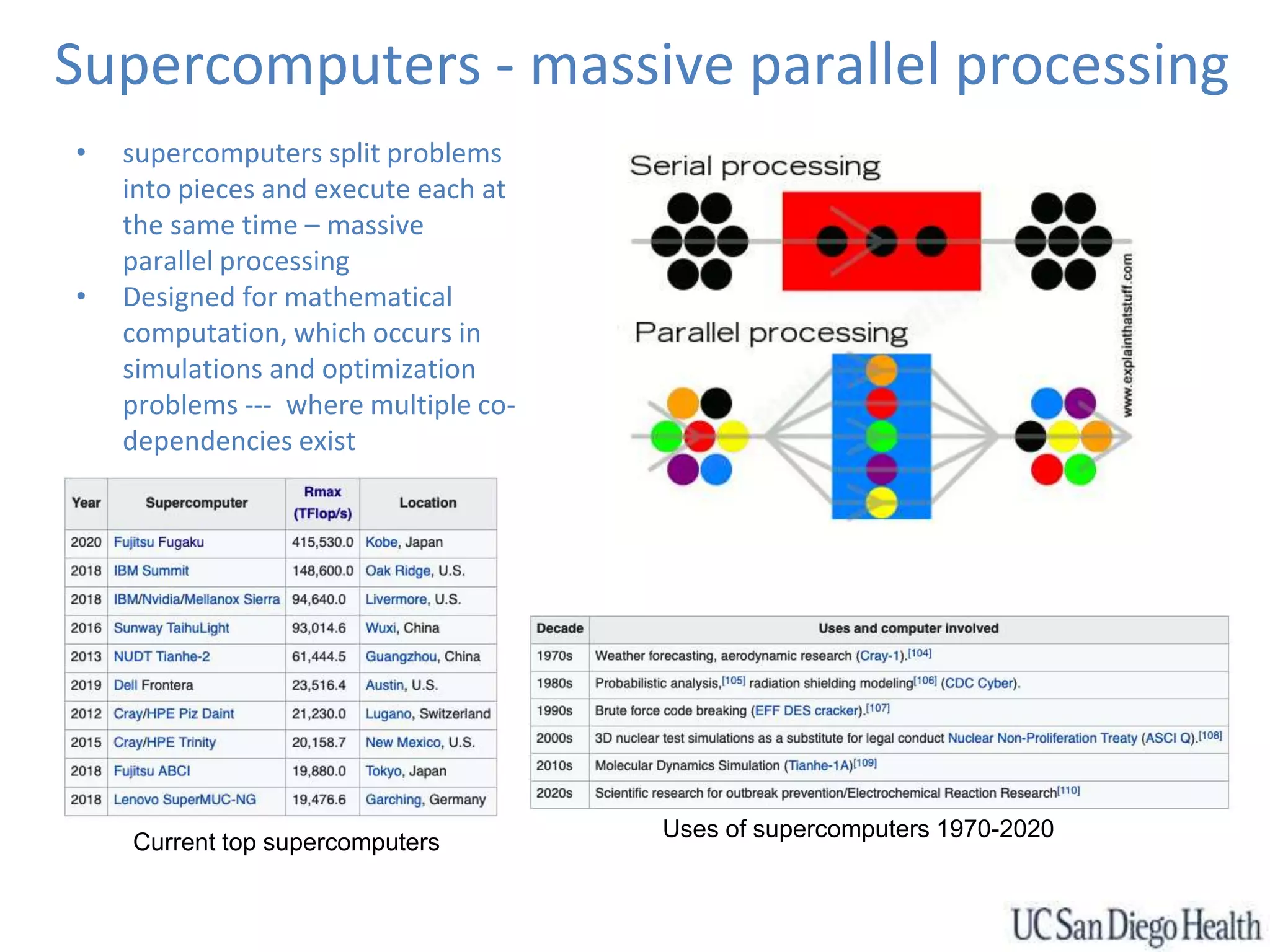

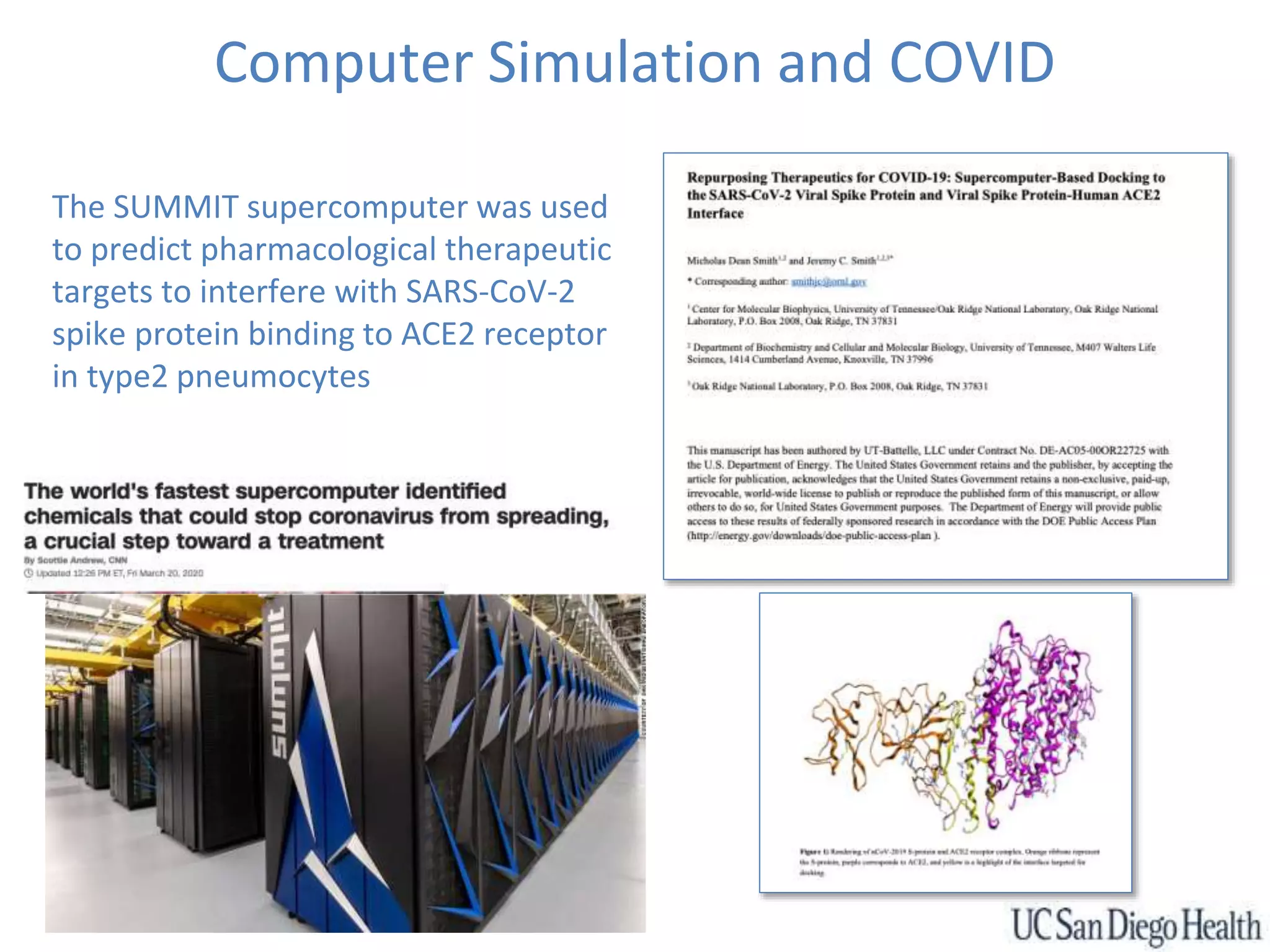

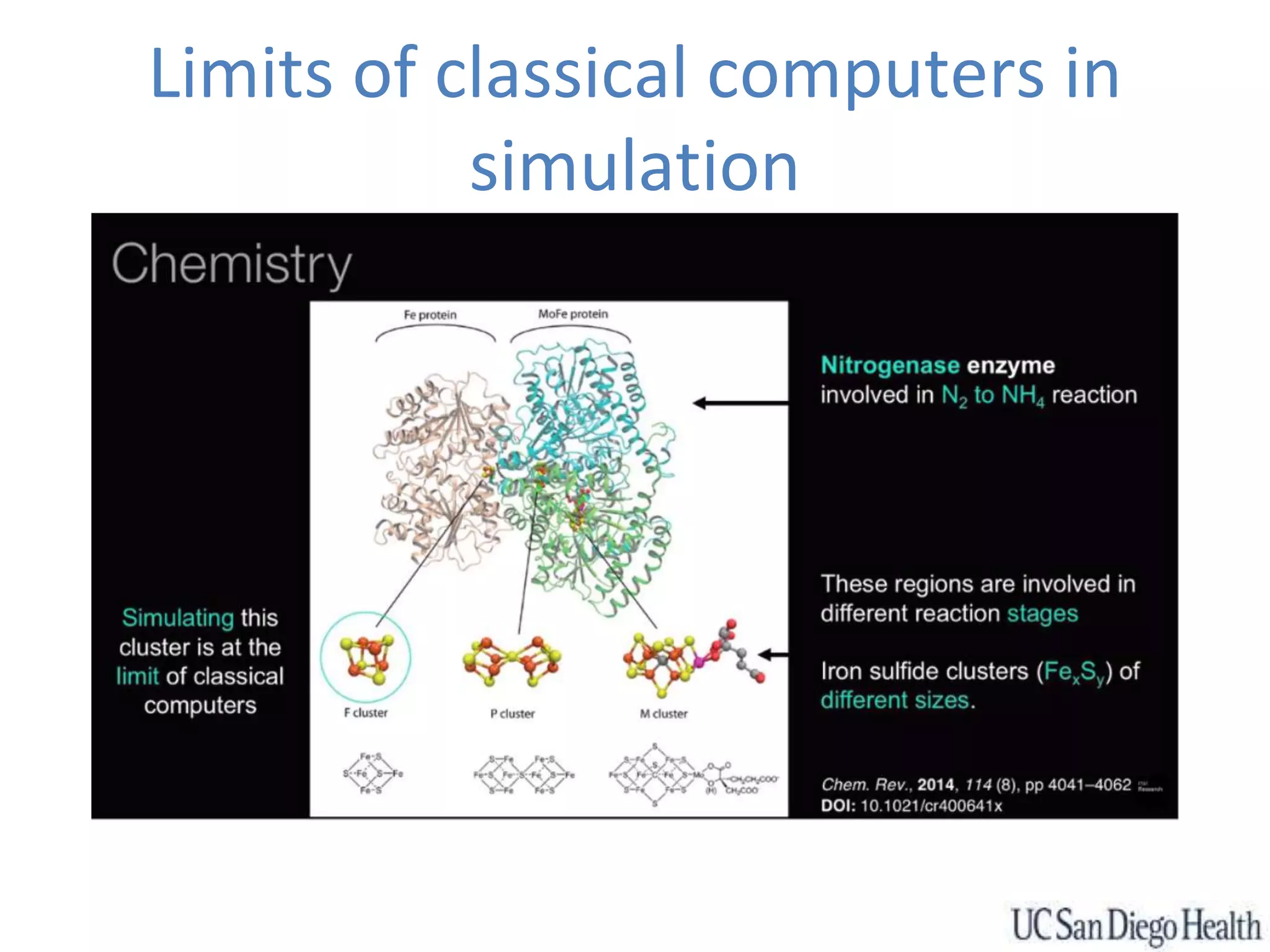

The document discusses the role of big data in clinical research, emphasizing its use in predicting trial feasibility, improving participant matching, and supporting drug development through real-world evidence (RWE). It highlights innovations such as Google Bigtable and the application of artificial intelligence and machine learning in healthcare, particularly in image analysis and drug design. It also outlines data challenges in healthcare, including privacy concerns and the difficulty of harmonizing data from multiple sources.