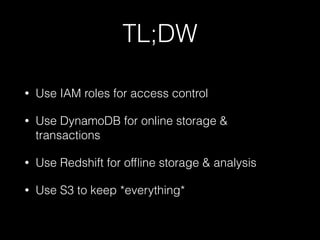

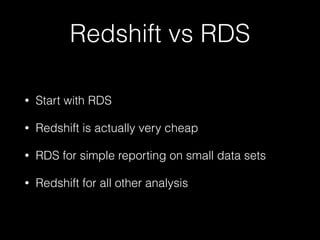

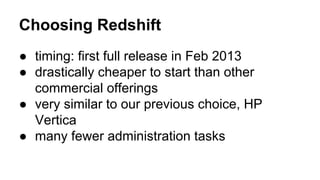

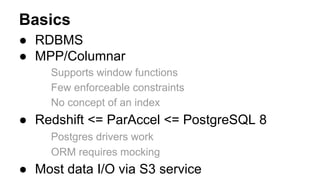

The AWS Chicago meetup featured talks on several AWS data storage and processing services, including DynamoDB, Redshift, and RDS. A central theme was where Redshift fits for data analysis: speakers pointed to its cost-effectiveness and how easily it integrates with existing systems. The talks also covered best practices and recommendations for optimizing data workflows in analytics environments.