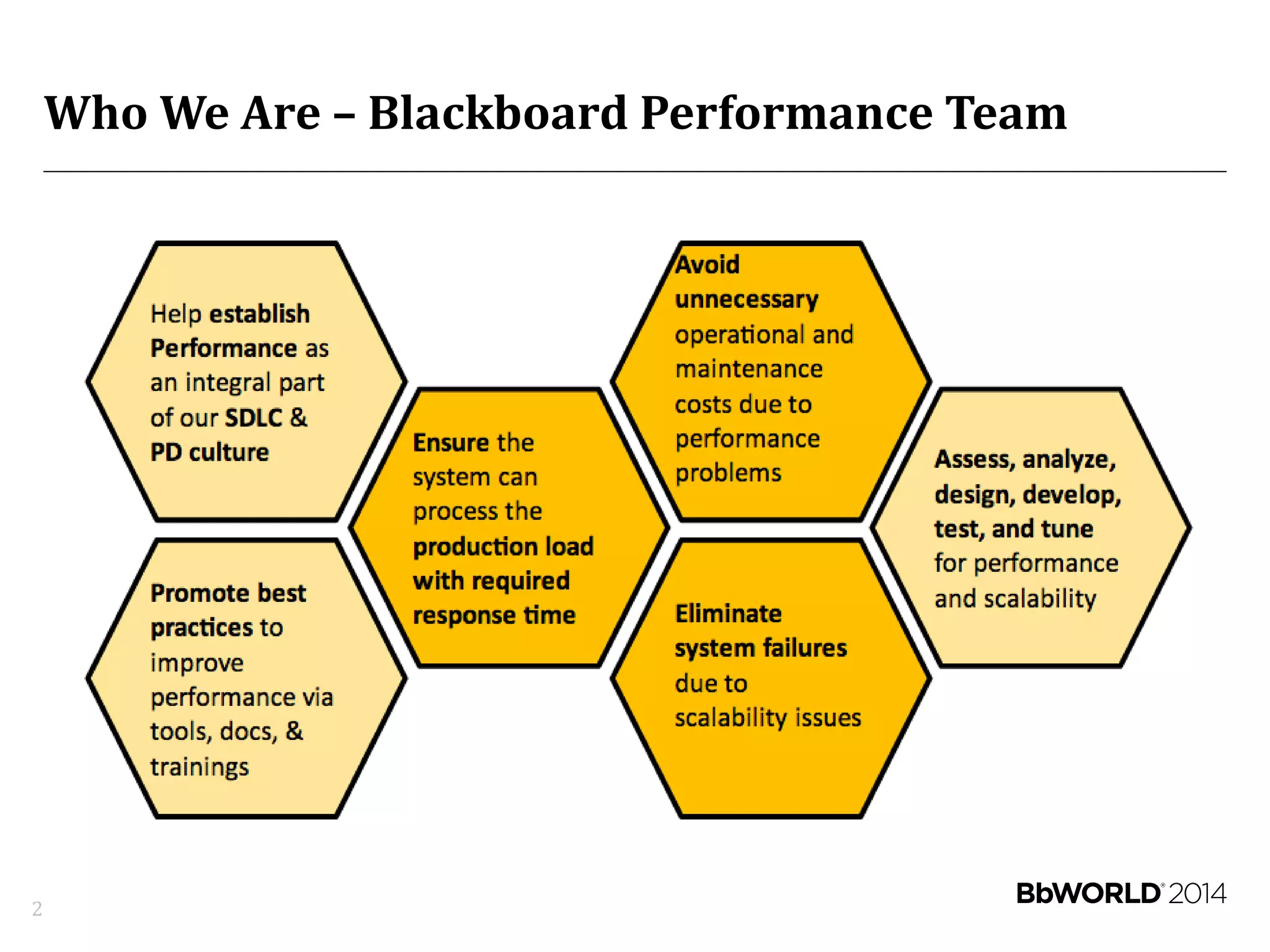

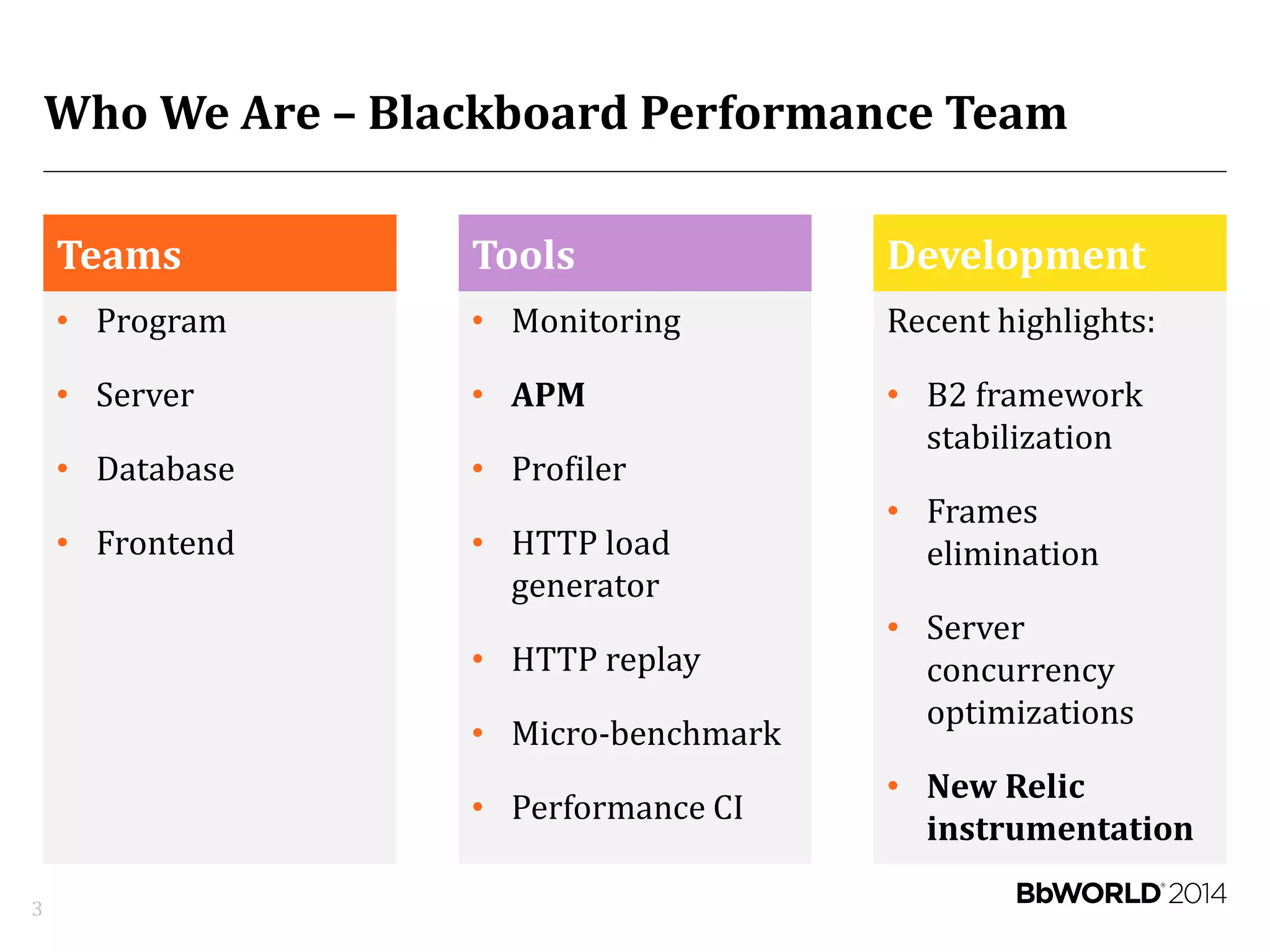

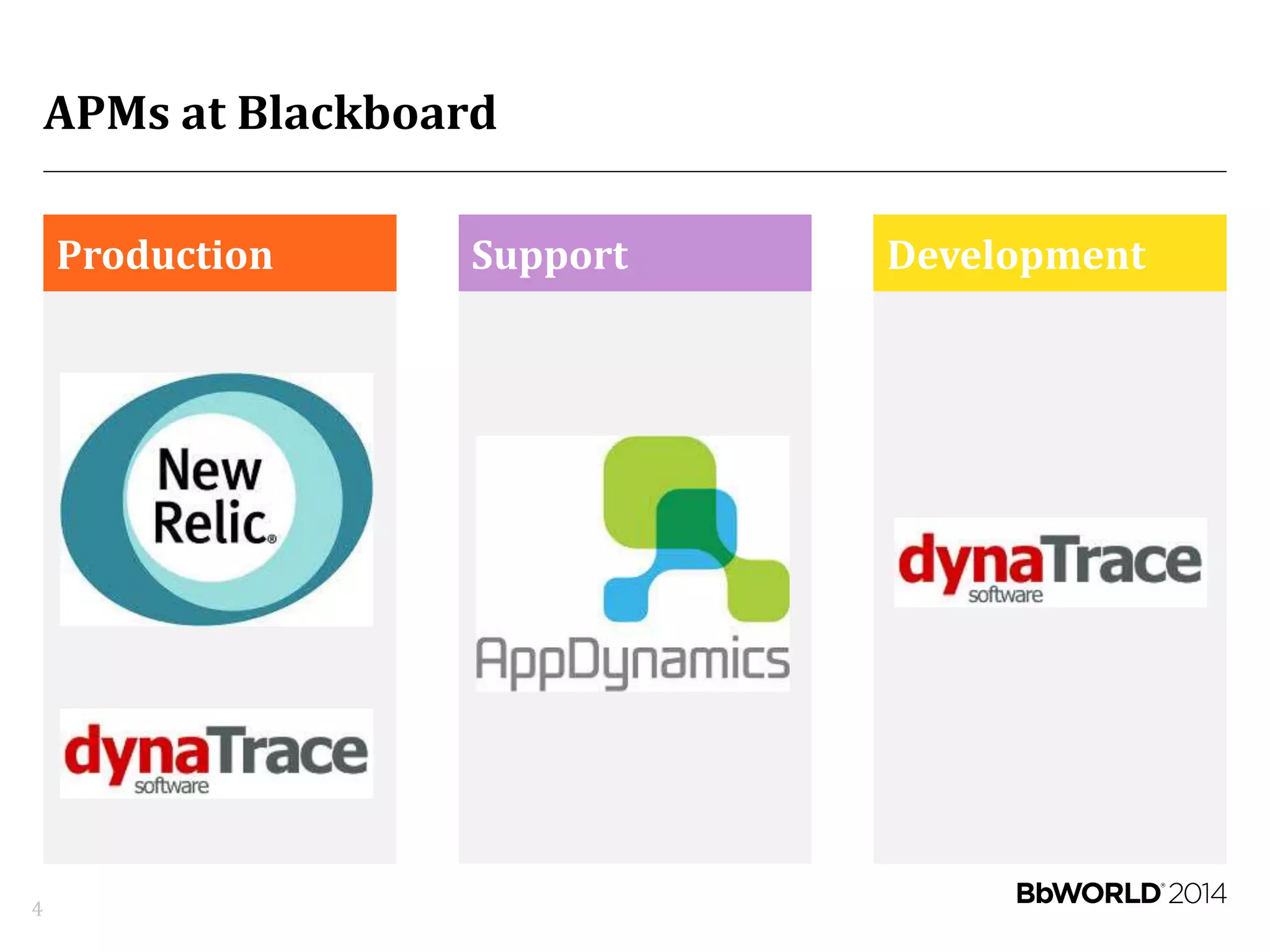

This document discusses application performance management (APM) tools at Blackboard. Key points:

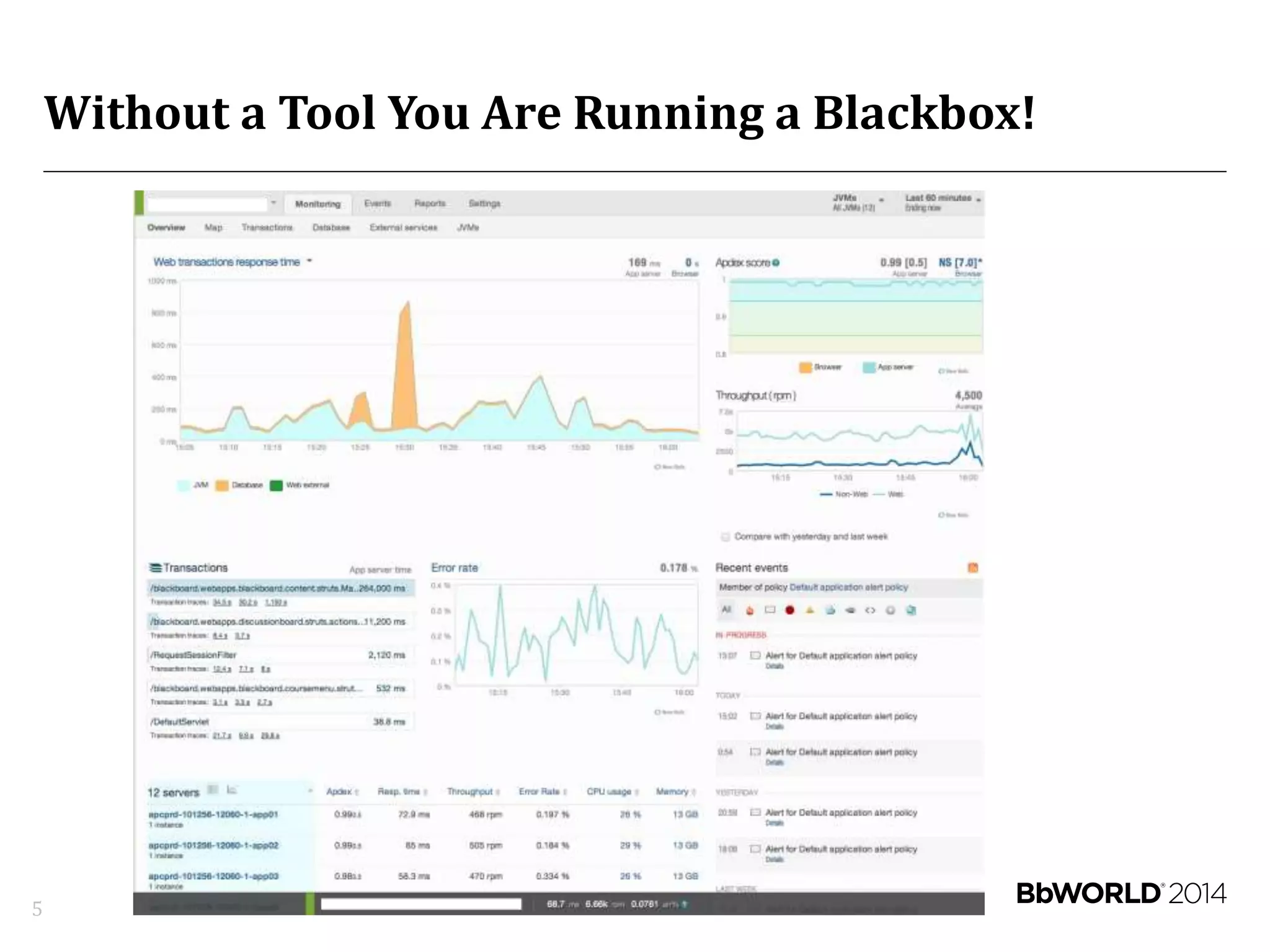

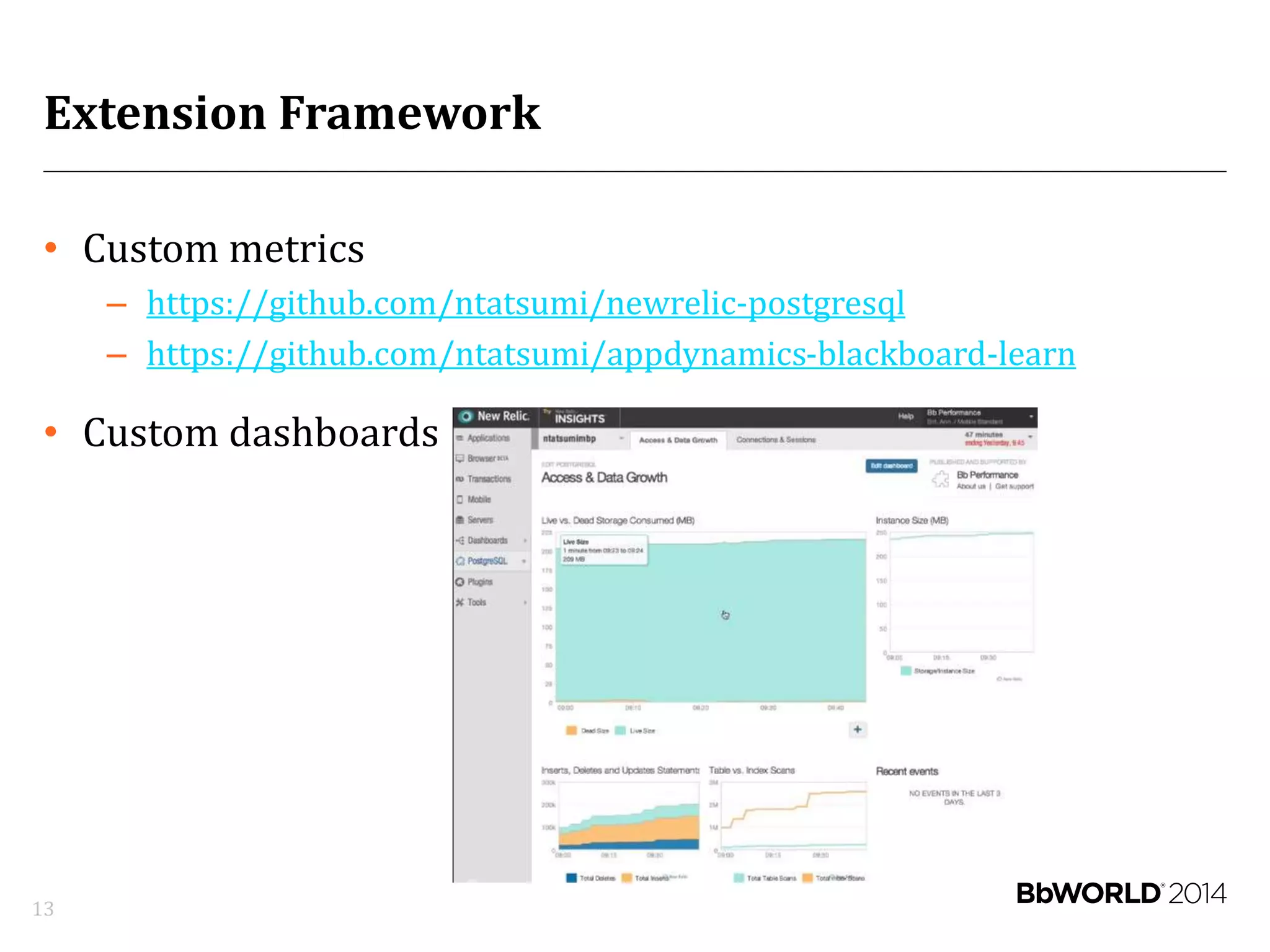

- The Blackboard performance team monitors servers, databases, and frontends using tools like New Relic, load generators, and profilers.

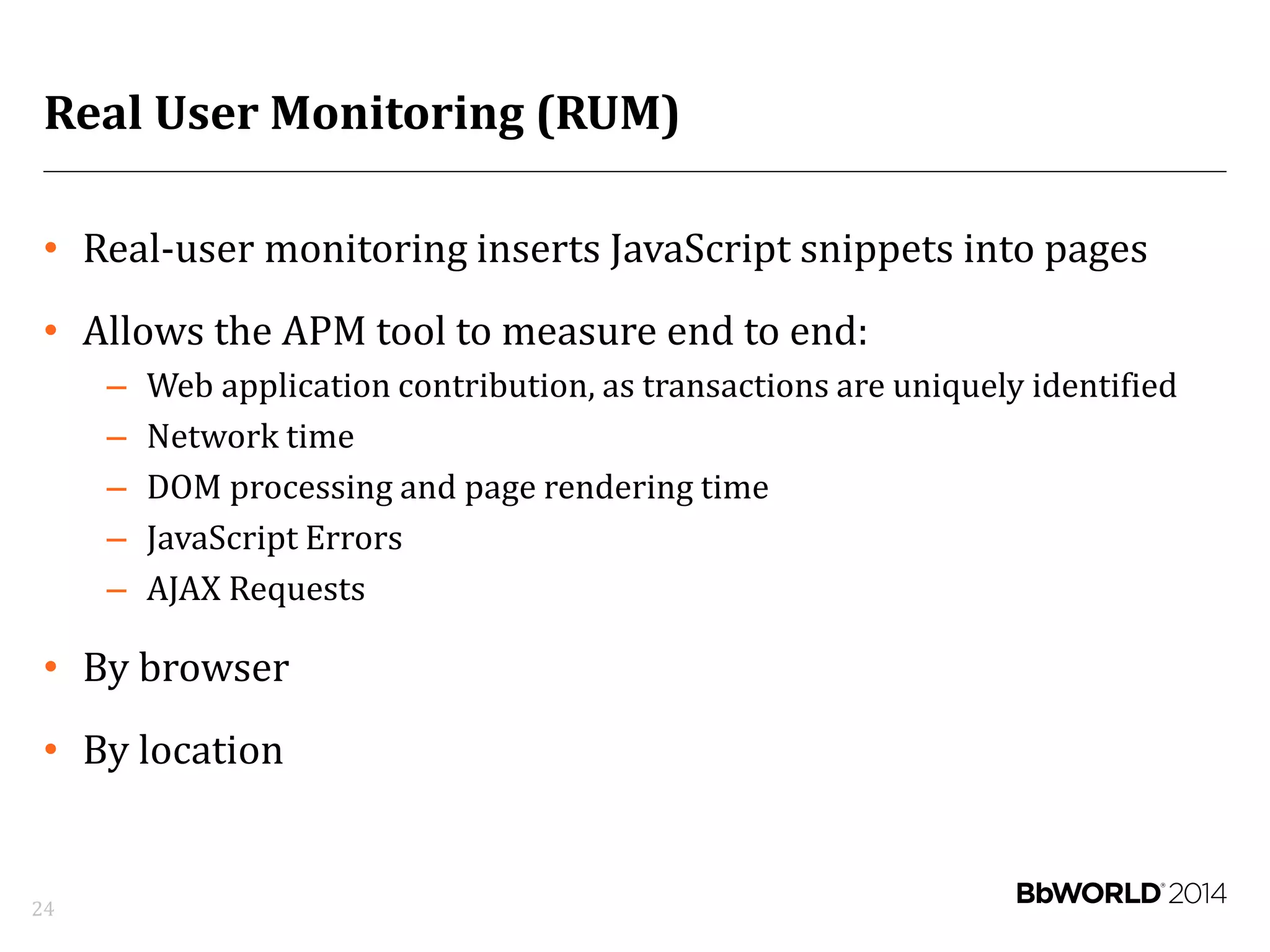

- APM tools provide visibility into performance issues through centralized monitoring and help teams identify abnormal behaviors and anti-patterns and diagnose root causes.

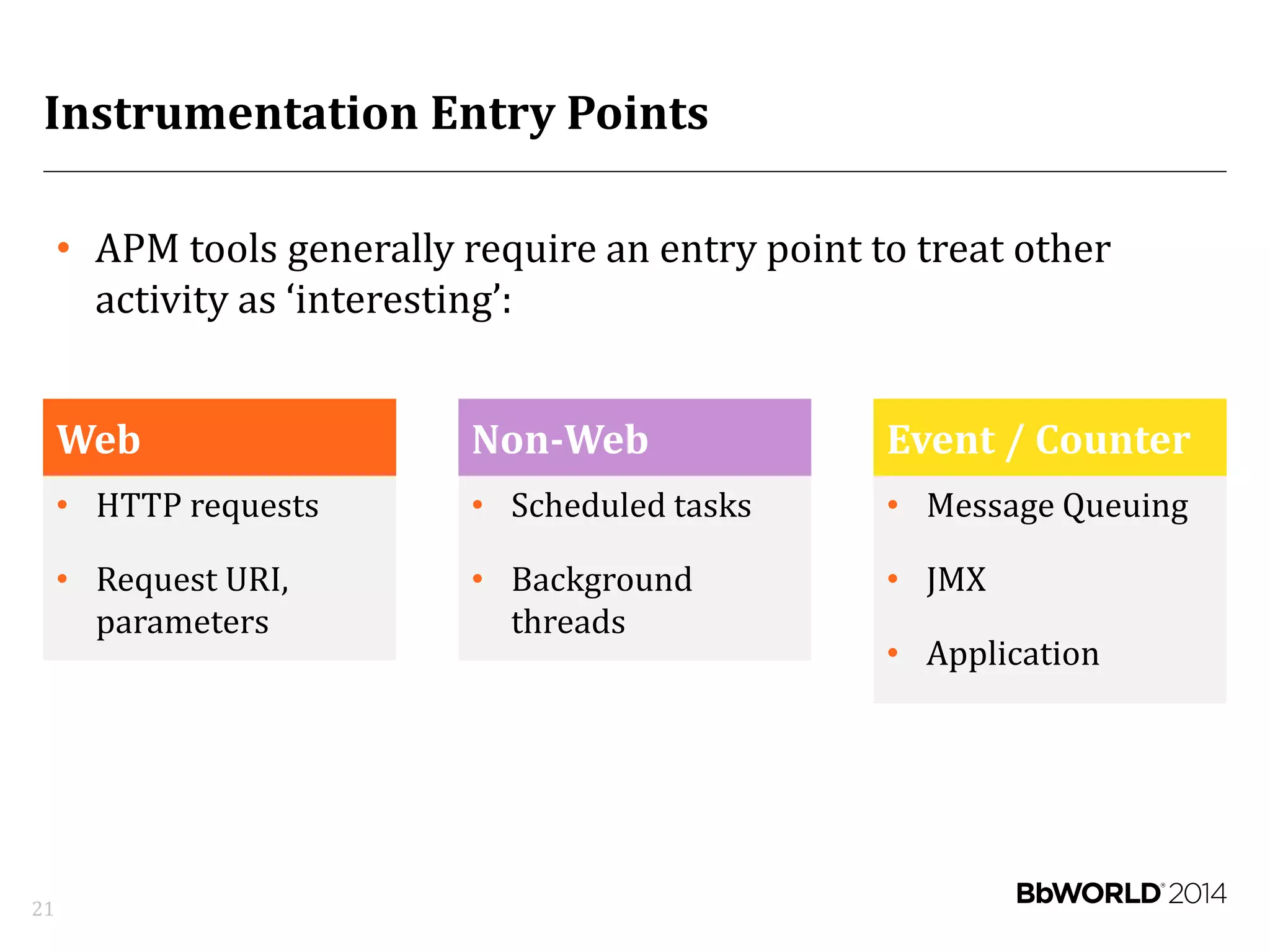

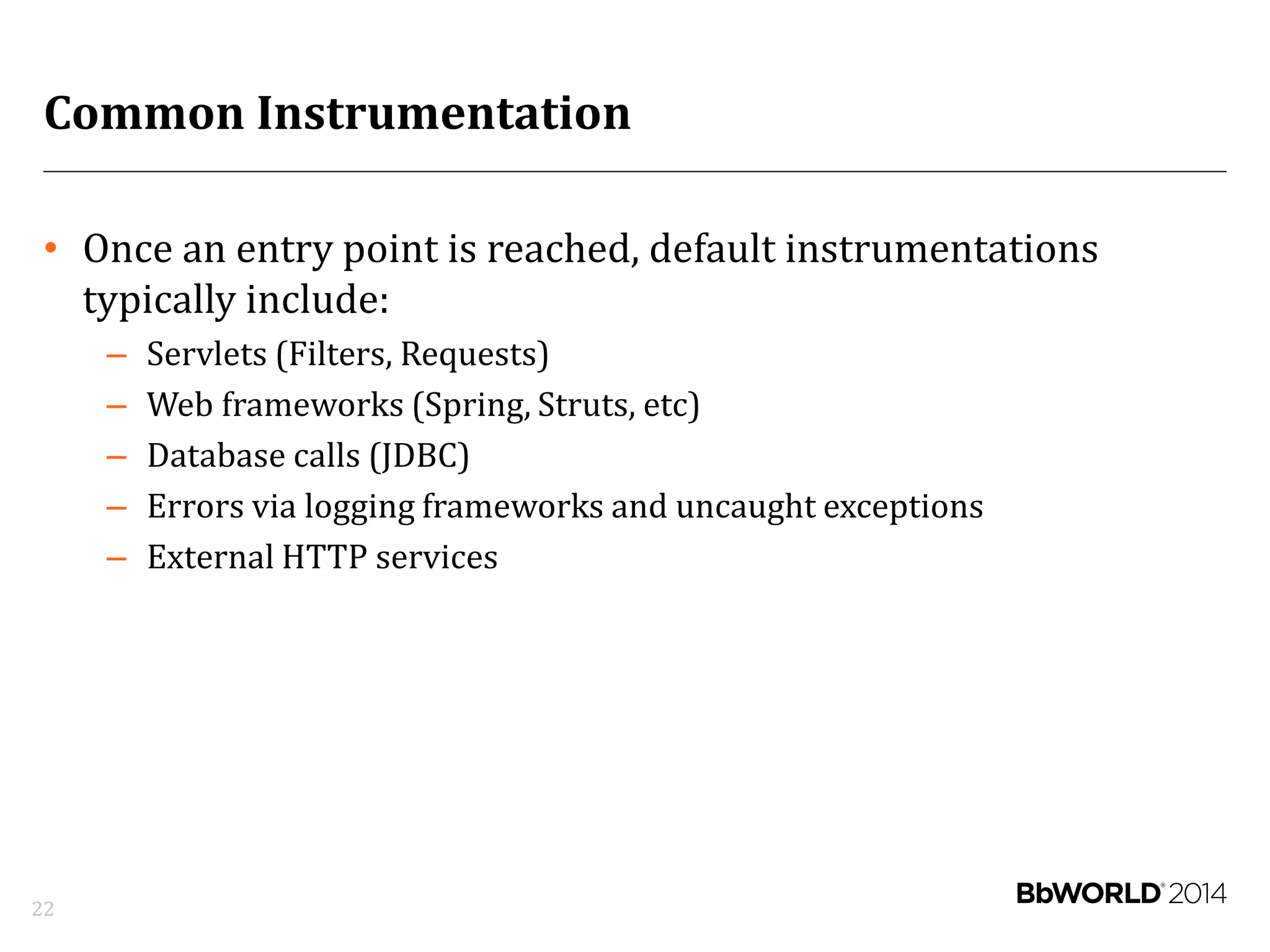

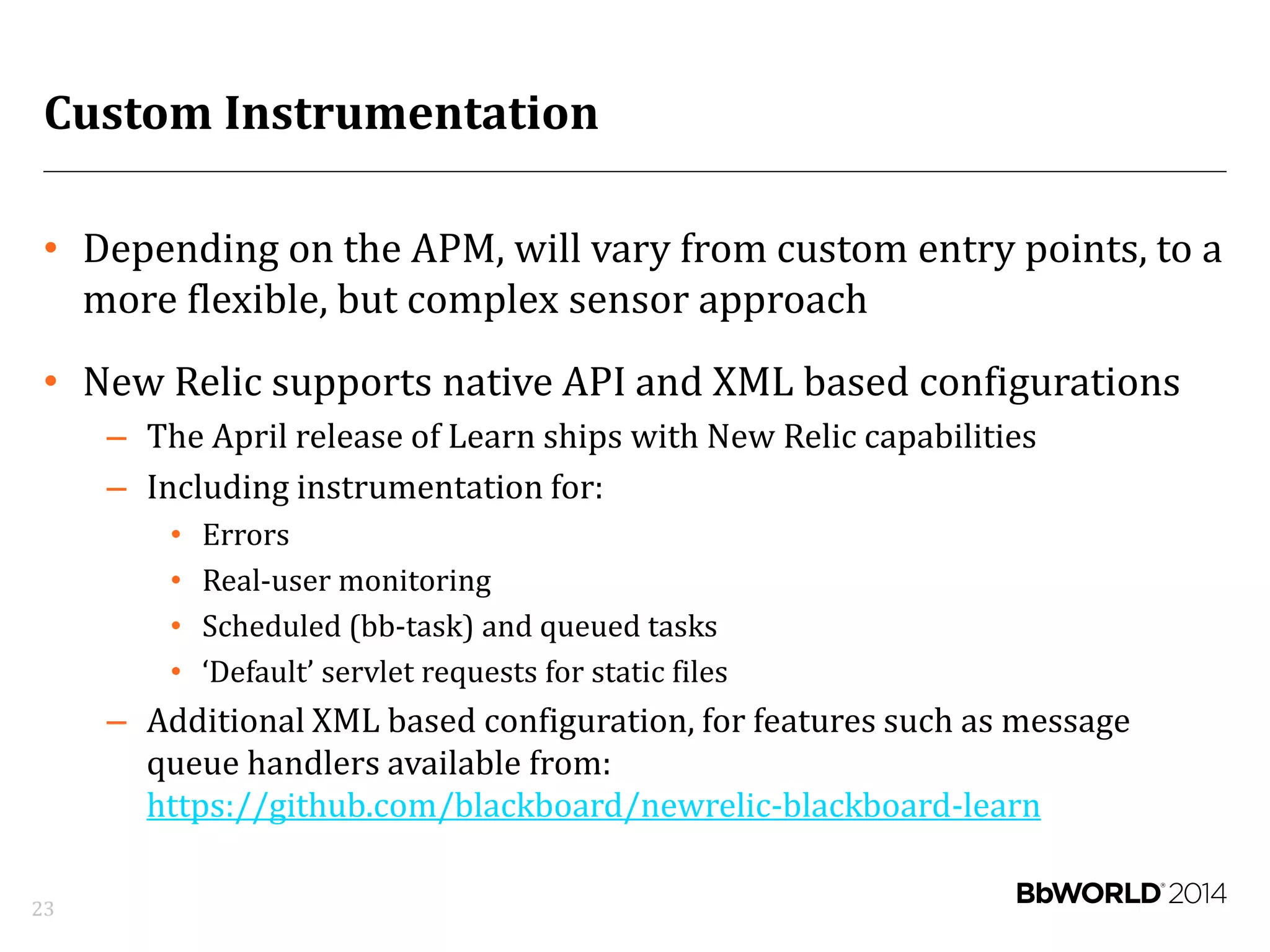

- Keys to success include choosing the right APM tool, automating deployments, constructing effective alert policies, and properly instrumenting applications.

- The document demonstrates New Relic and provides best practices for gradual deployment, right-sizing resources, and using APM data for troubleshooting.
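
As a rough illustration of what "constructing effective alert policies" can involve, the sketch below alerts only when a metric stays above a threshold for several samples in a sliding window, so a single spike does not page anyone. This is an assumption about one common approach, not the policy used at Blackboard and not New Relic's API; `AlertPolicy`, `first_alert_index`, and all thresholds and sample values are hypothetical.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Iterable, Optional


@dataclass
class AlertPolicy:
    """Hypothetical policy: alert when the metric exceeds `threshold`
    in at least `min_violations` of the last `window` samples."""
    threshold: float
    window: int
    min_violations: int
    _samples: deque = field(default_factory=deque, init=False, repr=False)

    def observe(self, value: float) -> bool:
        """Record one sample and report whether the policy is violated."""
        self._samples.append(value)
        # Keep only the most recent `window` samples.
        if len(self._samples) > self.window:
            self._samples.popleft()
        violations = sum(1 for v in self._samples if v > self.threshold)
        return violations >= self.min_violations


def first_alert_index(policy: AlertPolicy, samples: Iterable[float]) -> Optional[int]:
    """Return the index of the first sample that triggers an alert, or None."""
    for i, value in enumerate(samples):
        if policy.observe(value):
            return i
    return None


if __name__ == "__main__":
    # Made-up response times in milliseconds, for illustration only.
    spike = [120, 900, 130, 110, 125, 140]
    sustained = [120, 600, 650, 130, 700, 710]
    print(first_alert_index(AlertPolicy(500.0, 5, 3), spike))
    print(first_alert_index(AlertPolicy(500.0, 5, 3), sustained))
```

Requiring several violations within a window trades a little detection latency for fewer false alarms; the right window and threshold depend on the application and would need tuning against real APM data.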