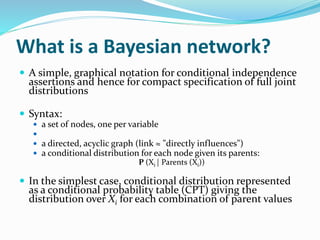

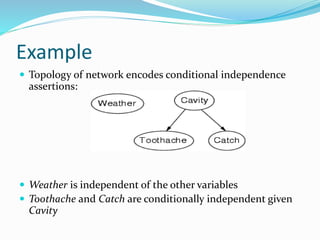

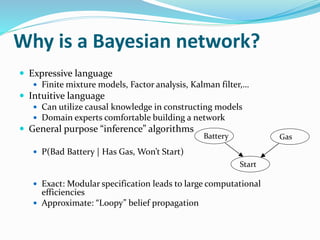

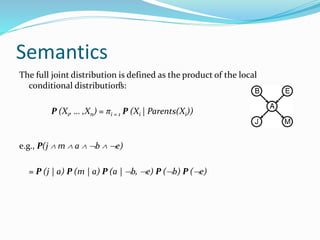

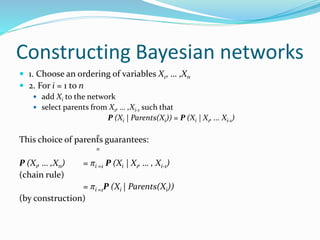

This document discusses Bayesian networks, which use a directed acyclic graph to represent conditional independence relationships among random variables. Because each node stores only a conditional probability distribution given its parents, the network specifies the full joint probability distribution compactly. An example network shows how the topology encodes two facts: Weather is independent of the other variables, and Toothache and Catch (the dentist's probe catching in the tooth) are conditionally independent given Cavity. The document also explains why Bayesian networks are used and learned. They offer an intuitive way to incorporate causal knowledge, and their structure allows inference queries to be answered efficiently.
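The example network can be sketched in plain Python to make these claims concrete. This is a minimal illustration, not the method from the slides. The CPT numbers and all function names (`joint`, `prob`, `conditional`, and the event helpers) are hypothetical and were chosen only for demonstration. The joint is computed with the chain-rule factorisation $P(x_1,\dots,x_n) = \prod_i P(x_i \mid \mathrm{parents}(X_i))$. The code then checks the two independence claims and answers a query by brute-force enumeration. Enumeration is used here for clarity. It is exponential in the number of variables, so it is not the kind of efficient inference the summary refers to.

```python
import math
from itertools import product

# Hypothetical CPT values for illustration only (not taken from the slides).
# Weather has no parents and no children, so it is independent of the rest.
P_WEATHER = {"sunny": 0.6, "rain": 0.1, "cloudy": 0.29, "snow": 0.01}

# Cavity is the only parent of both Toothache and Catch.
P_CAVITY = 0.2                                      # P(Cavity = true)
P_TOOTHACHE_GIVEN_CAVITY = {True: 0.6, False: 0.1}  # P(Toothache = true | Cavity)
P_CATCH_GIVEN_CAVITY = {True: 0.9, False: 0.2}      # P(Catch = true | Cavity)
BOOLS = (True, False)


def bernoulli(p_true, value):
    """Probability that a Boolean variable with P(true) = p_true takes `value`."""
    return p_true if value else 1.0 - p_true


def joint(weather, cavity, toothache, catch):
    """Chain rule: product over nodes of P(x_i | parents(X_i))."""
    return (P_WEATHER[weather]
            * bernoulli(P_CAVITY, cavity)
            * bernoulli(P_TOOTHACHE_GIVEN_CAVITY[cavity], toothache)
            * bernoulli(P_CATCH_GIVEN_CAVITY[cavity], catch))


def worlds():
    """Every complete assignment (weather, cavity, toothache, catch)."""
    return product(P_WEATHER, BOOLS, BOOLS, BOOLS)


def prob(event):
    """Inference by enumeration: sum the joint over worlds where `event` holds."""
    return sum(joint(*w) for w in worlds() if event(*w))


def conditional(query, evidence):
    """P(query | evidence) = P(query and evidence) / P(evidence)."""
    return prob(lambda *w: query(*w) and evidence(*w)) / prob(evidence)


# Hypothetical event helpers over a world (weather, cavity, toothache, catch).
def has_cavity(w, cav, t, c): return cav
def has_toothache(w, cav, t, c): return t
def has_catch(w, cav, t, c): return c
def is_sunny(w, cav, t, c): return w == "sunny"
def toothache_and_catch(w, cav, t, c): return t and c


# Compactness: free parameters in the full joint table vs. in the CPTs.
full_joint_params = len(P_WEATHER) * 2 ** 3 - 1   # 4 * 8 - 1 = 31
network_params = (len(P_WEATHER) - 1) + 1 + 2 + 2  # 3 + 1 + 2 + 2 = 8

# Toothache and Catch are dependent on their own...
assert not math.isclose(prob(toothache_and_catch),
                        prob(has_toothache) * prob(has_catch))
# ...but conditionally independent once Cavity is known.
assert math.isclose(conditional(toothache_and_catch, has_cavity),
                    conditional(has_toothache, has_cavity)
                    * conditional(has_catch, has_cavity))
# Weather is independent of Cavity.
assert math.isclose(conditional(is_sunny, has_cavity), prob(is_sunny))

print(full_joint_params, network_params)                     # 31 8
print(round(conditional(has_cavity, has_toothache), 3))      # 0.6
```

The parameter count shows the compactness argument on a small scale. The full joint over these four variables needs 31 free numbers, while the network needs only 8, one CPT per node. The gap widens rapidly as more variables with few parents are added.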