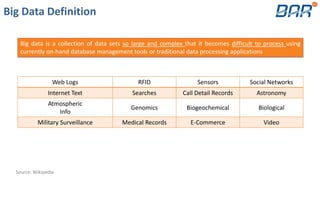

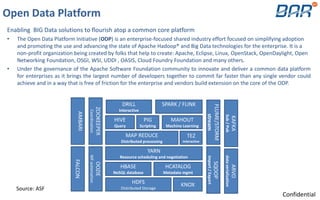

Sai Paravastu discusses the benefits of an open data platform (ODP) for enterprises. The ODP would provide a standardized core of open-source Hadoop technologies such as HDFS, YARN, and MapReduce. Big data solution providers could then build compatible solutions on one common platform, which would reduce costs and improve interoperability. For customers, the ODP would simplify integration, and by coordinating development efforts it would reduce fragmentation in the industry.