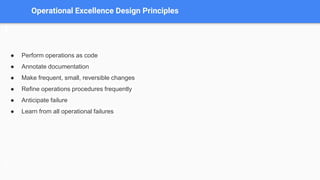

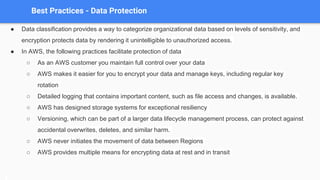

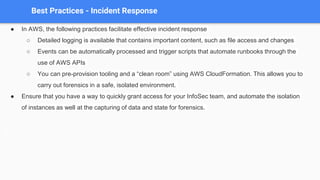

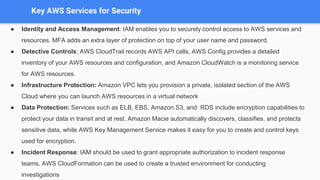

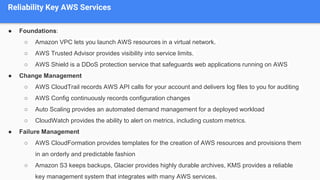

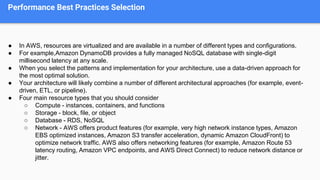

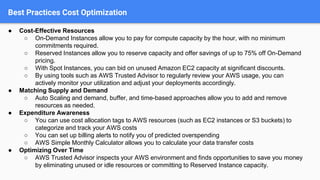

The document outlines the five pillars of the AWS Well-Architected Framework: operational excellence, security, reliability, performance efficiency, and cost optimization. Each pillar has its own defined principles and best practices. It highlights AWS services that support these pillars, such as AWS CloudFormation for operational excellence and IAM for security management. The document also gives detailed guidelines on operational readiness, incident response, and continuous improvement, all aimed at achieving the desired business outcomes.