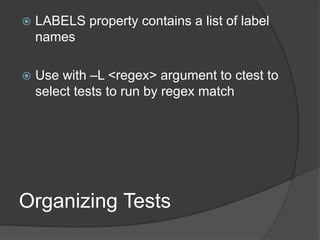

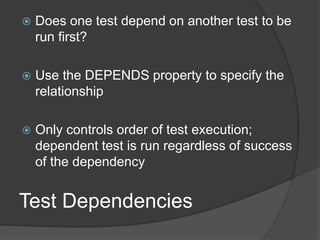

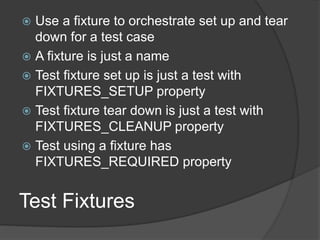

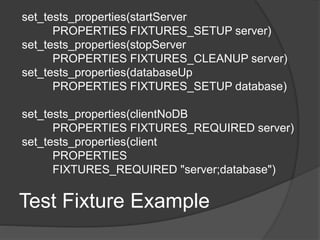

This deck explains how to add testing support to a CMake project: declaring tests in CMakeLists.txt, running them with ctest, and reporting the results to CDash. It covers the add_test syntax, test properties, test dependencies, and GoogleTest integration, along with options for customizing test execution and for tracking test outcomes in CDash.

![add_test Syntax](https://image.slidesharecdn.com/automatedtestingwithcmakectestandcdash-200409164321/85/Automated-Testing-with-CMake-CTest-and-CDash-5-320.jpg)

    add_test(
      NAME <name>
      COMMAND <command> [<arg>...]
      [CONFIGURATIONS <config>...]
      [WORKING_DIRECTORY <dir>]
      [COMMAND_EXPAND_LISTS]
    )
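As an illustration of the `add_test` signature, here is a minimal sketch of a top-level CMakeLists.txt. The project name, the test names, and the `ECHO_WORDS` variable are hypothetical; the tests only call `cmake -E echo`, so no compiler is needed. `COMMAND_EXPAND_LISTS` requires CMake 3.16 or newer, which is why that version is assumed here.

```cmake
cmake_minimum_required(VERSION 3.16)
project(AddTestSketch LANGUAGES NONE)

# Without enable_testing(), add_test() calls do not produce runnable tests.
enable_testing()

# A test passes when its command exits with status 0.
add_test(
  NAME sketch.echo
  COMMAND ${CMAKE_COMMAND} -E echo "hello from ctest"
  WORKING_DIRECTORY ${CMAKE_CURRENT_BINARY_DIR}
)

# Hypothetical list variable, used to show COMMAND_EXPAND_LISTS.
set(ECHO_WORDS alpha beta gamma)

# The quoted list is a single argument; COMMAND_EXPAND_LISTS asks CMake
# to split it into separate command-line arguments.
add_test(
  NAME sketch.expand
  COMMAND ${CMAKE_COMMAND} -E echo "${ECHO_WORDS}"
  COMMAND_EXPAND_LISTS
)
```

After configuring, running `ctest` in the build directory should list and run both tests.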

![Setting Test Properties](https://image.slidesharecdn.com/automatedtestingwithcmakectestandcdash-200409164321/85/Automated-Testing-with-CMake-CTest-and-CDash-7-320.jpg)

Tests are configured through properties

    set_property(
      TEST <test>
      PROPERTY <name> [<value>...] )

    set_tests_properties(
      <test> [<test> ...]
      PROPERTIES <prop> <value>
      [<prop> <value>...] )

Use ;-separated value lists with set_tests_properties
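The two property commands differ mainly in how they take list values, which a short sketch may make clearer. The test names and the `SKETCH_*` environment variables are hypothetical; `LABELS`, `TIMEOUT`, `ENVIRONMENT`, and `DEPENDS` are standard CTest test properties.

```cmake
cmake_minimum_required(VERSION 3.14)
project(TestPropsSketch LANGUAGES NONE)
enable_testing()

# Two hypothetical tests built from cmake -E commands.
add_test(NAME sketch.fast COMMAND ${CMAKE_COMMAND} -E echo fast)
add_test(NAME sketch.slow COMMAND ${CMAKE_COMMAND} -E sleep 1)

# set_property() accepts several values and stores them as a list.
set_property(TEST sketch.slow PROPERTY LABELS slow nightly)

# set_tests_properties() takes exactly one value per property, so a list
# has to be written as a single ;-separated string.
set_tests_properties(sketch.fast sketch.slow
  PROPERTIES
    TIMEOUT 30
    ENVIRONMENT "SKETCH_MODE=ci;SKETCH_VERBOSE=1"
)

# DEPENDS orders execution: sketch.slow is scheduled after sketch.fast.
set_tests_properties(sketch.slow PROPERTIES DEPENDS sketch.fast)
```

Note that `DEPENDS` only affects ordering; it does not by itself skip `sketch.slow` when `sketch.fast` fails.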

![Running CTest](https://image.slidesharecdn.com/automatedtestingwithcmakectestandcdash-200409164321/85/Automated-Testing-with-CMake-CTest-and-CDash-15-320.jpg)

    ctest [args...]
      -C <config>                  select config to run (needed for multi-config generators)
      -R <regex>, -E <regex>       specify tests to run/exclude based on test name
      --timeout <seconds>
      --stop-time <time-of-day>
      -j <n>                       for parallel execution
      --resource-spec-file <path>
      -L <regex>                   select tests by label

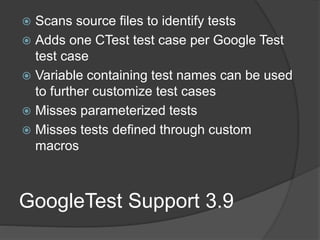

![GoogleTest Support 3.9](https://image.slidesharecdn.com/automatedtestingwithcmakectestandcdash-200409164321/85/Automated-Testing-with-CMake-CTest-and-CDash-19-320.jpg)

    include(GoogleTest)

    gtest_add_tests(
      TARGET <target>
      [SOURCES file1...]
      [EXTRA_ARGS arg1...]
      [WORKING_DIRECTORY dir]
      [TEST_PREFIX prefix]
      [TEST_SUFFIX suffix]
      [SKIP_DEPENDENCY]
      [TEST_LIST outVar]
    )
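A possible use of `gtest_add_tests` is sketched below. It assumes GoogleTest is installed where `find_package(GTest)` can find it and that a source file named `math_tests.cpp` (hypothetical) contains the `TEST(...)` cases; the target name, prefix, and variable name are also illustrative.

```cmake
cmake_minimum_required(VERSION 3.9)
project(GTestAddSketch LANGUAGES CXX)
enable_testing()

# Assumes an installed GoogleTest that the FindGTest module can locate.
find_package(GTest REQUIRED)
include(GoogleTest)

# math_tests.cpp is a hypothetical source file containing TEST(...) cases.
add_executable(math_tests math_tests.cpp)
target_link_libraries(math_tests PRIVATE GTest::GTest GTest::Main)

# Scans the target's sources at configure time and registers one CTest
# test per GoogleTest case it finds.
gtest_add_tests(
  TARGET math_tests
  TEST_PREFIX "math."
  TEST_LIST math_test_names   # receives the names of the registered tests
)

# The collected names can be used to set properties on all of them.
# This call assumes at least one test case was found.
set_tests_properties(${math_test_names} PROPERTIES TIMEOUT 10)
```

Because the sources are scanned when CMake runs, adding a test case normally triggers a re-run of CMake unless `SKIP_DEPENDENCY` is given.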

![GoogleTest Support 3.10](https://image.slidesharecdn.com/automatedtestingwithcmakectestandcdash-200409164321/85/Automated-Testing-with-CMake-CTest-and-CDash-21-320.jpg)

    include(GoogleTest)

    gtest_discover_tests(
      <target>
      [EXTRA_ARGS arg1...]
      [WORKING_DIRECTORY dir]
      [TEST_PREFIX prefix]
      [TEST_SUFFIX suffix]
      [NO_PRETTY_TYPES]
      [NO_PRETTY_VALUES]
      [PROPERTIES name1 value1...]
      [TEST_LIST outVar]
      [DISCOVERY_TIMEOUT seconds]
    )
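For comparison, a sketch of `gtest_discover_tests` under the same assumptions (an installed GoogleTest and a hypothetical `math_tests.cpp`). In the CMake documentation the target is passed as the first positional argument, without a `TARGET` keyword, and the sketch follows that form. The minimum version is set to 3.12 as a conservative choice, since the `DISCOVERY_TIMEOUT` spelling is only available from CMake 3.10.3 onward.

```cmake
cmake_minimum_required(VERSION 3.12)
project(GTestDiscoverSketch LANGUAGES CXX)
enable_testing()

# Assumes an installed GoogleTest that the FindGTest module can locate.
find_package(GTest REQUIRED)
include(GoogleTest)

# math_tests.cpp is a hypothetical source file containing TEST(...) cases.
add_executable(math_tests math_tests.cpp)
target_link_libraries(math_tests PRIVATE GTest::GTest GTest::Main)

# Discovery happens after the build: the test executable is run to list
# its test cases, so no source scanning or CMake re-run is needed.
gtest_discover_tests(math_tests
  TEST_PREFIX "math."
  PROPERTIES TIMEOUT 10 LABELS unit   # applied to every discovered test
  DISCOVERY_TIMEOUT 30                # seconds allowed for listing tests
)
```

Since discovery runs the built executable, this approach needs the binary to be runnable on the build machine, which is worth keeping in mind when cross-compiling.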