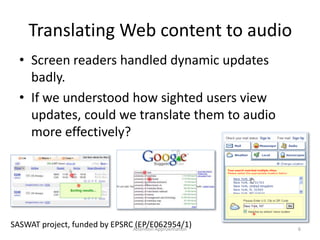

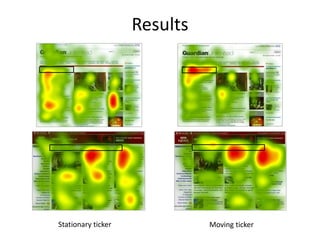

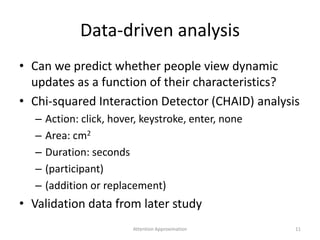

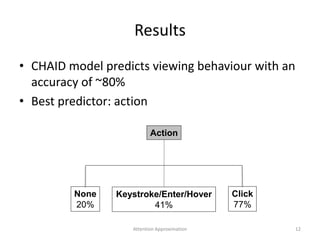

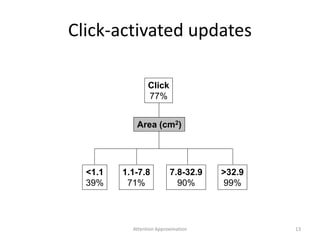

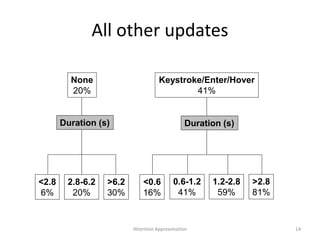

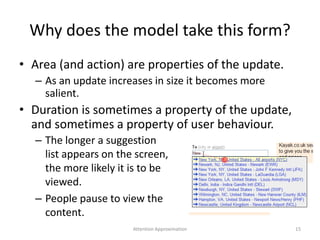

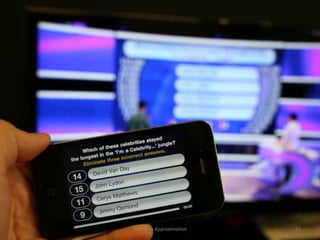

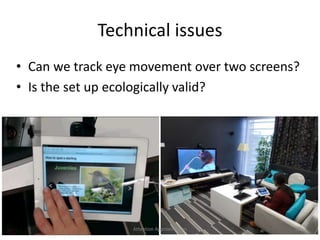

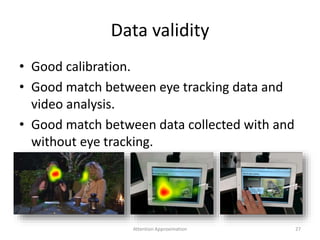

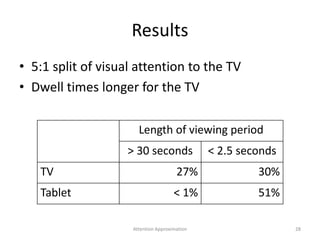

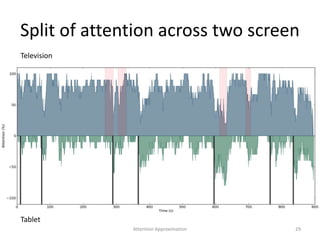

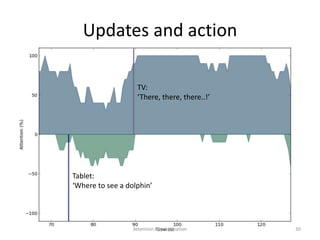

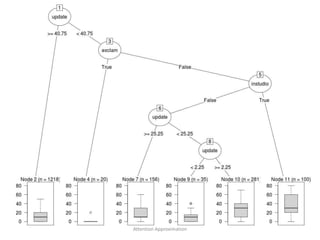

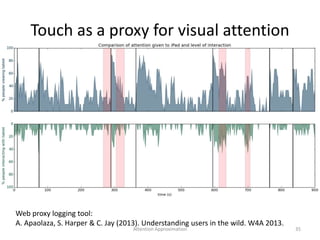

The document discusses "attention approximation," a method for estimating where a user's attention is directed across devices and contexts, particularly with dynamic web content and multi-screen interactions. It describes a study that uses empirical models, built from eye-tracking and interaction data, to predict user behavior and to inform technology design based on the observed attention patterns. Key findings suggest that user interactions vary significantly with screen dynamics. The research aims to improve how information is translated across different media formats.