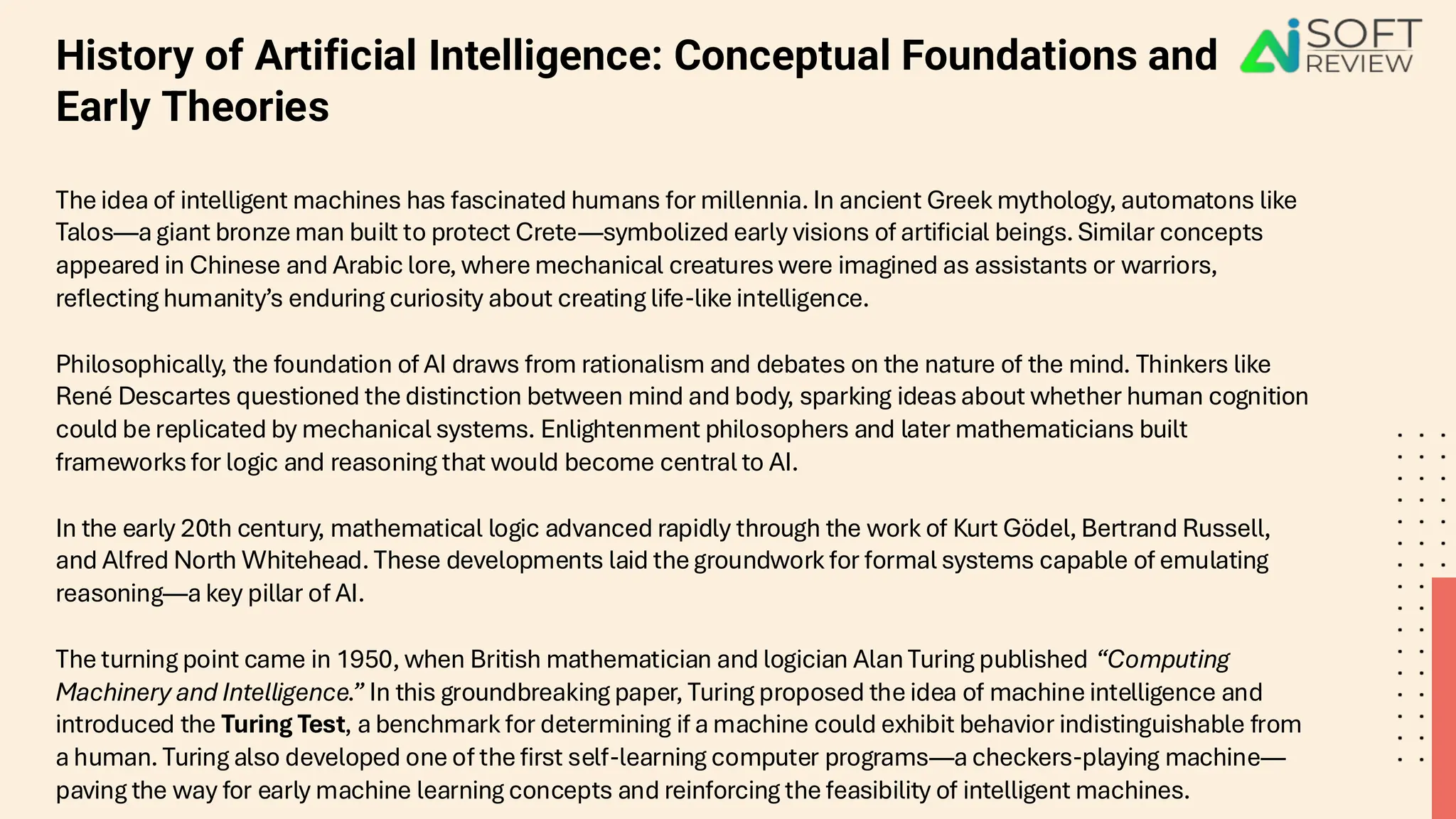

The history of artificial intelligence, from ancient ideas to modern algorithms, is a journey through time that blends human curiosity with technological breakthroughs. While the dream of intelligent machines dates back to ancient civilizations, it was not until the 20th century that the idea began to take scientific shape.

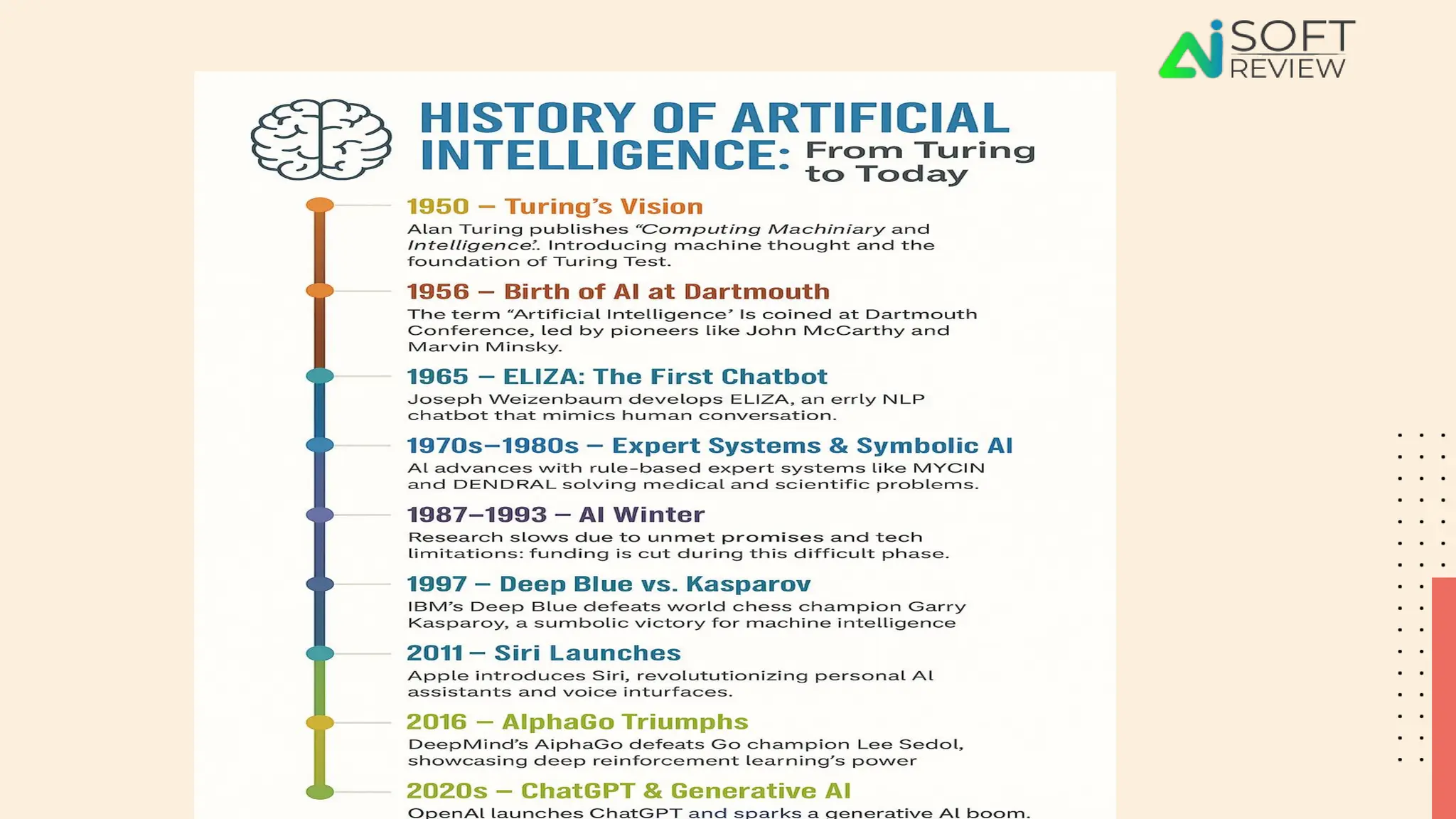

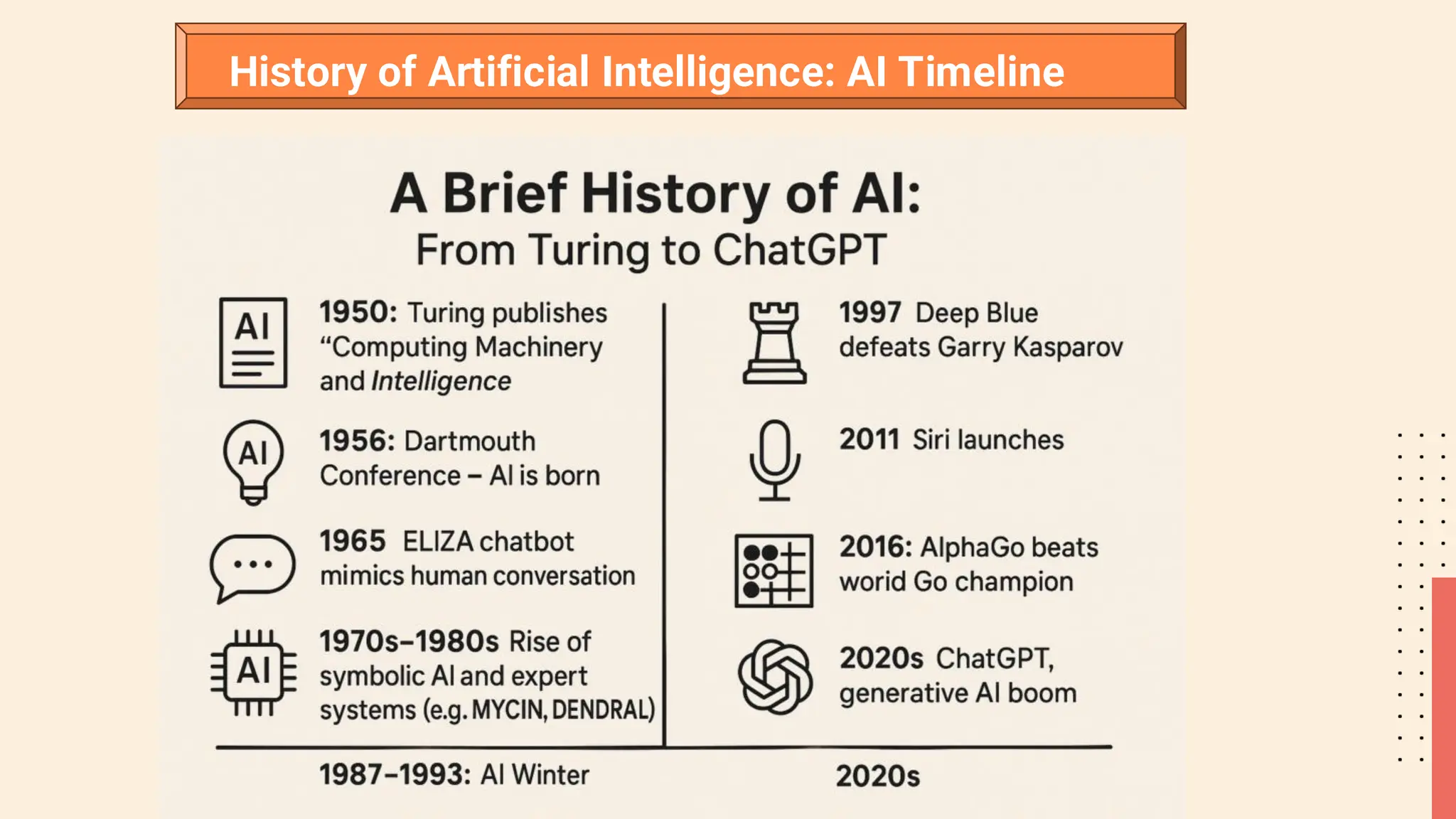

In 1950, British mathematician Alan Turing published "Computing Machinery and Intelligence," which asked whether machines can think. In it he proposed the "imitation game," now known as the Turing Test: if a human judge cannot reliably tell a machine's conversational replies from a person's, the machine can be said to exhibit intelligent behavior. The test gave researchers a practical criterion for machine intelligence and marked one of the first major chapters in the history of AI.

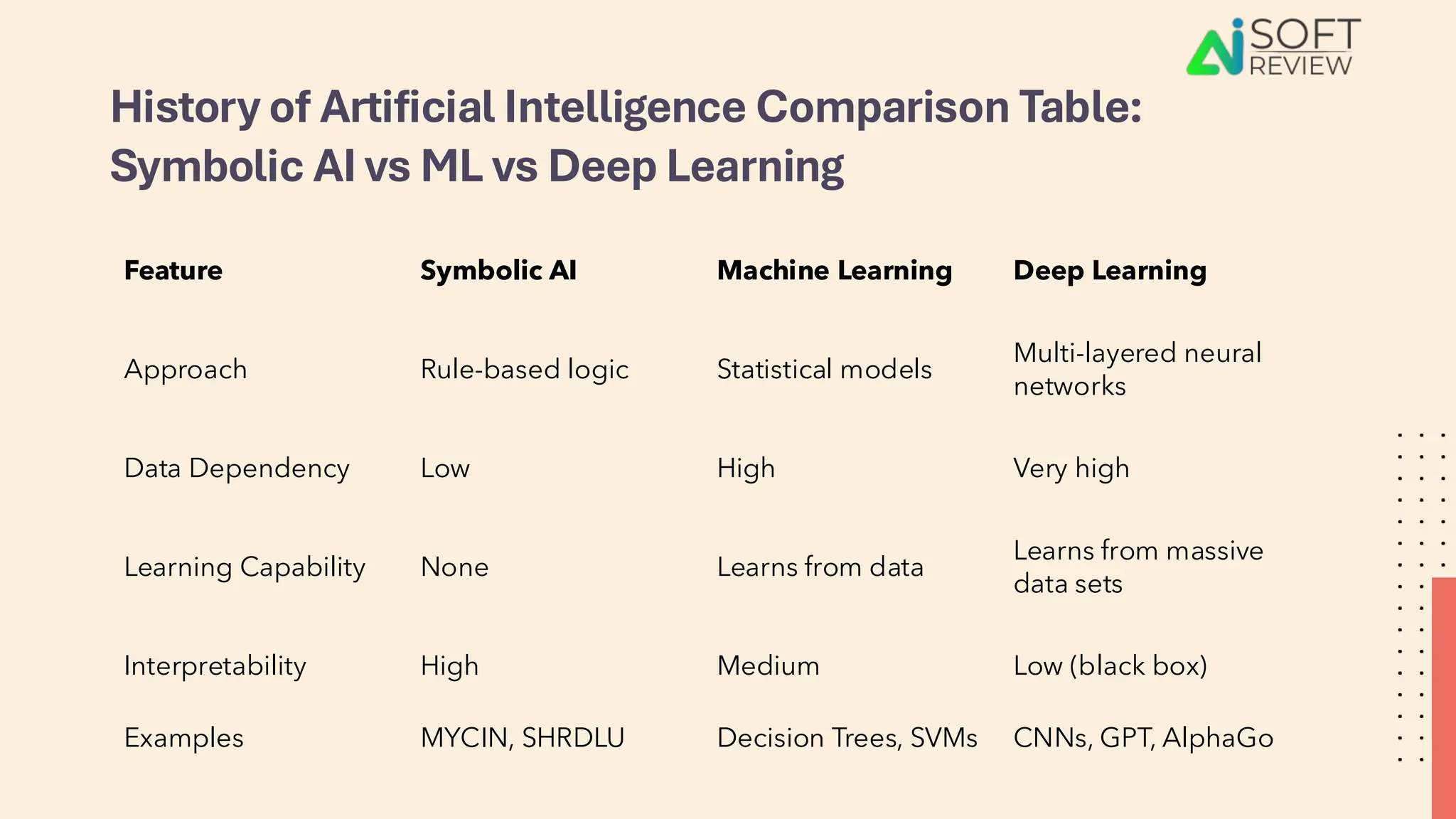

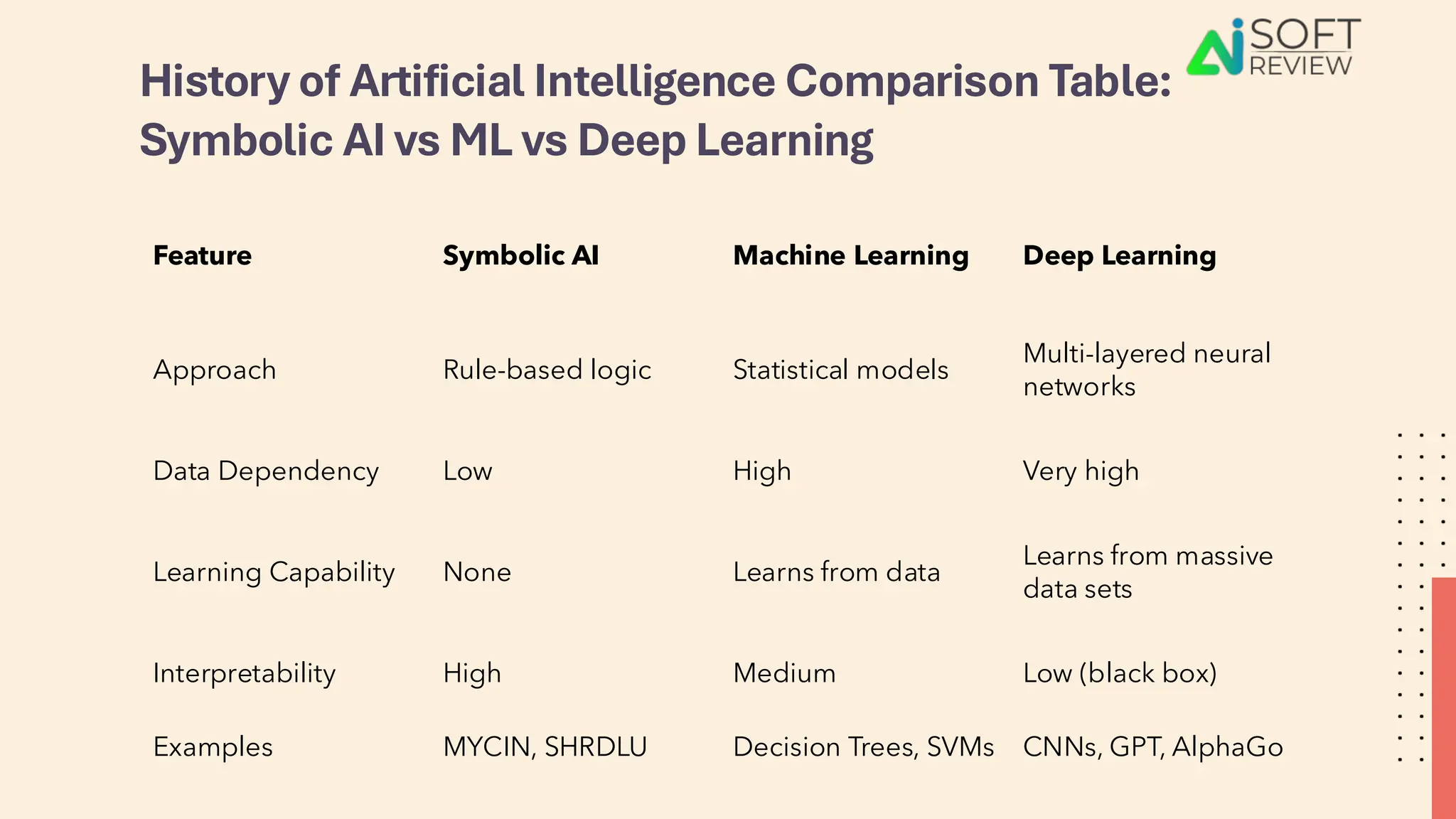

In 1956, the Dartmouth Conference established "Artificial Intelligence" as the name of the field, a term John McCarthy had coined in his proposal for the event, and it ignited decades of innovation. From symbolic AI in the 1960s to expert systems in the 1980s, and the rise of machine learning and neural networks in the 1990s and 2000s, each era brought us closer to what we now recognize as modern AI. Technologies like deep learning, real-time automation, and natural language processing have turned AI into a powerful tool used in everyday life.

The ongoing evolution of artificial intelligence shows how ancient visions are becoming today's realities and tomorrow's possibilities.