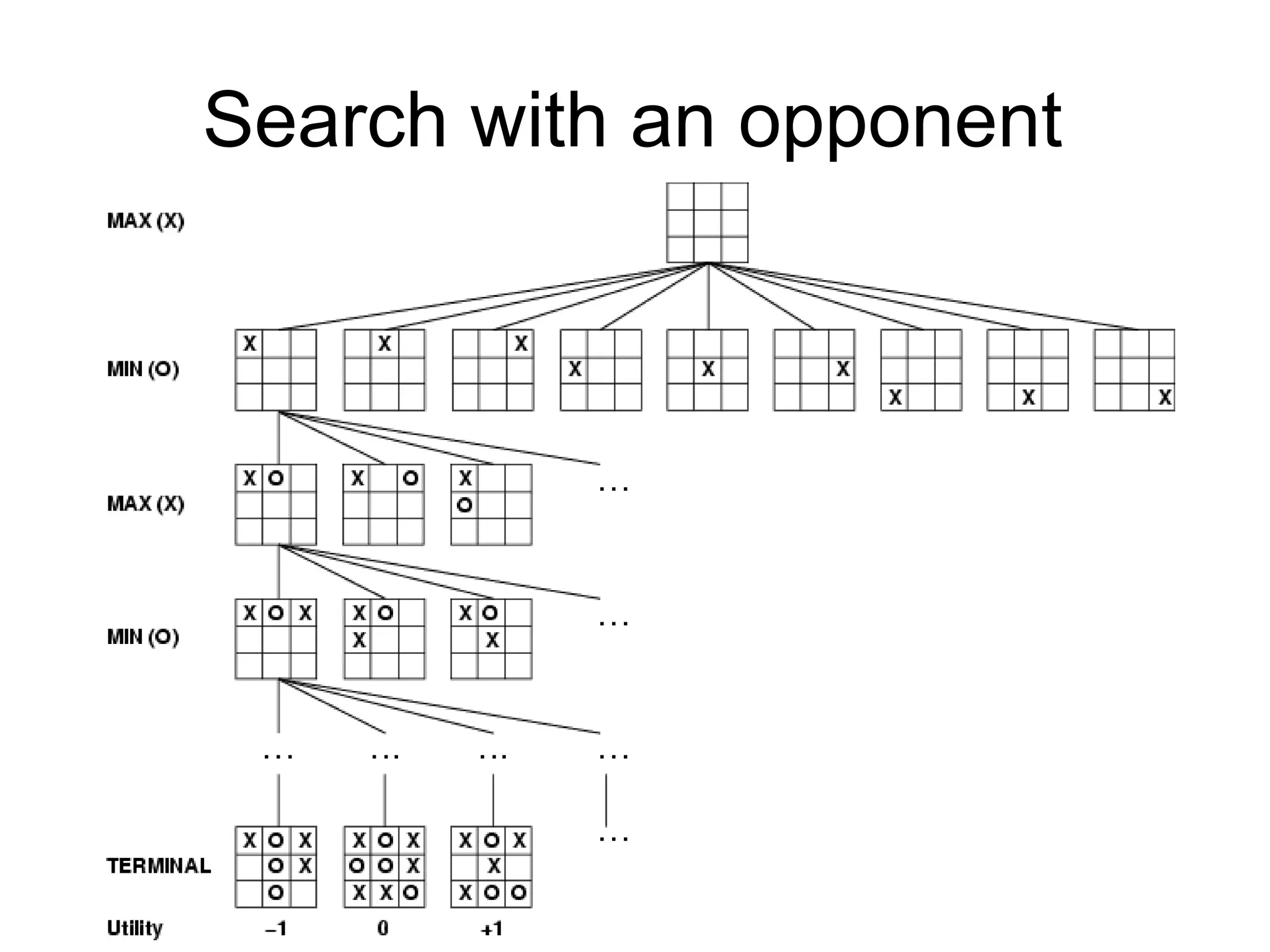

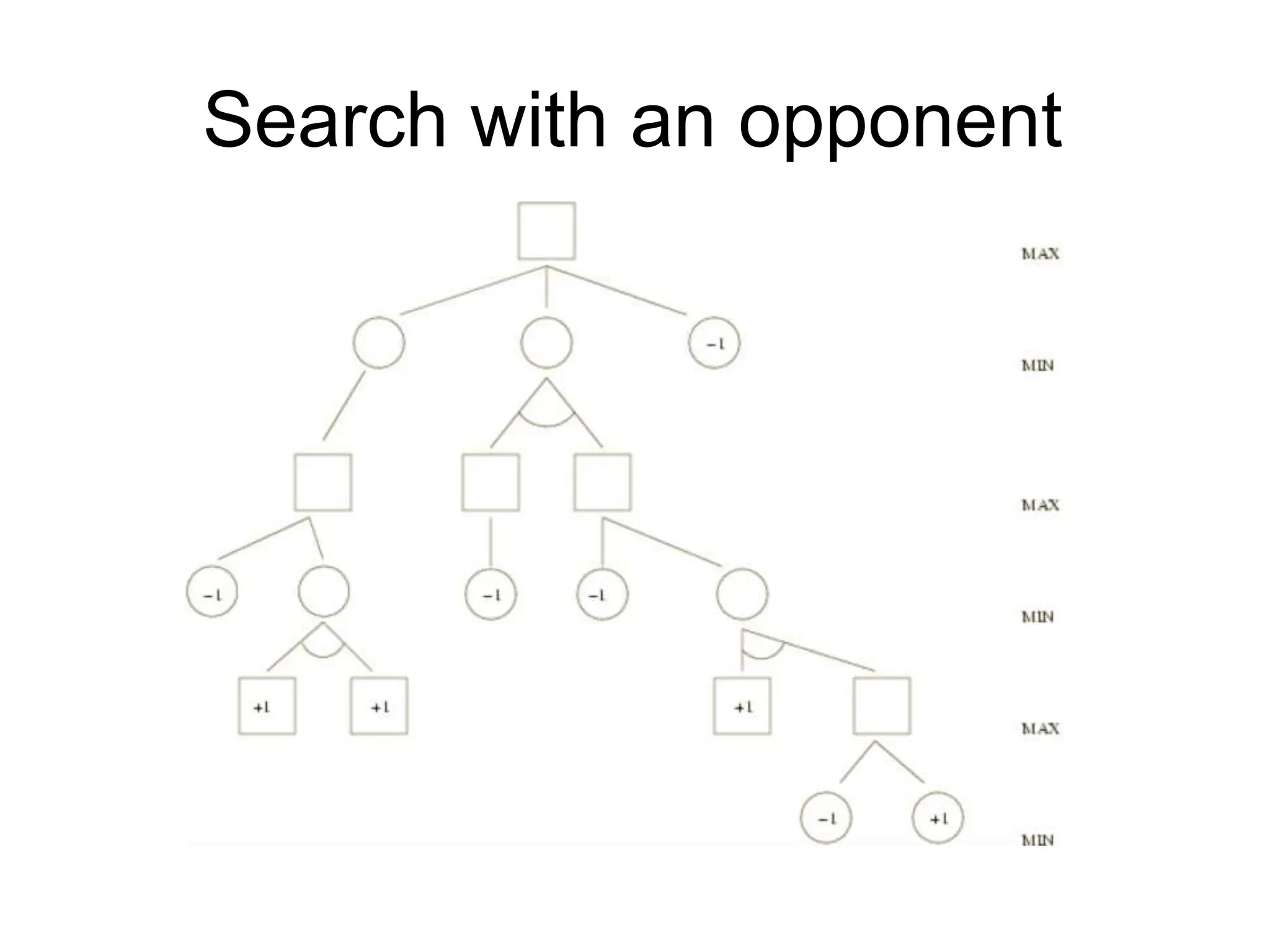

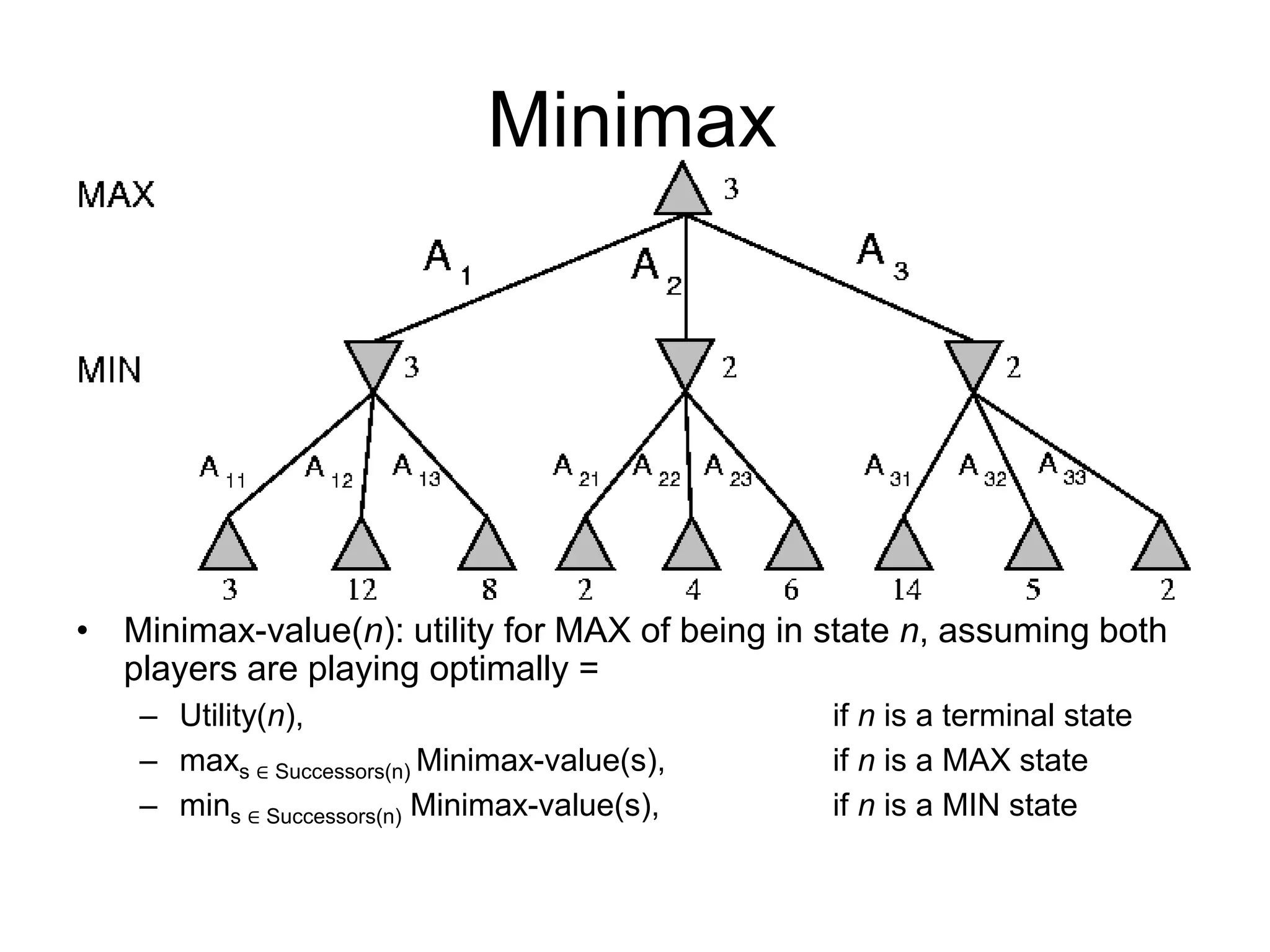

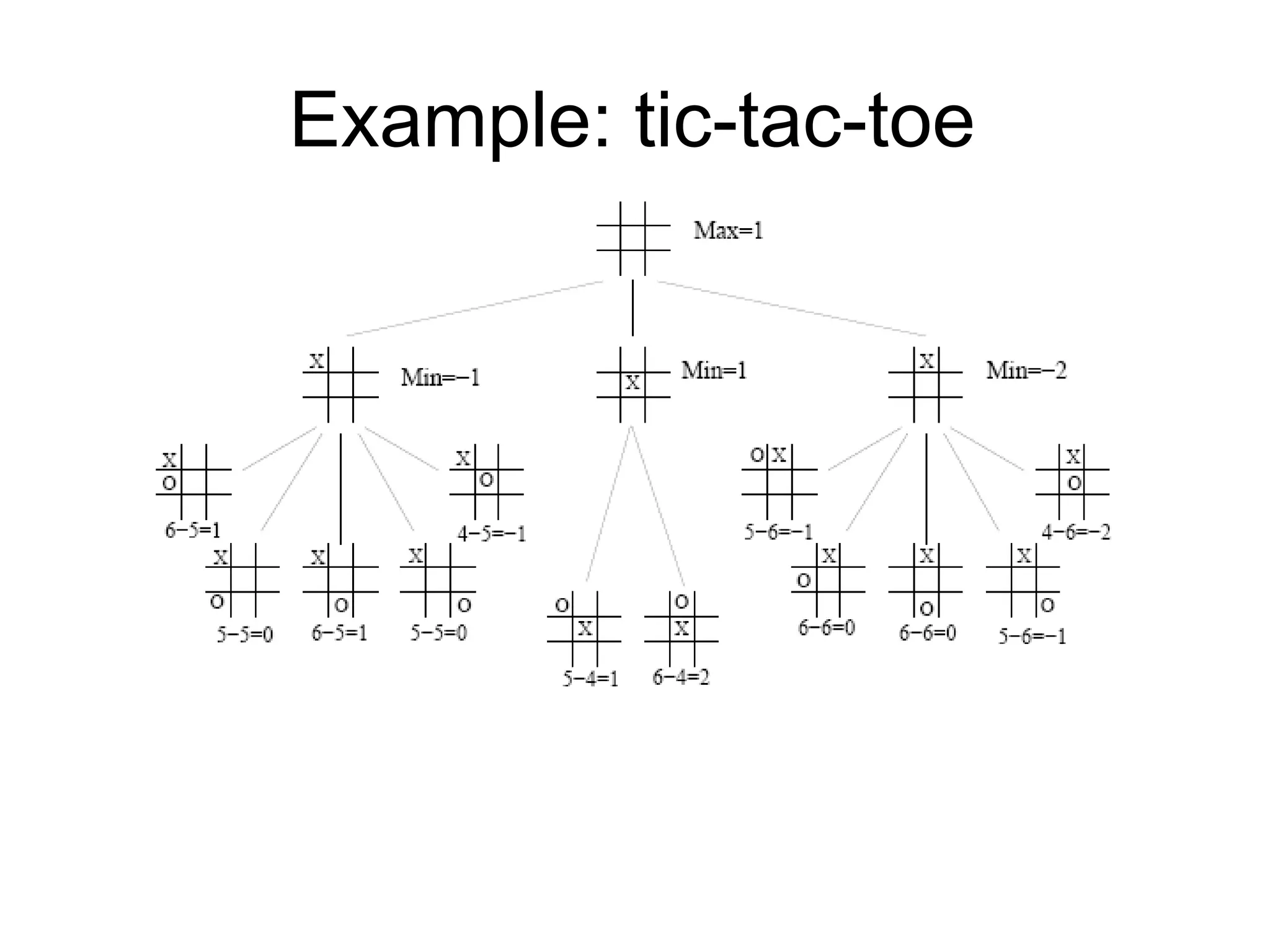

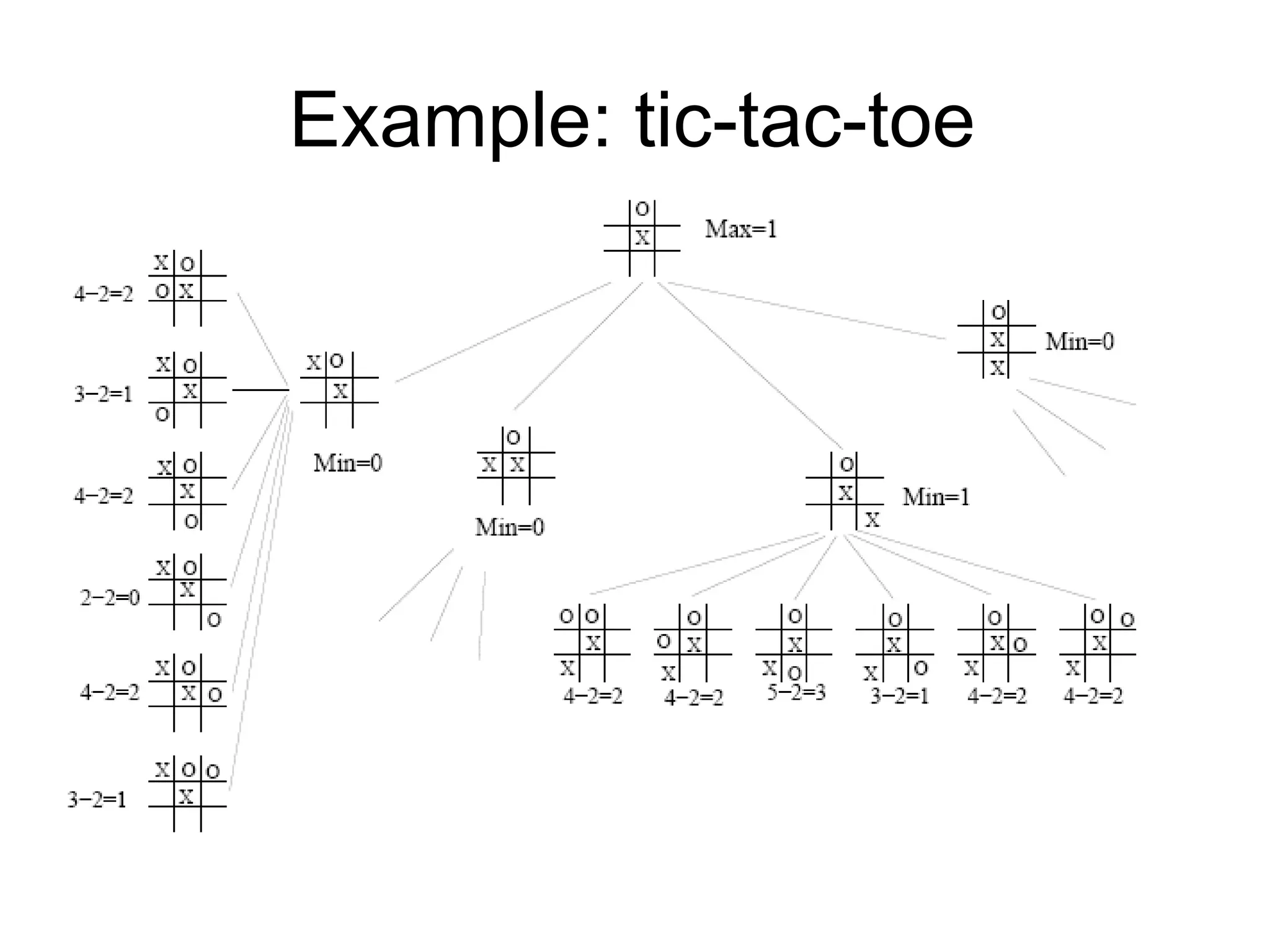

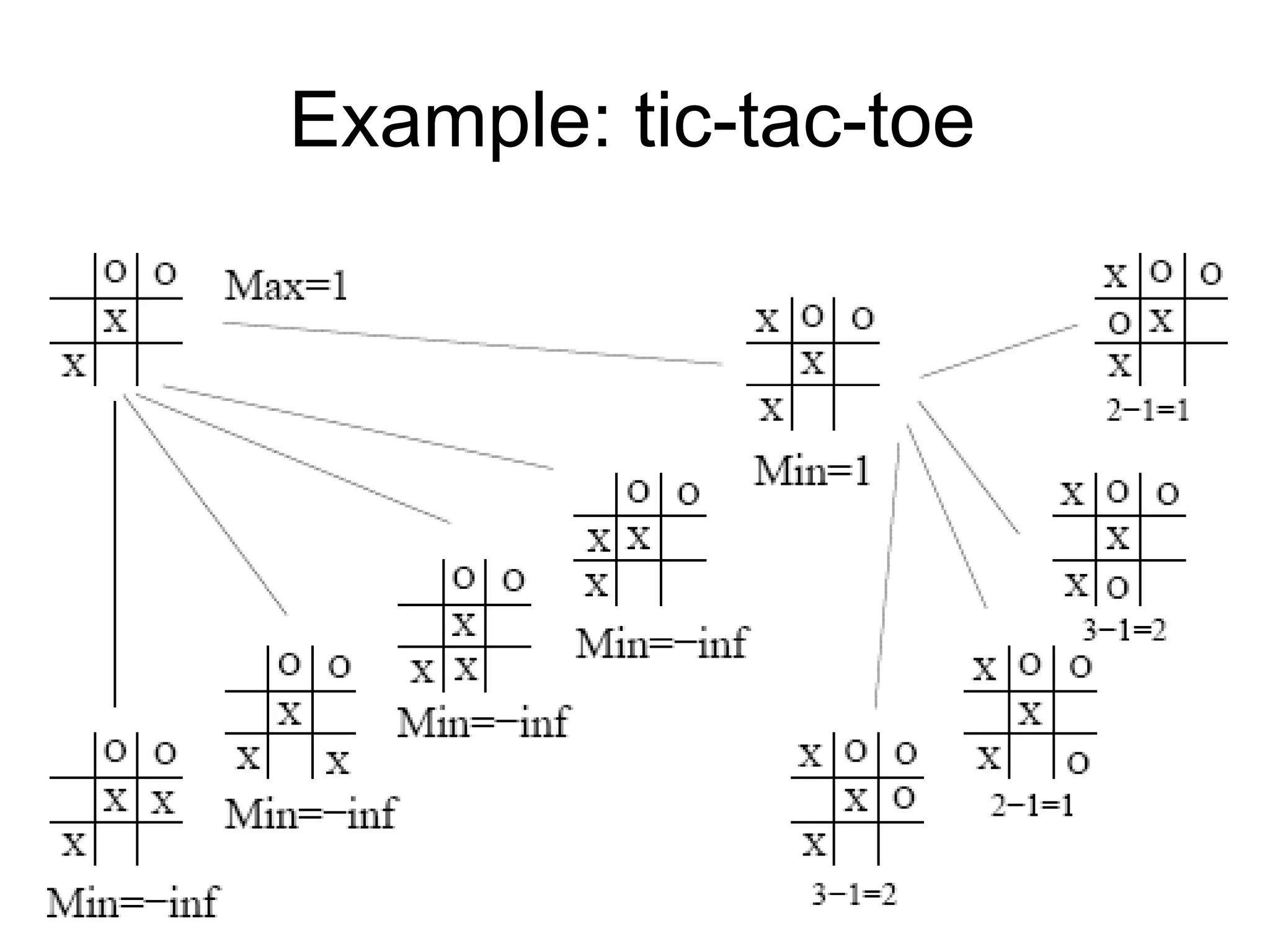

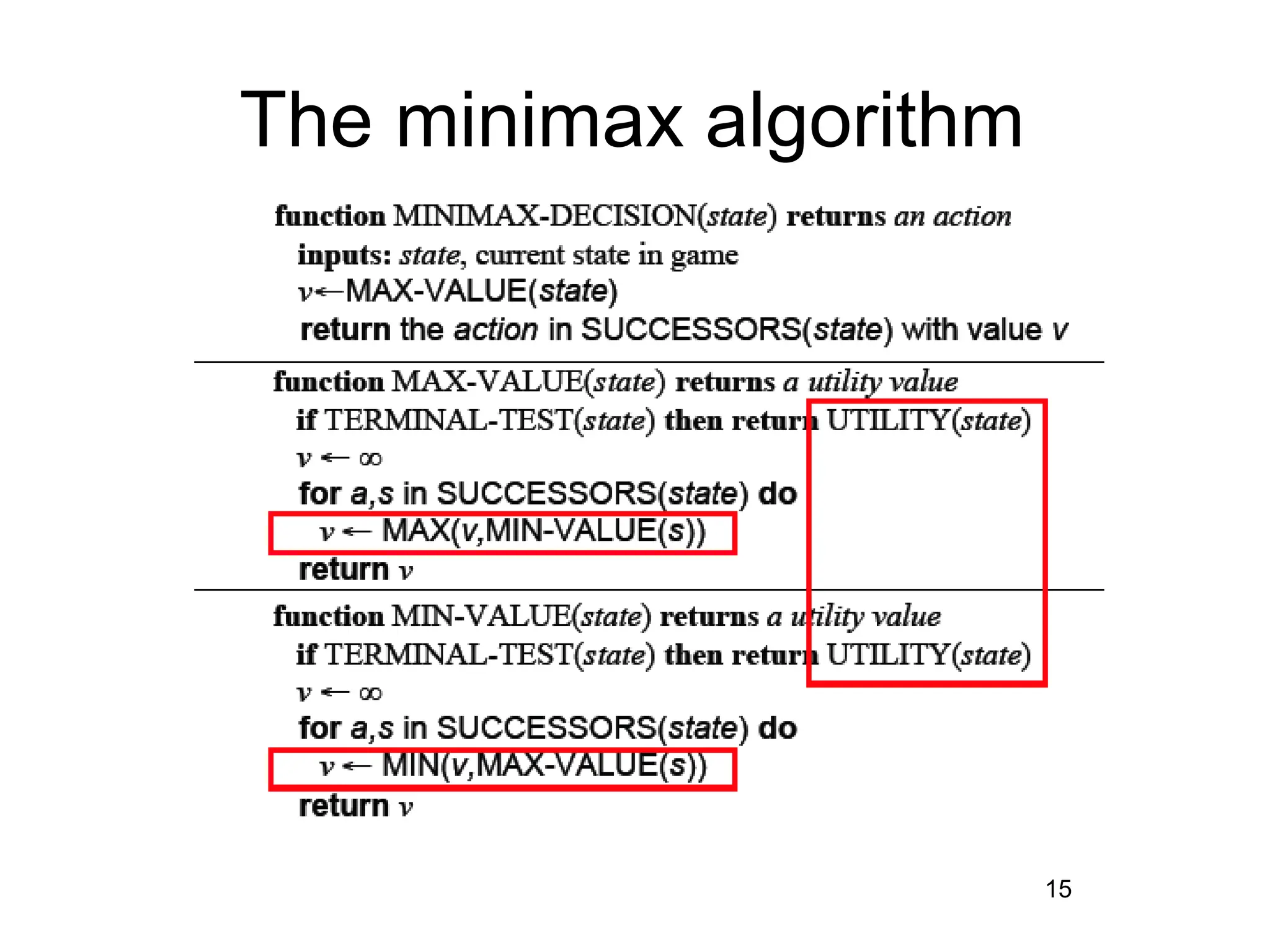

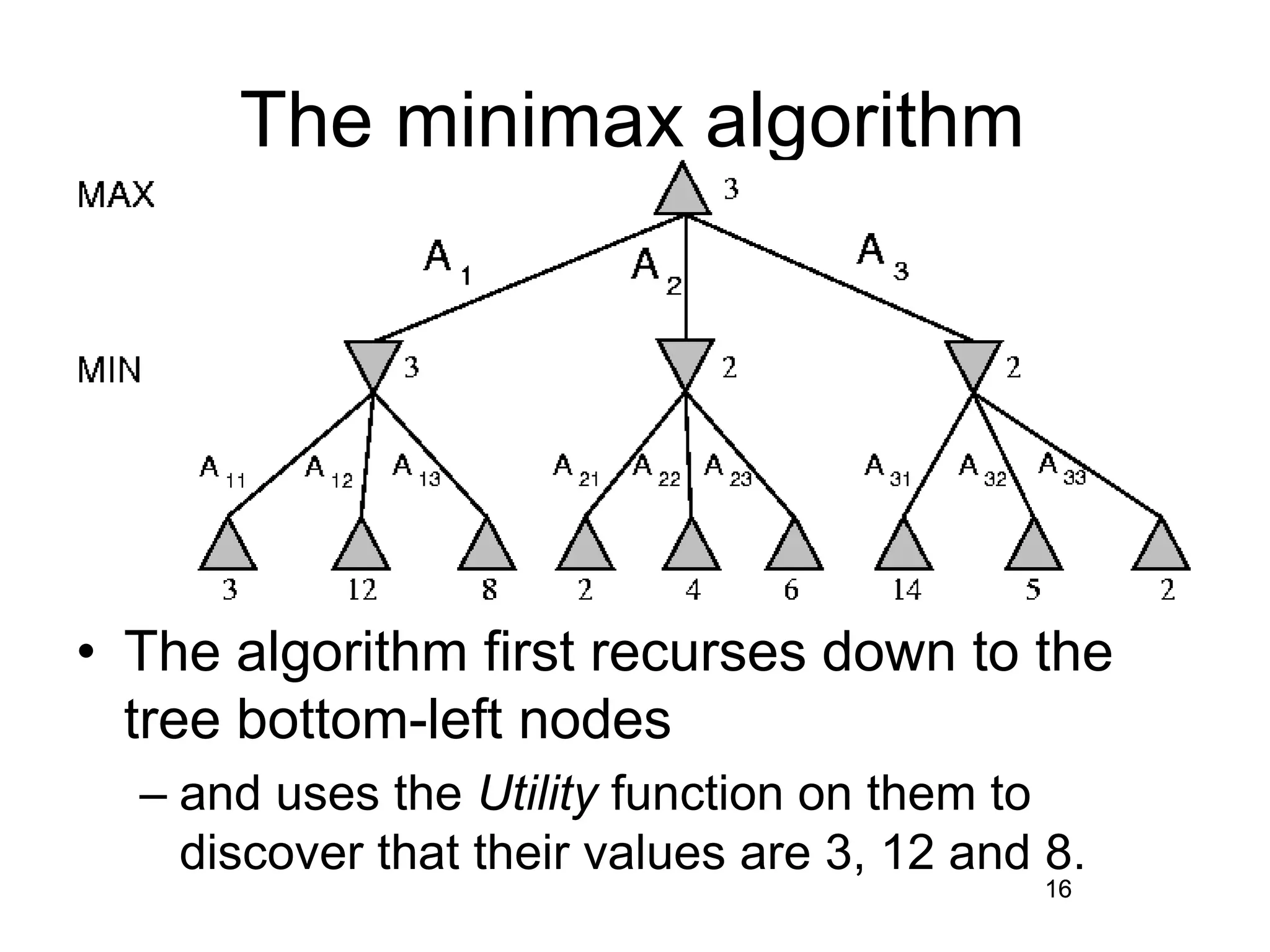

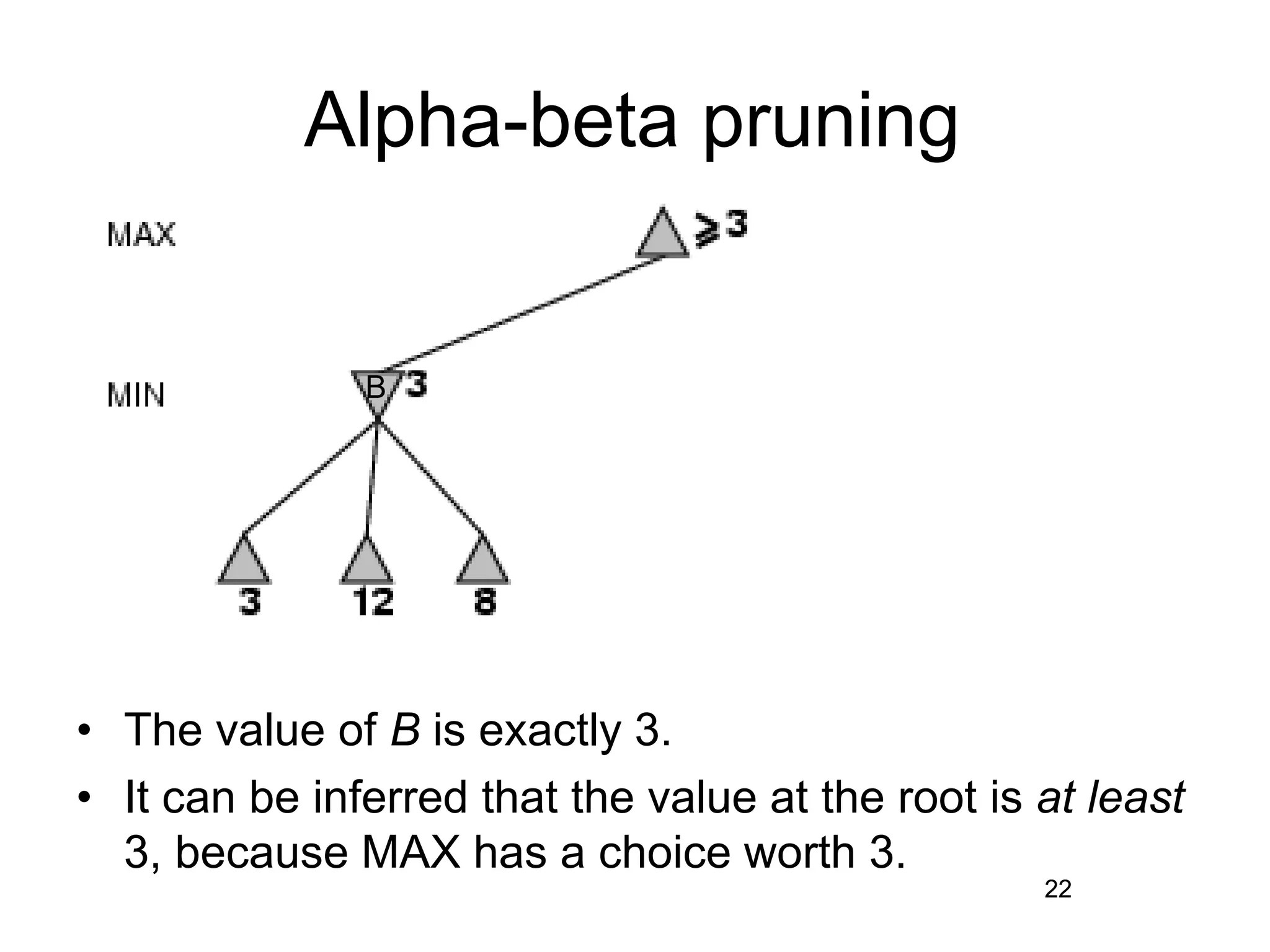

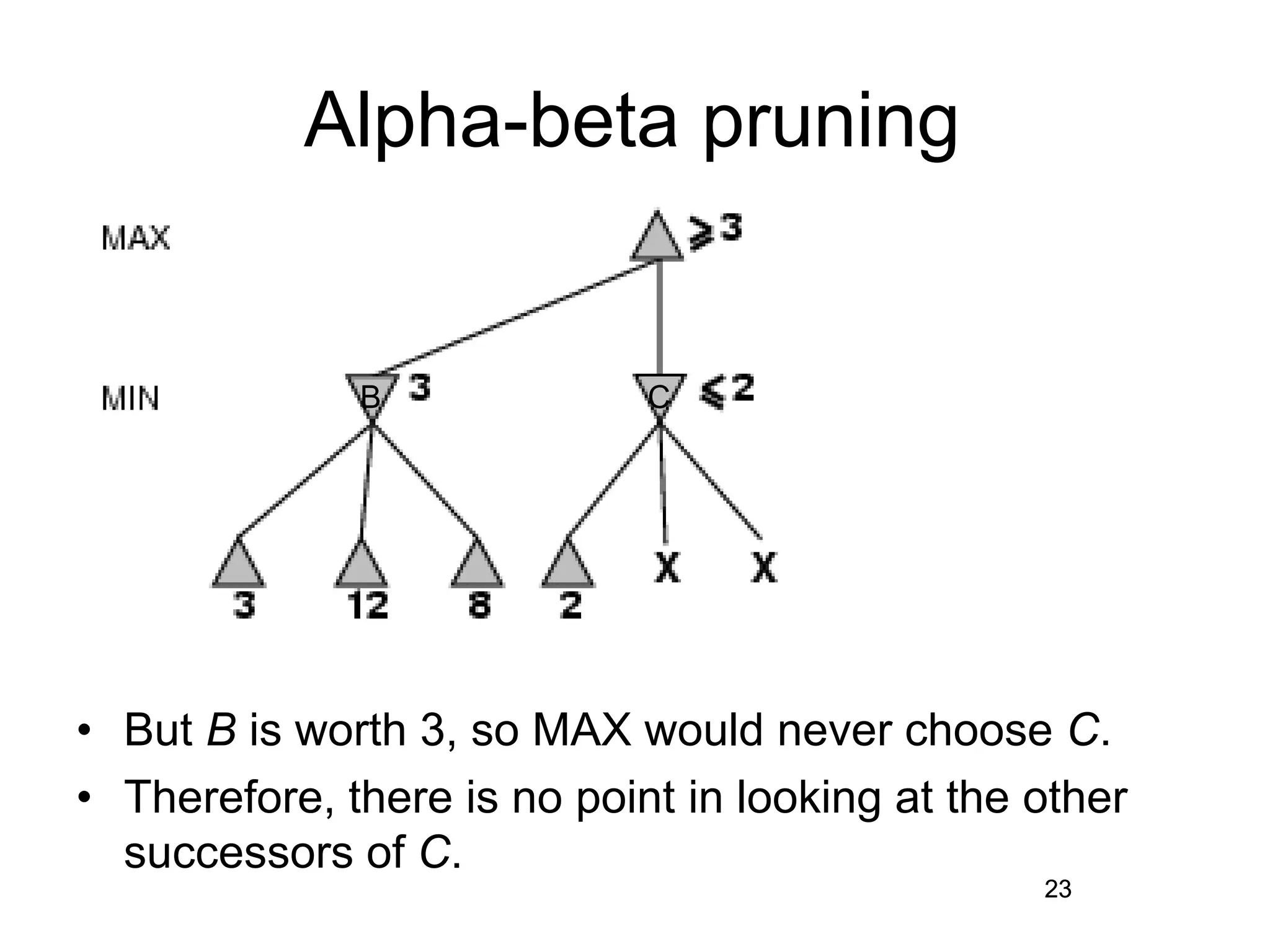

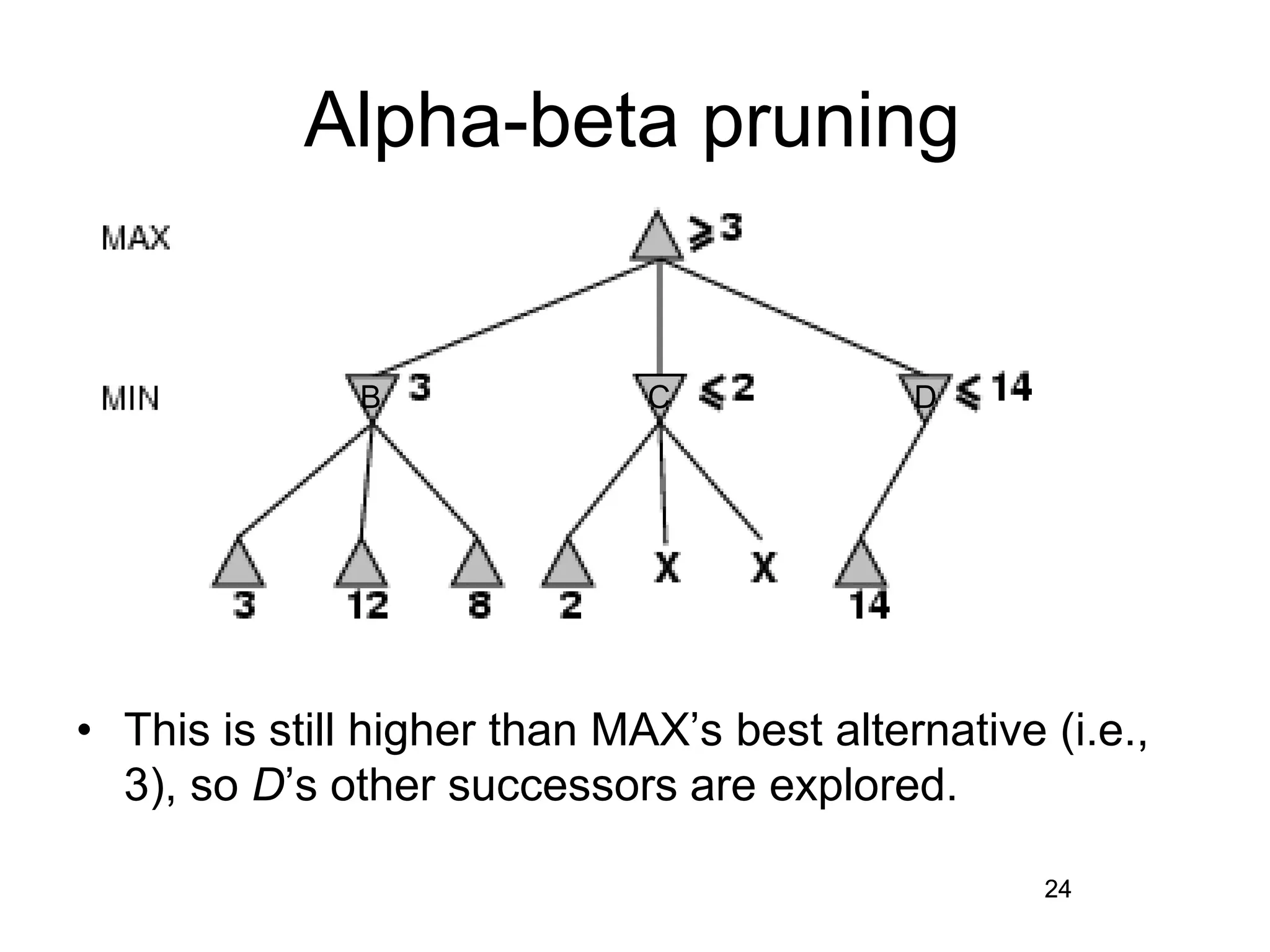

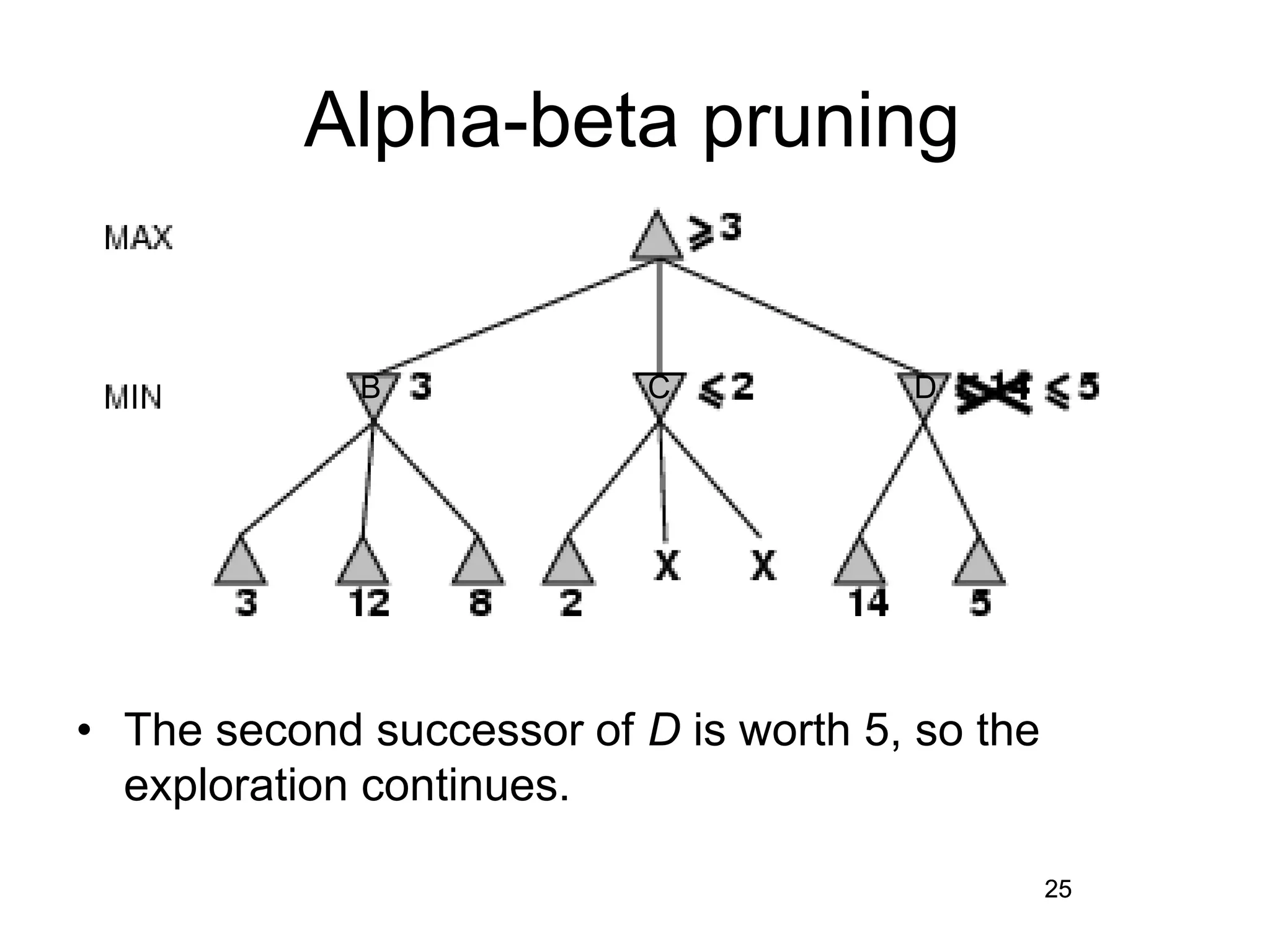

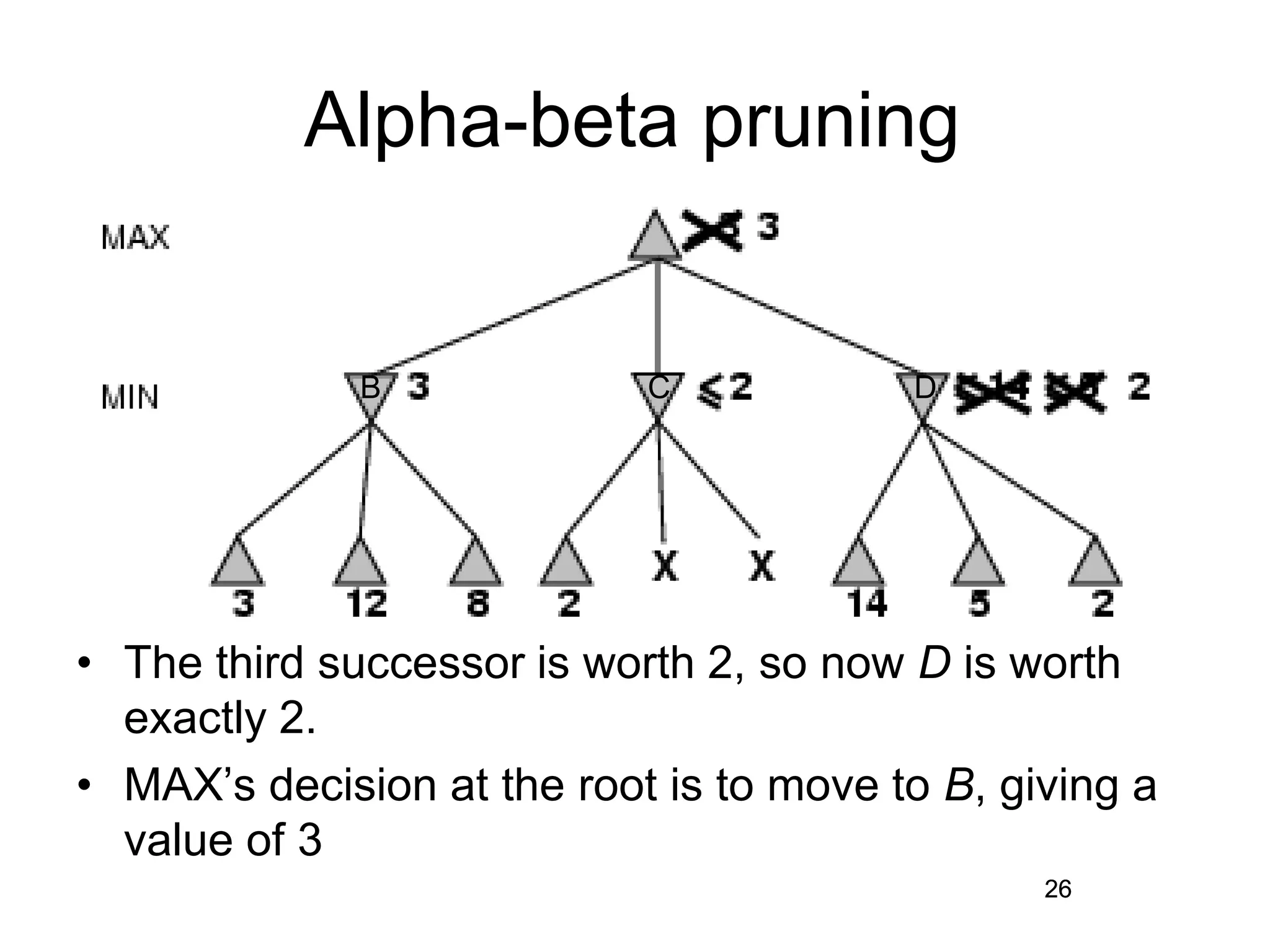

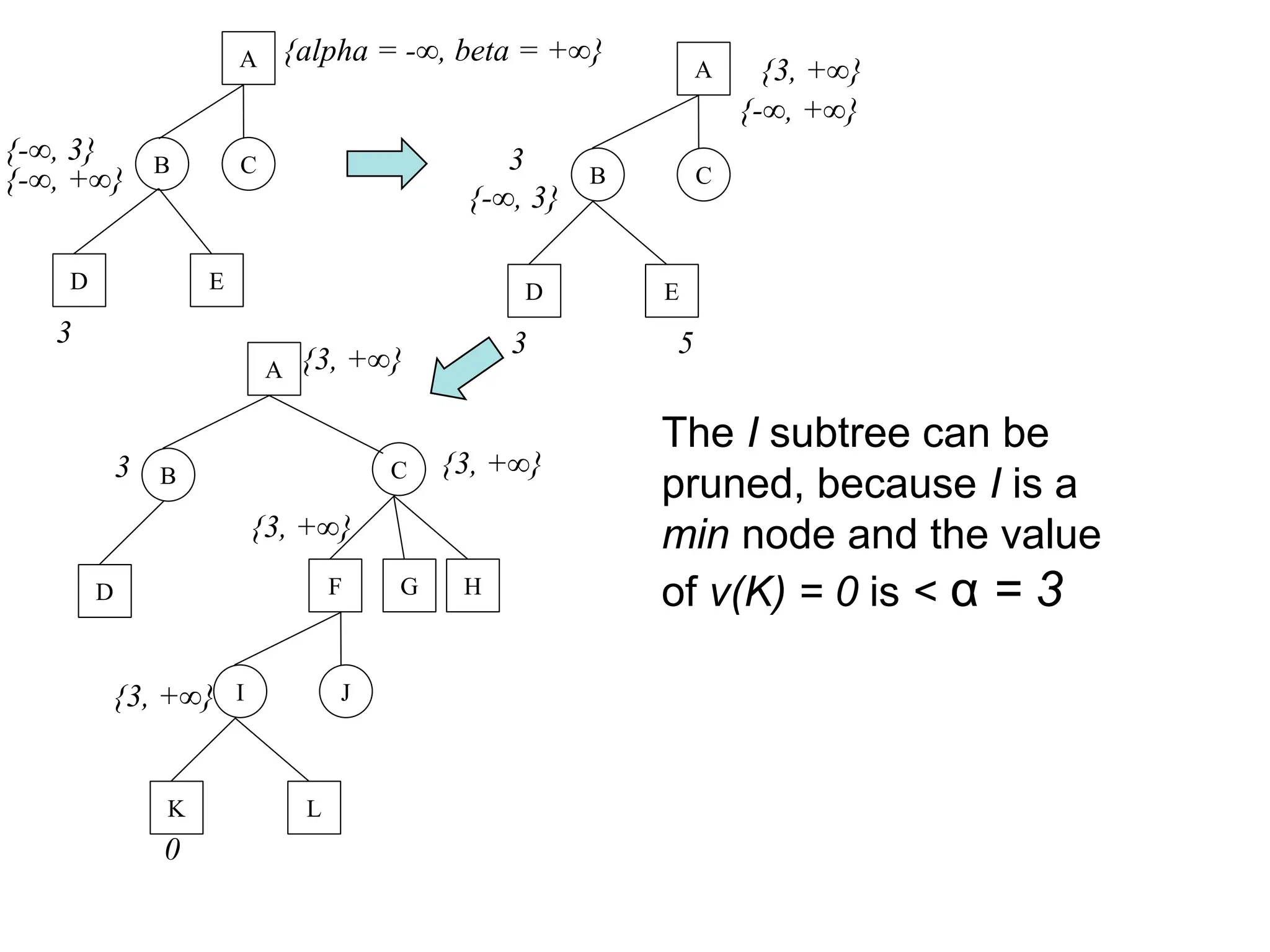

The document discusses adversarial search in artificial intelligence, focusing on two-player games in which the players alternate moves, under either perfect or imperfect information. Key concepts include the minimax algorithm, which recursively computes a value for each game state, assuming both players play optimally, in order to choose the best move, and alpha-beta pruning, which speeds up this search by skipping branches that cannot affect the final decision. Alpha-beta pruning returns the same result as plain minimax, but how much work it saves depends on the order in which successors are examined: with the best ordering it explores roughly O(b^(d/2)) nodes instead of O(b^d) for branching factor b and depth d, while with the worst ordering it prunes nothing.
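As an illustration of the two algorithms summarized above, here is a minimal Python sketch. It assumes a toy representation that is not taken from the document: a game tree is a nested list whose leaves are integer utilities for the maximizing player. The function names `minimax` and `alphabeta`, and the `counter` argument used to count visited nodes, are hypothetical helpers introduced only for this example.

```python
import math

def minimax(node, maximizing=True):
    """Plain minimax on a nested-list tree; integer leaves are utilities."""
    if isinstance(node, int):
        return node
    values = [minimax(child, not maximizing) for child in node]
    return max(values) if maximizing else min(values)

def alphabeta(node, maximizing=True, alpha=-math.inf, beta=math.inf, counter=None):
    """Minimax with alpha-beta pruning.

    alpha: best value the maximizer can already guarantee on this path.
    beta:  best value the minimizer can already guarantee on this path.
    counter: optional one-element list, incremented once per visited node.
    """
    if counter is not None:
        counter[0] += 1
    if isinstance(node, int):
        return node
    if maximizing:
        best = -math.inf
        for child in node:
            best = max(best, alphabeta(child, False, alpha, beta, counter))
            alpha = max(alpha, best)
            if alpha >= beta:
                break  # the minimizer above will never allow this branch
        return best
    best = math.inf
    for child in node:
        best = min(best, alphabeta(child, True, alpha, beta, counter))
        beta = min(beta, best)
        if alpha >= beta:
            break  # the maximizer above already has a better option
    return best

# Same leaves in the last subtree, in two different successor orders.
tree = [[3, 12, 8], [2, 4, 6], [14, 5, 2]]
reordered = [[3, 12, 8], [2, 4, 6], [2, 5, 14]]

visited_original = [0]
visited_reordered = [0]
value_original = alphabeta(tree, counter=visited_original)
value_reordered = alphabeta(reordered, counter=visited_reordered)
print(value_original, visited_original[0])
print(value_reordered, visited_reordered[0])
```

In this sketch both calls return the same value as `minimax`, while the reordered tree visits fewer nodes because the low leaf in the last subtree is seen first and triggers the cutoff immediately. This is the ordering effect the summary refers to, shown on a tree far too small to reflect real savings.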