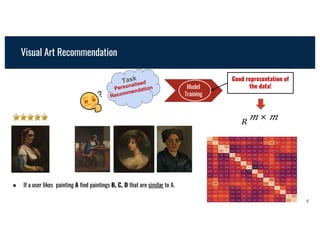

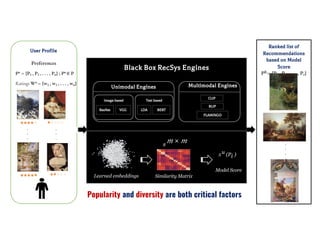

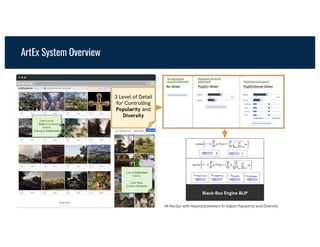

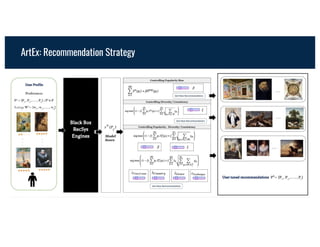

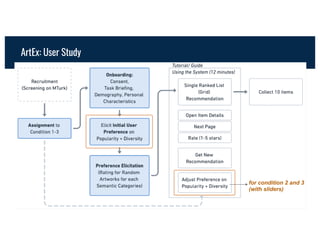

IntRS 2025 presentation at RecSys 2025. Abstract: Recommender Systems (RecSys) have transformed personalized applications by delivering tailored content and

experiences. However, modern Deep Learning RecSys often operate as opaque “black boxes,” offering users no

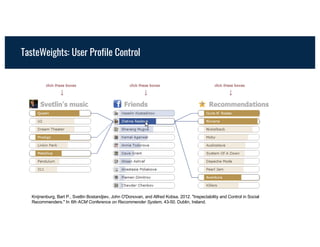

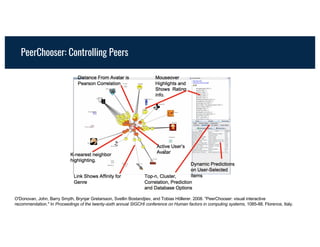

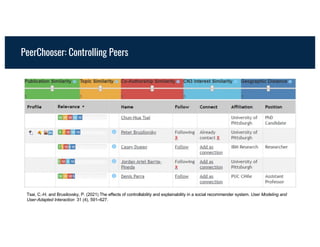

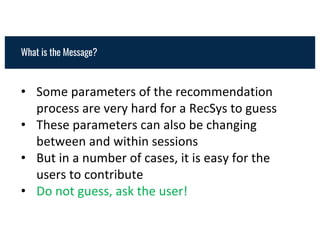

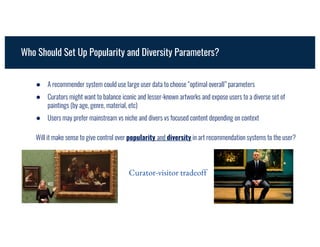

control over how personalization is shaped. We introduce a novel algorithmic approach to bridge this gap in the

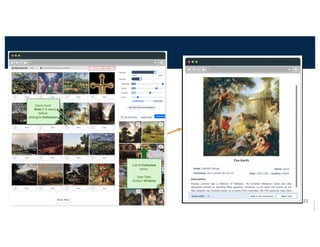

context of visual art recommendation by integrating user agency directly into the RecSys engines. By allowing

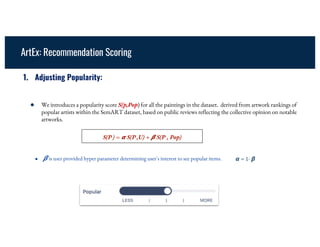

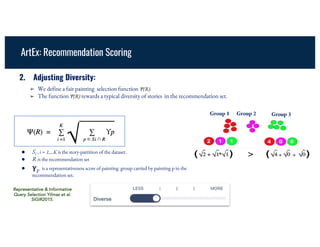

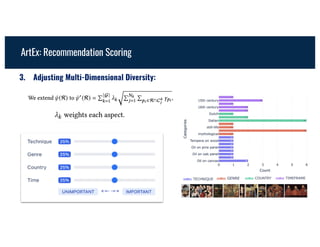

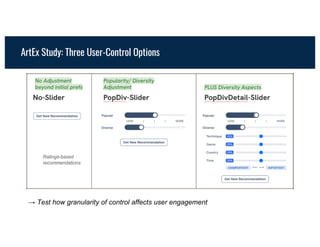

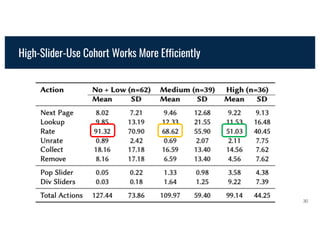

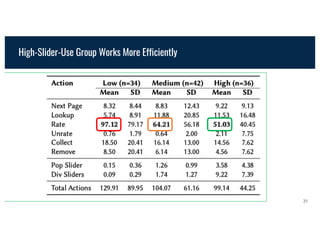

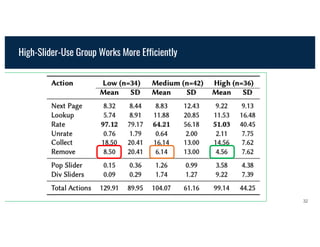

users to dynamically adjust facets such as content diversity and popularity, through the use of hyperparameters

implemented as sliders, the system creates a feedback loop where users can actively tune recommendations

while also helping the system to learn about their preferences. This approach ensures that personalization is not

only algorithmically optimized but also user-driven, fostering a balance between automation and human control.

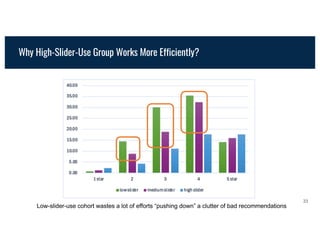

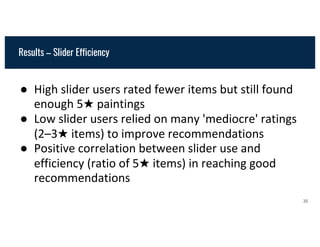

The results of a large-scale user study (n=151) evidenced that sliders enhance engagement and recommendation

quality by promoting meaningful exploration.
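The abstract does not specify how the slider values enter the ranking. As one illustrative possibility, and not the authors' method, the sketch below treats a diversity slider and a popularity slider as weights in a greedy, MMR-style (Maximal Marginal Relevance) re-ranker applied on top of a model's relevance scores. Everything here is a hypothetical assumption: the `Artwork` record, the `rerank` function, the slider ranges (`diversity` in [0, 1], `popularity` in [-1, 1]), and the use of content embeddings with cosine similarity to measure redundancy.

```python
import math
from dataclasses import dataclass


@dataclass
class Artwork:
    # Hypothetical item record: id, model relevance score, popularity
    # normalized to [0, 1], and a content embedding (e.g., visual features).
    item_id: str
    relevance: float
    popularity: float
    embedding: list[float]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def rerank(candidates, k, diversity=0.0, popularity=0.0):
    """Greedy MMR-style re-ranking steered by two hypothetical slider values.

    diversity  in [0, 1]:  0 ranks by relevance only; 1 ranks by novelty only,
                           i.e. dissimilarity to items already selected.
    popularity in [-1, 1]: negative favors niche items, positive favors popular ones.
    """
    if not 0.0 <= diversity <= 1.0 or not -1.0 <= popularity <= 1.0:
        raise ValueError("slider value out of range")
    selected = []
    remaining = list(candidates)
    while remaining and len(selected) < k:
        def score(item):
            # Redundancy = similarity to the closest already-selected item.
            redundancy = max(
                (cosine(item.embedding, s.embedding) for s in selected),
                default=0.0,
            )
            base = (1.0 - diversity) * item.relevance - diversity * redundancy
            return base + popularity * item.popularity

        best = max(remaining, key=score)
        selected.append(best)
        remaining.remove(best)
    return [item.item_id for item in selected]


# Toy pool: "a" and "b" are near-duplicates; "c" is dissimilar and niche.
pool = [
    Artwork("a", 0.9, 0.8, [1.0, 0.0]),
    Artwork("b", 0.85, 0.7, [0.99, 0.1]),
    Artwork("c", 0.6, 0.1, [0.0, 1.0]),
]
print(rerank(pool, k=2))                   # ['a', 'b']  (sliders at neutral)
print(rerank(pool, k=2, diversity=0.7))    # ['a', 'c']  (near-duplicate "b" pushed out)
print(rerank(pool, k=2, popularity=-1.0))  # ['c', 'b']  (niche items favored)
```

Applying the sliders at re-ranking time, rather than inside model training, means a slider change can refresh the list immediately without retraining; logging the slider values a user settles on is one way such a system could feed them back as preference signals.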