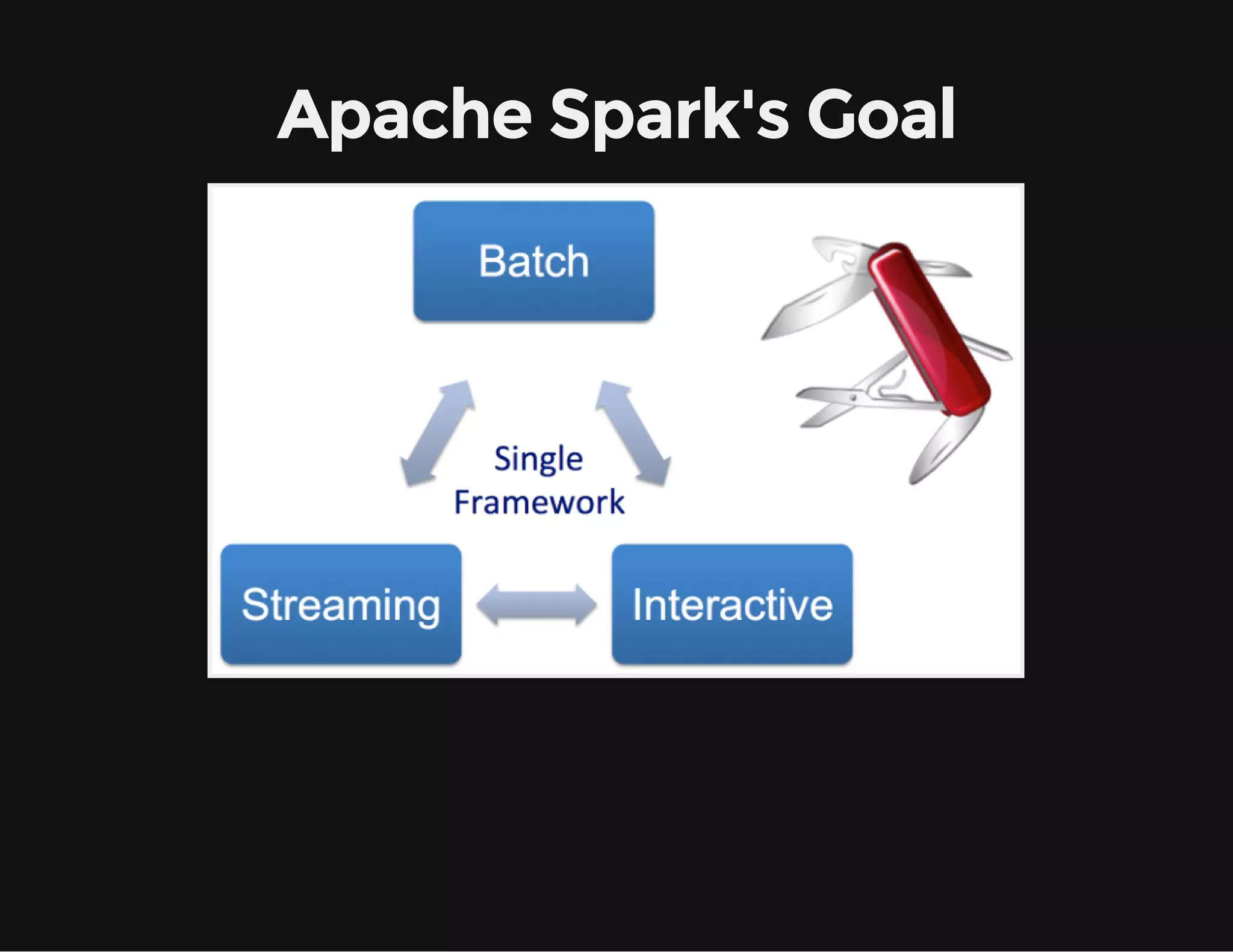

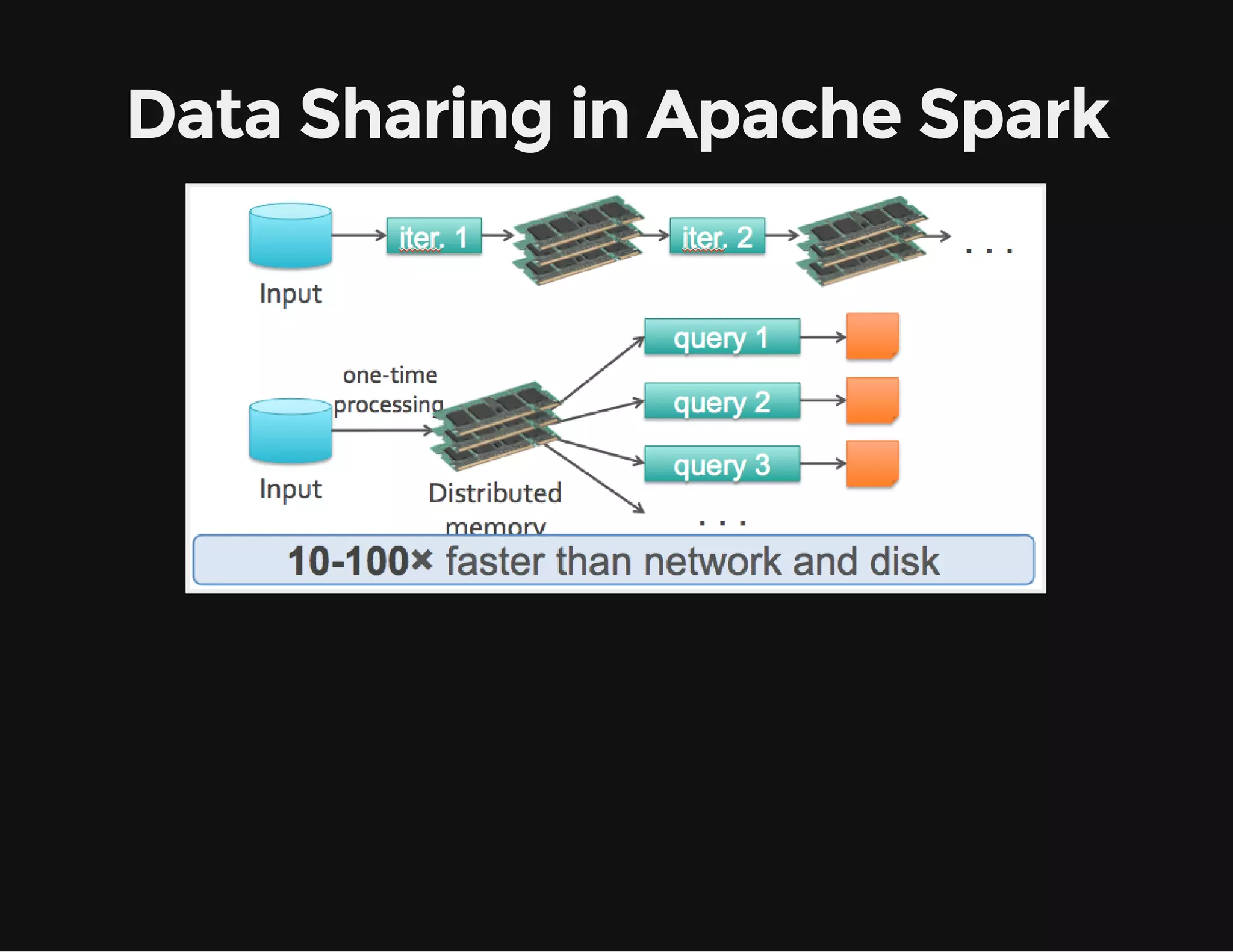

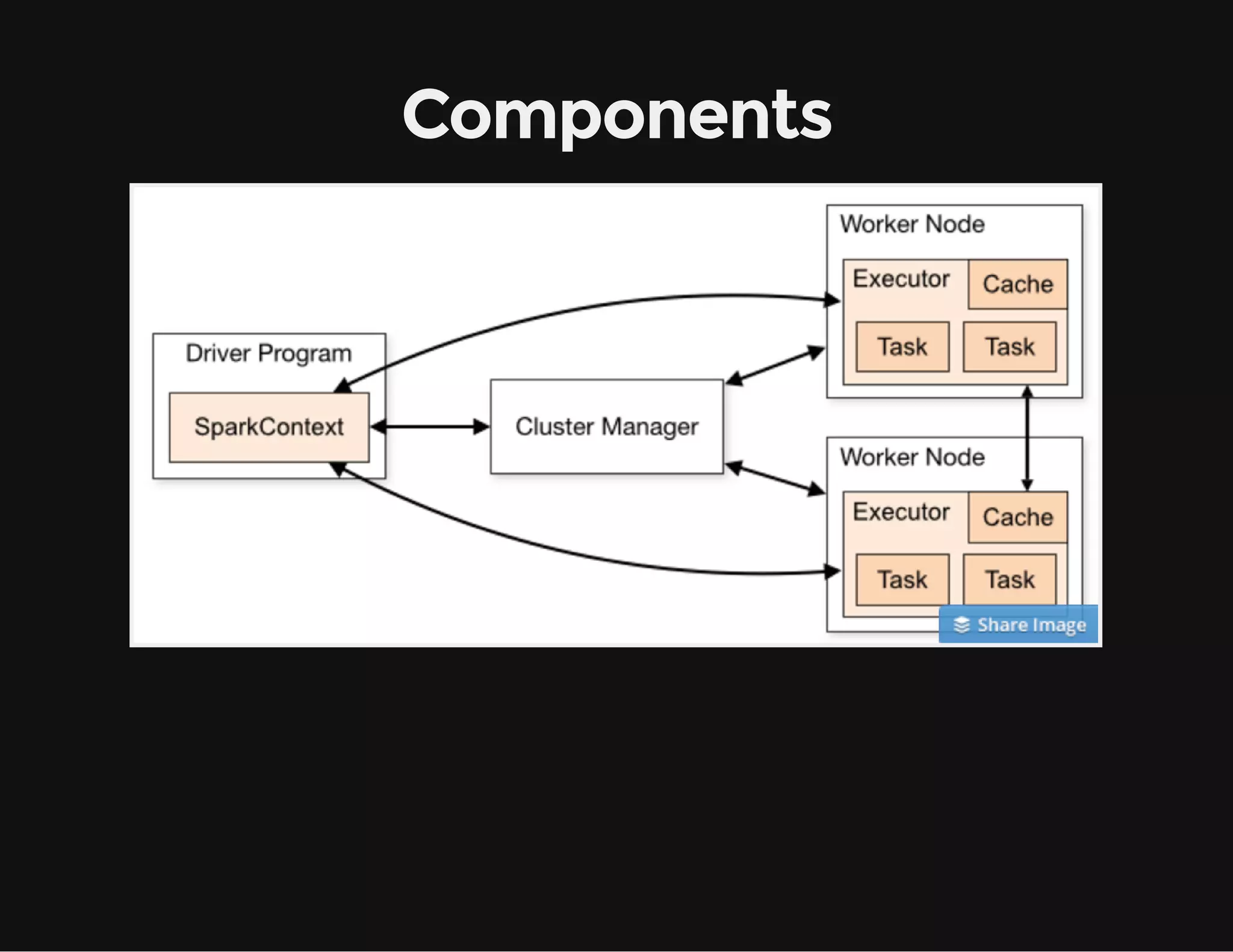

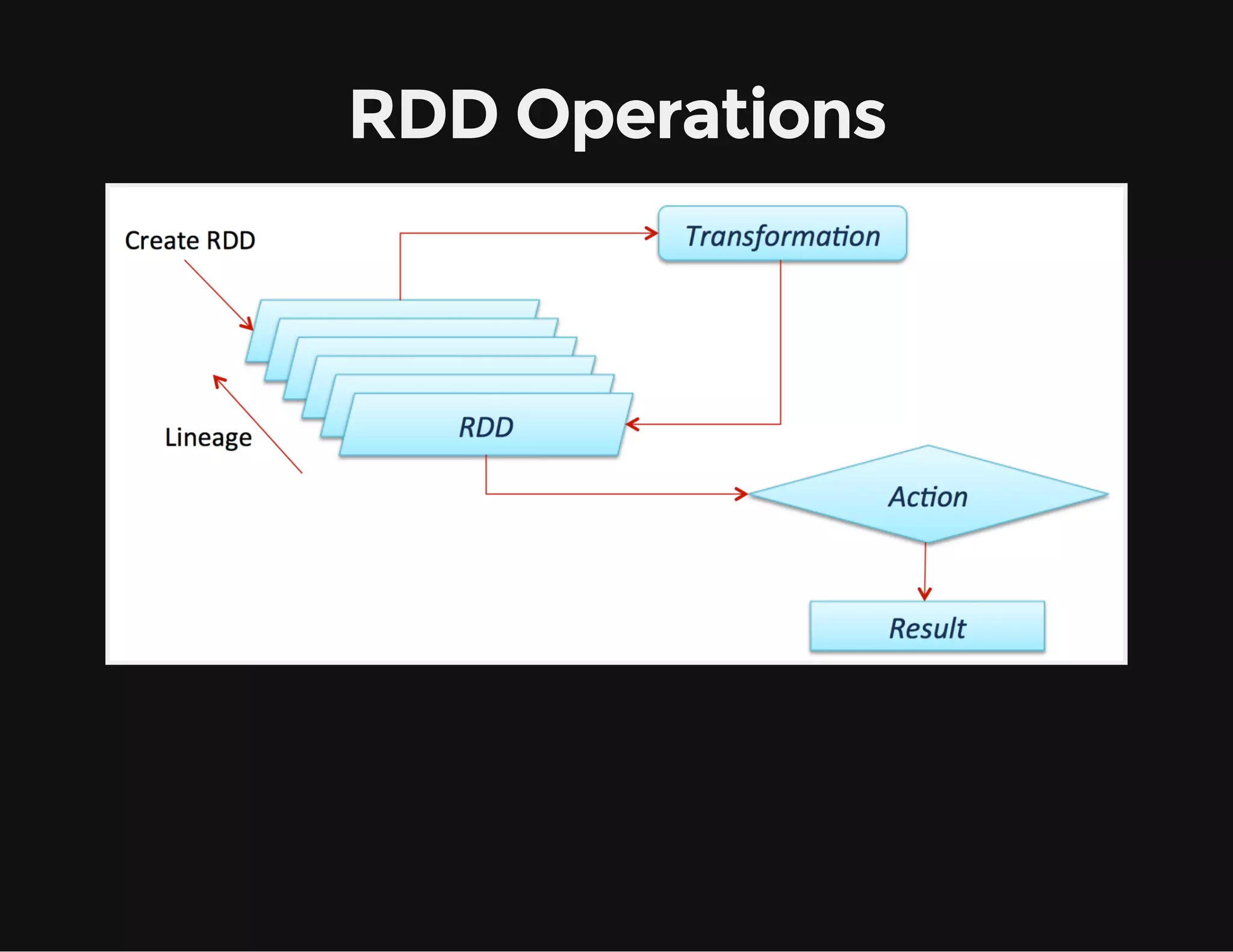

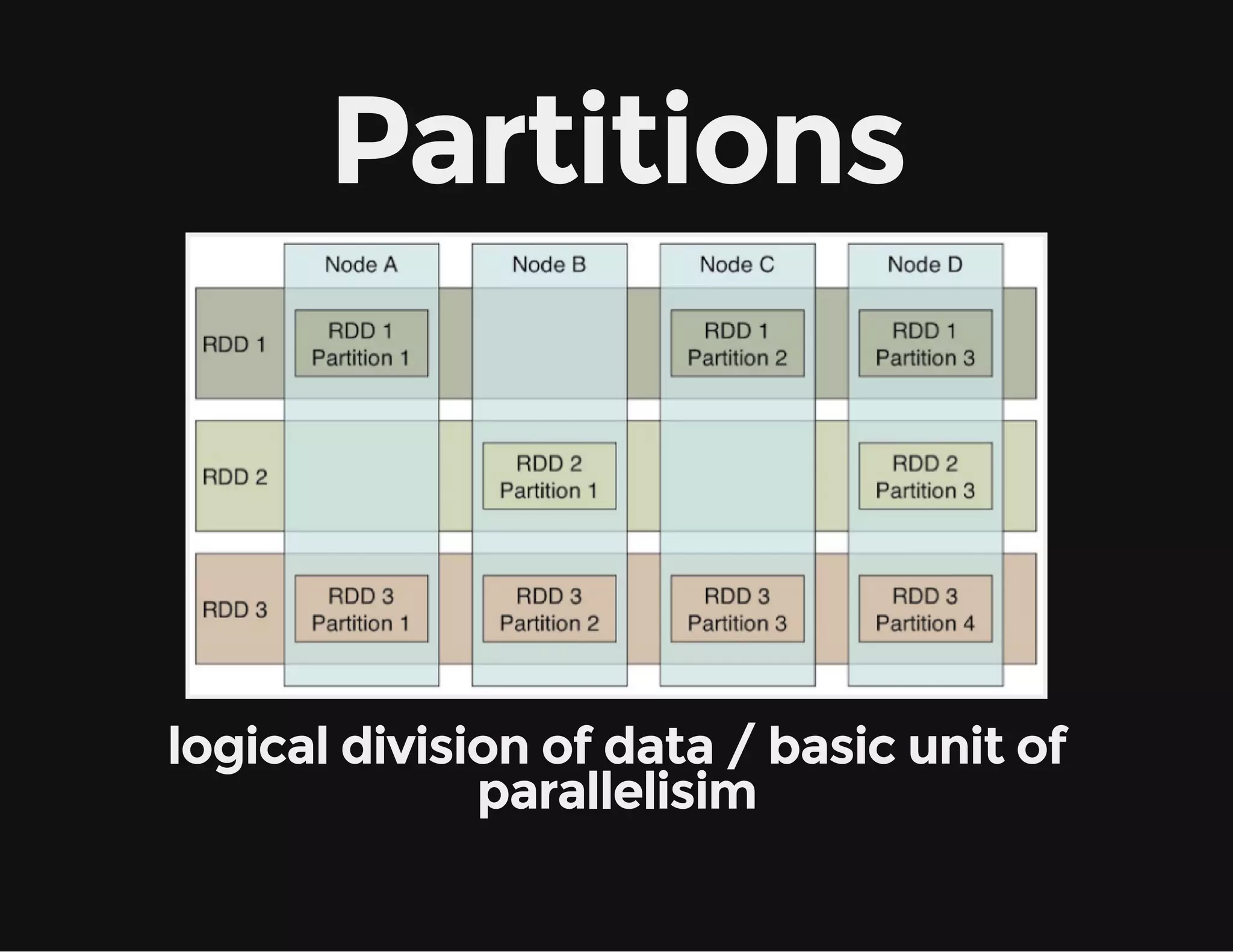

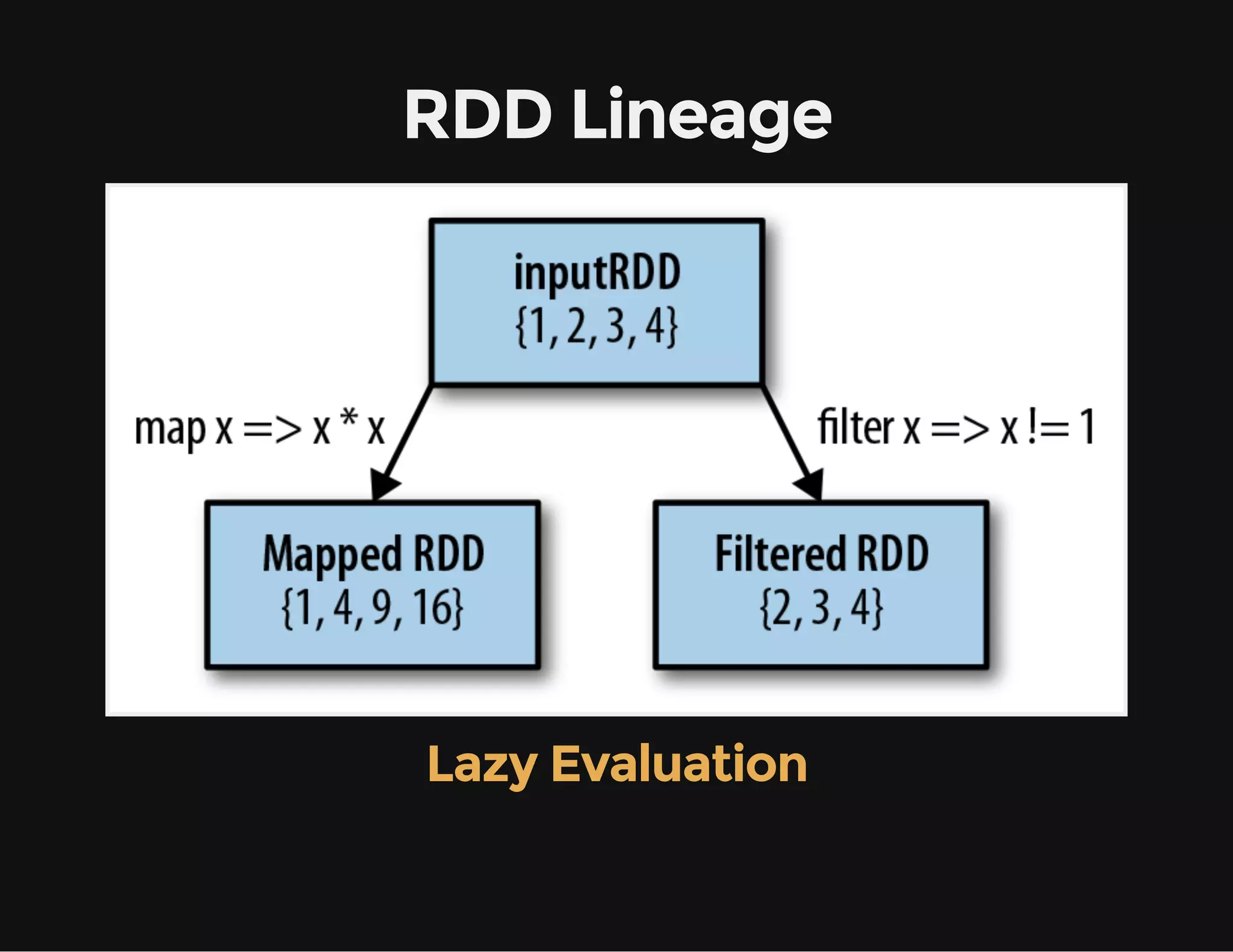

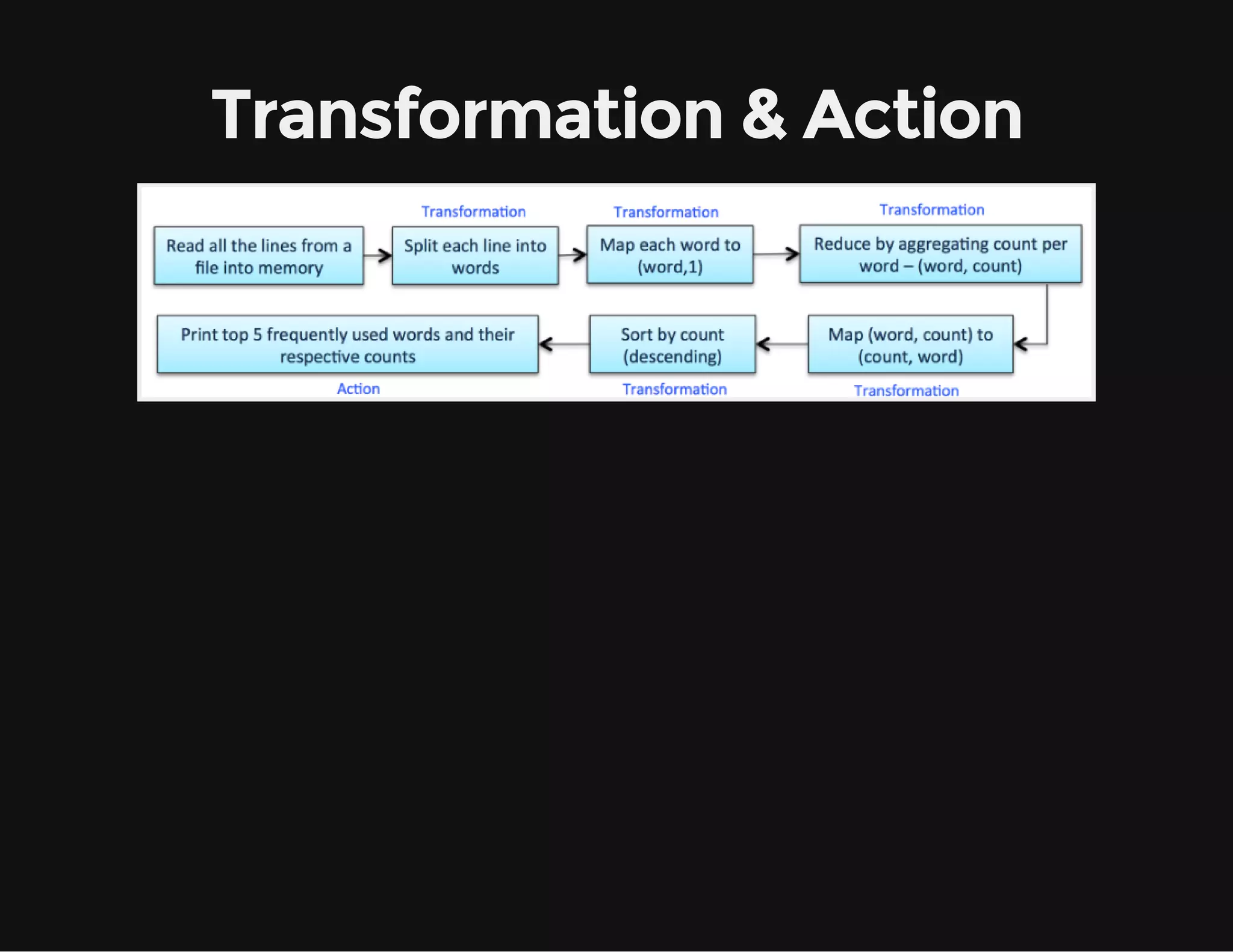

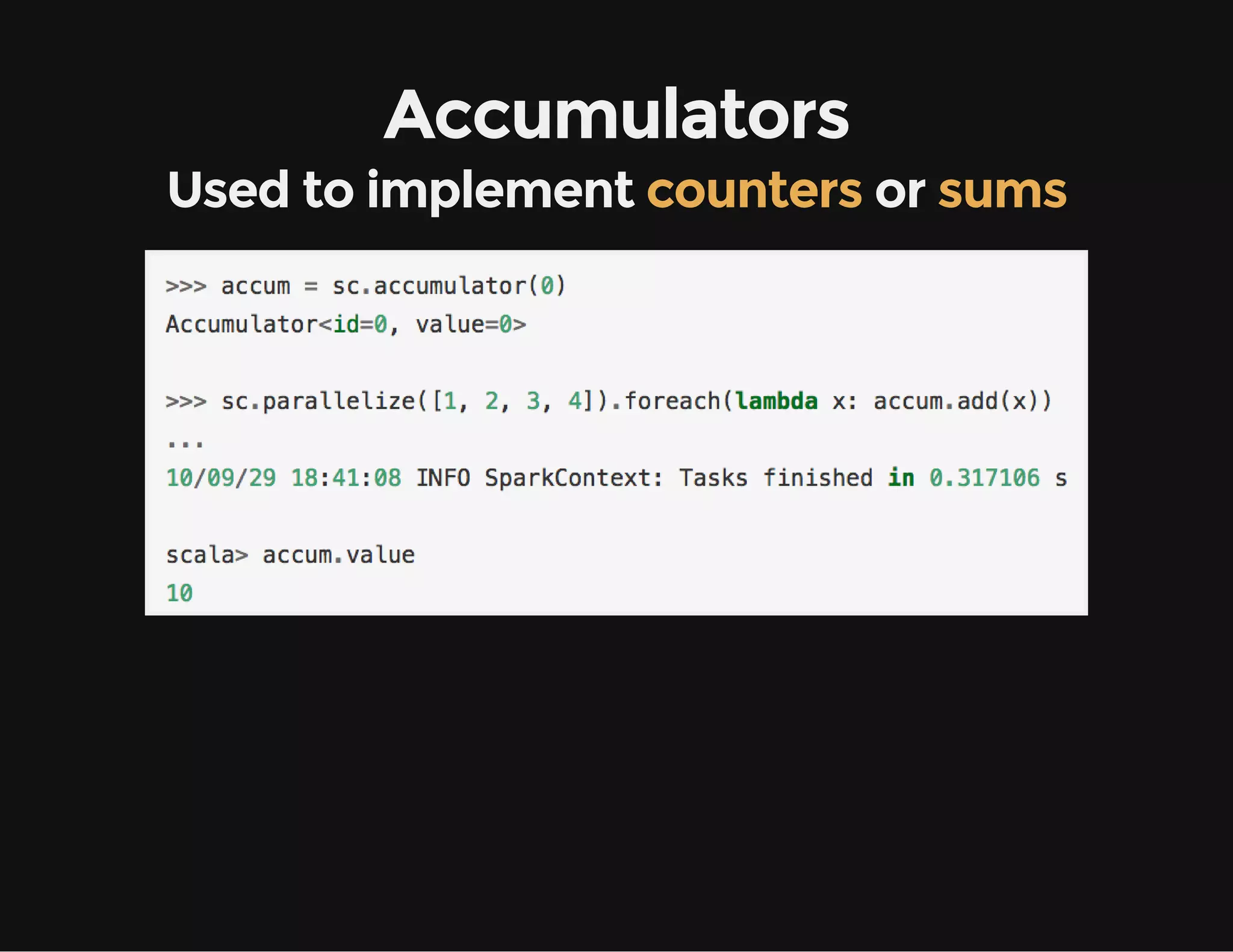

This document gives an overview of Apache Spark, whose goal is to provide a fast, general engine for large-scale data processing. It covers Spark's programming model, core concepts such as RDDs and DAGs, and how to initialize and deploy Spark on a cluster. Key topics include RDDs as Spark's fundamental data structure, the distinction between transformations and actions, and storage levels for caching data in memory or on disk.
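
The split between transformations and actions is easier to see in a small sketch. The code below is a toy model in plain Python, not Spark's API: the `ToyRDD` class, its `lineage` list, and the `square` helper are hypothetical names introduced only for illustration. It assumes the usual reading of the terms above: transformations only record work to be done, actions trigger the computation, and caching keeps a computed result for reuse. The `cache` method here models in-memory storage only, so it does not cover the other storage levels.

```python
from typing import Callable, Iterable, Iterator, List, Optional


class ToyRDD:
    """Toy stand-in for an RDD (hypothetical, not Spark's API)."""

    def __init__(self, source: Callable[[], Iterable], lineage: Optional[List[str]] = None):
        self._source = source                  # zero-argument function that (re)produces the data
        self.lineage = lineage or ["source"]   # names of the steps that lead to this dataset
        self._persist = False                  # set by cache()
        self._cached: Optional[list] = None    # filled on the first action after cache()

    # Transformations: return a new ToyRDD and compute nothing yet.
    def map(self, fn: Callable) -> "ToyRDD":
        return ToyRDD(lambda: (fn(x) for x in self._iterate()), self.lineage + ["map"])

    def filter(self, pred: Callable) -> "ToyRDD":
        return ToyRDD(lambda: (x for x in self._iterate() if pred(x)), self.lineage + ["filter"])

    def cache(self) -> "ToyRDD":
        # Only marks the dataset; the result is stored when an action first runs.
        self._persist = True
        return self

    # Actions: walk the recorded steps and produce a concrete result.
    def collect(self) -> list:
        return list(self._iterate())

    def count(self) -> int:
        return sum(1 for _ in self._iterate())

    def _iterate(self) -> Iterator:
        if self._cached is not None:
            return iter(self._cached)          # reuse the stored result
        data = self._source()
        if self._persist:
            self._cached = list(data)          # materialize once, keep in memory
            return iter(self._cached)
        return iter(data)


calls = []  # records every element that square() actually processes


def square(x: int) -> int:
    calls.append(x)
    return x * x


numbers = ToyRDD(lambda: range(1, 6))
evens = numbers.map(square).filter(lambda v: v % 2 == 0).cache()
calls_before_action = list(calls)  # still empty: only transformations so far

result = evens.collect()           # first action: runs map and filter, fills the cache
total = evens.count()              # second action: served from the cached result
```

In this sketch, `square` runs only when `collect` is called, and the later `count` does not run it again because the filtered result was cached. Without the `cache` call, each action would recompute the whole chain from the source.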