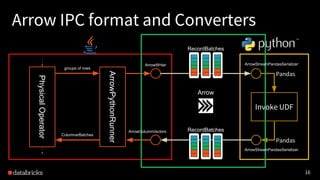

The document discusses Apache Arrow and Pandas UDF on Apache Spark. It provides an overview of PySpark and Pandas, describes Python UDF and the new Pandas UDF feature, and explains how Pandas UDFs use Apache Arrow for efficient serialization and communication between the JVM and Python workers. The document outlines the physical operators implemented for different Pandas UDF types and the ongoing work to improve performance and functionality.

![Slide 18](https://image.slidesharecdn.com/apachearrowandpandasudf-181208061748/85/Apache-Arrow-and-Pandas-UDF-on-Apache-Spark-18-320.jpg)

Arrow Converters in Spark

In Java/Scala:

• ArrowWriter [src]

– A wrapper for writing VectorSchemaRoot and ValueVectors

• ArrowColumnVector [src]

– A wrapper for reading ValueVectors, works with ColumnarBatch

In Python:

• ArrowStreamPandasSerializer [src]

– A wrapper for RecordBatchReader and RecordBatchWriter

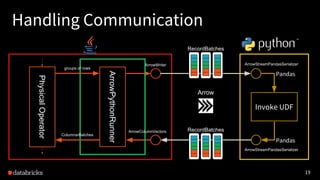

![Slide 20](https://image.slidesharecdn.com/apachearrowandpandasudf-181208061748/85/Apache-Arrow-and-Pandas-UDF-on-Apache-Spark-20-320.jpg)

Handling Communication

ArrowPythonRunner [src]

• Handle the communication between JVM and the Python worker

• Create or reuse a Python worker

• Open a Socket to communicate

• Write data to the socket with ArrowWriter in a separate thread

• Read data from the socket

• Return an iterator of ColumnarBatch of ArrowColumnVectors
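The pattern described above (write input on a separate thread, read results on the calling thread, expose them as an iterator) can be sketched with the Python standard library alone. This is only an analogy for the JVM-side runner, not Spark's implementation: the length-prefixed framing, the `END` marker, and all function names are assumptions made for the example, and the "worker" simply upper-cases each payload. One common reason for a dedicated writer thread is that writing and reading on the same thread can deadlock once both socket buffers fill up.

```python
import socket
import struct
import threading

END = -1  # assumed end-of-stream marker for this sketch


def send_msg(sock, payload):
    # Frame each message as a 4-byte big-endian length plus the payload.
    sock.sendall(struct.pack(">i", len(payload)) + payload)


def send_end(sock):
    sock.sendall(struct.pack(">i", END))


def recv_exact(sock, n):
    chunks = []
    while n > 0:
        chunk = sock.recv(n)
        if not chunk:
            raise EOFError("socket closed early")
        chunks.append(chunk)
        n -= len(chunk)
    return b"".join(chunks)


def recv_msgs(sock):
    # Yield payloads until the end marker arrives.
    while True:
        (size,) = struct.unpack(">i", recv_exact(sock, 4))
        if size == END:
            return
        yield recv_exact(sock, size)


def worker(sock):
    # Stand-in for the Python worker: transform each batch and send it back.
    for msg in recv_msgs(sock):
        send_msg(sock, msg.upper())
    send_end(sock)
    sock.close()


def run(batches):
    """Send batches to the worker and return an iterator over its results."""
    runner_sock, worker_sock = socket.socketpair()
    threading.Thread(target=worker, args=(worker_sock,), daemon=True).start()

    def write_input():
        for batch in batches:
            send_msg(runner_sock, batch)
        send_end(runner_sock)

    # Input is written on its own thread so reading can proceed concurrently.
    writer = threading.Thread(target=write_input, daemon=True)
    writer.start()
    try:
        yield from recv_msgs(runner_sock)
        writer.join()
    finally:
        runner_sock.close()


results = list(run([b"a", b"bc"]))
```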

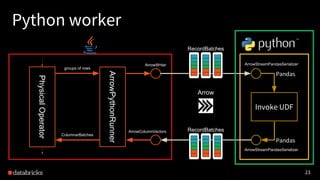

![Slide 24](https://image.slidesharecdn.com/apachearrowandpandasudf-181208061748/85/Apache-Arrow-and-Pandas-UDF-on-Apache-Spark-24-320.jpg)

Python worker

worker.py [src]

• Open a Socket to communicate

• Set up a UDF execution for each PythonUDFType

• Create a map function

– prepare the arguments

– invoke the UDF

– check and return the result

• Execute the map function over the input iterator of Pandas DataFrame

• Write back the results
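The map-function steps listed above can be sketched for the scalar case as follows. This is a simplified illustration, not the contents of `worker.py`: `make_map_function`, `run_worker`, and `arg_offsets` are hypothetical names, `pandas` is assumed to be installed, and the result check mirrors the scalar Pandas UDF rule that the output must have the same length as the input batch.

```python
import pandas as pd


def make_map_function(udf, arg_offsets):
    """Wrap a scalar-style UDF so it can be applied to one input batch."""

    def map_batch(frame):
        # Prepare the arguments: pick the input columns by position.
        args = [frame.iloc[:, offset] for offset in arg_offsets]
        # Invoke the UDF on whole columns rather than row by row.
        result = udf(*args)
        # Check the result before returning it.
        if not isinstance(result, pd.Series):
            raise TypeError("UDF must return a pandas.Series")
        if len(result) != len(frame):
            raise ValueError("UDF result has the wrong length")
        return result

    return map_batch


def run_worker(batches, map_batch):
    # Execute the map function lazily over the input iterator of DataFrames.
    for frame in batches:
        yield map_batch(frame)


batches = iter([
    pd.DataFrame({"x": [1, 2], "y": [10, 20]}),
    pd.DataFrame({"x": [3], "y": [30]}),
])
add = make_map_function(lambda x, y: x + y, arg_offsets=[0, 1])
outputs = [series.tolist() for series in run_worker(batches, add)]
```

Because `run_worker` is a generator, each batch is processed only when the consumer asks for the next result, which keeps memory use bounded by the batch size.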

![Slide 26](https://image.slidesharecdn.com/apachearrowandpandasudf-181208061748/85/Apache-Arrow-and-Pandas-UDF-on-Apache-Spark-26-320.jpg)

Work In Progress

We can track issues related to Pandas UDF.

• [SPARK-22216] Improving PySpark/Pandas interoperability

– 37 subtasks in total

– 3 subtasks are in progress

– 4 subtasks are open

![Slide 27](https://image.slidesharecdn.com/apachearrowandpandasudf-181208061748/85/Apache-Arrow-and-Pandas-UDF-on-Apache-Spark-27-320.jpg)

Work In Progress

• Window Pandas UDF

– [SPARK-24561] User-defined window functions with pandas udf (bounded window)

• Performance Improvement of toPandas -> merged!

– [SPARK-25274] Improve toPandas with Arrow by sending out-of-order record batches

• SparkR

– [SPARK-25981] Arrow optimization for conversion from R DataFrame to Spark DataFrame