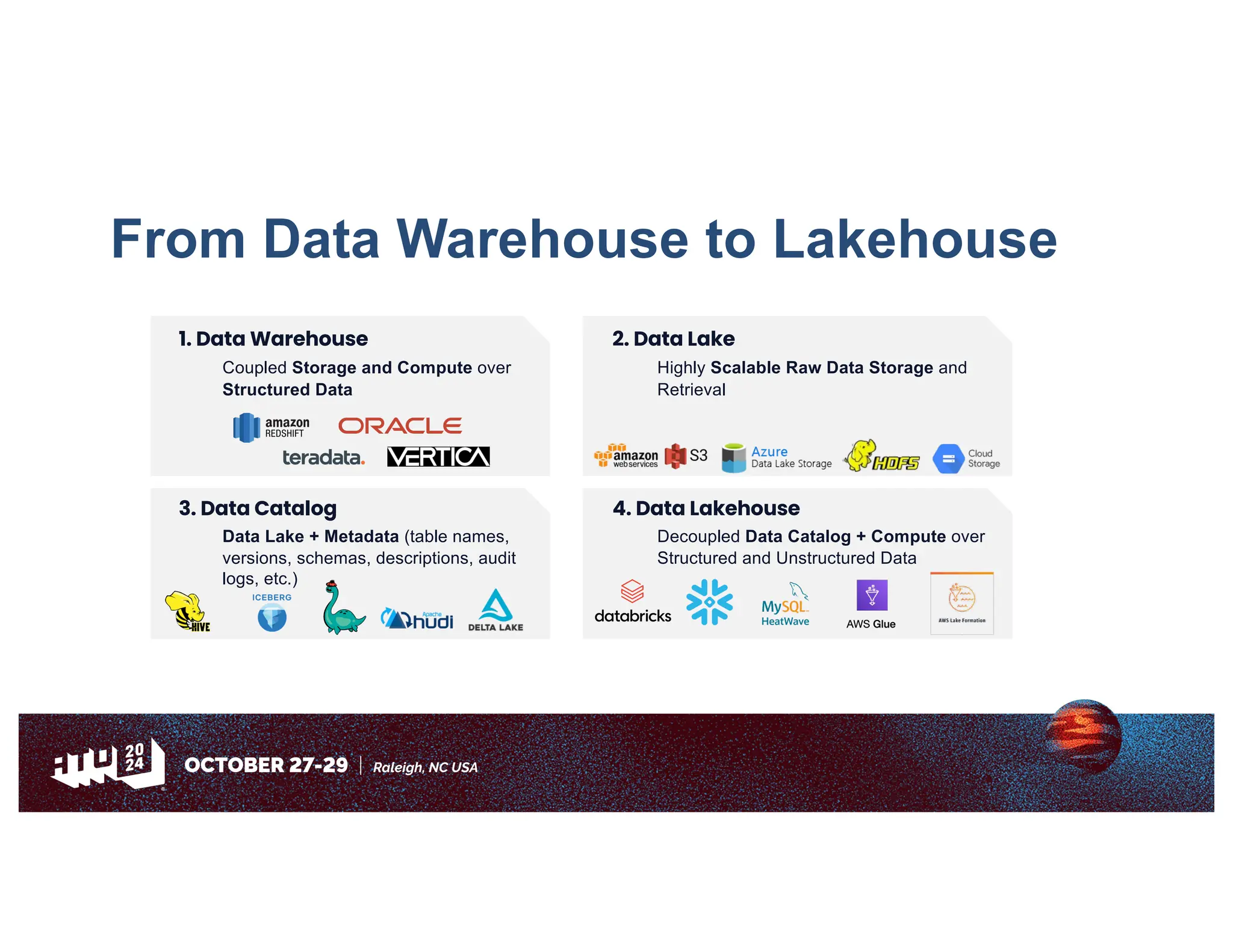

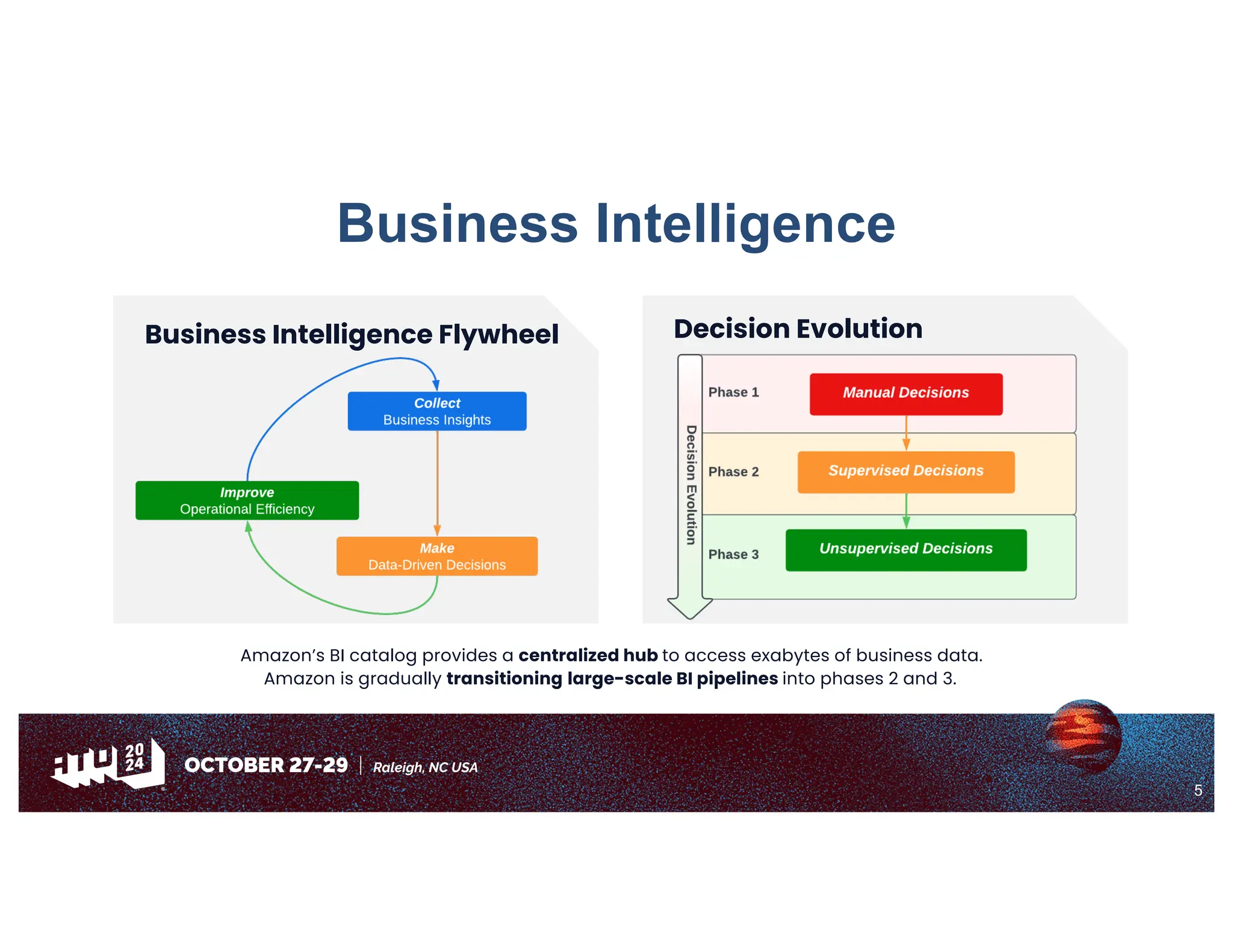

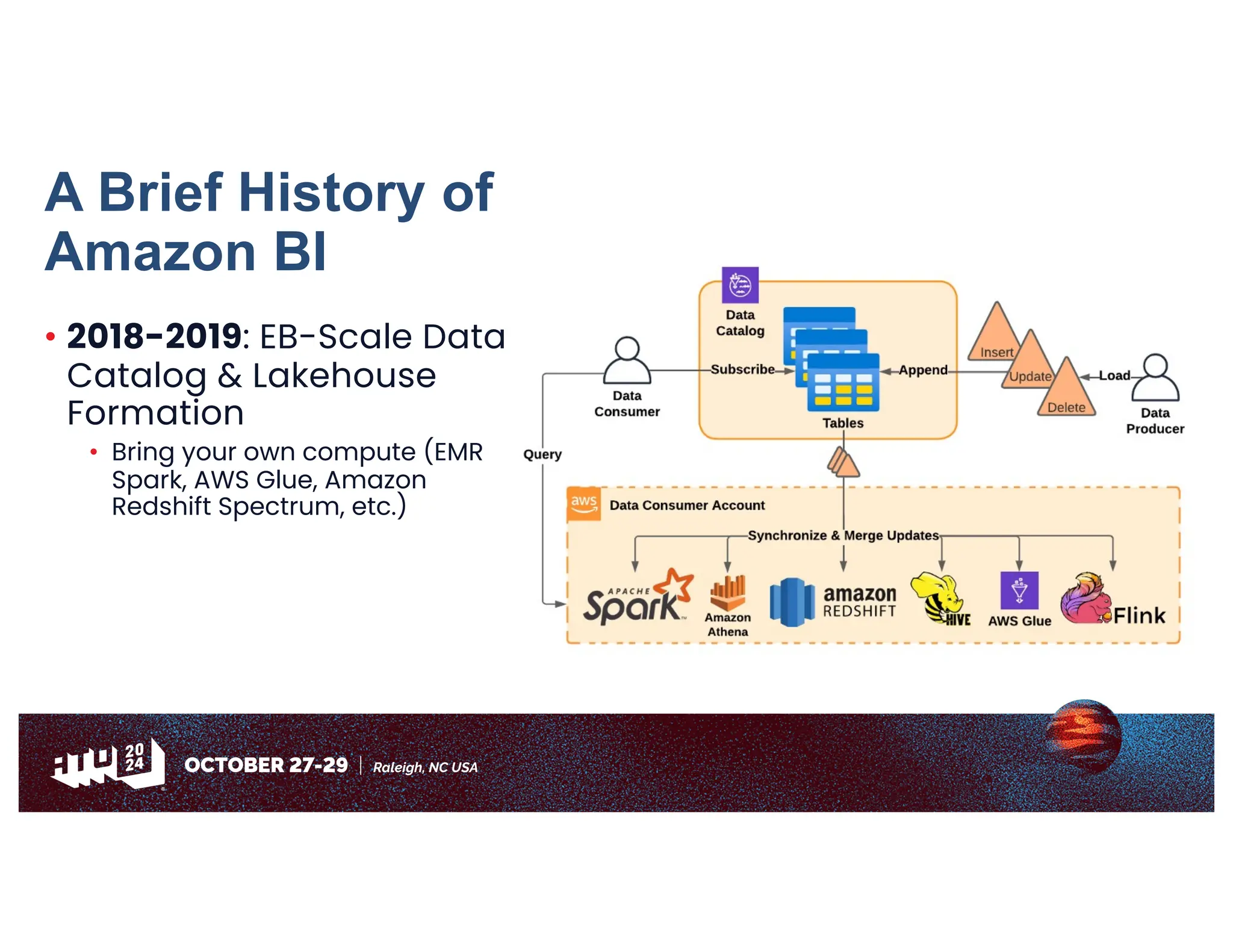

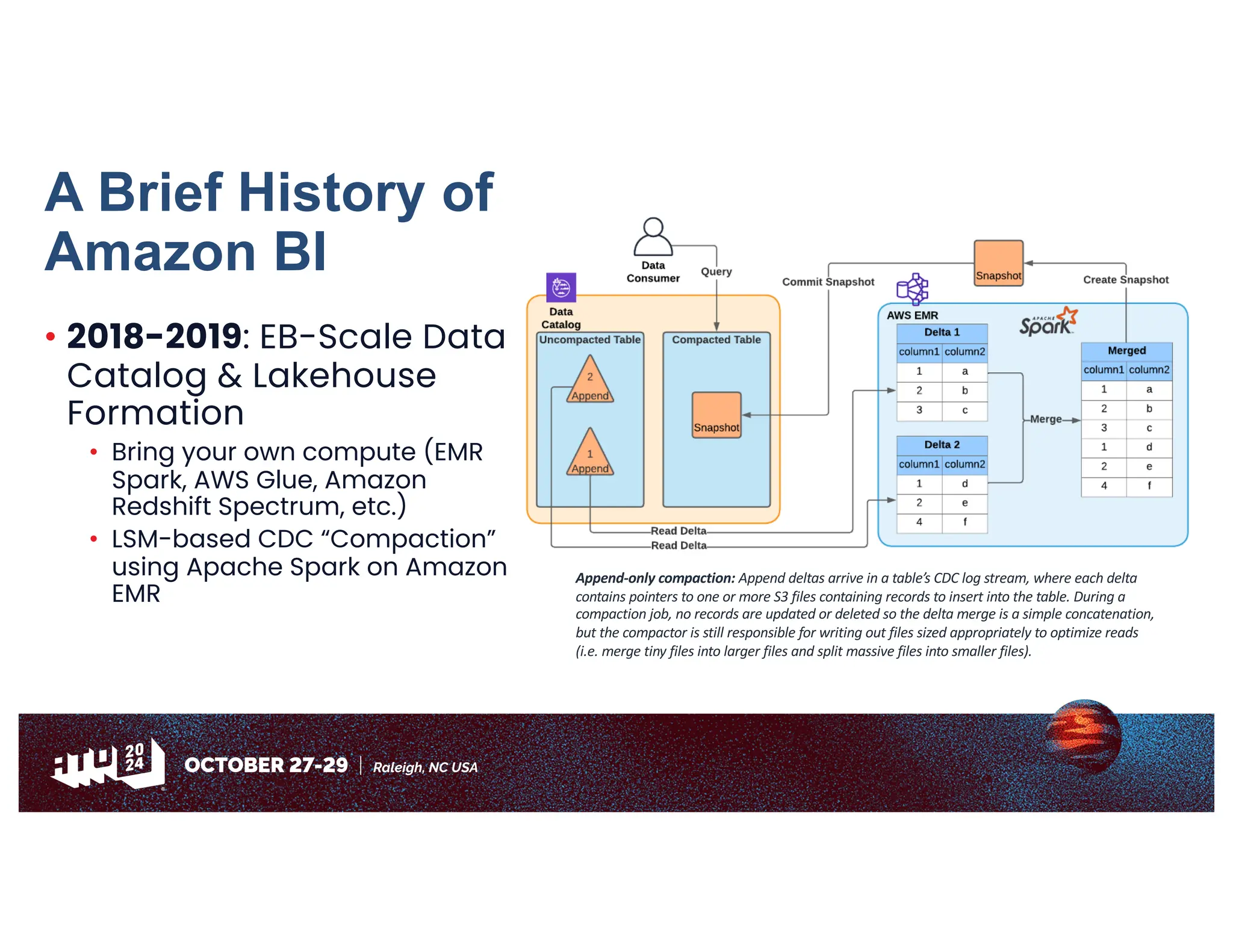

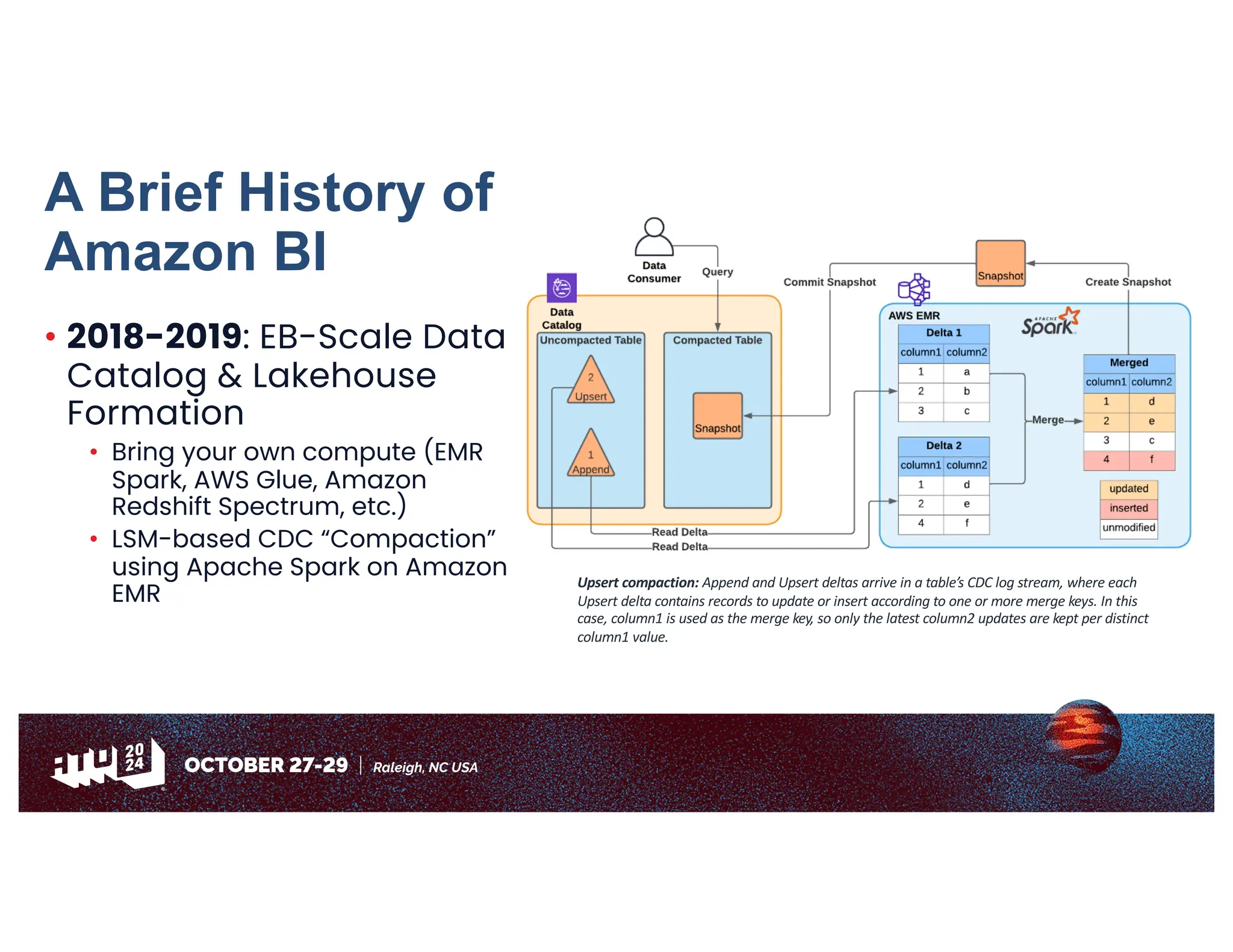

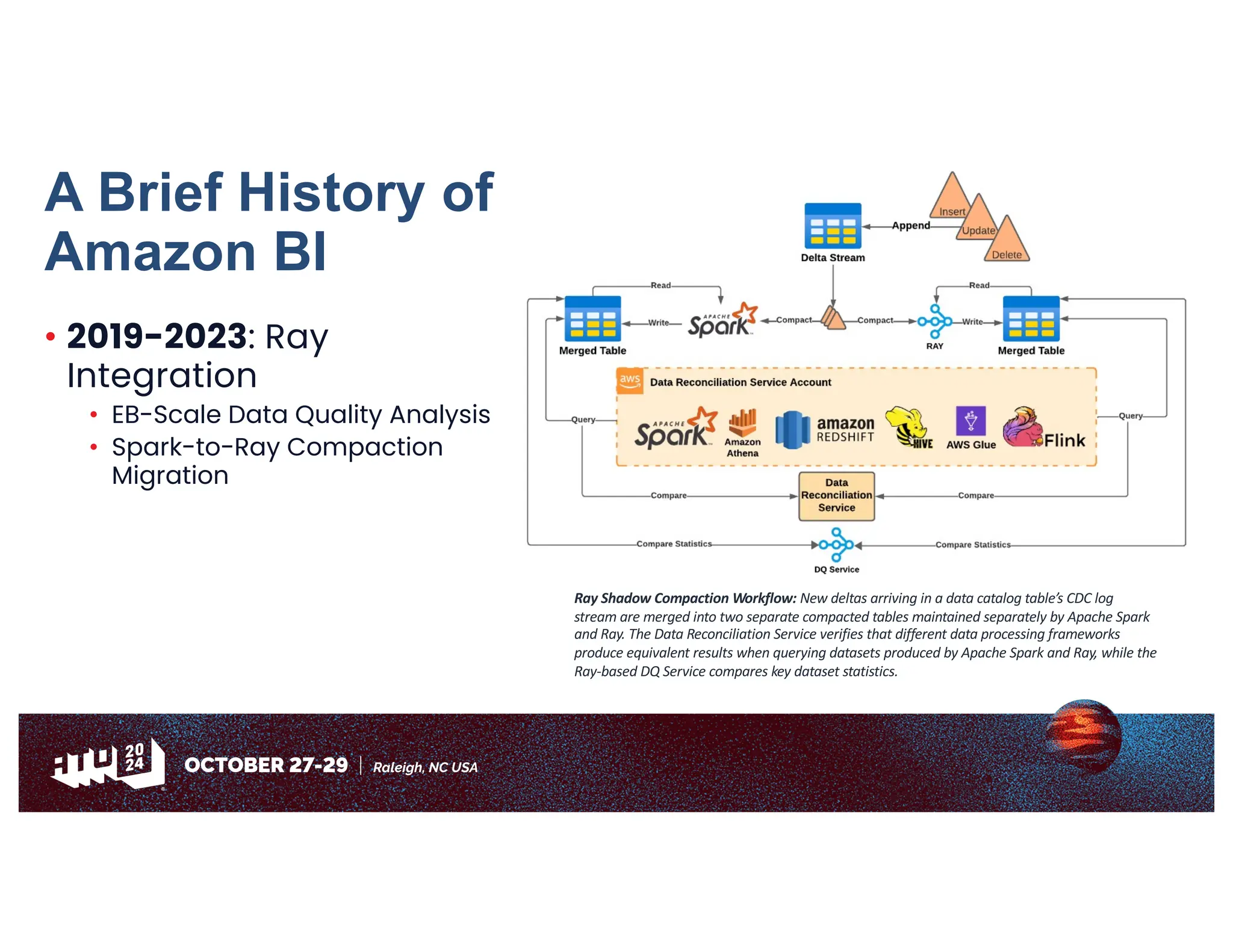

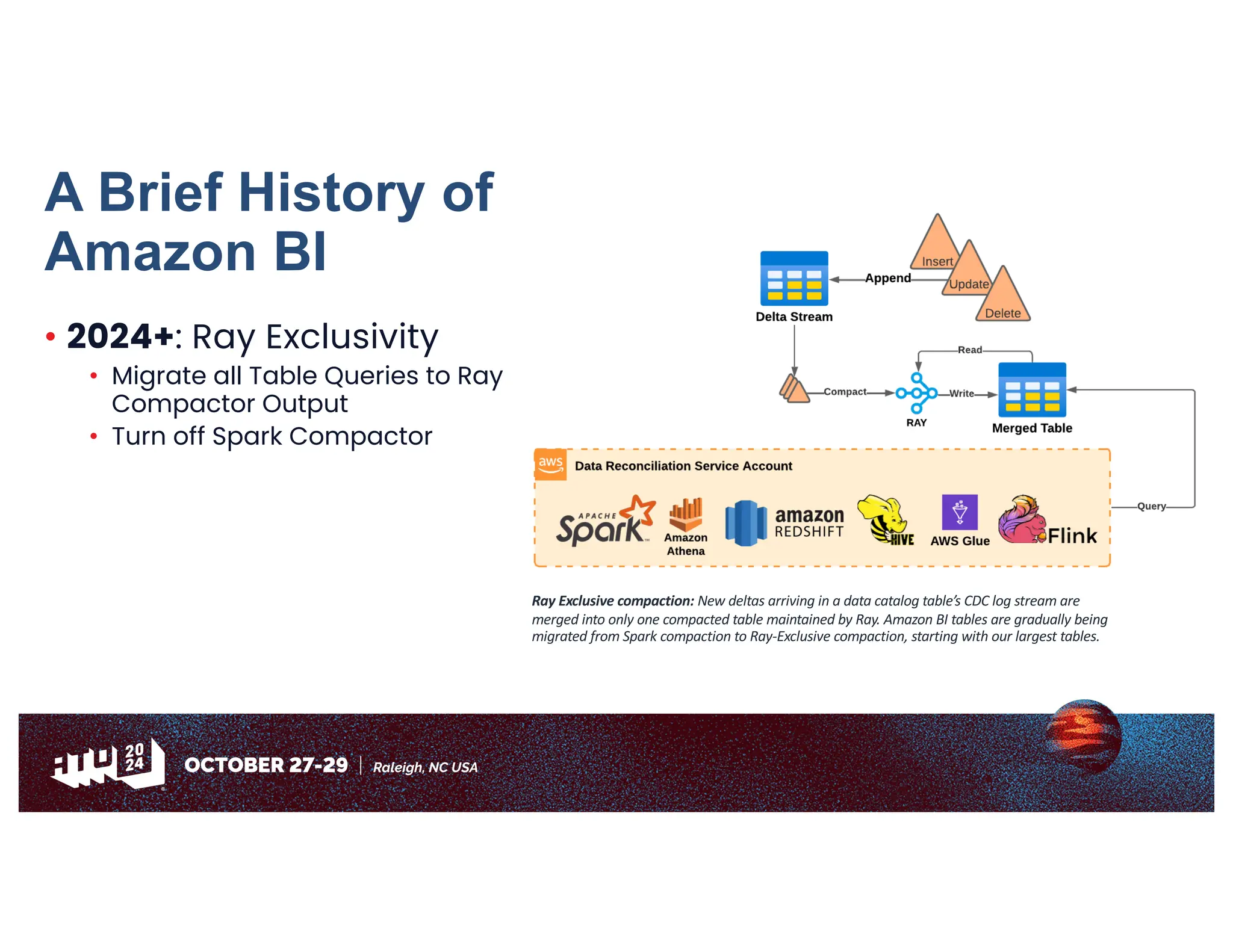

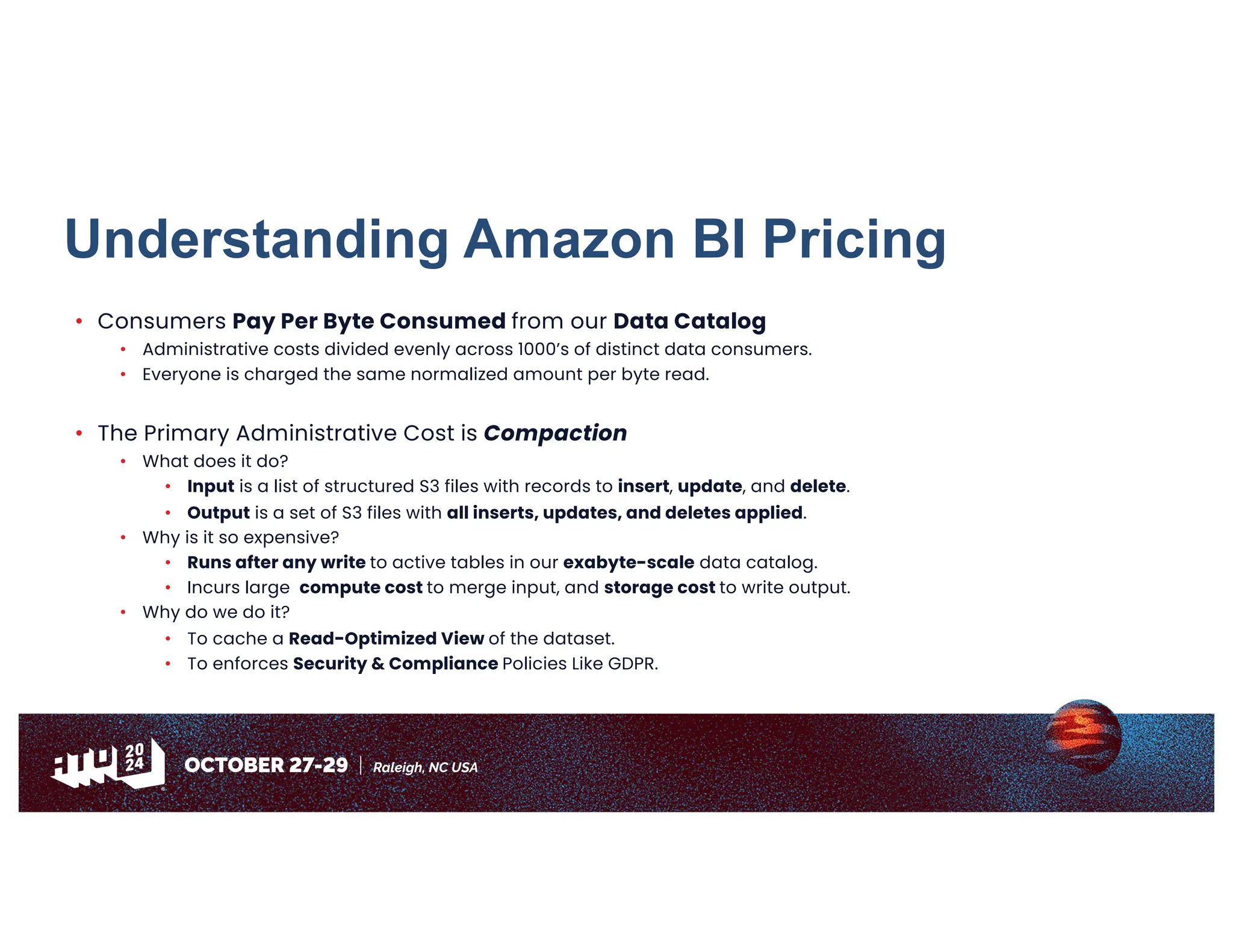

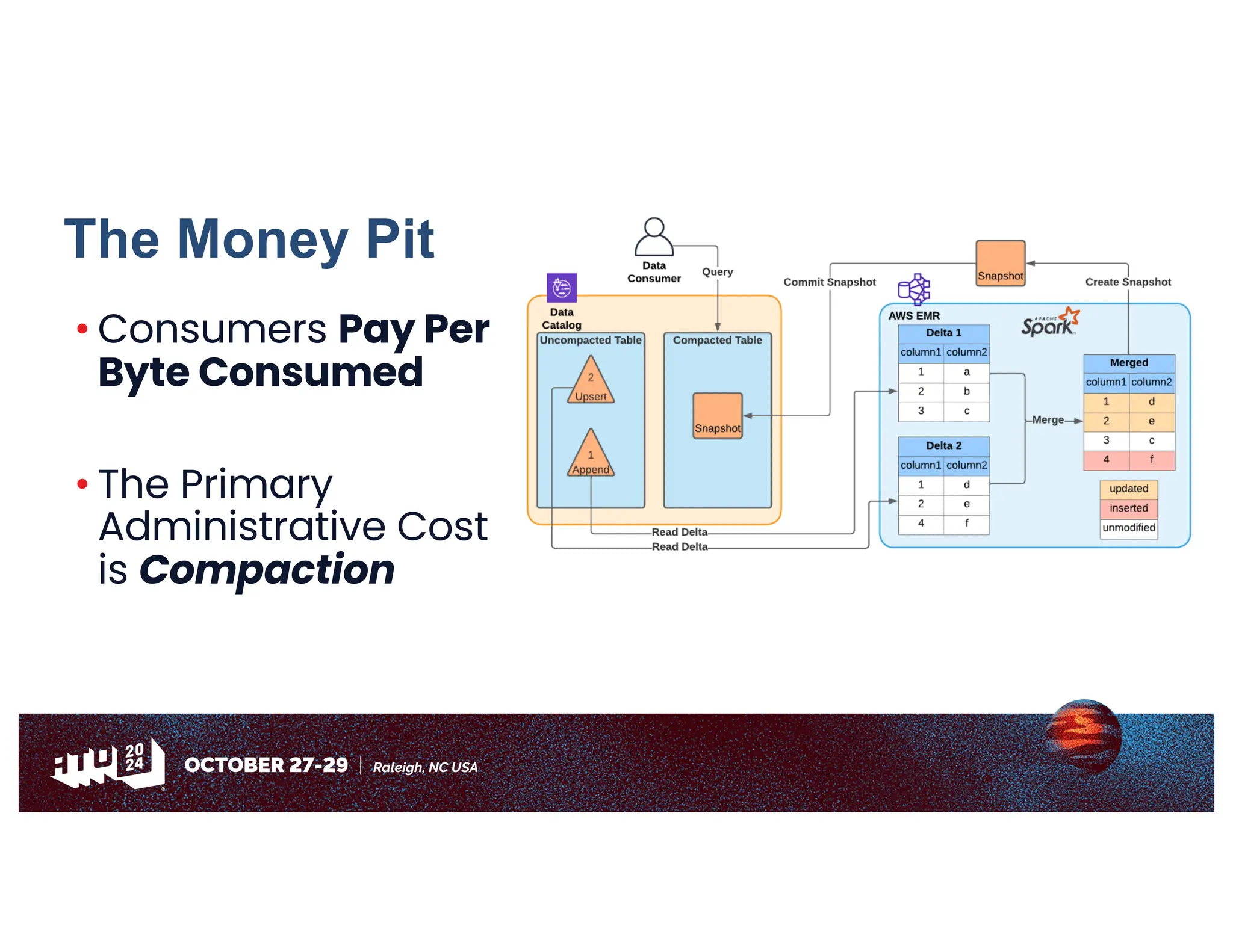

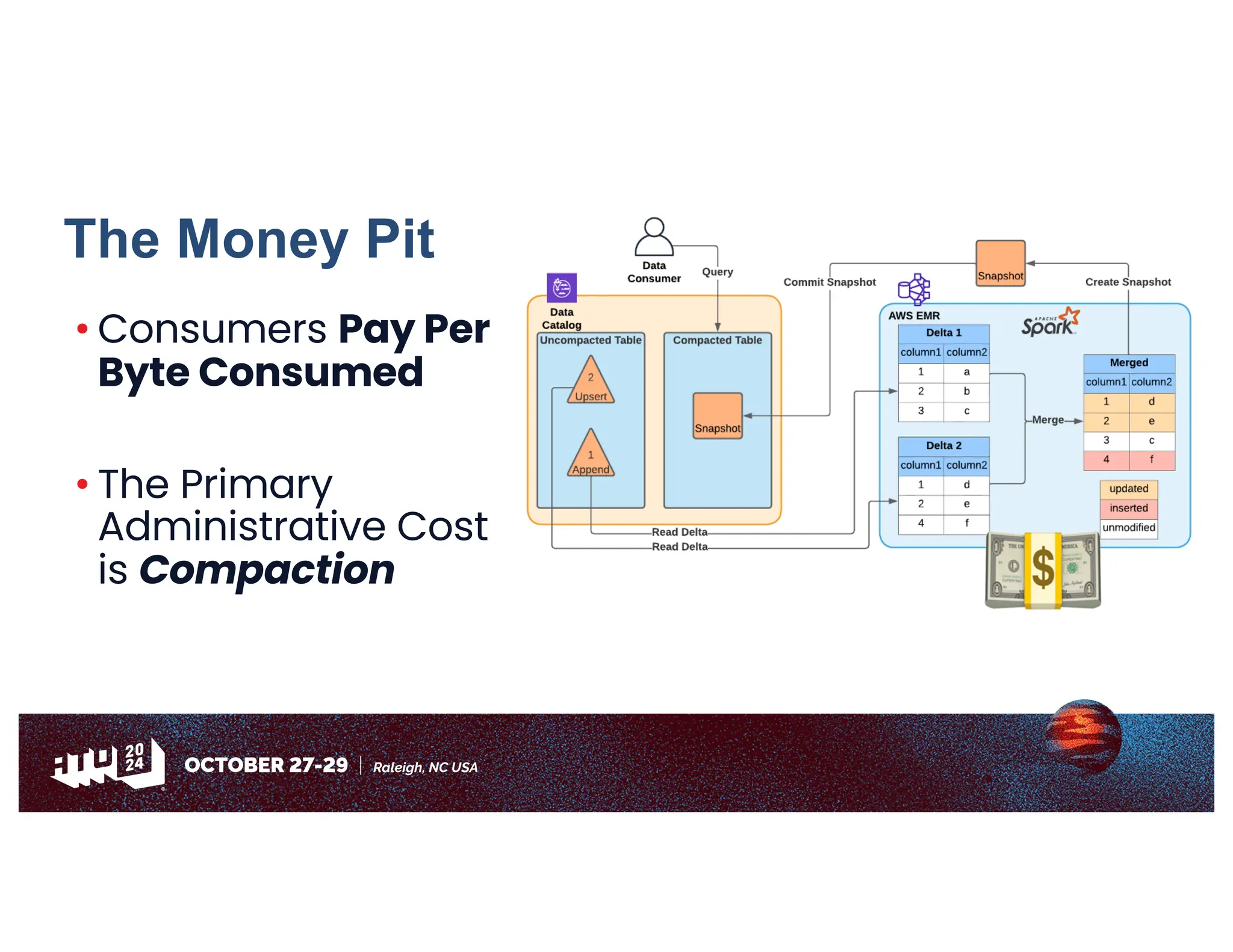

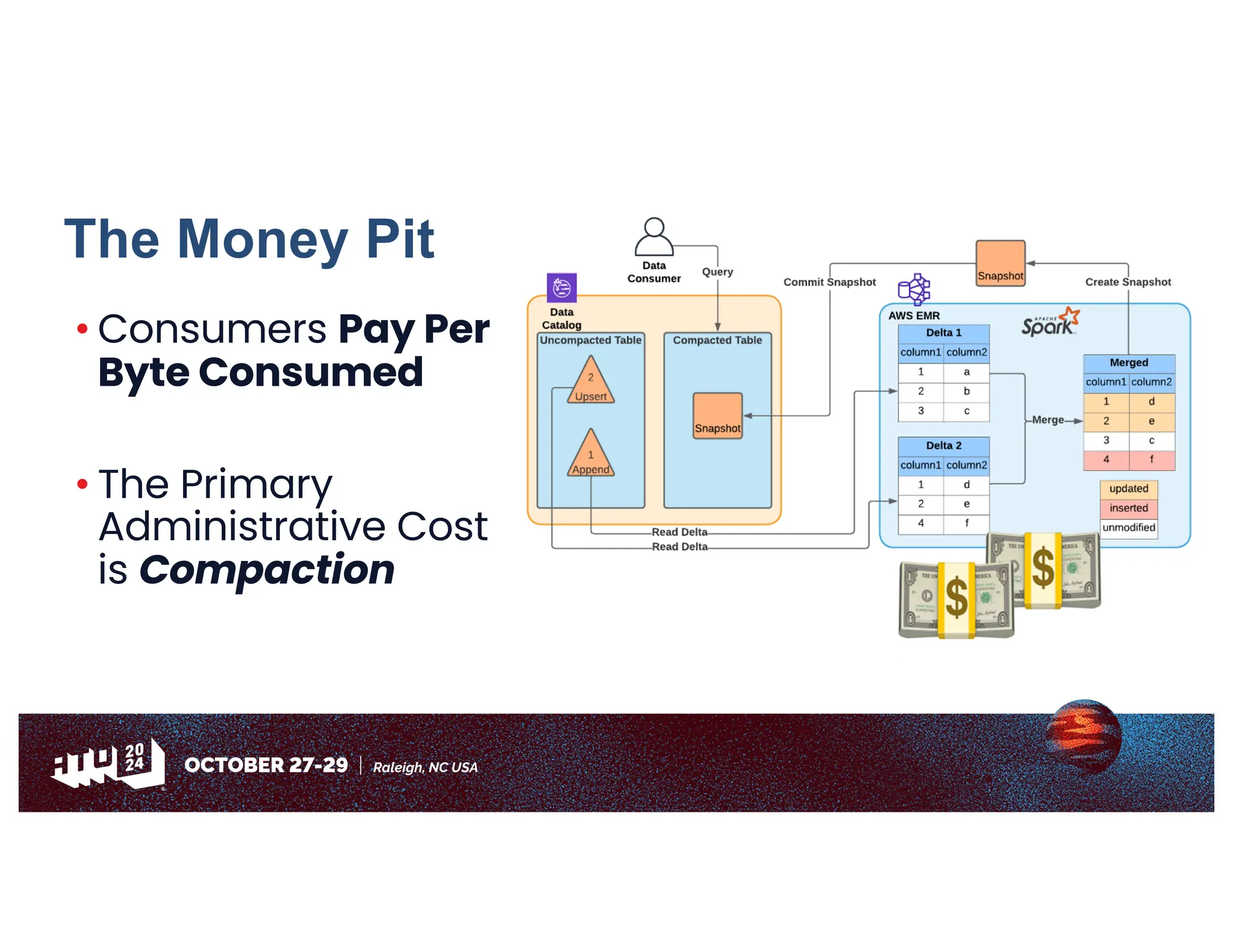

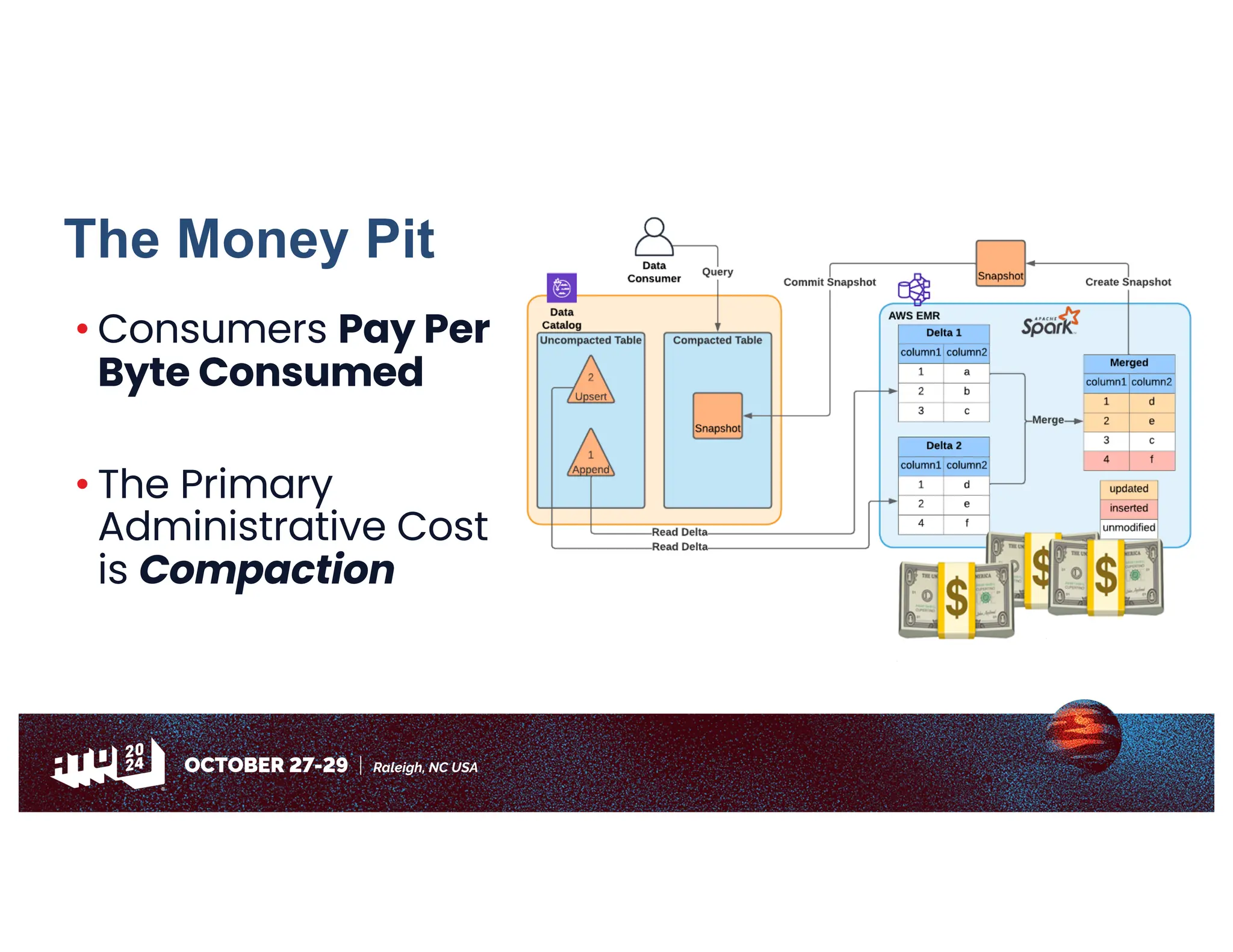

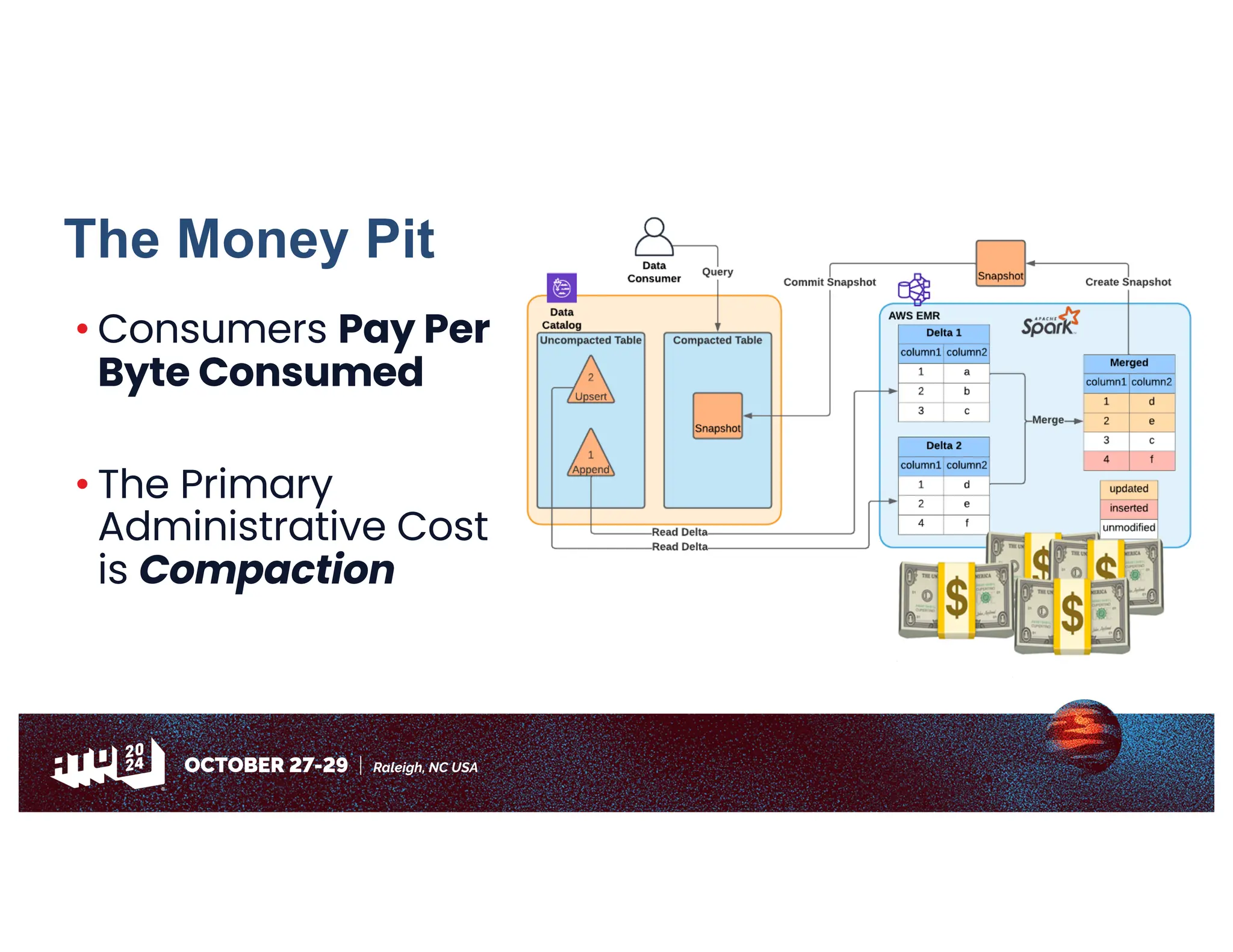

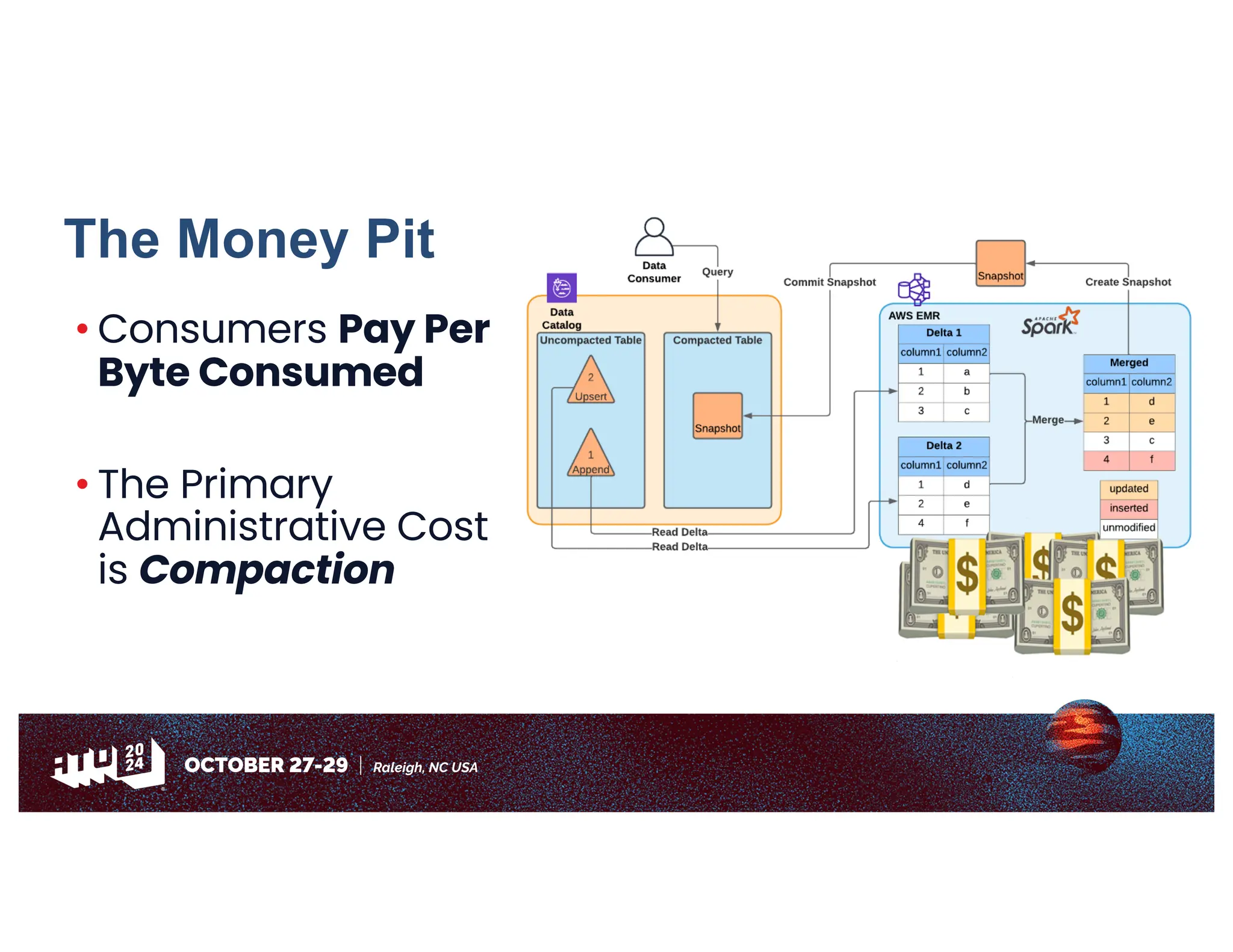

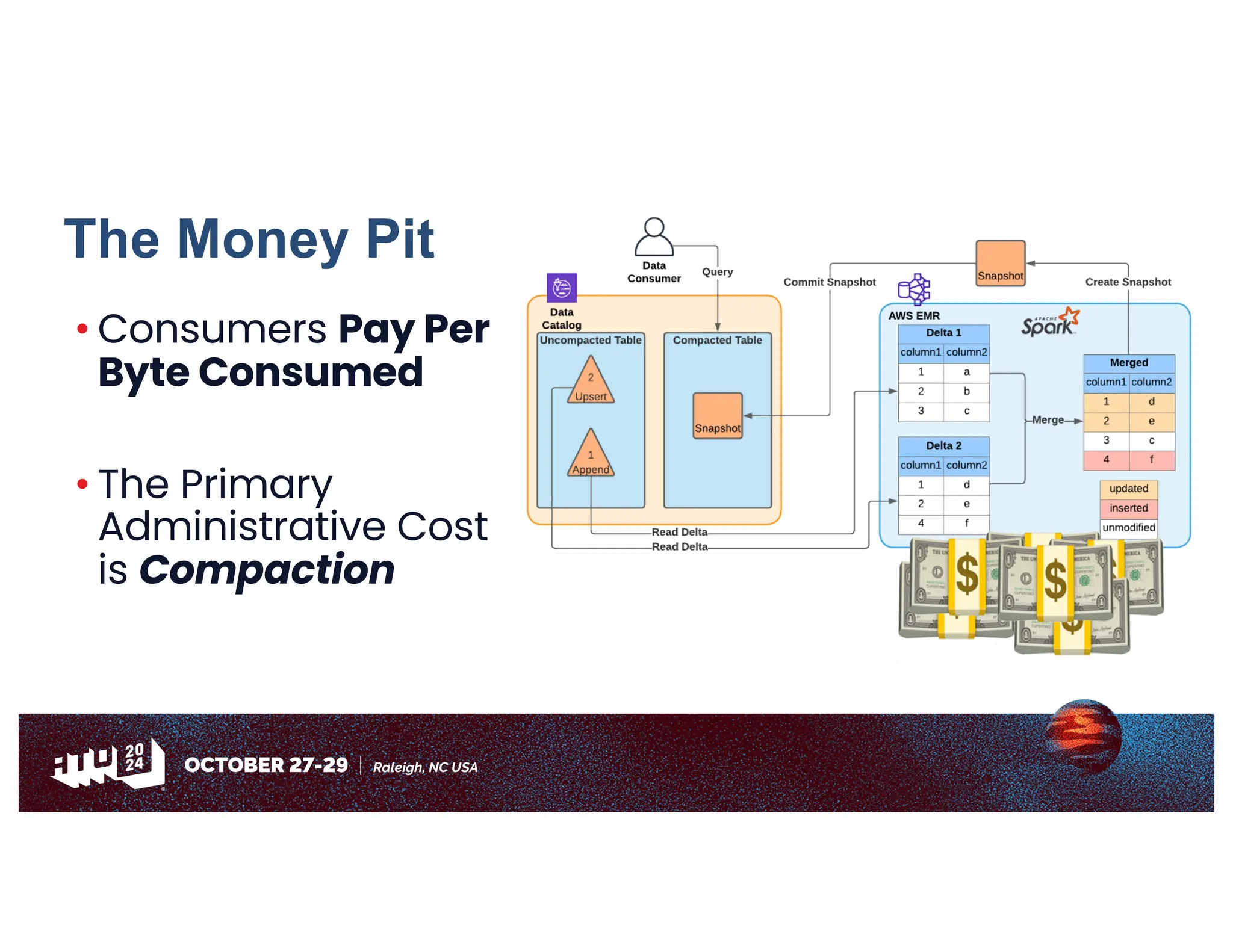

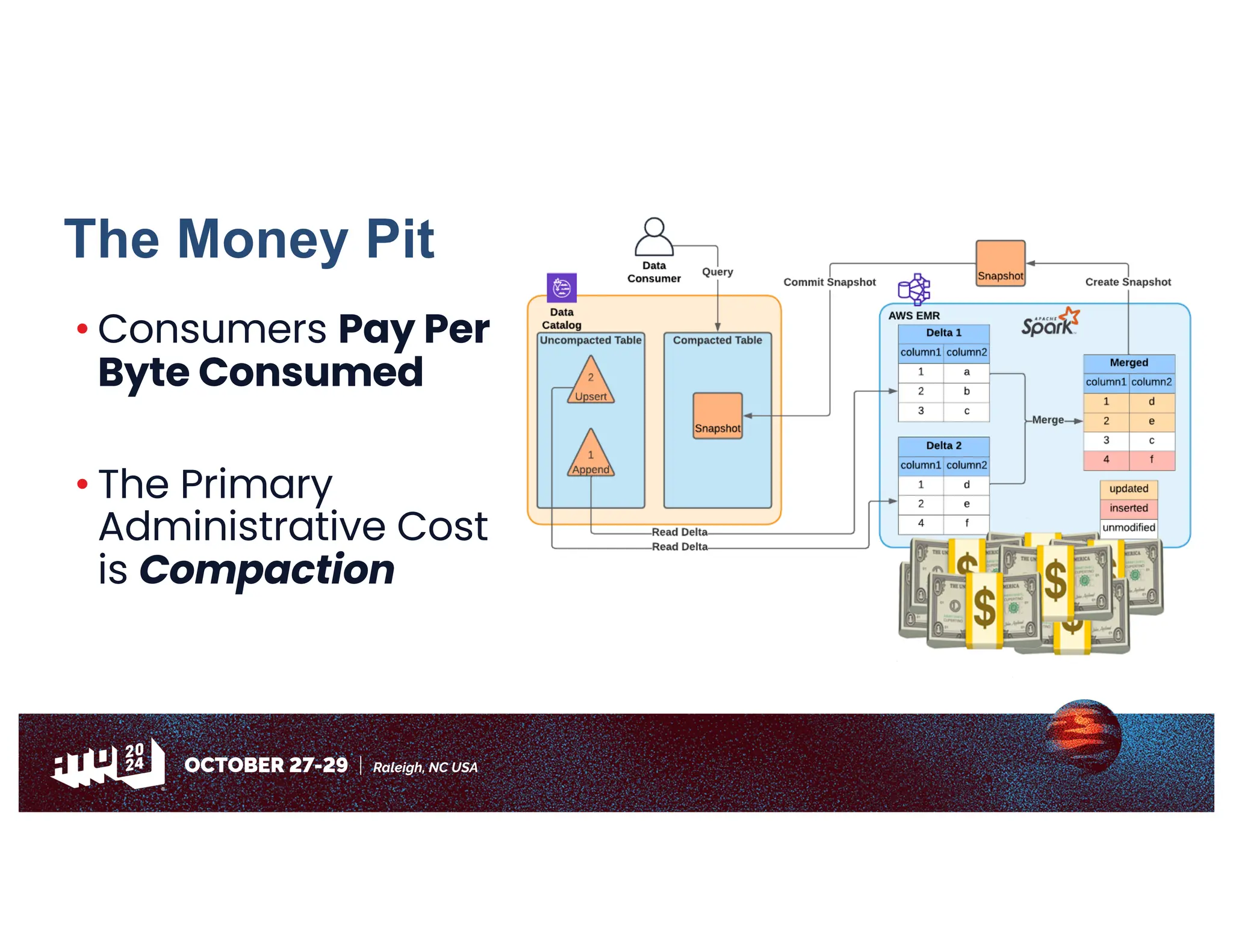

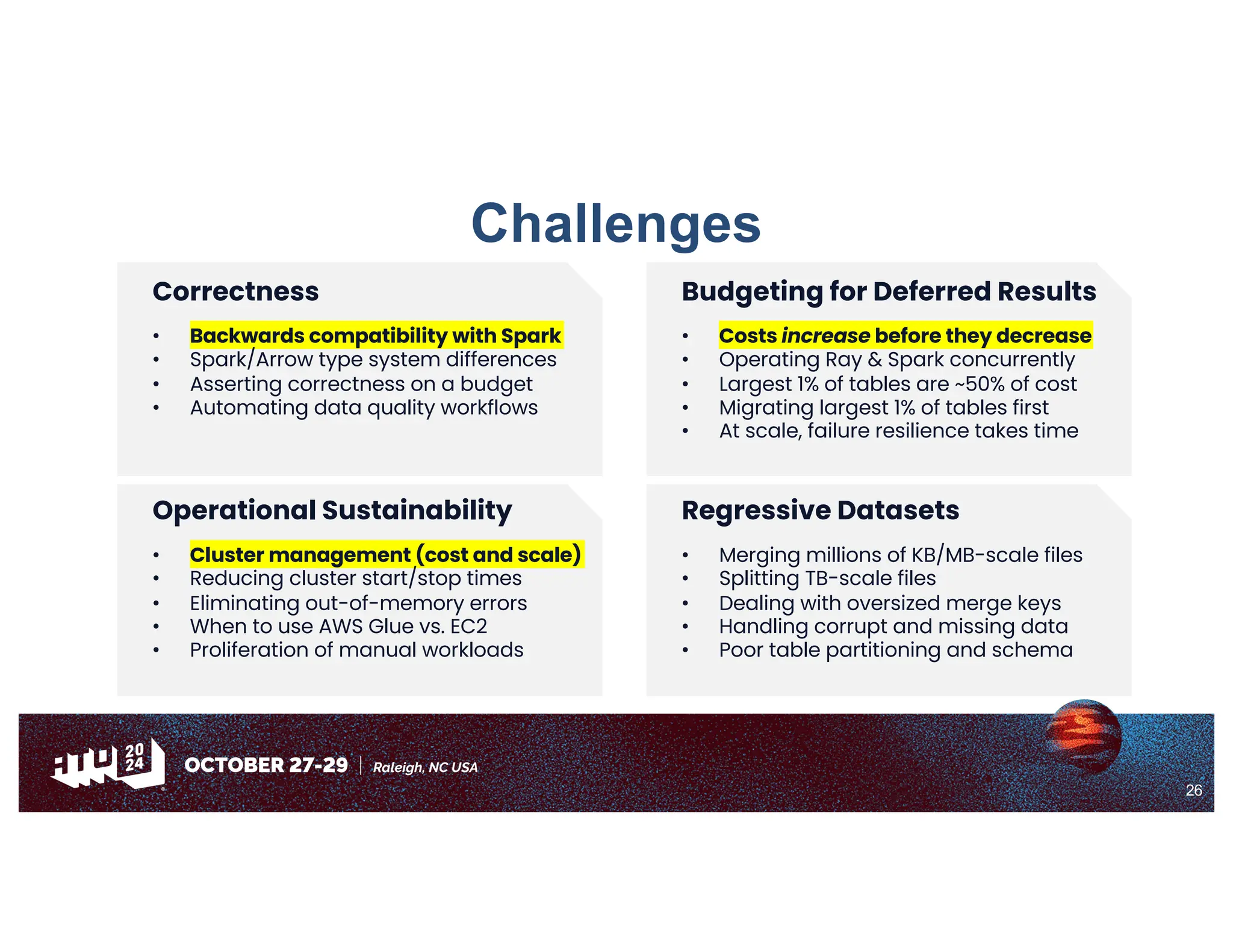

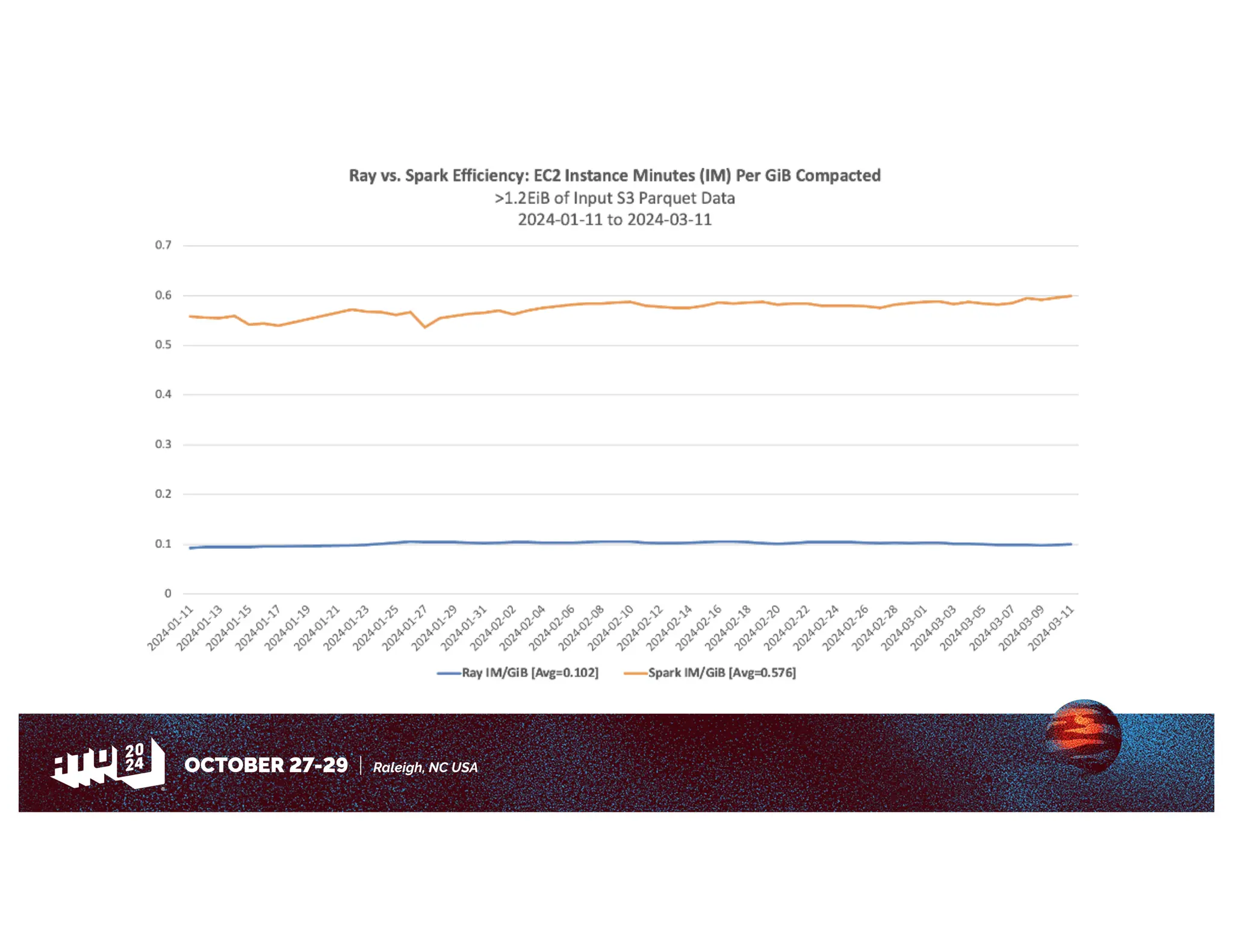

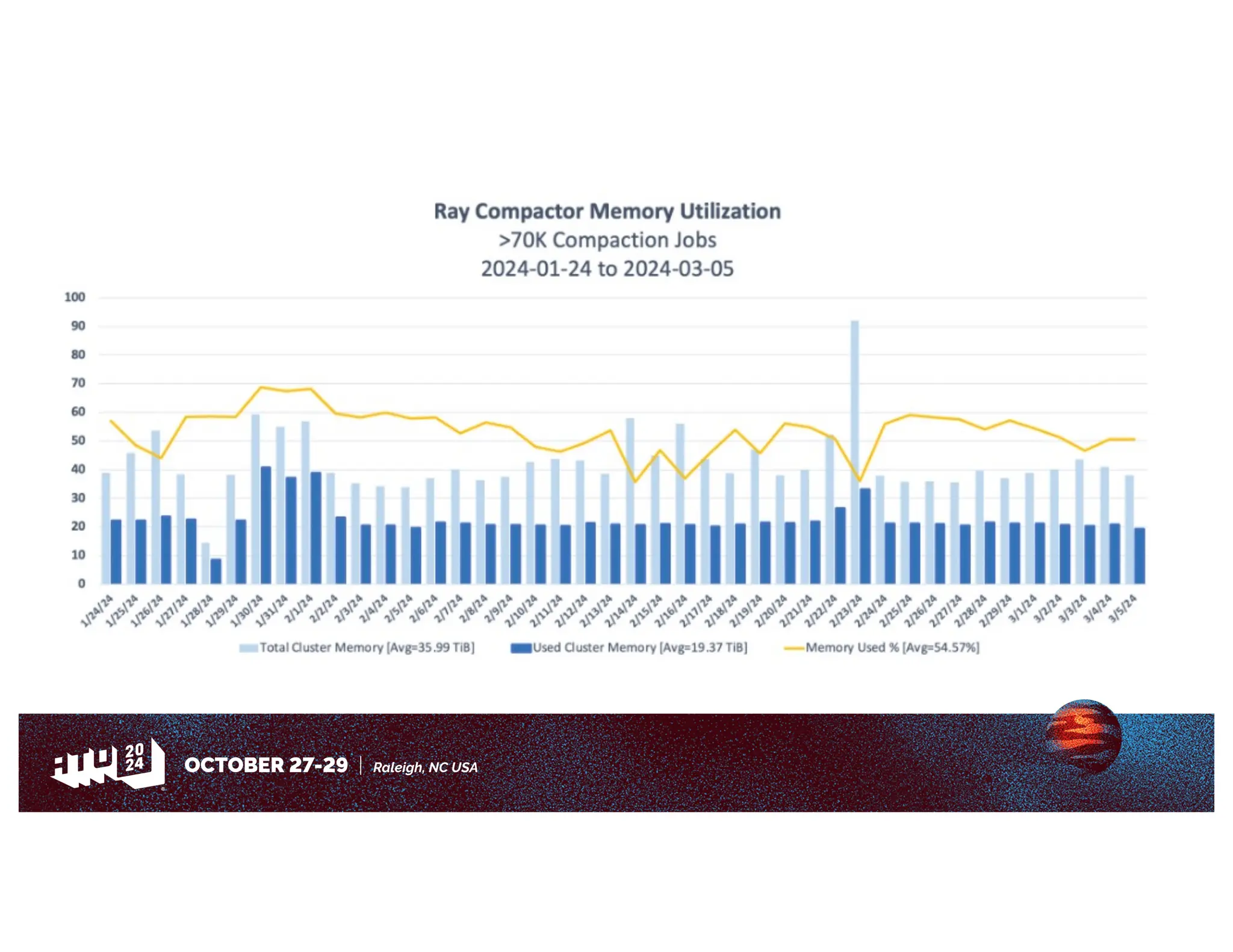

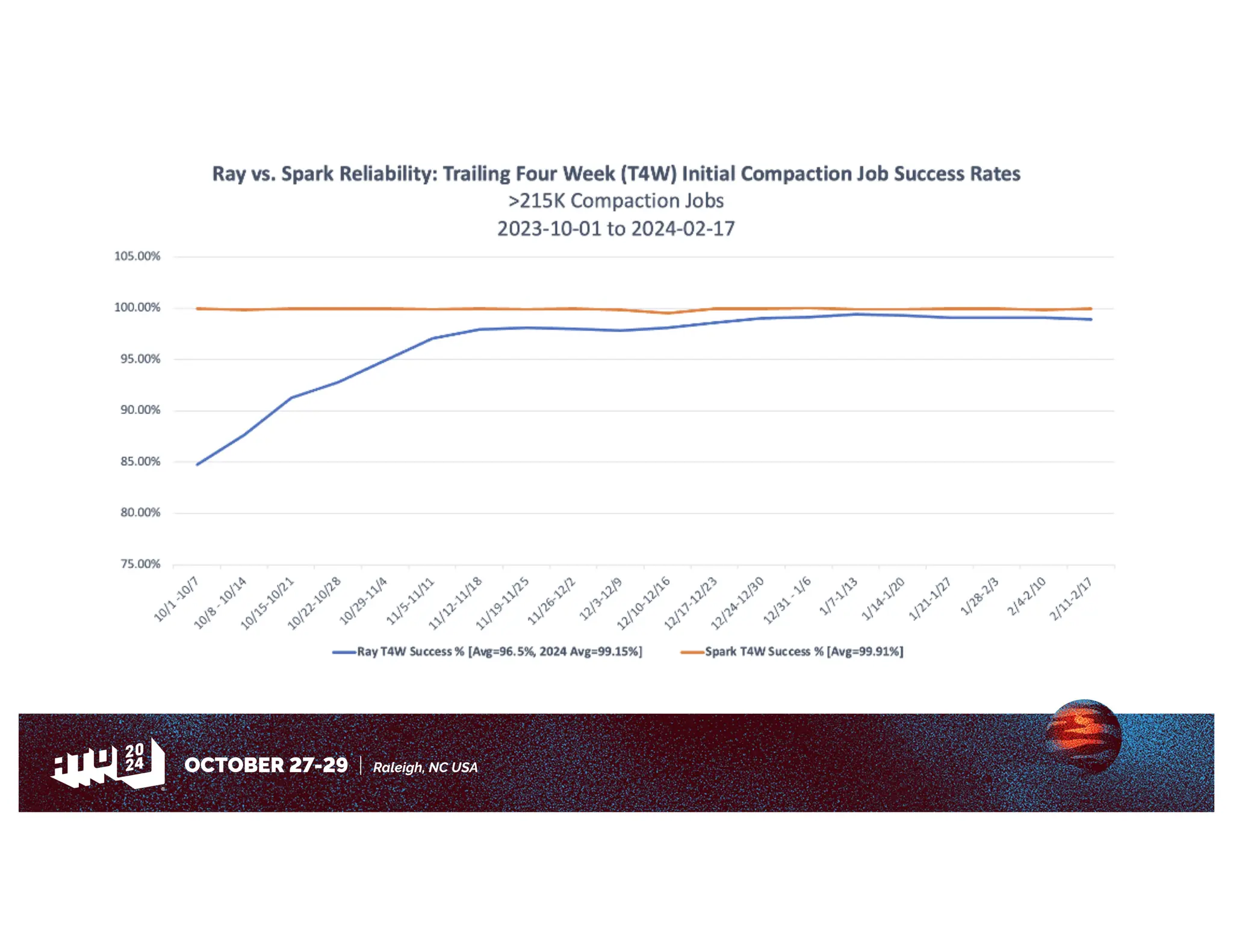

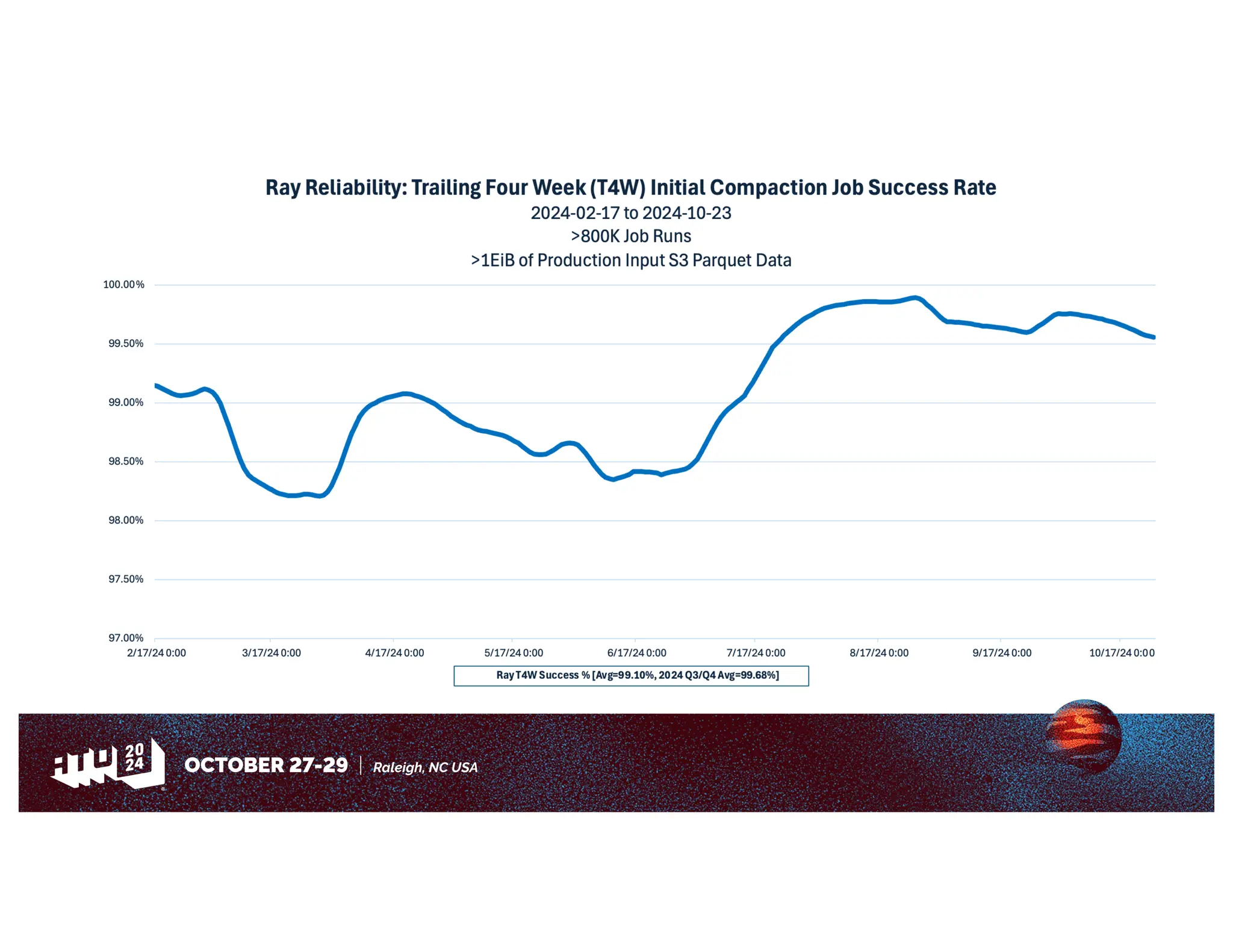

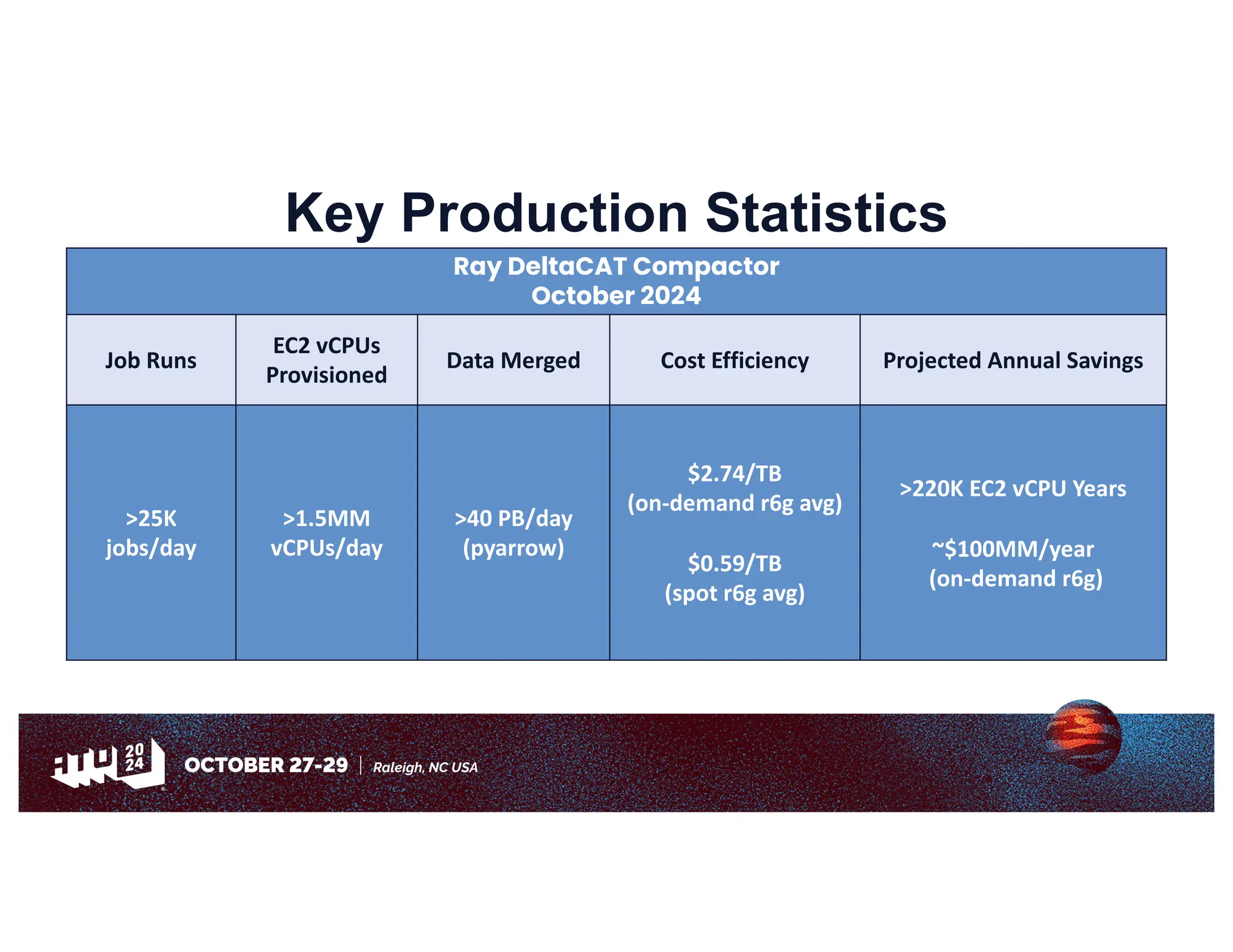

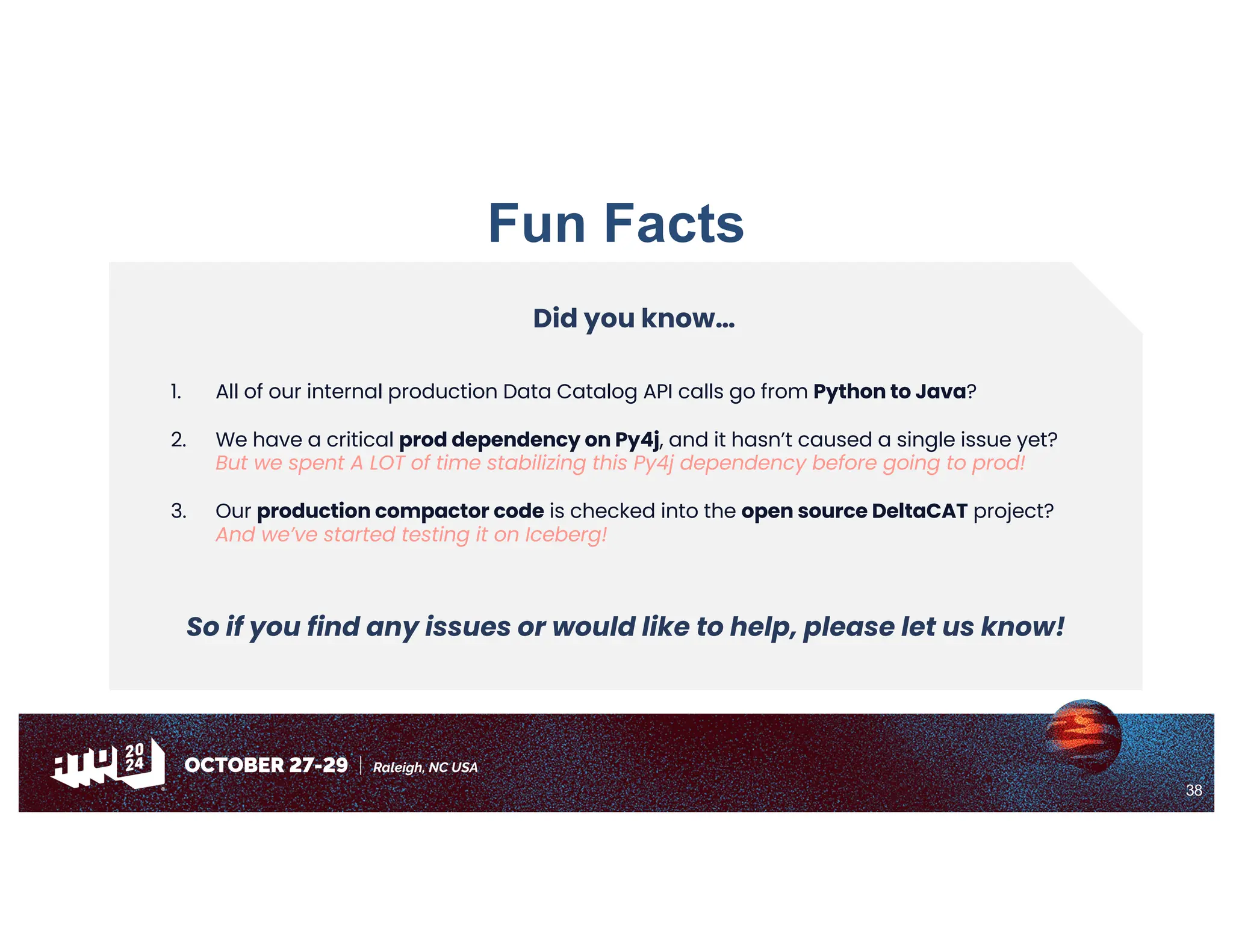

Amazon has migrated the data processing behind its business intelligence (BI) pipelines from Apache Spark to Ray to improve efficiency and scalability. The transition involves moving compaction processes and adopting new technologies while addressing challenges such as cost, compatibility, and operational sustainability. Future work includes enhancing the Apache Iceberg integration and optimizing performance across data processing workflows.