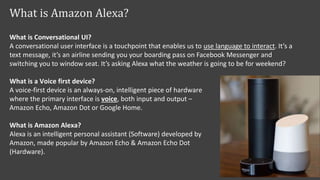

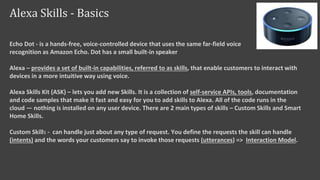

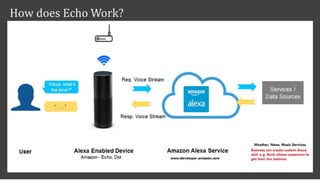

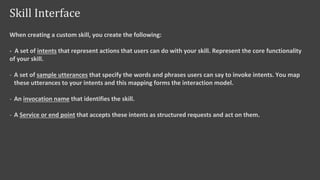

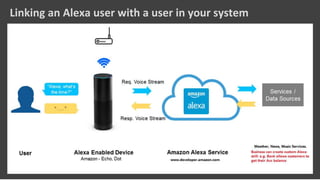

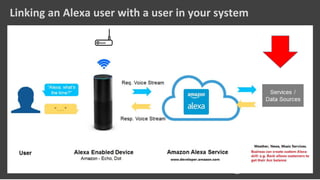

This document provides an overview of Amazon Alexa and voice-first devices. It discusses how advances in AI and speech recognition are driving adoption of these devices. Edison Research predicts that 75% of US households will own a smart speaker by the end of 2020. Gartner predicts that by 2020, 30% of web information requests will be made via audio-centric technologies. The document then describes Amazon Alexa and the Alexa Skills Kit (ASK) for developing voice skills. It also explains how account linking with OAuth 2.0 lets a skill connect to external systems on a user's behalf.

![Why?

- Advances in AI, speech recognition and natural language processing.

- Plunging cost of processing and data storage.

What’s next?

- In 2017, 24.5 million devices will be shipped, bringing the number of voice-first devices in circulation to 33 million.

- Edison Research predicts that 75% of US households will have smart speakers (Amazon Echo, etc.) by the end of 2020.

- Gartner predicts that by 2020, 30% of web information requests will be made via audio-centric technologies.

- BMW has announced that Alexa will be integrated into its cars starting in mid-2018.

“Whoever wins voice will be the dominant tech company of the next decade, like Google was for the web and Intel was for the computing age.”

– Adam Cheyer (inventor of Siri and founder of Viv, a voice startup bought by Samsung)

Why are voice-first devices getting close to mainstream?](https://image.slidesharecdn.com/amazonalexadevelopmentoverview-171124143250/85/Amazon-Alexa-Development-Overview-3-320.jpg)