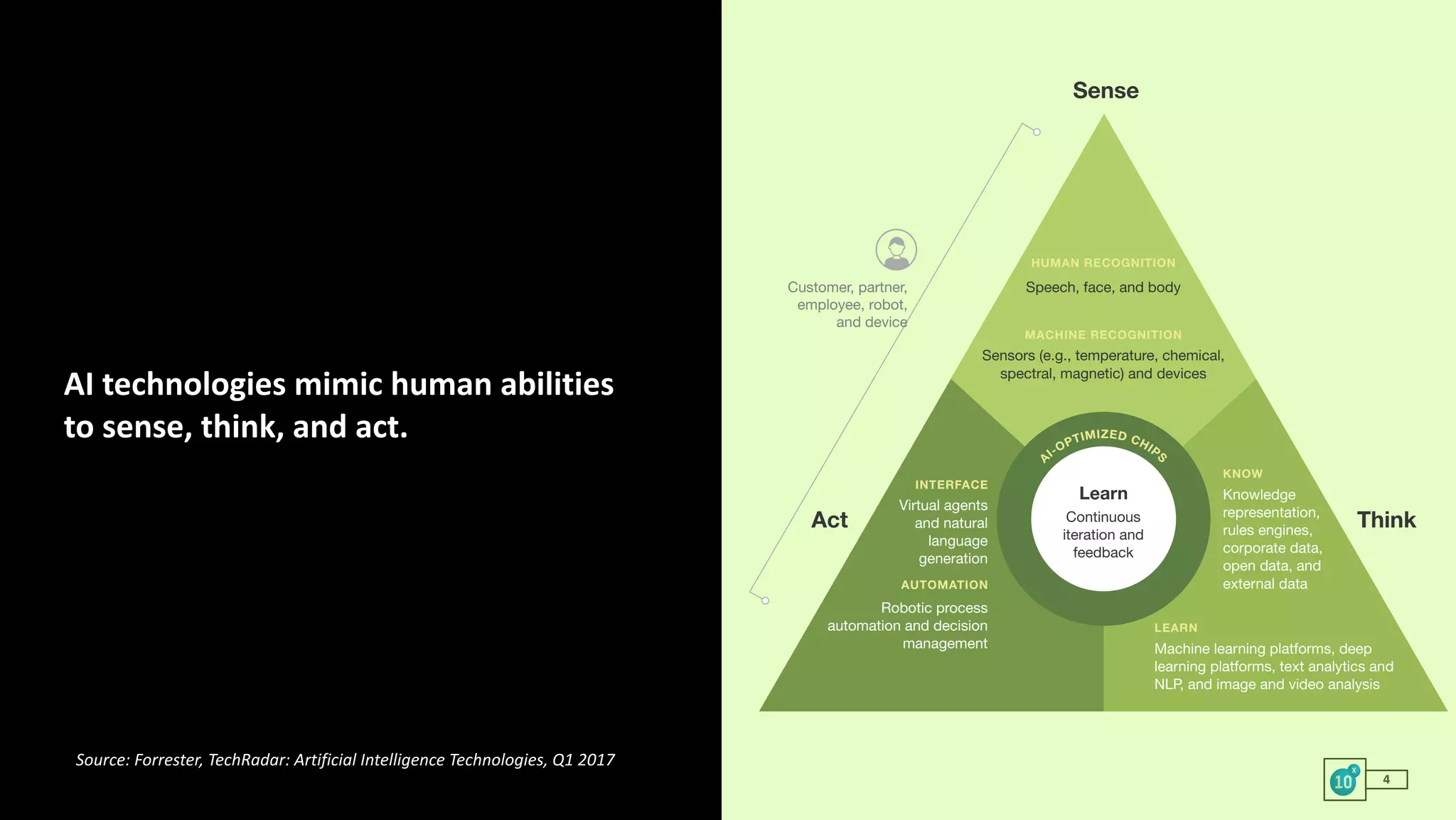

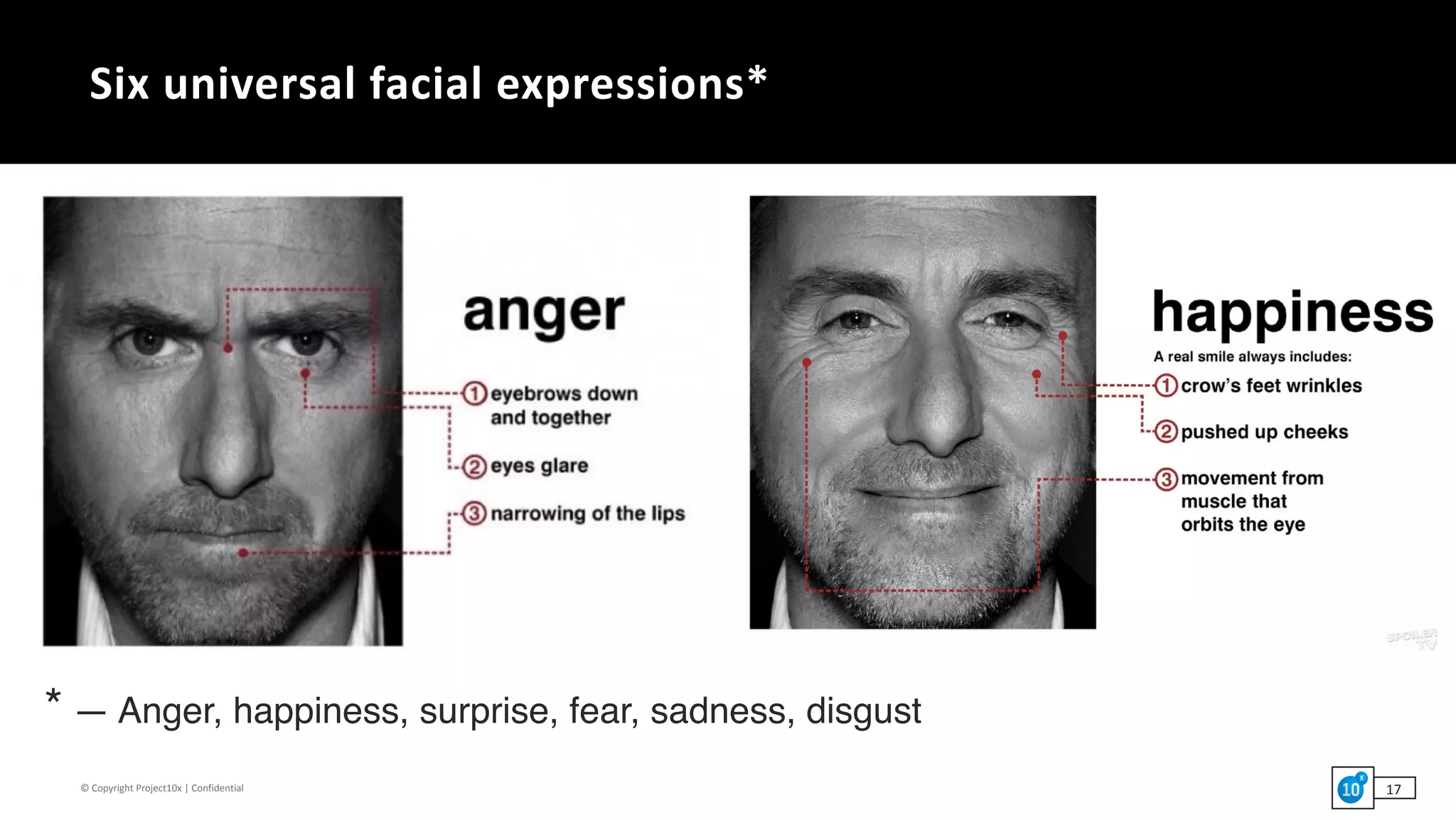

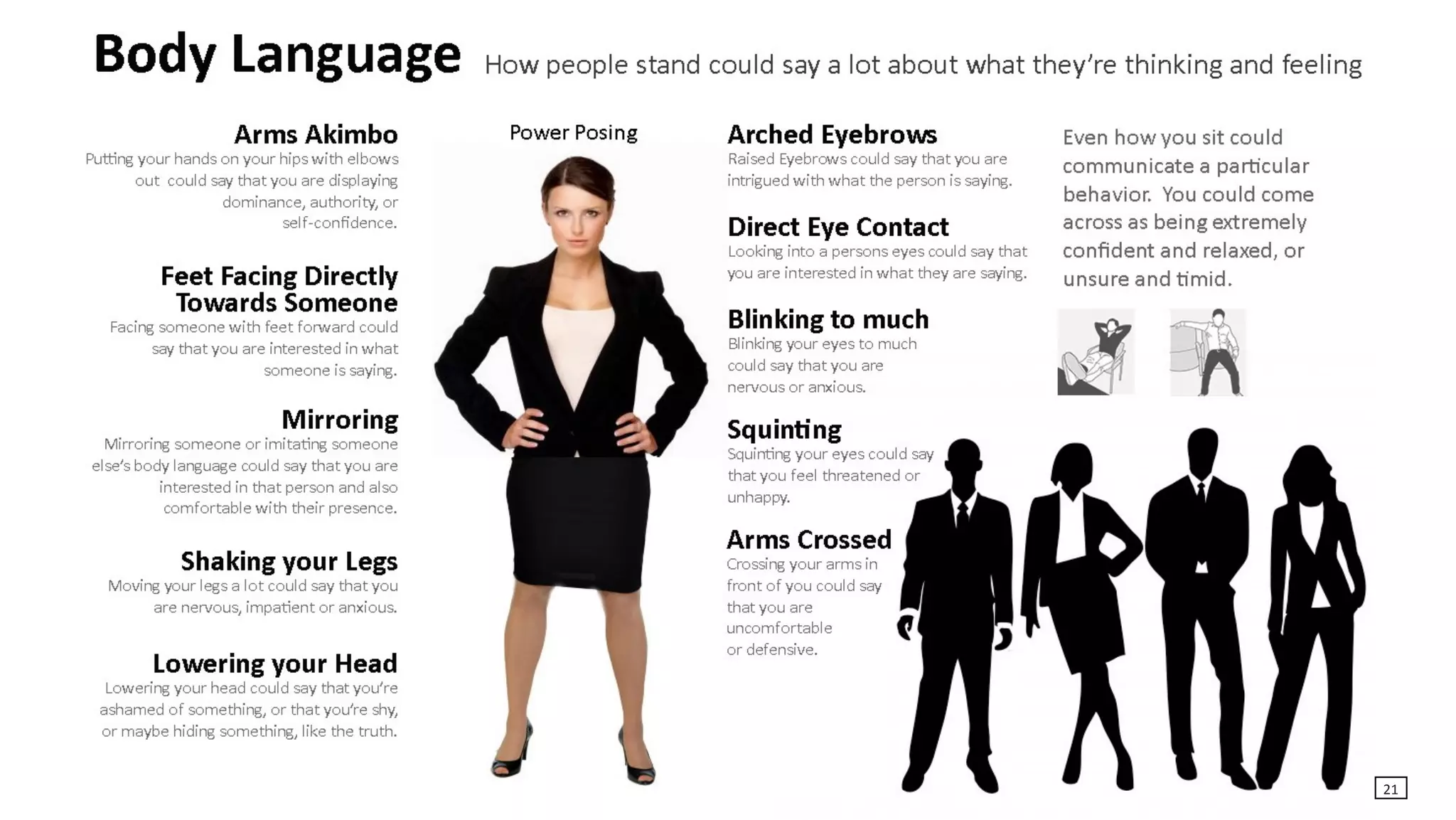

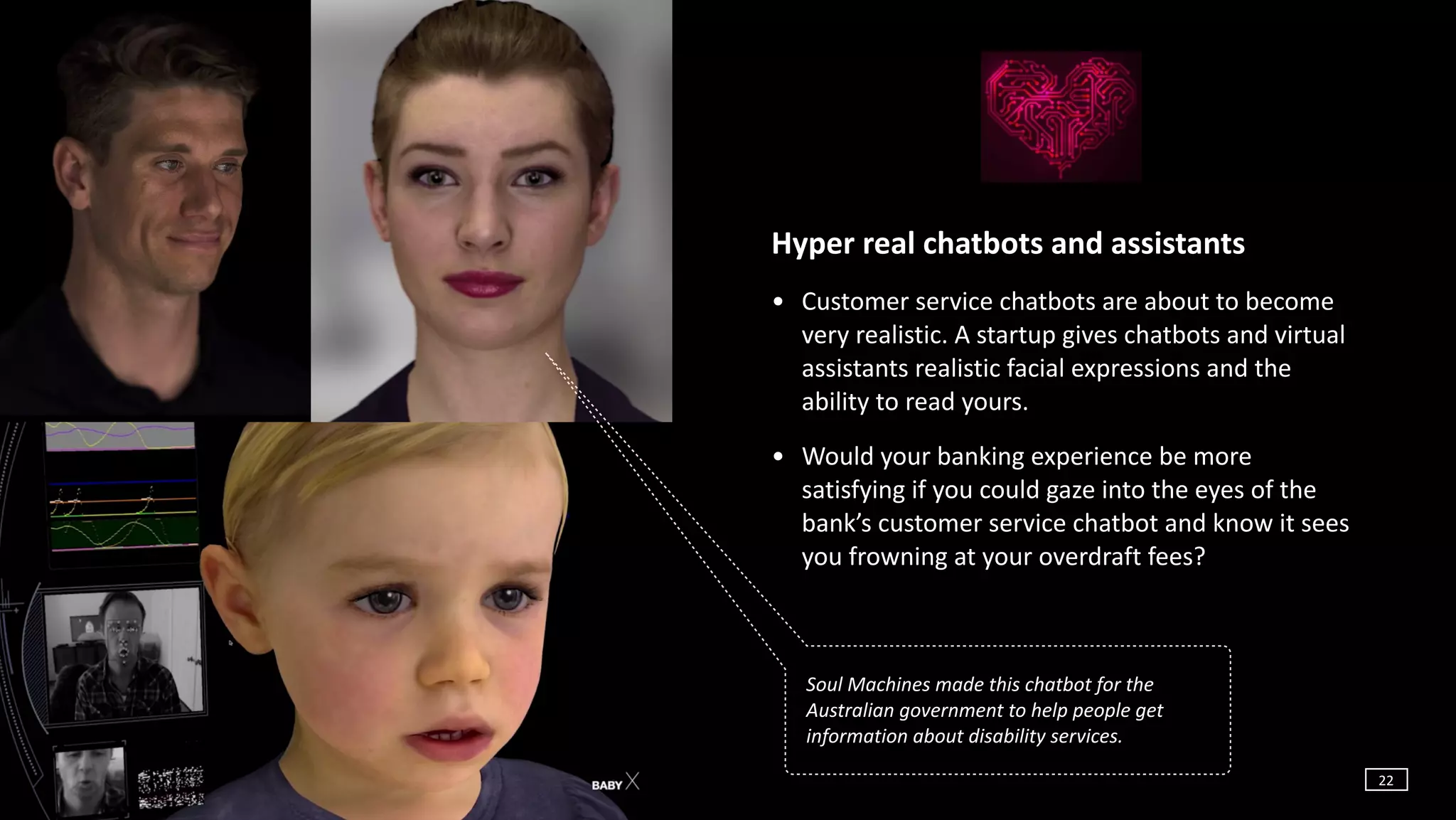

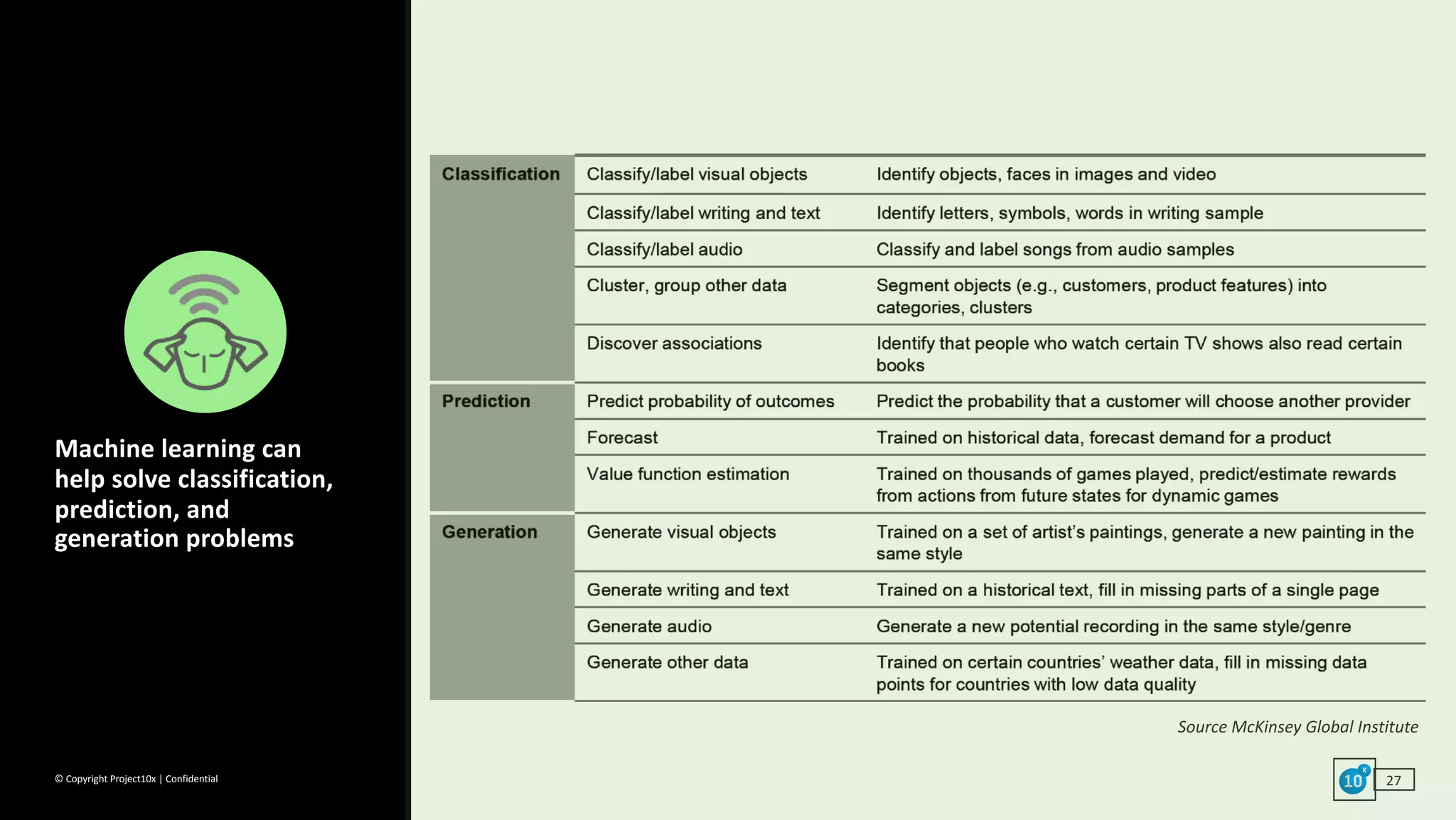

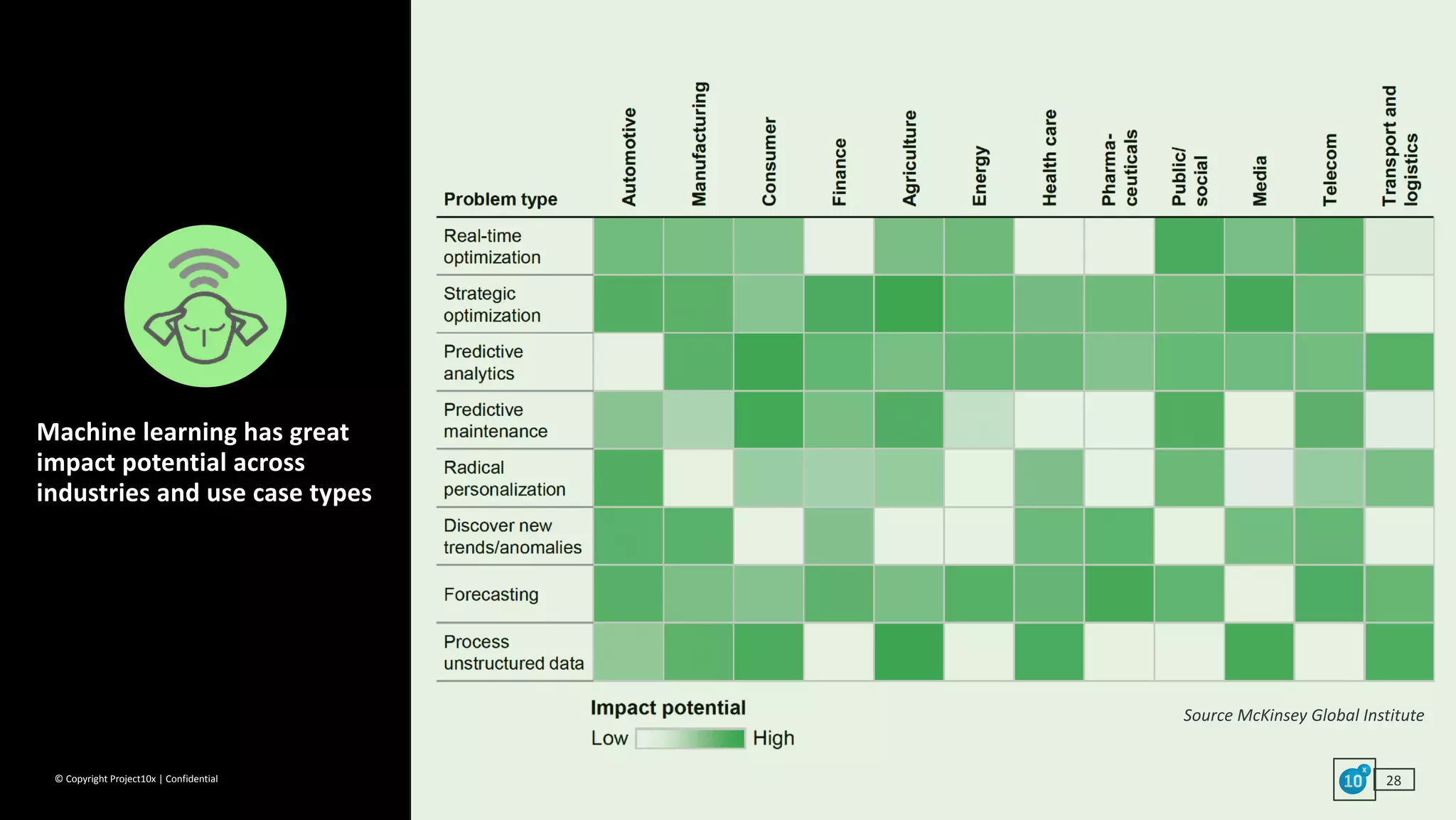

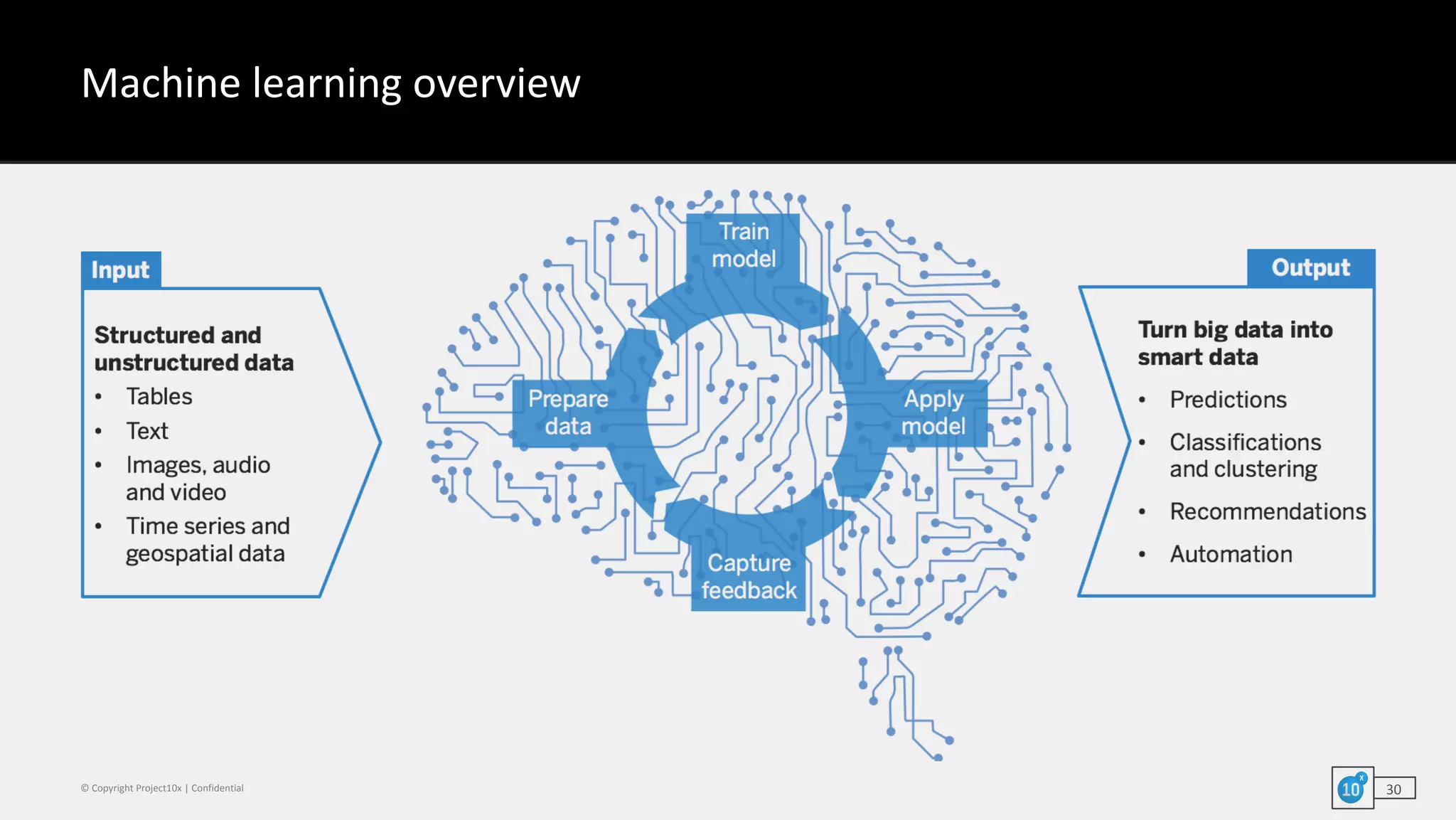

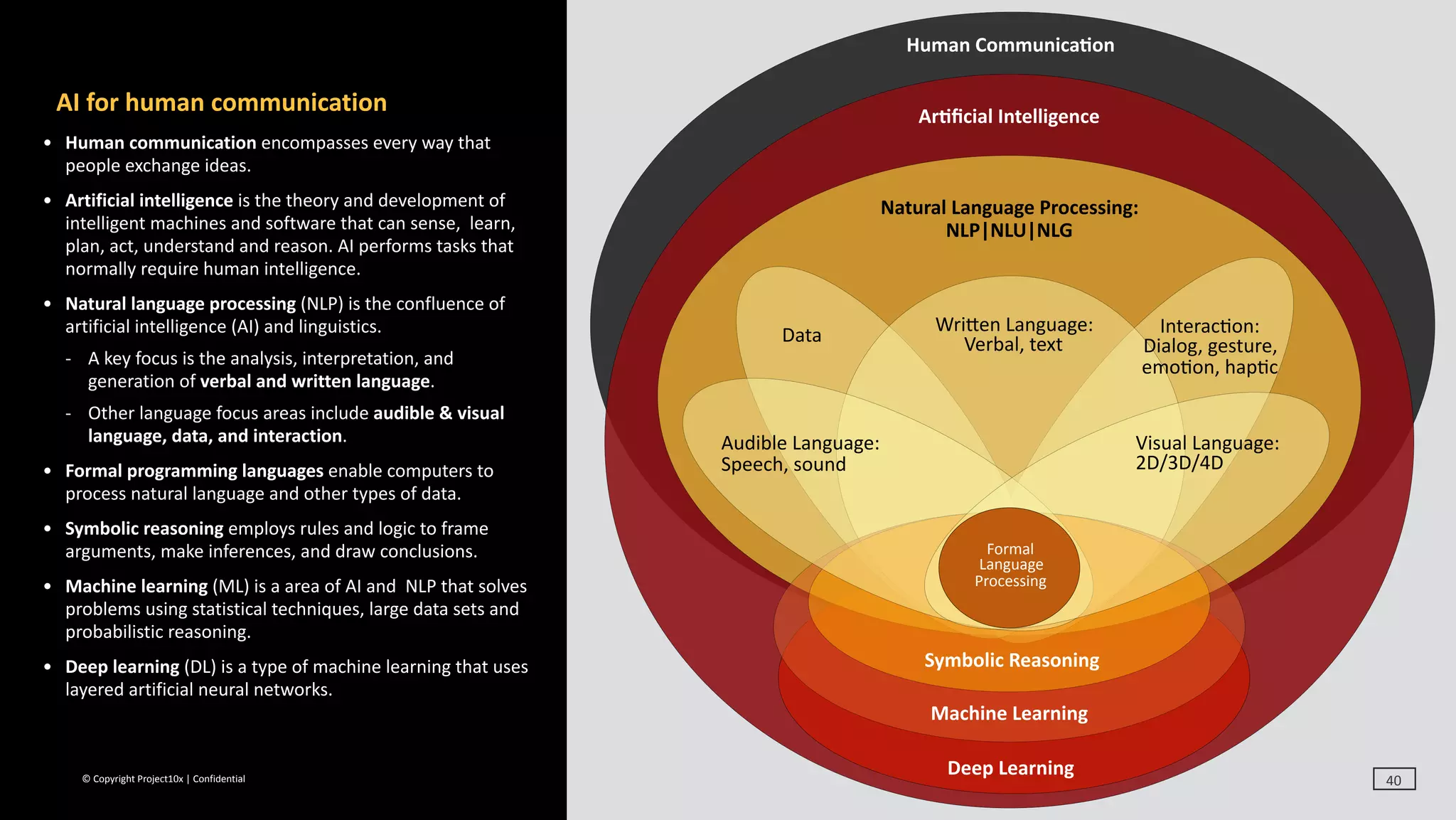

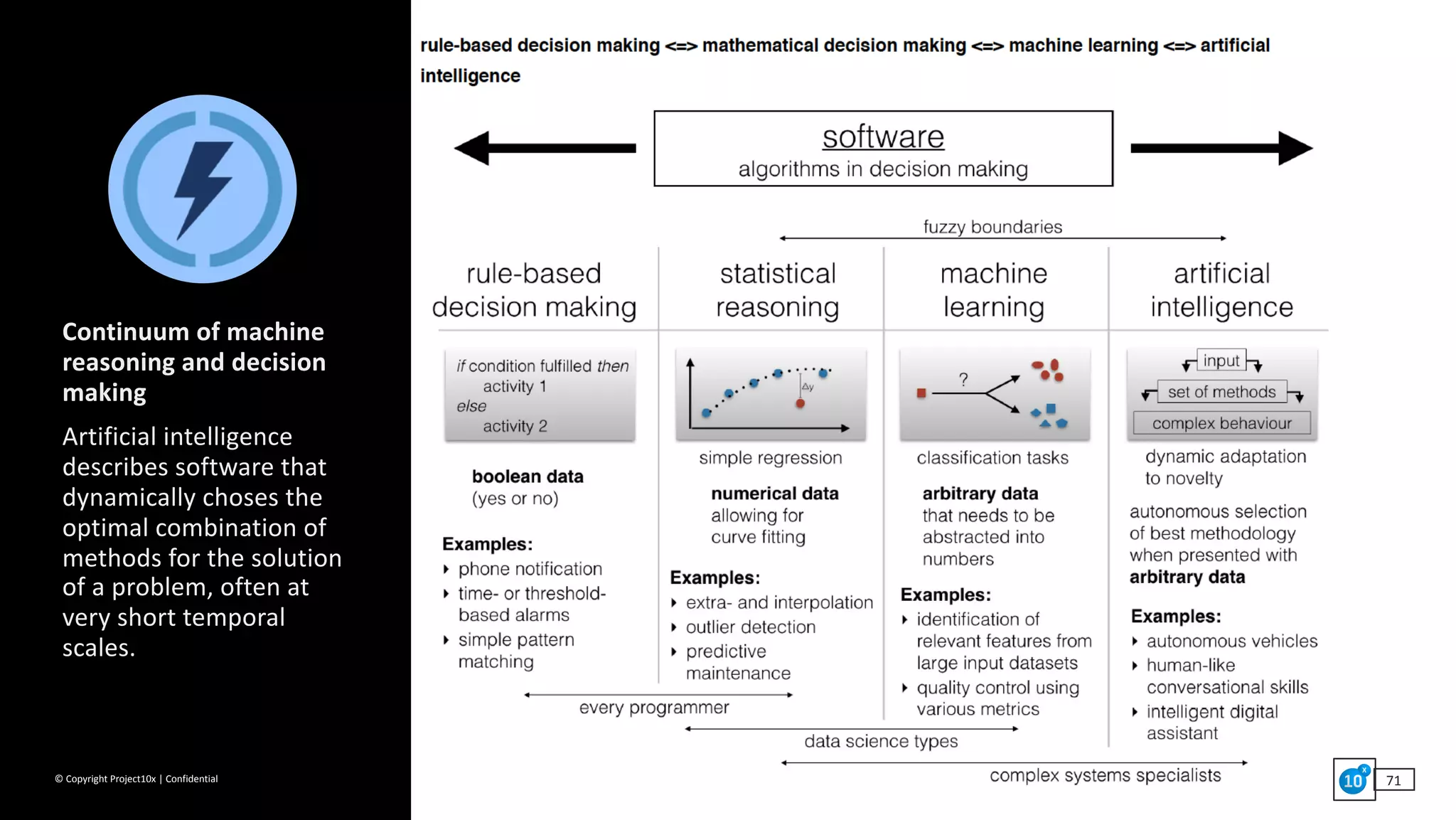

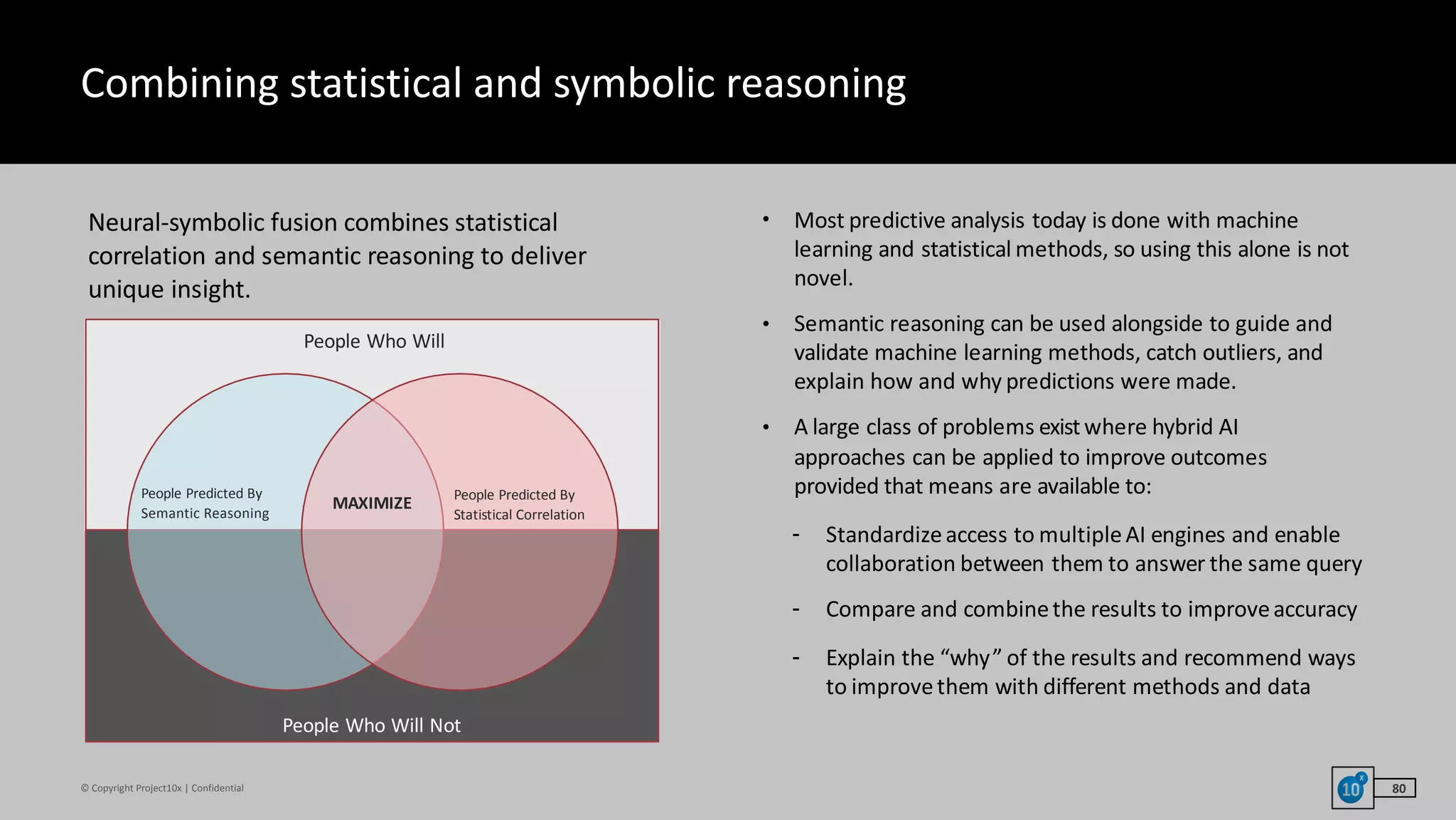

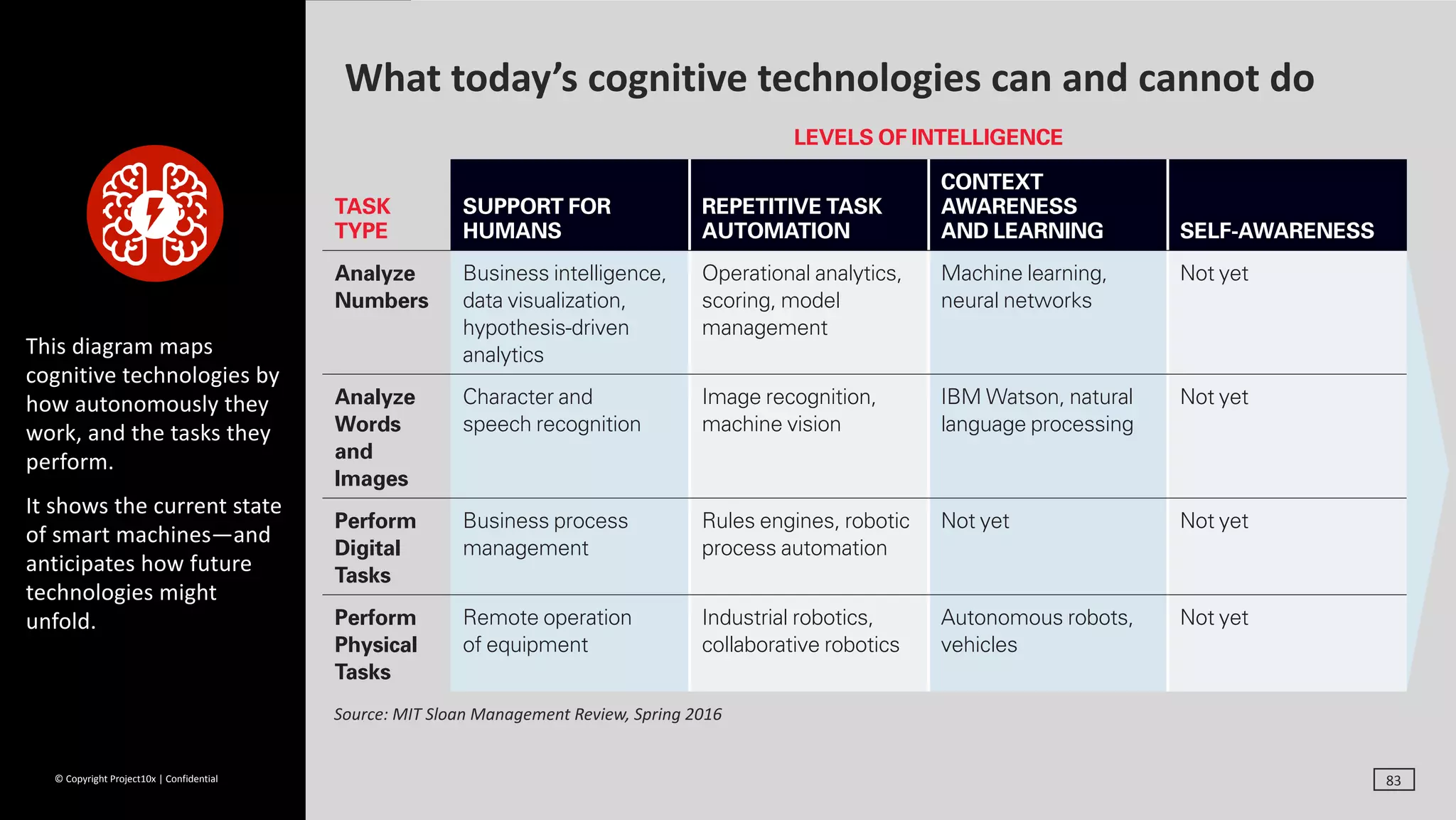

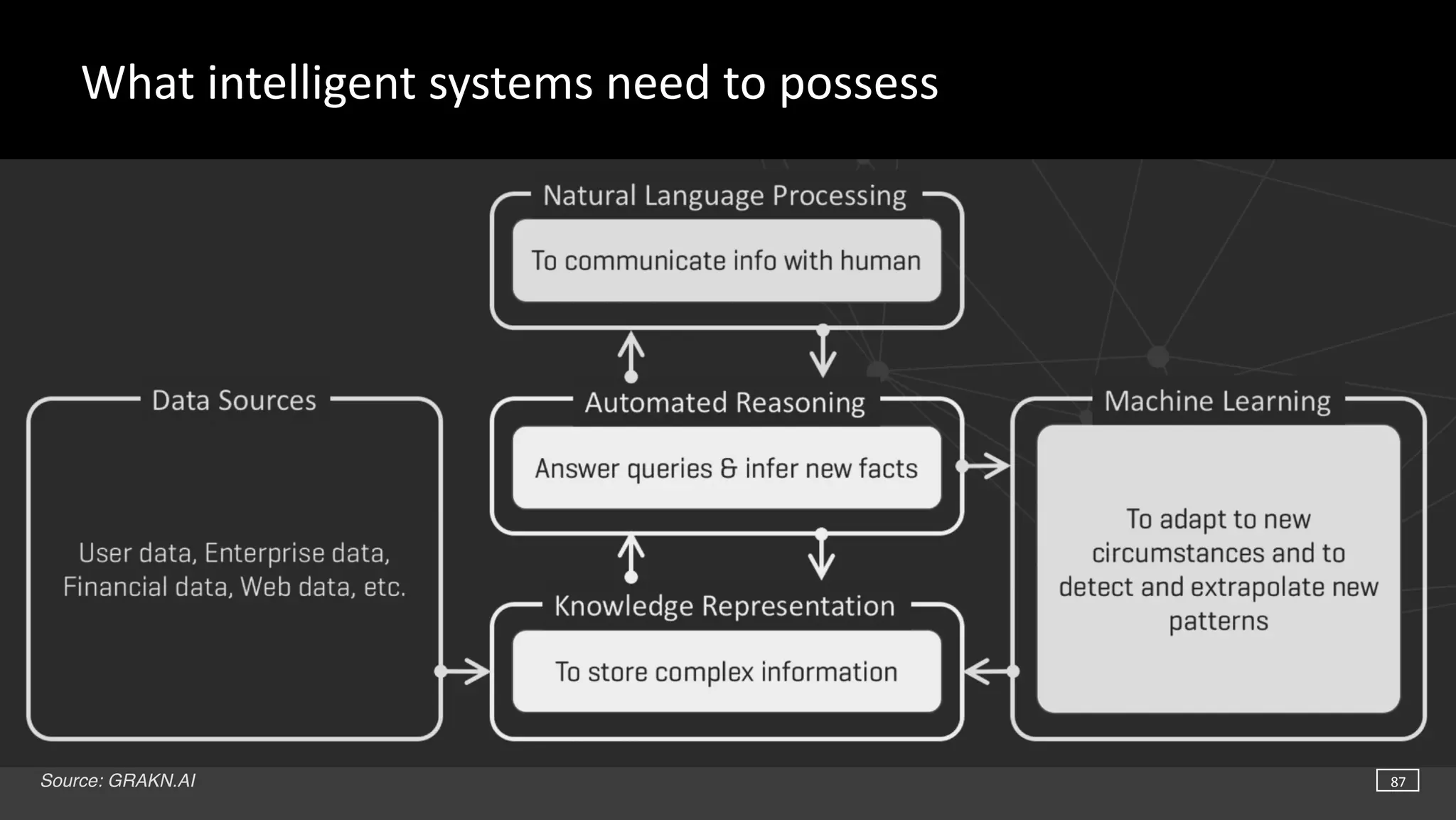

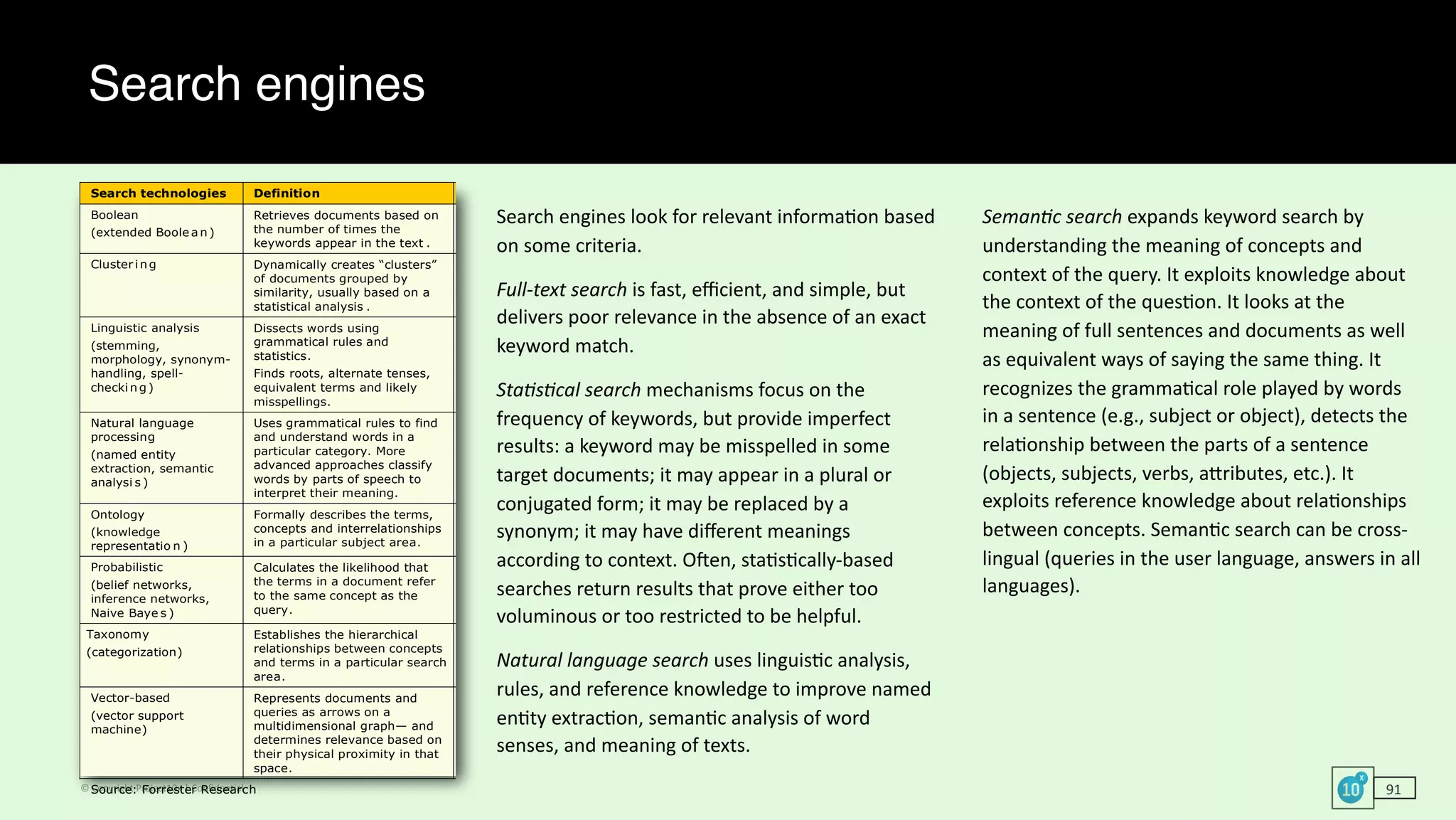

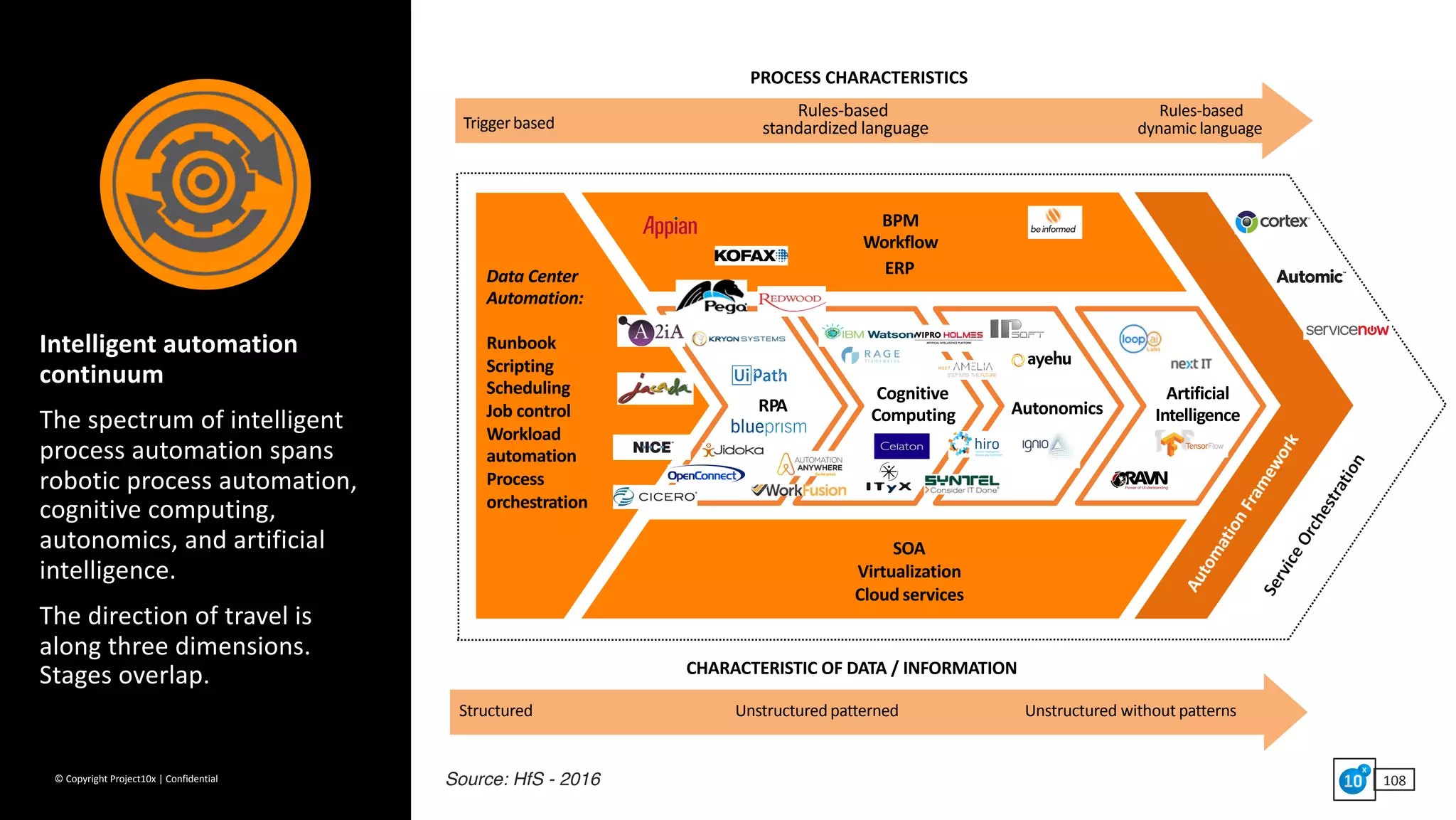

Lawrence Mills Davis, founder of Project10x, specializes in artificial intelligence (AI) technologies and their applications across industries, with expertise in machine learning, natural language processing, and cognitive computing. The document discusses AI's capabilities to sense, think, and act, highlighting innovations in automation, computer vision, and machine perception for user security. It also traces the evolution of speech recognition and the growth of affective computing, emphasizing AI's impact across diverse sectors.