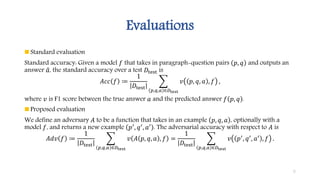

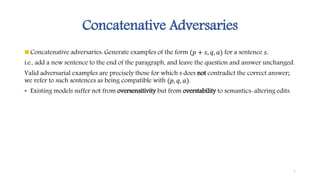

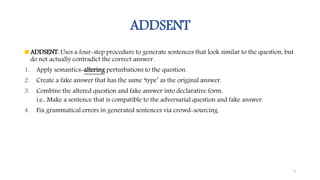

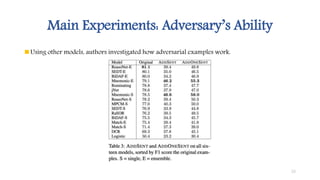

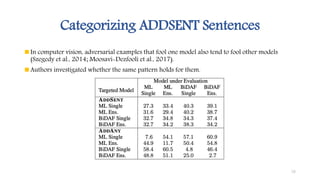

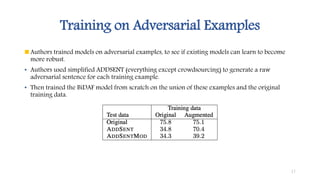

The document summarizes a research paper that proposes using adversarial examples to evaluate reading comprehension systems. The researchers generated adversarial examples for the SQuAD question answering dataset by adding sentences to paragraphs; the added sentences leave the correct answer unchanged but cause systems to predict a wrong one. They introduced four methods for generating adversarial examples and showed that these methods significantly reduce the accuracy of two state-of-the-art question answering models, demonstrating limitations in these systems' understanding of language.
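The evaluation idea can be illustrated with a minimal sketch. It is not the paper's generation procedure or either of the evaluated models: `toy_qa_model` is a hypothetical word-overlap baseline standing in for a real system, and the paragraph, question, and distractor sentence are made up for illustration. The sketch only shows the general shape of the test: append a sentence that does not change the correct answer, then compare accuracy before and after.

```python
import re


def tokenize(text):
    """Lower-case the text and return its set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def split_sentences(paragraph):
    """Naive sentence splitter: break on whitespace after ., ! or ?."""
    parts = re.split(r"(?<=[.!?])\s+", paragraph)
    return [p.strip() for p in parts if p.strip()]


def toy_qa_model(paragraph, question):
    """Hypothetical stand-in for a QA system (assumption, not a real model):
    return the sentence sharing the most words with the question."""
    q_tokens = tokenize(question)
    return max(split_sentences(paragraph),
               key=lambda s: len(tokenize(s) & q_tokens))


def add_distractor(paragraph, distractor):
    """Append a distracting sentence; the original text is left untouched."""
    return paragraph + " " + distractor


def accuracy(model, examples):
    """Fraction of (paragraph, question, answer) triples for which the
    model's output contains the gold answer string."""
    hits = sum(1 for p, q, a in examples if a in model(p, q))
    return hits / len(examples)


# Made-up example data (assumption, for illustration only).
paragraph = ("John Elway led the Broncos to victory in Super Bowl XXXIII. "
             "The game was played in Miami.")
question = "Which quarterback was 38 years old in Super Bowl XXXIII?"
answer = "John Elway"
distractor = "Quarterback Jeff Dean was 38 years old in Champ Bowl XXXIV."

clean_examples = [(paragraph, question, answer)]
adversarial_examples = [(add_distractor(paragraph, distractor), question, answer)]

clean_acc = accuracy(toy_qa_model, clean_examples)
adv_acc = accuracy(toy_qa_model, adversarial_examples)
print("clean accuracy:", clean_acc)
print("adversarial accuracy:", adv_acc)
```

In this toy setting the distractor shares more surface words with the question than the sentence containing the answer, so the overlap-based model is drawn to it even though the correct answer is still present in the paragraph.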